Deep Learning (CS7015): Lec 1.6 The Curious Case of Sequences

Summary

TLDR: This lecture explains why sequences matter in data and how common they are, in forms such as time series, speech, music, and text. It stresses that natural language processing depends on capturing the interactions between elements of a sequence, and introduces Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) cells as the central models for doing so. It also covers the main difficulty in training RNNs, the vanishing and exploding gradient problem, and how advances in optimization and regularization made these models practical. Finally, it notes the introduction of Attention Mechanisms around 2014, which transformed sequence prediction problems such as translation and led to a surge in the adoption of deep neural networks in NLP.

Takeaways

- 📈 Sequences are fundamental in data processing, including time series, speech, music, text, and video.

- 🗣️ Speech data is characterized by the interaction of units within a sequence, where individual words gain meaning through their arrangement in sentences.

- 🎵 Similar to speech, music and other forms of sequence data involve elements that interact with each other to convey a collective message or melody.

- 🤖 The need for models to capture these interactions led to the development of Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) cells.

- 📚 RNNs were first proposed in 1986 by Jordan, with a variant introduced by Elman in 1990, so they have been part of the field for decades.

- 💡 The popularity of RNNs and LSTMs has surged recently due to advances in computation, data availability, and improvements in training techniques such as optimization algorithms and regularization.

- 🚀 The 'exploding and vanishing gradient problem' has historically made it difficult to train RNNs on long sequences, but advancements have mitigated this issue.

- 🧠 LSTMs, proposed in 1997, are now a standard for many NLP tasks due to their ability to overcome the limitations of traditional RNNs.

- 🔄 Gated Recurrent Units (GRUs) and other variants of LSTMs have also emerged, contributing to the versatility of sequence modeling in deep learning.

- 🌐 The Attention Mechanism, introduced around 2014, has been pivotal for sequence prediction problems like translation, enhancing the capabilities of deep neural networks in handling complex tasks.

- 🔍 The concept of attention has roots in Reinforcement Learning from the early 1990s, highlighting the evolution of ideas in neural network research.

Q & A

What is a sequence in the context of data?

- A sequence refers to an ordered series of elements, such as time series data, speech, music, text, or video frames, where each element interacts with others to convey meaning or a pattern.

Why is capturing interaction between elements in a sequence important for natural language processing?

- Capturing interactions is crucial because the meaning of words in natural language often depends on their context within a sentence or text, and this contextual understanding is essential for accurate processing and interpretation.

What are Recurrent Neural Networks (RNNs)?

- Recurrent Neural Networks are a class of artificial neural networks where connections between nodes form a directed graph along a temporal sequence. They are designed to capture the temporal dynamics in sequence data.
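The recurrence described in the answer above can be sketched in a few lines. This is a minimal illustration, assuming an Elman-style update `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`; the function names (`rnn_step`, `rnn_forward`) and the toy weights are hypothetical and not taken from the lecture.

```python
import math

def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One recurrence step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    a = matvec(W_xh, x_t)
    r = matvec(W_hh, h_prev)
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b_h)]

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Apply the same step (same weights) at every position of the sequence."""
    hs, h = [], h0
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        hs.append(h)
    return hs

# Toy, untrained weights chosen only for illustration.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.3]]
b_h = [0.0, 0.0]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

hs = rnn_forward(xs, [0.0, 0.0], W_xh, W_hh, b_h)
# Feeding the same elements in reverse order yields a different final state.
hs_rev = rnn_forward(xs[::-1], [0.0, 0.0], W_xh, W_hh, b_h)
```

Because the hidden state is carried from one step to the next, the final state depends on the order of the inputs, which is the kind of interaction between sequence elements the lecture refers to.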

When were Recurrent Neural Networks first proposed?

- The concept of Recurrent Neural Networks was first proposed by Jordan in 1986.

What is the significance of Long Short-Term Memory (LSTM) cells in sequence modeling?

- LSTM cells are a special kind of RNN that can capture long-term dependencies in data. They help overcome the vanishing gradient problem, allowing for effective training on longer sequences.
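As a rough sketch of how an LSTM cell can hold information over many steps, the example below implements one step of a scalar cell (hidden size 1, chosen for readability) using the commonly cited gate equations. The parameter layout, the gate names, and the weight values are assumptions made for this illustration, not details from the lecture.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps a gate name to (w_x, w_h, b): input weight, recurrent weight, bias.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x_t + w_h * h_prev + b

    f = sigmoid(pre("forget"))   # fraction of the old cell state to keep
    i = sigmoid(pre("input"))    # fraction of the candidate to write
    o = sigmoid(pre("output"))   # fraction of the cell state to expose
    g = math.tanh(pre("cand"))   # candidate value
    c_t = f * c_prev + i * g     # additive update of the cell state
    h_t = o * math.tanh(c_t)
    return h_t, c_t

# Hypothetical parameters: forget gate almost fully open, input gate almost closed.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "cand": (1.0, 0.5, 0.0),
}

h, c = 0.0, 1.0                  # start with a value stored in the cell
for _ in range(50):
    h, c = lstm_step(0.0, h, c, params)
```

With the forget gate close to 1, the stored cell value survives 50 steps almost unchanged. The same multiplicative factor governs how error signals flow backward through the cell state, which is one common explanation for why LSTMs cope better with long sequences.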

When were Long Short-Term Memory cells proposed?

- Long Short-Term Memory cells were proposed in 1997 to address the challenges of training RNNs on longer sequences.

What is the exploding and vanishing gradient problem in the context of RNNs?

- The exploding and vanishing gradient problem refers to the difficulty in training RNNs on long sequences due to gradients becoming too large to manage (exploding) or too small to be effective (vanishing) during backpropagation.
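A small numerical sketch can make the problem above concrete. It assumes a scalar recurrence, where backpropagating through many steps multiplies one factor per step; the function names and the chosen weights (0.5 and 1.5) are illustrative only.

```python
import math

def grad_through_time(w, h0, steps):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)   # chain rule: d tanh(w*h)/dh = w * (1 - tanh(w*h)^2)
    return grad

def linear_grad_through_time(w, steps):
    """Same quantity without the nonlinearity: h_t = w * h_{t-1} gives w**steps."""
    return w ** steps

small = grad_through_time(0.5, 0.1, 50)     # every factor is at most 0.5
large = linear_grad_through_time(1.5, 50)   # every factor is 1.5
```

After 50 steps the first gradient is vanishingly small and the second is enormous, even though the per-step factors differ only modestly from 1. Longer sequences make both effects worse.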

What is an Attention Mechanism in deep learning?

- An Attention Mechanism allows a model to focus on certain parts of the input sequence when making predictions. It helps the model to better understand and process sequence data by emphasizing relevant parts of the input.
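The idea of focusing on parts of the input can be sketched as a weighted average over encoder states. The example below assumes dot-product scoring followed by a softmax, chosen for brevity; the attention models from around 2014 used other scoring functions, and the names `attend`, `query`, and `encoder_states` are hypothetical.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    m = max(scores)                              # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, encoder_states):
    """Return (weights, context) for one decoder query over all encoder states."""
    scores = [sum(q * k for q, k in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[d] for w, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context

# Toy values: the query is most similar to the first encoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [4.0, 0.0]
weights, context = attend(query, encoder_states)
```

Here the first input position receives the largest weight, so the context vector passed to the next prediction step is dominated by that position. In a translation model, a new set of weights would be computed for every output word.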

When did Attention Mechanisms become widely used in deep learning?

- Attention Mechanisms became widely used in deep learning around 2014, following advances in optimization and regularization techniques that made training deep neural networks more feasible.

What is a sequence-to-sequence translation problem?

- A sequence-to-sequence translation problem involves translating a sequence from one language to another, where the model must learn to generate an equivalent sequence in the target language based on the input sequence.

How has the field of NLP evolved with the advent of deep learning?

- The field of NLP has seen a significant shift towards using deep neural networks, with many traditional algorithms being replaced by neural network-based approaches. This has been driven by the ability of these networks to better model complex patterns and interactions in language data.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

Linguistik Digital - Video Material 2

Incidence vs Prevalence: Understanding Disease Metrics

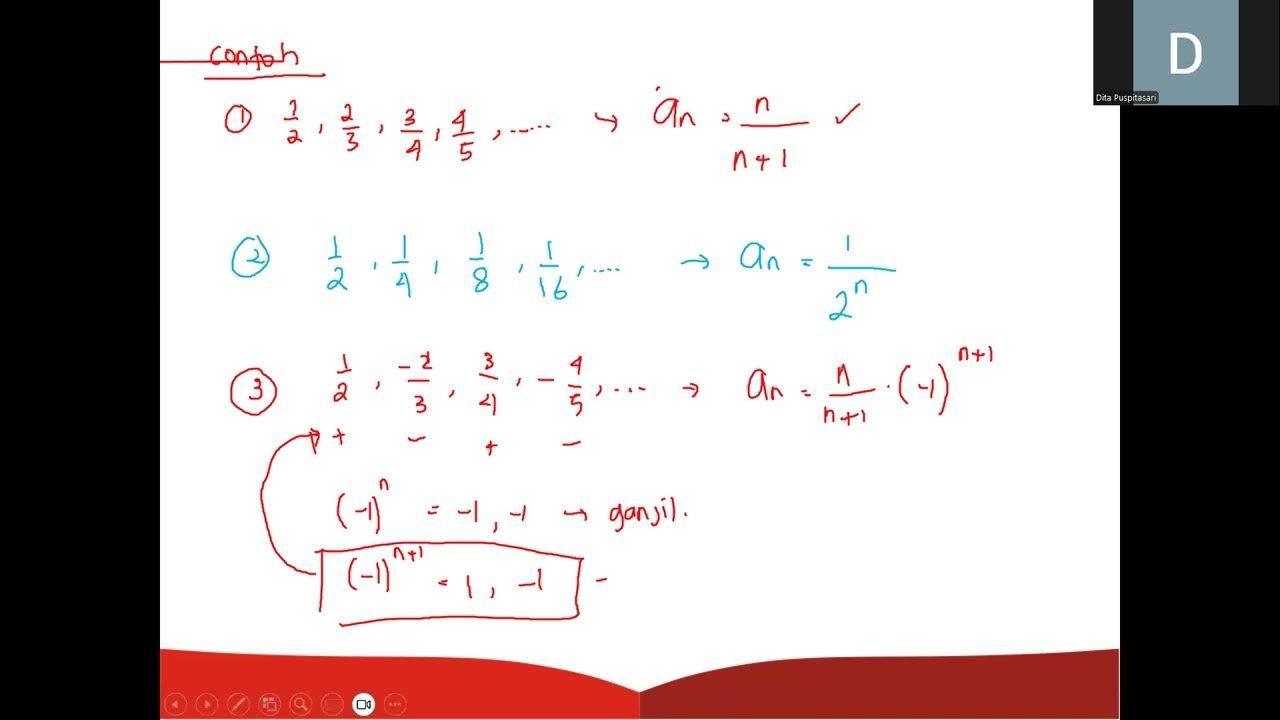

Kalkulus 1 - Barisan dan Deret (Part 1)

Database Design Tips | Choosing the Best Database in a System Design Interview

S6E10 | Intuisi dan Cara kerja Recurrent Neural Network (RNN) | Deep Learning Basic

Grade 9 Music:Music of Medieval, Renaissance, & Baroque Period with composers -Week2 (Deped Module).

5.0 / 5 (0 votes)