Instrumenting the Elastic APM Agent on Databricks

Summary

TLDR: In this video, Neil Mangy, Senior Principal Solution Architect at Elastic, demonstrates how to instrument Azure Databricks with the Elastic APM agent. He walks through setting up an init script that deploys the agent to the driver and executor nodes, adding the agent to Spark's extra Java options, and verifying the installation from a Databricks notebook. He then runs a sample Spark job to confirm that the agent captures metrics and that application performance shows up in Elastic Observability dashboards. The result is a step-by-step recipe for monitoring applications running on Databricks with Elastic APM.

Takeaways

- The video shows how to instrument Azure Databricks with the Elastic APM agent to monitor application performance.

- Databricks is built on Apache Spark, which runs a driver and a set of executors in separate JVMs, so the APM agent has to be attached to both.

- The Elastic APM agent is bootstrapped through the Spark driver and executor extra Java options (`spark.driver.extraJavaOptions` and `spark.executor.extraJavaOptions`).

- Databricks init scripts run an arbitrary shell script on every node at startup; the setup uses one to install the Elastic APM agent.

- The init script relies on a `getagent.sh` script that pulls the Elastic APM agent so it can be distributed to all nodes.

- To replicate the setup, you need an Azure Databricks account and an Elastic deployment.

- The Elastic APM server URL and secret token are available under 'Add Integrations' in Elastic, after selecting the agent language (Java in this case).

- The existing driver and executor extra Java options must be copied from the Environment tab of the Spark UI, and the Elastic APM settings appended to them on the same line.

- After configuring and restarting the cluster, a sanity check in a notebook confirms that the agent JAR is present, and a few sample Spark jobs give the agent activity to report.

- The Elastic Observability dashboards then show metrics for both the driver and the executors, confirming that the agent is monitoring the Spark jobs.

- Handle the secret token with care, and keep the appended Java options correctly formatted on a single line.
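The last takeaway is easy to get wrong in practice. The secret token sits in plain text inside the Java options, so it can leak whenever the cluster configuration is printed or logged. Below is a minimal Python sketch that masks the token before the options are shown anywhere. It assumes the token is passed as the system property `-Delastic.apm.secret_token=...` (or `-Delastic.apm.api_key=...`). The function name is illustrative and is not part of any Elastic or Databricks API.

```python
import re

# Matches the value of the token or API-key system property, up to the next whitespace.
_SECRET_RE = re.compile(r"(-Delastic\.apm\.(?:secret_token|api_key)=)\S+")


def redact_java_options(options: str) -> str:
    """Return a copy of a JVM options string with APM credentials replaced by '****'."""
    return _SECRET_RE.sub(r"\1****", options)
```

Only the logged copy is masked. The cluster itself still needs the real value.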

Q & A

What is the main purpose of the demonstration in this video?

- The demonstration shows how to instrument Databricks, specifically Azure Databricks, with the Elastic APM agent, so that you gain observability into application performance.

Why is the Elastic APM agent being integrated with Databricks?

- Azure and Databricks are seeing growing adoption across the industry. Integrating Elastic APM lets their users monitor their applications and gain insight into how they perform.

What does the architecture involve for adding the Elastic APM agent to Databricks?

- The Elastic APM agent is bootstrapped through the extra Java options of both the driver and the executors in Apache Spark, the engine underneath Azure Databricks. The agent JAR is kept on a local path on each node, and an init script distributes it to every Databricks node.
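To make the architecture concrete, here is a minimal Python sketch that builds the JVM flags once and assigns them to both the driver setting and the executor setting.

- The agent path, service name, URL and token are placeholders.
- `-javaagent` is the standard JVM flag for loading an agent.
- The `-Delastic.apm.*` properties are the Java agent's system-property form of its settings.
- Values containing spaces would need quoting, so the sketch assumes they have none.
- Merging with options the cluster already has is a separate step.

```python
def apm_spark_conf(agent_path: str, service_name: str,
                   server_url: str, secret_token: str) -> dict[str, str]:
    """Build driver and executor extraJavaOptions that attach the Elastic APM agent."""
    flags = " ".join([
        f"-javaagent:{agent_path}",                    # load the agent JAR into the JVM
        f"-Delastic.apm.service_name={service_name}",  # service name shown in Elastic APM
        f"-Delastic.apm.server_url={server_url}",      # APM endpoint copied from Elastic
        f"-Delastic.apm.secret_token={secret_token}",  # token that authenticates the agent
    ])
    # The driver and every executor run in separate JVMs, so both need the flags.
    return {
        "spark.driver.extraJavaOptions": flags,
        "spark.executor.extraJavaOptions": flags,
    }
```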

How does the init script help in the process of distributing the Elastic APM agent?

- Databricks runs the init script, an arbitrary shell script, on every node of the cluster at startup. The script pulls the Elastic APM agent from a publicly or privately accessible URL, so every node in the Azure Databricks cluster ends up with its own copy.
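The init script itself can be quite small. Databricks notebooks are often used to author it, so the sketch below generates its text from Python. This illustrates the idea and is not the script used in the video. It assumes:

- `curl` is available on the node image;
- the agent URL and the local path are yours to choose, for example a hypothetical `/databricks/jars/elastic-apm-agent.jar`.

```python
import posixpath
import shlex


def render_init_script(agent_url: str, local_path: str) -> str:
    """Return the text of a bash init script that downloads the APM agent JAR to local_path."""
    # Quote every value so paths or URLs with special characters stay a single argument.
    dest_dir = shlex.quote(posixpath.dirname(local_path))
    dest = shlex.quote(local_path)
    url = shlex.quote(agent_url)
    return "\n".join([
        "#!/bin/bash",
        "set -euo pipefail",            # abort the script on the first failing command
        f"mkdir -p {dest_dir}",         # make sure the target directory exists on this node
        f"curl -fsSL -o {dest} {url}",  # -f makes HTTP errors fail instead of saving an error page
        "",                             # end the file with a newline
    ])
```

You could then save the text wherever your workspace allows cluster init scripts to live. One option is `dbutils.fs.put(path, script, True)` on DBFS; another is a workspace file. Newer Databricks workspaces steer init scripts away from DBFS, so check what yours supports.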

What are the prerequisites to follow along with the demonstration?

- You need an Azure Databricks (or Databricks) account, an Elastic deployment, and enough access to a Databricks notebook and the cluster settings to configure and run the necessary scripts.

How do you obtain the Elastic APM URL and token?

- In Elastic, open 'Add Integrations', select Elastic APM and choose the agent language (Java in this case). Copy the APM server URL and secret token shown there; they are later passed to the agent as part of its configuration.
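Rather than pasting the token directly into a script or notebook, it is safer to keep it out of source code. Below is a minimal sketch that loads both values and sanity-checks them. It assumes the URL and token are exposed as the environment variables `ELASTIC_APM_SERVER_URL` and `ELASTIC_APM_SECRET_TOKEN`, which are the same names the Java agent itself reads. The function name is hypothetical.

```python
import os
from collections.abc import Mapping
from typing import Optional
from urllib.parse import urlparse


def load_apm_settings(env: Optional[Mapping[str, str]] = None) -> tuple[str, str]:
    """Read the APM server URL and secret token, failing fast if either is unusable."""
    env = os.environ if env is None else env
    server_url = env.get("ELASTIC_APM_SERVER_URL", "").strip()
    secret_token = env.get("ELASTIC_APM_SECRET_TOKEN", "").strip()
    parsed = urlparse(server_url)
    # A usable endpoint needs an http(s) scheme and a host, e.g. https://apm.example.com:443
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"invalid APM server URL: {server_url!r}")
    if not secret_token:
        raise ValueError("ELASTIC_APM_SECRET_TOKEN is empty")
    return server_url, secret_token
```

In a notebook, `dbutils.secrets.get(scope=..., key=...)` is a natural alternative source for the token. The scope and key names depend on how your secrets are set up.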

What is the role of the `getagent.sh` script in the setup?

- The `getagent.sh` script pulls the Elastic APM agent onto the Databricks file system (DBFS). The init script then references it to distribute the agent to all Databricks nodes.
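Below is a Python sketch of the download step. It assumes:

- the agent is fetched from Maven Central, where the Elastic APM Java agent is published as `co.elastic.apm:elastic-apm-agent`;
- the destination is a path such as a `/dbfs/...` mount or a node-local directory.

The version is deliberately left as a parameter. The code writes to a temporary file first and renames it into place, so an interrupted download does not leave a truncated JAR at the destination path. It also skips the download if the file is already there. The function names are hypothetical.

```python
import os
import shutil
import tempfile
import urllib.request


def maven_agent_url(version: str) -> str:
    """Maven Central location of the Elastic APM Java agent JAR for a given version."""
    return ("https://repo1.maven.org/maven2/co/elastic/apm/elastic-apm-agent/"
            f"{version}/elastic-apm-agent-{version}.jar")


def fetch_agent(url: str, dest_path: str) -> str:
    """Download the agent JAR to dest_path unless a non-empty copy is already there."""
    if os.path.isfile(dest_path) and os.path.getsize(dest_path) > 0:
        return dest_path                      # already staged, nothing to do
    dest_dir = os.path.dirname(dest_path) or "."
    os.makedirs(dest_dir, exist_ok=True)
    # Download into a temporary file next to the destination, then rename it.
    fd, tmp_path = tempfile.mkstemp(dir=dest_dir, suffix=".part")
    try:
        with os.fdopen(fd, "wb") as out, urllib.request.urlopen(url) as resp:
            shutil.copyfileobj(resp, out)
        os.replace(tmp_path, dest_path)
    finally:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)               # only left behind if download or rename failed
    return dest_path
```

Usage would look like `fetch_agent(maven_agent_url(version), dest)`, with your chosen agent version and destination path.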

What configuration changes need to be made to the Databricks cluster to instrument the APM agent?

- In the cluster's advanced options, set `spark.driver.extraJavaOptions` and `spark.executor.extraJavaOptions` in the Spark config. Each should load the agent with `-javaagent` and pass its settings: the service name, secret token and APM server URL.
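In the cluster UI, the Spark config box takes one `key value` pair per line, separated by a space. Below is a small Python sketch that renders settings in that form and refuses values that would spill onto a second line. The option values in any call are placeholders, and the function name is hypothetical.

```python
def render_spark_config(conf: dict[str, str]) -> str:
    """Render settings as 'key value' lines for the cluster's Spark config box."""
    lines = []
    for key, value in conf.items():
        value = value.strip()
        if "\n" in value or "\r" in value:
            # Each setting must fit on one line in this format.
            raise ValueError(f"{key} must be a single line")
        lines.append(f"{key} {value}")
    return "\n".join(lines)
```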

Why is it important to copy and paste the existing driver and executor extra Java options before adding APM configurations?

- The existing driver and executor options must be preserved. The APM settings are appended to them rather than replacing them, so the application keeps running as before while gaining APM monitoring.
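The preserve-and-append rule fits in a few lines. This sketch assumes the current value has been copied from the Environment tab of the Spark UI; the `-XX:+UseG1GC` value in the examples simply stands in for whatever your cluster shows. The function:

- appends the APM flags on the same line;
- rejects multi-line input;
- returns the options unchanged if the flags are already present, so reapplying it does not duplicate them.

The function name is illustrative.

```python
def append_java_options(existing: str, apm_flags: str) -> str:
    """Return the existing JVM options with the APM flags appended on the same line."""
    existing, apm_flags = existing.strip(), apm_flags.strip()
    for label, value in (("existing", existing), ("apm_flags", apm_flags)):
        if "\n" in value or "\r" in value:
            raise ValueError(f"{label} options must be on a single line")
    if not existing:
        return apm_flags          # nothing to preserve
    if not apm_flags or apm_flags in existing:
        return existing           # nothing to add, or already appended earlier
    return f"{existing} {apm_flags}"
```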

How can you verify that the Elastic APM agent is correctly installed and running?

- Run a sanity check in a Databricks notebook. First confirm that the agent JAR exists on the node. Then run some sample processing, such as counting the lines of a dataset with Spark, so that the agent has activity to report to Elastic Observability.
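The file-existence part of the sanity check can be written as a plain function, which keeps it testable outside Databricks. Below is a minimal sketch, assuming the agent JAR lives at a path of your choosing. It checks three things:

- the file exists;
- it is a valid zip archive, as every JAR is;
- the JVM options reference it through `-javaagent`.

The function name is hypothetical.

```python
import os
import zipfile


def check_agent(agent_path: str, java_options: str) -> list[str]:
    """Return a list of problems found; an empty list means the basic checks passed."""
    problems = []
    if not os.path.isfile(agent_path):
        problems.append(f"agent JAR not found at {agent_path}")
    elif not zipfile.is_zipfile(agent_path):
        problems.append(f"{agent_path} is not a valid JAR (zip) file")
    if f"-javaagent:{agent_path}" not in java_options:
        problems.append("JVM options do not load the agent via -javaagent")
    return problems
```

In a notebook you might pass `spark.sparkContext.getConf().get("spark.driver.extraJavaOptions", "")` as the second argument. Then run a small job such as `spark.read.text(path).count()` to give the agent something to report. This only inspects the driver node; each executor has its own filesystem.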

What is the final verification step to confirm that the APM agent is working correctly on Databricks?

- Open Elastic Observability and look for metrics from both the driver and the executors. If the setup is correct, the dashboards are populated with data reflecting the performance of the Spark jobs running on Databricks.

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

Build a Powerful Home SIEM Lab Without Hassle! (Step by Step Guide)

How To Setup ELK | Elastic Agents & Sysmon for Cybersecurity

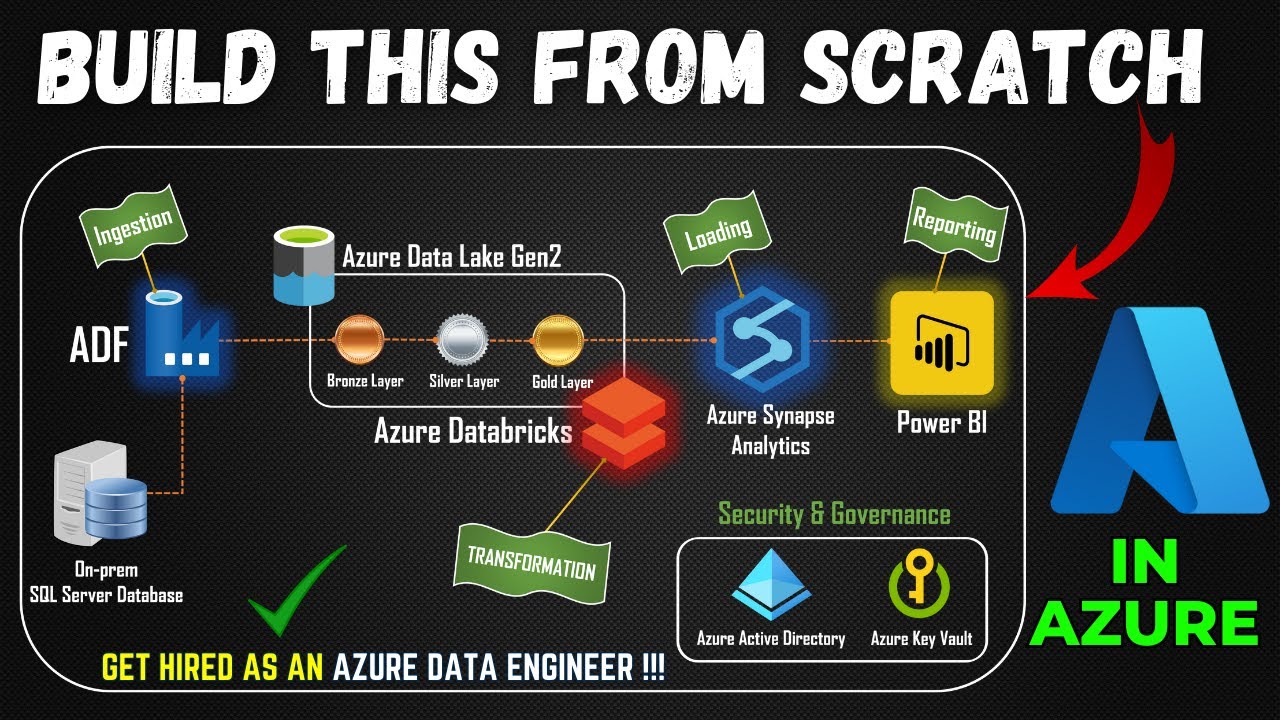

Part 1- End to End Azure Data Engineering Project | Project Overview

83. Databricks | Pyspark | Databricks Workflows: Job Scheduling

Price elasticity of supply

10 Mister Ekonomi | Elastisitas

5.0 / 5 (0 votes)