ChatGPT in 3 Minuten erklärt (ChatGPT Explained in 3 Minutes)

Summary

TLDR: This video explains how generative artificial intelligence works, specifically models like ChatGPT. It highlights how these systems are trained on vast amounts of text data, including Wikipedia, and how they learn language patterns and the probabilities of word sequences. The model breaks the input into small units called tokens and calculates the most likely response. However, it does not verify factual accuracy and may sometimes generate convincing but incorrect answers. The video concludes that although these models are based only on probability and language patterns, they can significantly impact daily life as text generators, chatbots, and even humanoid robots.

Takeaways

- 😀 AI models like ChatGPT rely heavily on human input to improve and function effectively.

- 😀 These models are trained on vast amounts of text, including entire sources like Wikipedia.

- 😀 AI language models analyze the probability of words following each other based on language patterns.

- 😀 At the beginning of training, human feedback is crucial to eliminate incorrect or unwanted responses.

- 😀 ChatGPT divides the input text into smaller units called tokens to understand and process the meaning of words and sentences.

- 😀 Tokens are the smallest components in a sentence and can include words, syllables, abbreviations, or punctuation marks.

- 😀 The AI model calculates the probability of the next token based on its position and relation to other tokens.

- 😀 The meaning of a word or phrase is determined by its surrounding context, such as 'bank' meaning a financial institution or the side of a river.

- 😀 The model generates responses by selecting the most statistically probable answer based on the input and training data.

- 😀 AI models like ChatGPT do not have factual knowledge and cannot verify the accuracy of the answers they generate.

- 😀 Despite their lack of factual verification, AI-generated responses can sound convincing, but should always be independently checked for accuracy.

Q & A

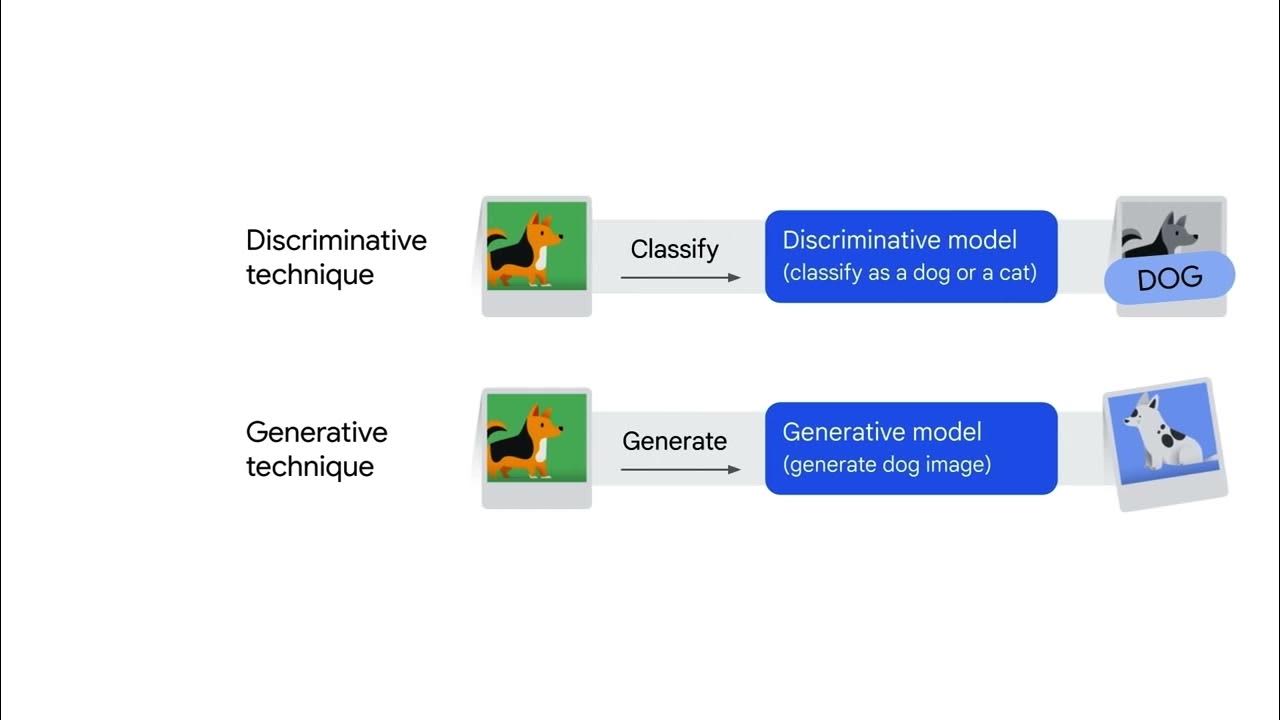

What is generative artificial intelligence and how does it work?

- Generative artificial intelligence (AI), such as GPT, learns language patterns from large text datasets. It generates responses from statistical probabilities, predicting the next word or token from the input so far.

How does GPT learn to generate text?

- GPT is trained on large amounts of text, including sources like Wikipedia. Through this training, it learns language patterns and the probability of words following one another. Feedback from human reviewers helps refine its responses over time.
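The idea of learning "the probability of words following one another" can be illustrated with a toy word-pair counter. This is a deliberately simplified sketch and not how GPT is actually trained, since GPT uses a neural network over tokens rather than a count table. The corpus and the names `train_bigram` and `next_word_probs` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows another in a list of sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts[word.lower()]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

# Tiny made-up corpus, for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
counts = train_bigram(corpus)
probs = next_word_probs(counts, "the")  # "cat" follows "the" in 2 of 6 cases
```

A word that never appeared in the corpus yields an empty dictionary here, which hints at why limited training data leads to weak predictions.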

What are tokens in the context of GPT?

- Tokens are small units of text, such as words, syllables, abbreviations, or punctuation marks. The input text is broken down into tokens, which the model processes to understand the meaning and context.
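To make the notion of tokens concrete, here is a minimal sketch that splits text into word and punctuation tokens and maps each to a numeric ID. It is only a stand-in: production models use learned subword vocabularies, and the function name `simple_tokenize` and the ID assignment below are assumptions for illustration.

```python
import re

def simple_tokenize(text):
    """Split text into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = simple_tokenize("ChatGPT doesn't verify facts.")
# tokens == ['ChatGPT', 'doesn', "'", 't', 'verify', 'facts', '.']

# Assign each distinct token an integer ID (arbitrary, illustrative vocabulary).
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
token_ids = [vocab[tok] for tok in tokens]
```

Note how "doesn't" is split into three pieces: a token does not have to be a whole word.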

How does GPT determine the next token in a sentence?

- GPT calculates which token is most likely to follow a given sequence. This prediction is based on the context of the surrounding tokens and the patterns it has learned during training.
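One common way to turn a model's raw scores into next-token probabilities is the softmax function. The sketch below assumes hypothetical scores for three candidate tokens; the numbers are invented, and a real model would score every token in its vocabulary.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(s - highest) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical scores for the token following "I deposited money at the".
scores = {"bank": 4.0, "river": 1.0, "moon": -1.0}
probs = softmax(scores)
most_likely = max(probs, key=probs.get)
```

With a different context, such as "We sat on the river", the scores and therefore the probabilities would shift, which is how the surrounding tokens influence the prediction.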

What happens when GPT processes an input text?

- The input text is divided into tokens. GPT then analyzes the position of these tokens and their relationships to each other, calculating the most likely response by predicting the sequence of the next tokens based on learned patterns.
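The token-by-token process described above can be sketched as a loop that repeatedly appends the most probable next token. This is a toy under stated assumptions: the lookup table `toy_model` is invented, it conditions only on the last token (a real model considers the whole context), and real systems often sample from the probabilities rather than always taking the top choice.

```python
def generate(model, prompt_tokens, max_new_tokens=5, end_token="<end>"):
    """Greedily append the most probable next token until the end token or the limit.

    Assumes `prompt_tokens` is non-empty.
    """
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:  # no known continuation for this token
            break
        best = max(candidates, key=candidates.get)
        if best == end_token:
            break
        tokens.append(best)
    return tokens

# Hypothetical next-token probabilities, keyed by the previous token.
toy_model = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.8, "<end>": 0.2},
    "down": {"<end>": 1.0},
}
result = generate(toy_model, ["the"])  # ['the', 'cat', 'sat', 'down']
```

Nothing in this loop checks whether the output is true. It only follows the probabilities, which is why fluent text can still be factually wrong.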

Can GPT verify facts in its responses?

- No, GPT does not have the ability to verify facts. It generates responses based on statistical probabilities and patterns in its training data, but it cannot check the accuracy of its answers.

Why can GPT's responses sometimes sound convincing but be incorrect?

- GPT's responses are based on statistical probabilities rather than factual verification. When it has limited data or insufficient context, it can generate responses that sound plausible but are actually incorrect.

How does GPT handle incorrect or unwanted answers during training?

- During training, human reviewers check the answers generated by GPT and exclude incorrect or undesirable responses. The model learns from this feedback to improve its future responses.

What role do training datasets play in the performance of GPT?

- Training datasets are crucial for GPT's performance. The model learns language patterns and meaning from the vast amount of data it's trained on. The quality and variety of the data directly affect the accuracy and relevance of the generated responses.

How does GPT's language model differ from actual factual knowledge?

- GPT's language model is based on probability and linguistic patterns, not factual knowledge. It generates text based on patterns learned during training, but it cannot independently verify the accuracy or truth of the content it produces.
