Number Systems

Summary

TLDR: This video explains the basics of number systems used in computing, focusing on binary, floating-point representation, and conversions between different numerical bases. The speaker discusses how computers handle fractional numbers using floating-point format, highlighting issues of precision and approximation in calculations. Practical techniques for estimating large binary numbers are shared, such as approximating powers of two with powers of ten for easier mental estimation. The video emphasizes the importance of these concepts in programming, helping viewers understand the internal workings of computers and the representation of large or small numbers in memory systems.

Takeaways

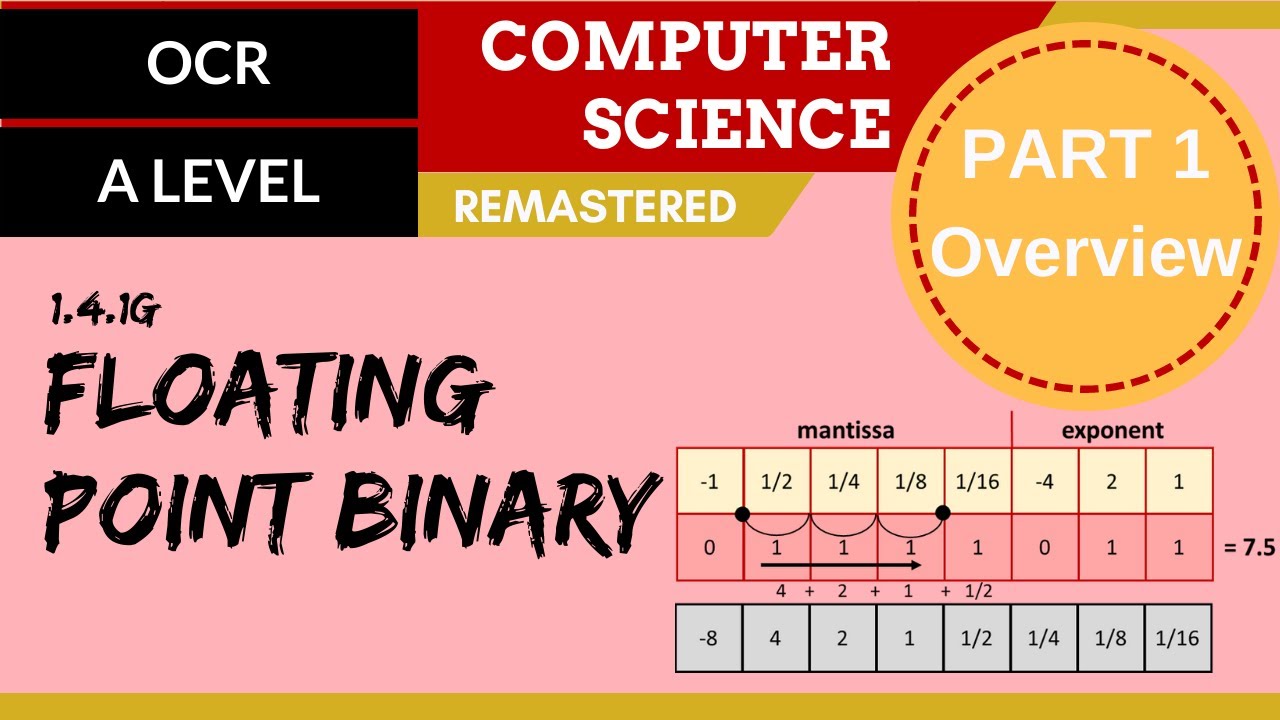

- 😀 Floating-point numbers in computers are represented using scientific notation, consisting of a sign, exponent, and mantissa.

- 😀 The mantissa represents the significant digits of the number, while the exponent adjusts its scale by applying a power of two.

- 😀 This method of representation allows for efficient handling of both very large and very small numbers without increasing the bit size excessively.

- 😀 However, floating-point numbers are not always precise due to rounding errors and binary representation limitations.

- 😀 For example, adding 0.1 and 0.2 in 64-bit floating point gives 0.30000000000000004 rather than exactly 0.3, because neither 0.1 nor 0.2 has an exact binary representation.

- 😀 Using more bits in the floating-point representation increases precision and shrinks these errors, although it does not eliminate them.

- 😀 Powers of two are a fundamental concept in computing, with 2^10 being approximately 1000, useful for converting between binary and decimal.

- 😀 This approximation helps when working with large powers of two, such as 2^30, which can be roughly translated to 10^9 for quick estimations.

- 😀 A simple rule of thumb follows from 2^10 ≈ 10^3: multiply the exponent by about 0.3 (slightly less than dividing it by 3) to turn a power of two into a power of ten for easier mental calculations.

- 😀 This method of approximation is particularly useful when working with large numbers, like the size of the IPv6 address space (128-bit addresses), where 2^128 can be quickly recognized as roughly 10^38.

- 😀 The video encourages practical exercises to further develop familiarity with binary and floating-point number systems through hands-on activities in programming.
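As one possible hands-on exercise, the sign, exponent, and mantissa fields described in the takeaways can be inspected directly. This is a minimal sketch, assuming Python floats are IEEE 754 64-bit doubles (true on common platforms); the helper name `decompose` is hypothetical and not from the video.

```python
import struct

def decompose(x: float) -> tuple[int, int, int]:
    """Split a 64-bit float into its raw sign, exponent, and mantissa fields."""
    # Reinterpret the 8 bytes of the double as one unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                   # 1 bit: 0 is positive, 1 is negative
    exponent = (bits >> 52) & 0x7FF     # 11 bits, stored with a bias of 1023
    mantissa = bits & ((1 << 52) - 1)   # 52 bits after the implicit leading 1
    return sign, exponent, mantissa

sign, exponent, mantissa = decompose(-6.5)
# -6.5 = -1.625 * 2**2, so the unbiased exponent is 2 and the significand is 1.625.
print(sign, exponent - 1023, 1 + mantissa / 2**52)  # 1 2 1.625
```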

Q & A

What is the main focus of the video?

- The video primarily focuses on explaining binary and other numeral systems, particularly how floating-point numbers are represented in computers and how to deal with their precision and scale.

Why are floating-point numbers represented in the format of sign, exponent, and mantissa?

- This format allows for the efficient representation of both very large and very small numbers without needing excessive memory space, as the exponent can vary widely to scale the number accordingly.
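To get a feel for how wide that range is, the limits of the platform's float type can be printed. This is a small sketch assuming CPython on a platform with IEEE 754 doubles; the variable names are illustrative.

```python
import sys

# Limits of the 64-bit float type as reported by the interpreter.
largest = sys.float_info.max             # about 1.8e308
smallest_normal = sys.float_info.min     # about 2.2e-308
mantissa_bits = sys.float_info.mant_dig  # significand bits, counting the implicit leading 1

print(largest, smallest_normal, mantissa_bits)
```

Only 11 exponent bits are enough to span more than 600 decimal orders of magnitude, while the 53-bit significand fixes how many significant digits each value carries.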

What problem arises when working with floating-point numbers in computers?

- The main issue with floating-point numbers is their limited precision, which can lead to rounding errors. For example, adding 0.1 and 0.2 in 64-bit floating point gives 0.30000000000000004 instead of the expected 0.3.
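The rounding error is easy to reproduce. The sketch below assumes 64-bit Python floats and uses `math.isclose` as one common way to compare with a tolerance instead of testing for exact equality.

```python
import math

total = 0.1 + 0.2
print(total)                     # 0.30000000000000004
print(total == 0.3)              # False: the two values differ in the last bit
print(math.isclose(total, 0.3))  # True: equal within the default relative tolerance
```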

How does the representation of fractional numbers in computers differ from how we normally express them?

- In computers, fractional numbers are usually stored in floating-point notation as binary fractions, which often makes them approximations. Many values with a short decimal form, such as 0.1, have no finite binary expansion, so the computer stores the nearest representable value rather than the exact one.
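The approximation can be made visible with the standard `fractions` module, which recovers the exact value a float holds. This is a sketch assuming 64-bit Python floats; the variable names are illustrative.

```python
from fractions import Fraction

# Fraction(float) gives the exact rational value stored in the float.
stored = Fraction(0.1)
print(stored == Fraction(1, 10))  # False: the stored value is not exactly 1/10

# The denominator is a power of two, because the float is a binary fraction.
d = stored.denominator
print(d & (d - 1) == 0)           # True

# Values that are sums of powers of two are stored exactly.
print(Fraction(0.5) == Fraction(1, 2))  # True
```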

What is the significance of the 2^10 ≈ 1000 approximation?

- Because 2^10 = 1024 is close to 10^3 = 1000, programmers can quickly estimate large binary numbers in a more familiar decimal form. This simplification aids in understanding and dealing with binary calculations.
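How good the approximation is can be checked with a few lines of arithmetic. The sketch below only uses built-in integer and float operations; the variable names are illustrative.

```python
exact = 2**10
approx = 10**3
relative_error = (exact - approx) / approx
print(exact, approx, relative_error)  # 1024 1000 0.024

# The error compounds when the rule is applied repeatedly: 2**30 versus 10**9.
print(2**30 / 10**9)                  # 1.073741824
```

The estimate is about 2.4% low for 2^10 and about 7% low for 2^30, which is usually acceptable for order-of-magnitude reasoning.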

Why is it difficult to conceptualize powers of two compared to powers of ten?

- Powers of ten are easier to grasp because they align with our daily experience and number system (e.g., millions, billions), whereas powers of two do not line up with decimal place values, so their magnitude is harder to judge intuitively.

How can the trick involving dividing the exponent by 3 help with understanding powers of two?

- Since 2^10 ≈ 10^3, every 10 in the exponent of two corresponds to about 3 in the exponent of ten. Multiplying the exponent by about 0.3 (dividing by 3 gives a slightly high estimate) therefore yields the order of magnitude in powers of ten. For example, 2^30 is roughly 10^9.
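Since 2^10 ≈ 10^3, each 10 in the exponent of two contributes about 3 to the exponent of ten. The sketch below compares that mental shortcut with the exact conversion factor log10(2) ≈ 0.30103; both function names are hypothetical.

```python
import math

def estimate_decimal_exponent(n: int) -> float:
    """Mental shortcut: 2**n is about 10**(n * 3 / 10)."""
    return n * 3 / 10

def exact_decimal_exponent(n: int) -> float:
    """Exact conversion: 2**n == 10**(n * log10(2))."""
    return n * math.log10(2)

for n in (10, 30, 64):
    print(n, estimate_decimal_exponent(n), round(exact_decimal_exponent(n), 2))
```

The shortcut stays within a fraction of one decimal order of magnitude for the exponents programmers typically meet.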

What practical scenario does the video mention where understanding binary exponents is useful?

- The video mentions IPv6 addresses, which are 128 bits long. Using the trick for estimating powers of two, one can quickly see that 2^128 is roughly 10^38, which is useful when conceptualizing the size of the address space.
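Python integers have arbitrary precision, so the estimate for a 128-bit address space can be checked against the exact value. This is a small sketch; the variable names are illustrative.

```python
address_count = 2**128           # number of distinct 128-bit values
print(address_count)             # exact integer, no rounding
print(len(str(address_count)))   # 39 decimal digits, i.e. between 10**38 and 10**39

estimate = 128 * 3 / 10          # mental shortcut from 2**10 being about 10**3
print(estimate)                  # 38.4
```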

What is the relationship between binary and decimal systems highlighted in the video?

- The video emphasizes the difficulty of working with binary numbers compared to decimal numbers, which are more intuitive for most people. It offers strategies like approximating powers of two using powers of ten to bridge this gap.

How can larger bit widths help improve precision with floating-point numbers?

- Using larger bit widths in floating-point representation adds mantissa bits, which increases the available precision and reduces rounding errors. The results become more accurate, although values such as 0.1 are still stored only approximately.
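The effect of bit width can be observed by rounding the same value to 32 bits and comparing the exact errors. This is a sketch assuming IEEE 754 formats, with 32-bit rounding emulated through the standard `struct` module; the helper name `as_float32` is hypothetical.

```python
import struct
from fractions import Fraction

def as_float32(x: float) -> float:
    """Round x to 32-bit precision and return the result as a Python float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

target = Fraction(1, 10)  # the exact decimal value 0.1

# Exact distance between the stored value and 1/10 for each width.
error64 = abs(Fraction(0.1) - target)
error32 = abs(Fraction(as_float32(0.1)) - target)

print(float(error32))  # on the order of 1e-9
print(float(error64))  # on the order of 1e-18
```

Neither error is zero, but the 64-bit value is closer to 0.1 by roughly nine decimal orders of magnitude.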

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

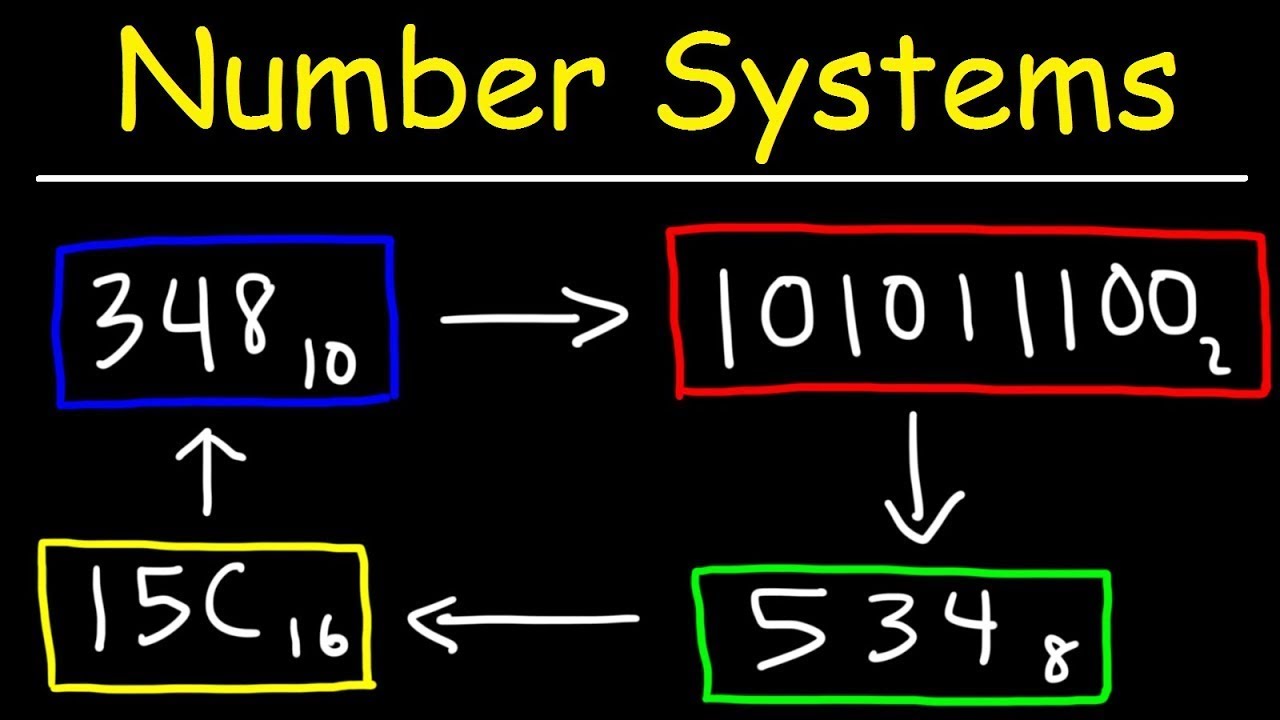

Number Systems Introduction - Decimal, Binary, Octal & Hexadecimal

Tutorial Lengkap: Cara Konversi Bilangan Desimal ke Biner, Oktal dan Hexadesimal

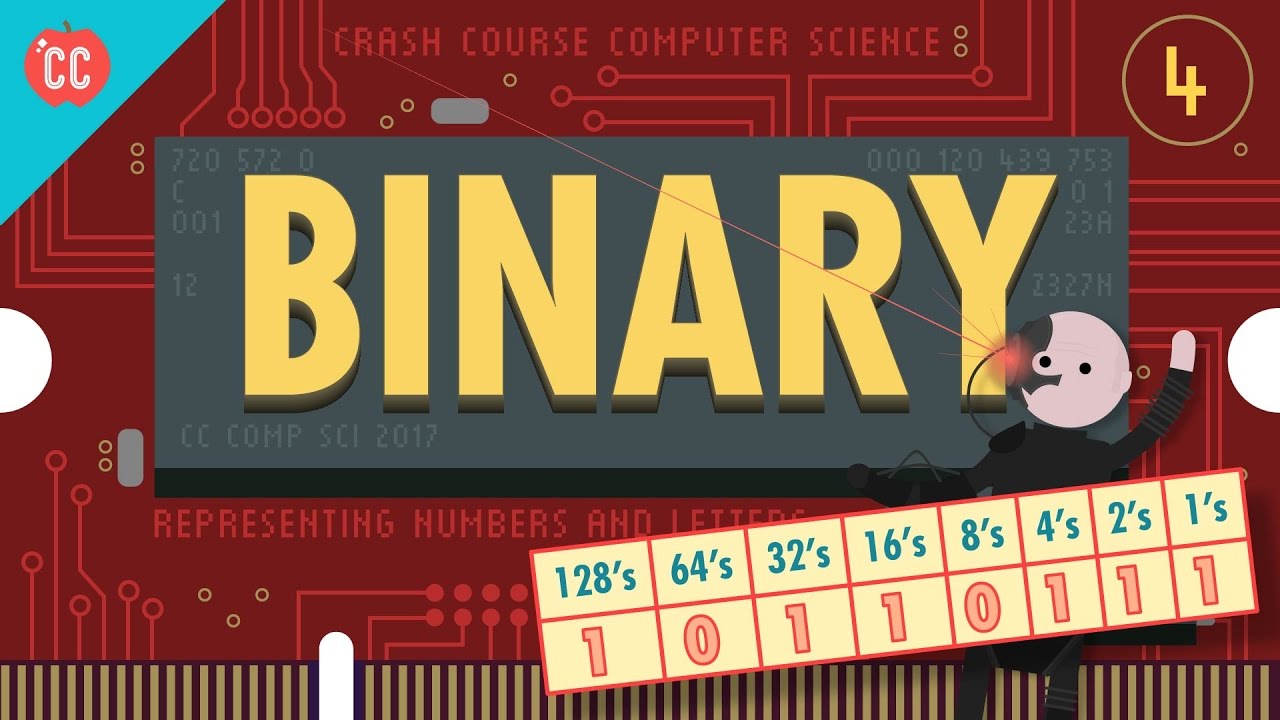

Representing Numbers and Letters with Binary: Crash Course Computer Science #4

SISTEM BILANGAN - Sistem Komputer

79. OCR A Level (H046-H446) SLR13 - 1.4 Floating point binary part 1 - Overview

Teknologi Digital • Part 1: Pengertian Teknologi Digital, Sistem Bilangan, dan Kode Biner

5.0 / 5 (0 votes)