How Prompt Compression Can Make You a Better Prompt Engineer

Summary

TLDR

In this video, Mark from Prompt Advisors explains the concept of 'prompt compression,' a technique for shortening large prompts without losing their effectiveness. He demonstrates two methods: a 'lazy' approach that uses natural-language instructions to trim unnecessary tokens, and a more technical approach that uses algorithms to identify and remove low-value tokens. Mark highlights the benefits of prompt compression, chiefly savings on token costs, especially in business and enterprise settings. He also shares tools and frameworks, such as a custom GPT and Microsoft’s LLMLingua, to help with the compression process. The video aims to sharpen prompt-engineering skills for more efficient AI use.

Takeaways

- 😀 Prompt compression helps reduce the length of a prompt without losing essential information, improving efficiency in AI interactions.

- 😀 Longer prompts are not necessarily more effective; compressing a prompt can achieve similar outcomes with fewer tokens.

- 😀 Large language models (LLMs) are probabilistic: they predict the most likely next token from the tokens they are given, so tokens that carry little information can often be removed with little or no loss in accuracy.

- 😀 The lazy method of prompt compression involves manually identifying and removing filler words or phrases while keeping the core meaning intact.

- 😀 The technical method involves using algorithms to identify and remove low-value tokens while preserving high-value ones.

- 😀 Tools like custom GPTs and frameworks such as Microsoft’s LLMLingua can be used to automate prompt compression.

- 😀 A compressed prompt can save a significant number of tokens, which translates to cost savings, especially in enterprise applications.

- 😀 Using prompt compression, businesses can save money while still generating high-quality outputs from LLMs.

- 😀 Compression techniques are useful for different applications, including custom GPTs and voice agent systems, where character or token limits are important.

- 😀 The balance between reducing token count and maintaining output quality is essential; at high request volumes, even small per-prompt token reductions add up to significant cost savings.

- 😀 Prompt compression is a crucial skill for prompt engineers, allowing them to create more efficient, effective, and cost-effective AI prompts.

Q & A

What is prompt compression and why is it important?

-Prompt compression involves shortening a large or verbose prompt while retaining its essential meaning and functionality. It is important because it helps improve efficiency, reduce resource consumption, and optimize AI interactions, particularly when working within token or character limits.

How does the 'lazy method' for prompt compression work?

-The lazy method involves manually identifying and removing unnecessary words or phrases from a prompt. This is done by focusing on the core instructions and eliminating superfluous elements, making the prompt more concise without losing important context.
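As a rough illustration, the filler-trimming step can be mechanized with a few regular expressions. This is a minimal Python sketch, not the method shown in the video (which relies on human judgment or a natural-language instruction to a model); the `FILLER_PATTERNS` list and the `lazy_compress` function are hypothetical examples.

```python
import re

# Hypothetical list of filler phrases; tune it for your own prompts.
FILLER_PATTERNS = [
    r"\bplease\b",
    r"\bkindly\b",
    r"\bI would like you to\b",
    r"\bcould you\b",
    r"\bmake sure to\b",
    r"\bbasically\b",
    r"\bjust\b",
    r"\breally\b",
    r"\bvery\b",
]


def lazy_compress(prompt: str) -> str:
    """Strip filler phrases, then tidy leftover whitespace and punctuation."""
    text = prompt
    for pattern in FILLER_PATTERNS:
        text = re.sub(pattern, "", text, flags=re.IGNORECASE)
    # Collapse runs of whitespace left behind by the deletions.
    text = re.sub(r"\s{2,}", " ", text)
    # Remove spaces that now sit before punctuation, e.g. "word ," -> "word,".
    text = re.sub(r"\s+([,.;:!?])", r"\1", text)
    text = text.strip()
    # Re-capitalize the first character in case a leading filler word was removed.
    return text[:1].upper() + text[1:]


original = ("Please could you just write a very short summary of the report, "
            "and make sure to list the key risks.")
print(lazy_compress(original))
# → Write a short summary of the report, and list the key risks.
```

A fixed word list is brittle: words like "just" or "very" can carry real meaning in some prompts, so review the output before relying on it.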

What is the technical method for prompt compression?

-The technical method uses algorithms and tools like **LLMLingua** to automatically identify high-value tokens and remove or paraphrase low-value ones. This method is more systematic and can handle complex prompts more efficiently.
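The core idea behind token-level compression can be sketched in a few lines: score each token by how much information it carries, then keep only the highest-scoring fraction in its original order. LLMLingua scores tokens with a small language model; purely as an assumption for a self-contained example, this sketch swaps in a unigram frequency model built from a tiny reference corpus, where rarer words count as more informative. `build_unigram_model`, `compress`, and `keep_ratio` are hypothetical names, not LLMLingua's API.

```python
import math
import re
from collections import Counter


def build_unigram_model(corpus: str):
    """Return a function giving -log2 p(token) under an add-one-smoothed unigram model."""
    counts = Counter(re.findall(r"\w+", corpus.lower()))
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen tokens

    def surprisal(token: str) -> float:
        p = (counts[token.lower()] + 1) / (total + vocab)
        return -math.log2(p)  # rare (low-probability) tokens score higher

    return surprisal


def compress(prompt: str, surprisal, keep_ratio: float = 0.6) -> str:
    """Keep the most informative fraction of whitespace-separated tokens."""
    tokens = prompt.split()
    n_keep = max(1, round(len(tokens) * keep_ratio))
    # Rank positions by surprisal, most informative first; the stable sort keeps ties in original order.
    ranked = sorted(range(len(tokens)),
                    key=lambda i: surprisal(re.sub(r"\W", "", tokens[i])),
                    reverse=True)
    keep = sorted(ranked[:n_keep])  # restore the original word order
    return " ".join(tokens[i] for i in keep)


# Hypothetical reference corpus of very common words.
corpus = "the the the a a of of to to and and for for please please you you can can"
surprisal = build_unigram_model(corpus)
prompt = "Please summarize the quarterly revenue report for the board"
print(compress(prompt, surprisal))
# → summarize quarterly revenue report board
```

A unigram model ignores context, so it cannot tell when a common word is essential in a particular sentence; context-aware scoring from a real language model is the main reason to prefer a tool like LLMLingua over this toy.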

What are some practical use cases for prompt compression?

-Prompt compression is especially useful for custom GPTs with character constraints, voice agents, or any system that requires concise prompts to improve performance and reduce costs. It helps when handling large-scale data or repetitive tasks in business applications.

How does token reduction help save costs in AI applications?

-LLM APIs typically bill per token, so reducing the number of tokens in a prompt directly lowers the cost of each call, along with the compute needed to process it. In business settings with large volumes of prompts, these per-call savings add up to significant totals.
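The savings are simple arithmetic: tokens per prompt, times prompts per period, times the price per token. The sketch below uses assumed figures (prompt sizes, daily volume, and a price of $2.50 per million input tokens) purely for illustration; substitute your provider's actual rates.

```python
def monthly_prompt_cost(tokens_per_prompt: int, prompts_per_day: int,
                        usd_per_million_tokens: float, days: int = 30) -> float:
    """Input-token cost of sending a prompt repeatedly (output tokens are ignored)."""
    total_tokens = tokens_per_prompt * prompts_per_day * days
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Assumed scenario: a 2,000-token prompt compressed to 800 tokens,
# sent 10,000 times a day at a hypothetical $2.50 per million input tokens.
before = monthly_prompt_cost(2_000, 10_000, 2.50)
after = monthly_prompt_cost(800, 10_000, 2.50)
print(f"before ${before:,.2f}/month, after ${after:,.2f}/month, saved ${before - after:,.2f}")
# → before $1,500.00/month, after $600.00/month, saved $900.00
```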

What role do 'command words' play in prompt compression?

-Command words like 'create,' 'analyze,' or 'generate' are often retained during prompt compression because they are high-value, directionally important terms that guide the AI's response. These words are essential for maintaining the prompt's core function.
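One practical safeguard is to confirm that command words survive compression. This minimal sketch compares the command verbs found in the original and compressed prompts; the `COMMAND_WORDS` set is a hypothetical, hand-picked list, and plain word matching will miss inflected forms such as "analyzing".

```python
import re

# Hypothetical set of command verbs worth protecting; extend it for your domain.
COMMAND_WORDS = {"create", "analyze", "generate", "summarize",
                 "list", "write", "compare", "explain"}


def command_words(prompt: str) -> set[str]:
    """Return the command verbs that appear as whole words in the prompt."""
    return {w for w in re.findall(r"[a-z]+", prompt.lower()) if w in COMMAND_WORDS}


def missing_commands(original: str, compressed: str) -> set[str]:
    """Command words present in the original prompt but absent from the compressed one."""
    return command_words(original) - command_words(compressed)


original = "Please analyze the attached sales data and then generate a short summary table."
compressed = "Analyze sales data; short summary table."
print(missing_commands(original, compressed))  # → {'generate'}
```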

What is the relationship between token compression and the performance of an AI model?

-Token compression reduces the size of the prompt without compromising its essential meaning, helping the AI model to process the input more efficiently. Although the output may be more succinct, the AI can still produce a coherent result, similar to the original prompt.

Can prompt compression be applied to both technical and non-technical users?

-Yes, prompt compression can be applied by both technical and non-technical users. For non-technical users, tools like the **Bolt UI** provide a simple, user-friendly interface to compress prompts, while technical users can implement more advanced algorithms and frameworks.

What tools were demonstrated for prompt compression in the video?

-The video demonstrated tools like a custom GPT for prompt compression and **Google Colab** for running a technical compression algorithm. Additionally, a tool called **Bolt** was showcased for an easy-to-use UI for compressing prompts.

Why are LLMs not considered inherently 'smart' according to the video?

-LLMs (Large Language Models) are not inherently 'smart' because they do not understand in the human sense. They are probabilistic models that generate responses by predicting the most likely tokens based on input, which means their behavior is based on probability, not true understanding.
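To make the "probabilistic" point concrete: a model assigns a score (logit) to every candidate next token, a softmax turns those scores into probabilities, and greedy decoding picks the most probable one. The logits below are invented for illustration, not taken from any real model.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw model scores (logits) into a probability distribution."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


# Hypothetical scores for candidate next tokens after "The capital of France is".
logits = {"Paris": 6.0, "Lyon": 2.5, "a": 1.0, "the": 0.5}
probs = softmax(logits)
next_token = max(probs, key=probs.get)  # greedy decoding: take the most likely token
print(next_token, round(probs[next_token], 3))
```

Real systems often sample from this distribution (for example, with a temperature setting) instead of always taking the top token, which is why the same prompt can produce different outputs.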

Outlines

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantMindmap

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantKeywords

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantHighlights

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantTranscripts

Cette section est réservée aux utilisateurs payants. Améliorez votre compte pour accéder à cette section.

Améliorer maintenantVoir Plus de Vidéos Connexes

95% of People Writing Prompts Miss These Two Easy Techniques

Getting Started with Prompt Engineering: How to Sell ChatGPT Prompts on Promptbase

How to Unlock ANY Framework's Potential with Meta Prompting

The Perfect Prompt Generator No One Knows About

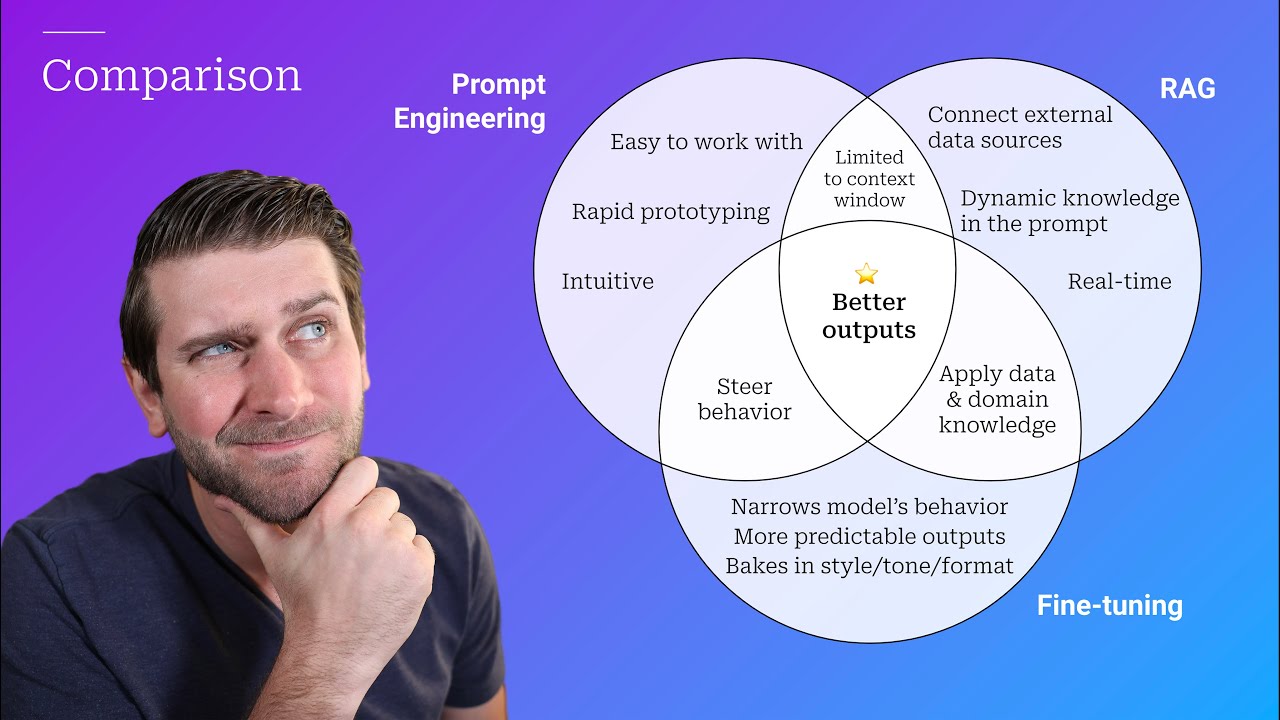

Prompt Engineering, RAG, and Fine-tuning: Benefits and When to Use

Lesson 5 of Prompt Engineering: Evaluating & Refining Prompts

5.0 / 5 (0 votes)