Training an AI to create poetry (NLP Zero to Hero - Part 6)

Summary

TLDR: In this video, Laurence Moroney walks viewers through using TensorFlow to build a text generation model trained on traditional Irish song lyrics. He covers the key steps: tokenizing the text, generating input sequences, padding them, and training a neural network to predict the next word in a sequence. The model uses a bidirectional LSTM, with categorical cross-entropy as the loss function. Once trained, it generates poetry by repeatedly predicting the next word from an input sequence, reaching roughly 70-75% accuracy on the training data.

Takeaways

- 🤖 The series focuses on teaching the basics of Natural Language Processing (NLP) using TensorFlow.

- 🔡 Tokenization and sequencing of text are key steps in preparing text for training neural networks.

- 💡 Sentiment in text is represented through embeddings, and long text sequences are learned via recurrent neural networks (RNNs) and LSTMs.

- 🎶 The video demonstrates training a model on the lyrics of traditional Irish songs to generate poetry.

- 📜 The corpus is tokenized, and the vocabulary count is increased by one to allow for an out-of-vocabulary token.

- 🔢 Input sequences are generated by breaking down each line of lyrics into smaller tokenized sequences for training.

- 🧩 Padding sequences is important to ensure that all input sequences have a uniform length for model training.

- 💻 X (features) and Y (labels) are created by using the input sequences to predict the next word in a sentence.

- 📊 The model architecture includes an embedding layer, a bidirectional LSTM, and a dense layer to output predictions.

- 🔄 The final model can be used to predict the next word in a sequence, generating new text based on learned patterns.
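
The tokenization step in the takeaways above can be sketched in plain Python. This is a simplified stand-in for the Keras tokenizer used in the video, not the video's code: the helper names `build_word_index` and `line_to_sequence` and the two lyric-like lines are made up for illustration, and ids are assigned in order of first appearance rather than by word frequency. The extra vocabulary slot here simply accounts for index 0, which this sketch leaves unused so it can later serve as the padding value.

```python
def build_word_index(corpus_lines):
    """Map each distinct lowercase word to an integer id, starting at 1."""
    word_index = {}
    for line in corpus_lines:
        for word in line.lower().split():
            if word not in word_index:
                word_index[word] = len(word_index) + 1
    return word_index


def line_to_sequence(line, word_index):
    """Replace each known word in a line with its integer id."""
    return [word_index[w] for w in line.lower().split() if w in word_index]


# Hypothetical two-line corpus (not taken from the video's dataset).
corpus = [
    "the rain falls on the green hills",
    "the wind sings over the hills",
]

word_index = build_word_index(corpus)
# One extra slot so that id 0 is free for padding (assumption of this sketch).
total_words = len(word_index) + 1
first_line_ids = line_to_sequence(corpus[0], word_index)
```

With this toy corpus, `total_words` becomes the size of the model's output layer, since the network predicts one probability per vocabulary entry.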

Q & A

What is the primary goal of the video?

- The primary goal of the video is to show how to create a neural network model using TensorFlow to generate poetry based on the lyrics of traditional Irish songs.

What NLP techniques have been discussed in this video series?

- The video series covers techniques like tokenizing and sequencing text, using embeddings, and learning text semantics over long sequences using recurrent neural networks and LSTMs.

What is the significance of padding in this model?

- Padding adds zeros to shorter sequences so that every sequence has the same length, which lets the model process inputs that originally varied in length in a consistent way.
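
A minimal sketch of padding in plain Python, assuming zeros are added at the front of each sequence (pre-padding) so that the final token always sits in the last position. The function name `pad_pre` is hypothetical; in a TensorFlow workflow a library utility would normally do this job.

```python
def pad_pre(sequences, pad_value=0):
    """Left-pad every sequence with pad_value up to the longest length."""
    max_len = max(len(seq) for seq in sequences)
    return [[pad_value] * (max_len - len(seq)) + list(seq) for seq in sequences]


# Three token-id sequences of different lengths (toy values).
padded = pad_pre([[1, 2], [1, 2, 3], [1, 2, 3, 4]])
```

Padding at the front is convenient for next-word prediction because the last column of the padded matrix can then be sliced off as the label.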

Why doesn’t the model require a validation dataset for generating text?

- For text generation, the model is focused on spotting patterns in the training data, so all available data is used to learn those patterns, without needing validation.

What is the purpose of generating n-grams from tokenized text?

- Generating n-grams allows the model to learn to predict the next word based on the previous sequence of words. This is essential for building a model that can generate coherent text.
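
To illustrate, one tokenized line can be expanded into every prefix of length two or more, so that each prefix ends in the word the model should learn to predict. This is a sketch with a hypothetical helper name, `ngram_prefixes`, and toy token ids.

```python
def ngram_prefixes(token_ids):
    """Return every prefix of token_ids that contains at least two tokens."""
    return [token_ids[: i + 1] for i in range(1, len(token_ids))]


# A line of four tokens yields three training sequences.
prefixes = ngram_prefixes([1, 2, 3, 4])
```

A line of n tokens therefore contributes n - 1 training sequences, which is how a fairly small set of lyrics can still produce many examples.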

Why does the model use an LSTM in a bidirectional setup?

- Using a bidirectional LSTM helps the model learn from both past and future contexts in the sequence, improving its ability to predict the next word.
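
A hedged Keras sketch of the architecture described in this summary: an embedding layer, a bidirectional LSTM, and a dense softmax layer with one output per vocabulary word. The layer sizes (`embedding_dim=64`, `lstm_units=32`), the `adam` optimizer, and the helper name `build_model` are assumptions for illustration; the summary does not state the video's actual hyperparameters.

```python
import tensorflow as tf


def build_model(total_words, max_sequence_len, embedding_dim=64, lstm_units=32):
    """Embedding -> bidirectional LSTM -> softmax over the vocabulary."""
    model = tf.keras.Sequential([
        # Inputs are padded sequences with the final (label) token removed.
        tf.keras.Input(shape=(max_sequence_len - 1,)),
        tf.keras.layers.Embedding(total_words, embedding_dim),
        tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(lstm_units)),
        # One probability per word in the vocabulary.
        tf.keras.layers.Dense(total_words, activation="softmax"),
    ])
    model.compile(
        loss="categorical_crossentropy",
        optimizer="adam",
        metrics=["accuracy"],
    )
    return model


# Toy sizes: a 10-word vocabulary and sequences padded to length 5.
model = build_model(total_words=10, max_sequence_len=5)
```

Because the output layer has `total_words` units, the labels passed to `fit` need to be one-hot vectors of the same width.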

What loss function is used, and why?

- Categorical cross-entropy is used as the loss function because the model is predicting across a large set of word classes, and this loss is well suited to multi-class classification problems.
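
For a single example, categorical cross-entropy reduces to the negative log of the probability the model assigned to the correct word. The following plain-Python sketch shows that arithmetic on toy values; the function name and the `eps` guard against `log(0)` are illustrative choices, not the framework's implementation.

```python
import math


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    """Loss for one example: -sum(target * log(predicted probability))."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


target = [0, 1, 0]  # the correct next word is class 1

confident_loss = categorical_cross_entropy(target, [0.1, 0.8, 0.1])
unsure_loss = categorical_cross_entropy(target, [0.4, 0.2, 0.4])
```

The loss shrinks as the probability on the correct word grows, which is what pushes the model toward the right next word during training.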

How is the model trained to predict the next word in a sequence?

- The model is trained by taking sequences of words (input) and the following word (label). It learns to predict the next word based on the previous sequence using a categorical prediction approach.
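
The split into inputs and labels can be sketched as follows, assuming the sequences were padded at the front so that the last element of each row is the word to predict. The helper name `split_features_labels` and the toy values are hypothetical; labels are one-hot encoded to match a categorical loss.

```python
def split_features_labels(padded, total_words):
    """Use all but the last token as input and the last token as a one-hot label."""
    xs = [row[:-1] for row in padded]
    label_ids = [row[-1] for row in padded]
    ys = [
        [1 if i == label else 0 for i in range(total_words)]
        for label in label_ids
    ]
    return xs, ys


# Toy pre-padded sequences over a 5-slot vocabulary (ids 0 to 4).
padded_rows = [[0, 0, 1, 2], [0, 1, 2, 3], [1, 2, 3, 4]]
xs, ys = split_features_labels(padded_rows, total_words=5)
```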

What does the model's accuracy of 70-75% indicate?

- An accuracy of 70-75% means the model predicts the correct next word 70-75% of the time for sequences it has learned during training.

How does the model generate poetry after being trained?

- After training, the model can generate poetry by being seeded with a sequence of words. It predicts the next word, adds it to the sequence, and repeats the process to generate a longer sequence of text.
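
The seed, predict, append loop can be sketched without a trained network. Here `toy_predict` is a made-up stand-in for the model's prediction call: it returns a probability list over a 5-slot vocabulary that always favors the id after the last one seen. The function names, the greedy choice of the most probable word, and the toy sizes are all assumptions for illustration.

```python
def generate(seed_ids, predict_next, num_words, max_len):
    """Repeatedly predict the most likely next id and append it to the sequence."""
    ids = list(seed_ids)
    for _ in range(num_words):
        # Keep the most recent max_len - 1 tokens and left-pad with zeros.
        window = ids[-(max_len - 1):]
        window = [0] * (max_len - 1 - len(window)) + window
        probs = predict_next(window)
        next_id = max(range(len(probs)), key=probs.__getitem__)
        ids.append(next_id)
    return ids


def toy_predict(window):
    """Stand-in for a trained model: cycle through ids 1..4, never 0."""
    probs = [0.0] * 5
    probs[window[-1] % 4 + 1] = 1.0
    return probs


generated = generate([1, 2], toy_predict, num_words=4, max_len=4)
```

With a real model, the ids in `generated` would be mapped back to words through the tokenizer's word index to produce the final text.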


Training a model to recognize sentiment in text (NLP Zero to Hero - Part 3)

Sequencing - Turning sentences into data (NLP Zero to Hero - Part 2)

ML with Recurrent Neural Networks (NLP Zero to Hero - Part 4)

Prepare your dataset for machine learning (Coding TensorFlow)

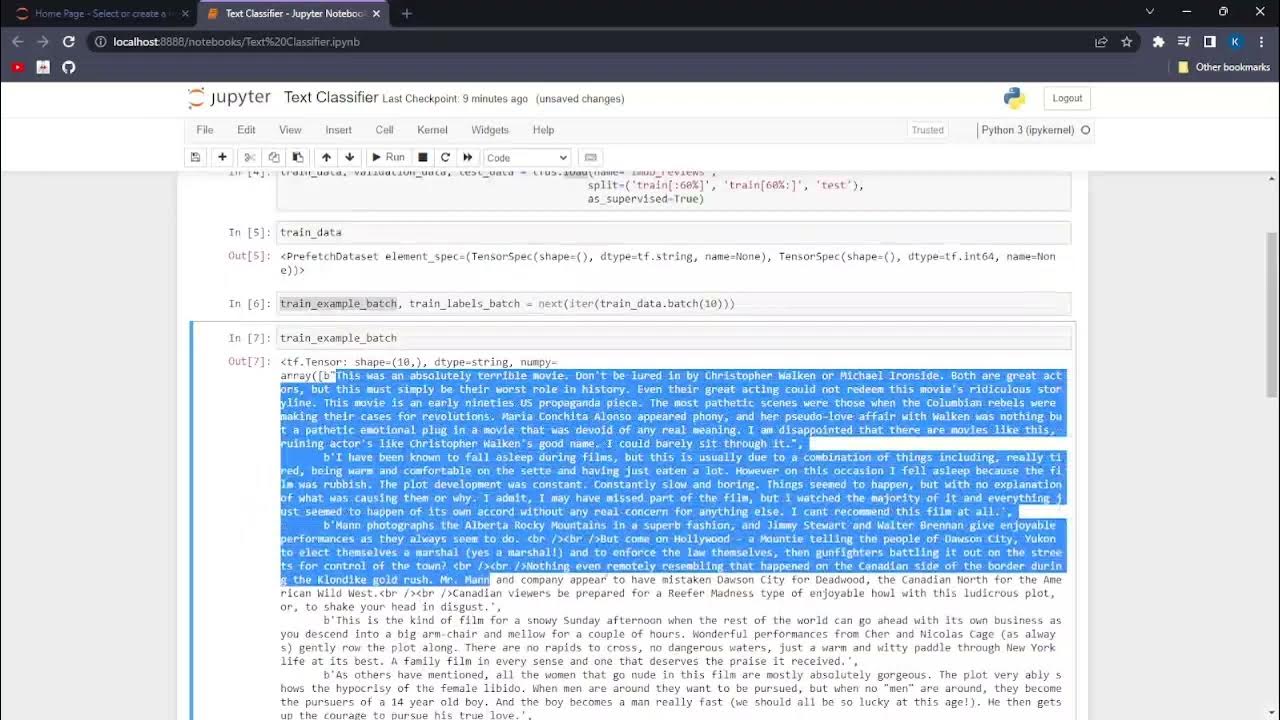

AIP.NP1.Text Classification with TensorFlow

Encoder-Decoder Architecture: Lab Walkthrough

5.0 / 5 (0 votes)