Recurrent Neural Networks (RNNs), Clearly Explained!!!

Summary

TLDR: In this StatQuest episode, Josh Starmer introduces recurrent neural networks (RNNs), emphasizing their ability to handle sequential data of varying lengths, making them well suited to tasks like stock price prediction. He explains how RNNs use feedback loops to process sequential inputs and demonstrates their predictive capabilities using simplified stock market data from StatLand. Despite their potential, Starmer highlights the challenges RNNs face, such as the Vanishing/Exploding Gradient Problem, which hinders training effectiveness. The episode concludes with a teaser for future discussions on advanced solutions like Long Short-Term Memory Networks.

Takeaways

- 🧠 Recurrent Neural Networks (RNNs) are designed to handle sequential data, making them suitable for tasks like predicting stock prices in StatLand.

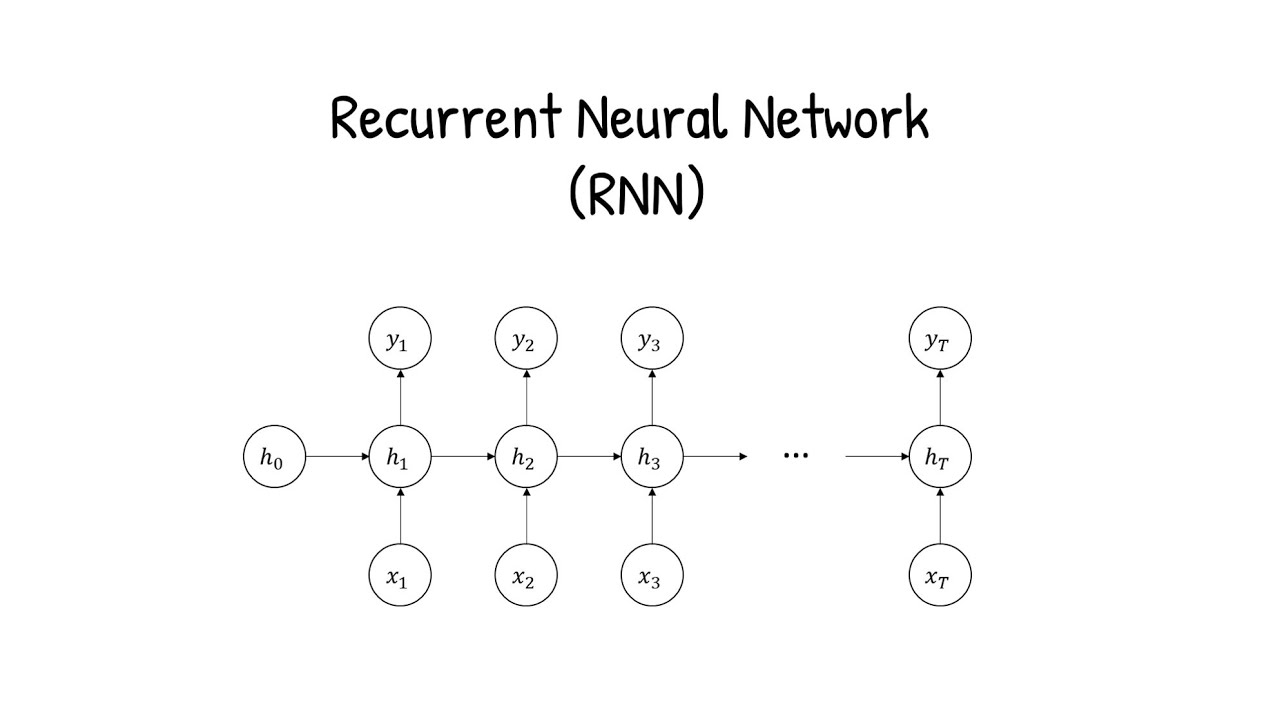

- 🔄 RNNs have a unique feature: feedback loops, allowing them to process sequences of data over time.

- 📈 RNNs can be 'unrolled' into one copy of the network per time step, which makes it easier to see how sequential inputs are processed.

- 🔢 When unrolling an RNN, weights and biases are shared across all time steps, so the number of parameters to train stays the same no matter how many times the network is unrolled.

- 📉 The Vanishing Gradient Problem occurs when gradients become too small, causing the network to learn slowly or not at all.

- 💥 The Exploding Gradient Problem happens when gradients become too large, leading to unstable training and large updates.

- 🚀 The video mentions Long Short-Term Memory Networks (LSTMs) as a solution to the Vanishing/Exploding Gradient Problem.

- 📚 The presenter, Josh Starmer, suggests resources for further learning, including his book and StatQuest website.

- 🌟 The video concludes with a call to action for viewers to support the channel through subscriptions and donations.

- 🔮 The video serves as an introduction to RNNs, setting the stage for more advanced topics like LSTMs and Transformers in future episodes.

Q & A

What is the main topic of the StatQuest video presented by Josh Starmer?

- The main topic of the video is recurrent neural networks (RNNs), with a focus on their application to predicting stock prices using simplified data from StatLand.

What are the prerequisites for understanding the content of this StatQuest video?

- To fully understand the video, viewers should already be familiar with the main ideas behind neural networks, backpropagation, and the ReLU activation function.

Why are vanilla recurrent neural networks considered a stepping stone to more advanced models?

- Vanilla RNNs are considered a stepping stone because they provide foundational knowledge for understanding more complex models like Long Short-Term Memory Networks (LSTMs) and Transformers, which are discussed in future StatQuests.

How does the stock market data's sequential nature challenge traditional neural network models?

- Traditional neural networks require a fixed number of input values, but stock market data is sequential and can vary in length. RNNs are needed to handle different amounts of sequential data for making predictions.

What is a key feature of recurrent neural networks that sets them apart from other neural networks?

- A key feature of RNNs is the presence of feedback loops that allow them to process sequential data, such as time-series data like stock prices.

How does the feedback loop in an RNN contribute to making predictions?

- The feedback loop in an RNN lets information from earlier inputs influence the prediction made from later inputs, making it suitable for tasks like time-series prediction.

What is the unrolling process in the context of RNNs?

- Unrolling is a visualization technique where the RNN's feedback loop is 'unrolled' to create a copy of the network for each time step, showing how each input influences the output.
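The unrolling described above can be sketched in a few lines of Python. This is a minimal illustration, not the video's exact network: the function name `rnn_predict`, the parameter names `w1`, `w2`, `w3`, `b1`, `b2`, and the numeric values are all assumptions chosen for the example. Each pass through the loop plays the role of one unrolled copy, and every copy reuses the same weights and biases.

```python
def relu(x):
    """ReLU activation: pass positive values through, clip negatives to 0."""
    return max(0.0, x)


def rnn_predict(inputs, w1, w2, w3, b1, b2):
    """Run a one-unit RNN over a sequence and return the final output.

    Hypothetical parameter names: w1 scales the input, w2 scales the
    value fed back from the previous time step, w3 scales the output.
    """
    if not inputs:
        raise ValueError("need at least one input value")
    feedback = 0.0  # nothing to feed back before the first time step
    output = 0.0
    for x in inputs:  # one iteration = one unrolled copy of the network
        hidden = relu(w1 * x + w2 * feedback + b1)
        output = w3 * hidden + b2
        feedback = hidden  # carried forward to the next time step
    return output


# The same weights handle sequences of different lengths.
params = dict(w1=1.0, w2=0.5, w3=1.0, b1=0.0, b2=0.0)  # illustrative values
print(rnn_predict([1.0, 1.0], **params))       # 1.5
print(rnn_predict([1.0, 1.0, 1.0], **params))  # 1.75
```

Only the output of the last iteration is returned, mirroring the idea that the earlier copies exist mainly to pass information forward.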

What is the Vanishing/Exploding Gradient Problem in RNNs?

- The Vanishing/Exploding Gradient Problem refers to the difficulty in training RNNs when gradients become too small (vanish) or too large (explode) during backpropagation, which can prevent the network from learning effectively.
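A short derivation may help show where the problem comes from. It assumes a simplified RNN with a linear activation (or a ReLU that stays in its positive range) and no biases; the symbols $w_1$ (input weight), $w_2$ (feedback weight), $w_3$ (output weight), $x_t$ (input at step $t$), and $h_t$ (hidden value at step $t$) are notation introduced here for illustration.

$$
h_t = w_1 x_t + w_2 h_{t-1}, \qquad h_0 = 0, \qquad \hat{y} = w_3 h_n
$$

Expanding the recursion over $n$ time steps gives

$$
\hat{y} = w_3 \sum_{t=1}^{n} w_2^{\,n-t}\, w_1 x_t ,
\qquad
\frac{\partial \hat{y}}{\partial x_1} = w_3\, w_1\, w_2^{\,n-1}.
$$

Under these assumptions, the first input is multiplied by $w_2$ once for every additional unrolled copy, so powers of $w_2$ also appear in the gradients used by backpropagation. They grow rapidly when $|w_2| > 1$ and shrink toward zero when $|w_2| < 1$.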

Why might gradients explode in RNNs?

- Gradients can explode when the weight on the RNN's feedback loop is greater than one in magnitude, causing the gradients to grow exponentially with each time step during backpropagation.

How does the Vanishing Gradient Problem manifest in RNNs?

- The Vanishing Gradient Problem occurs when the weight on the feedback loop is less than one in magnitude, causing the gradients to shrink exponentially with each time step and making it difficult for the network to learn long-range dependencies.
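The exploding and vanishing cases can be compared numerically. The sketch below assumes a linear activation, so that the first input is multiplied by the feedback weight once per additional unrolled copy; the helper name `feedback_scale` and the choice of 50 time steps are hypothetical, picked only to make the effect visible.

```python
def feedback_scale(w2, num_steps):
    """Factor applied to the first input after num_steps unrolled copies.

    Assumes a linear activation, so the factor is w2 ** (num_steps - 1).
    """
    scale = 1.0
    for _ in range(num_steps - 1):  # one multiplication per extra copy
        scale *= w2
    return scale


# Compare a feedback weight above 1 with one below 1 over 50 time steps.
for w2 in (2.0, 0.5):
    print(f"w2={w2}: factor after 50 steps = {feedback_scale(w2, 50):.3e}")
```

With a feedback weight of 2 the factor is on the order of 10^14, while with 0.5 it is on the order of 10^-15, so gradient descent either takes wildly large steps or barely moves at all.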

What is a potential solution to the Vanishing/Exploding Gradient Problem mentioned in the video?

- A popular solution to the Vanishing/Exploding Gradient Problem is the use of Long Short-Term Memory Networks (LSTMs), which are designed to address these issues and will be discussed in a future StatQuest.

See More Related Videos

S6E10 | Intuisi dan Cara kerja Recurrent Neural Network (RNN) | Deep Learning Basic

What is Recurrent Neural Network (RNN)? Deep Learning Tutorial 33 (Tensorflow, Keras & Python)

Long Short-Term Memory (LSTM), Clearly Explained

Recurrent Neural Networks - Ep. 9 (Deep Learning SIMPLIFIED)

3 Deep Belief Networks

Pengenalan RNN (Recurrent Neural Network)
