Tutorial 34- Performance Metrics For Classification Problem In Machine Learning- Part1

Summary

TLDR: In this educational video, the host Krishna introduces key metrics for evaluating classification problems in machine learning. The script covers confusion matrices, accuracy, type 1 and type 2 errors, recall, precision, and the F-beta score. It emphasizes the importance of selecting the right metrics for model evaluation, especially in the context of balanced and imbalanced datasets. The video promises a continuation with ROC curves, AUC scores, and PR curves, followed by a practical implementation on an imbalanced dataset to demonstrate how the choice of metric changes the assessment of a model.

Takeaways

- 📈 The video discusses various metrics for evaluating classification problems in machine learning, emphasizing the importance of selecting the right metrics for model evaluation.

- 📊 The script introduces the concept of a confusion matrix, explaining its components like true positives, false positives, true negatives, and false negatives, which are crucial for understanding model performance.

- 🔢 The video explains accuracy as a metric for balanced datasets, where the model's performance is measured by the proportion of correct predictions over the total predictions.

- ⚖️ It highlights the difference between balanced and imbalanced datasets, noting that accuracy may not be a reliable metric for the latter due to potential bias towards the majority class.

- 👀 The importance of recall (true positive rate) and precision (positive predictive value) is emphasized for evaluating models on imbalanced datasets: recall focuses on reducing false negatives (type 2 errors), while precision focuses on reducing false positives (type 1 errors).

- 🤖 The script introduces the F-beta score, a metric that combines recall and precision, allowing for the weighting of the importance of false positives versus false negatives through the beta parameter.

- 📚 The video outlines the use of precision in scenarios where false positives are particularly costly, such as in spam detection, while recall is prioritized in cases like medical diagnosis to avoid missing critical conditions.

- 🧩 The F-beta score is detailed with its formula and application, showing how it can be adjusted with the beta value to reflect the relative importance of precision and recall in different contexts.

- 🔍 The video promises to cover additional metrics like Cohen's Kappa, ROC curve, AUC score, and PR curve in the next part of the series, indicating a comprehensive approach to model evaluation.

- 🛠️ Part three of the video series will include a practical implementation on an imbalanced dataset, demonstrating how to apply these metrics and understand their implications in real-world scenarios.

- 🔄 The script concludes by stressing the need for continuous learning and revision of these concepts to ensure a thorough understanding of machine learning model evaluation.

Q & A

What is the main topic of the video?

-The main topic of the video is discussing various metrics used in classification problems for evaluating machine learning algorithms.

What are the two types of classification problem statements mentioned in the video?

-The two types of classification problem statements are those based on class labels and those based on probabilities.

What is a confusion matrix?

-A confusion matrix is a 2x2 table used in binary classification problems to evaluate the performance of a classification model. It includes true positives, true negatives, false positives, and false negatives.
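
As a minimal sketch of how the four cells are counted, the snippet below tallies them from two label lists. The function name `confusion_counts` and the sample labels are illustrative assumptions, not taken from the video.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count the four confusion-matrix cells for a binary problem."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative (type 1 error)
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive (type 2 error)
            else:
                tn += 1  # predicted negative, actually negative
    return {"tp": tp, "fp": fp, "tn": tn, "fn": fn}


# Hypothetical labels, made up for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
counts = confusion_counts(y_true, y_pred)
print(counts)
```

Libraries such as scikit-learn provide an equivalent helper; the hand-rolled version is used here only to make each cell explicit.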

What is the default threshold value used in binary classification problems?

-The default threshold value used in binary classification problems is 0.5; predicted probabilities are converted to class labels by comparing them against this cutoff.
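
The thresholding step might look like the sketch below. The helper `to_labels` and the sample probabilities are assumptions for illustration, and treating a probability exactly equal to the threshold as positive is one convention among several.

```python
def to_labels(probabilities, threshold=0.5):
    """Turn predicted positive-class probabilities into 0/1 labels.

    Assumption: a probability equal to the threshold counts as positive.
    """
    return [1 if p >= threshold else 0 for p in probabilities]


# Hypothetical model outputs, made up for illustration only.
probs = [0.10, 0.45, 0.50, 0.80]
default_labels = to_labels(probs)                # uses the 0.5 default
strict_labels = to_labels(probs, threshold=0.7)  # a stricter cutoff
print(default_labels, strict_labels)
```

Raising the threshold generally yields fewer positive predictions, which tends to trade recall for precision.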

What is the importance of selecting the right metrics for evaluating a machine learning model?

-Selecting the right metrics is crucial for understanding how well a machine learning model is predicting. Incorrect metrics can lead to a false sense of accuracy, which may result in poor model performance when deployed in production.

What is the difference between a balanced and an imbalanced dataset in the context of classification problems?

-A balanced dataset has approximately the same number of instances for each class, while an imbalanced dataset has a significantly higher number of instances for one class compared to others.

Why is accuracy not a reliable metric for imbalanced datasets?

-Accuracy can be misleading for imbalanced datasets because a model could predict the majority class for all instances and still achieve high accuracy, even if it fails to correctly classify the minority class.
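
A small worked example makes the problem concrete. The 95/5 class split below is an assumed illustration, not a dataset from the video: a model that always predicts the majority class scores high accuracy while finding none of the positives.

```python
# Hypothetical imbalanced dataset: 5 positives, 95 negatives.
y_true = [1] * 5 + [0] * 95

# A "model" that always predicts the majority (negative) class.
y_pred = [0] * 100

# Accuracy: share of predictions that match the true label.
accuracy = sum(a == p for a, p in zip(y_true, y_pred)) / len(y_true)

# Recall: share of actual positives that the model found.
positives_found = sum(1 for a, p in zip(y_true, y_pred) if a == 1 and p == 1)
recall = positives_found / sum(y_true)

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")
```

The accuracy looks strong even though every minority-class instance is missed, which is why recall and precision are examined alongside it.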

What are recall and precision, and when should they be used?

-Recall (or true positive rate) measures the proportion of actual positives that the model correctly predicts as positive. Precision (or positive predictive value) measures the proportion of predicted positives that are actual positives. They should be used when dealing with imbalanced datasets to focus on reducing false negatives or false positives, respectively.
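
In terms of confusion-matrix counts, precision is TP / (TP + FP) and recall is TP / (TP + FN). The sketch below computes both; the function name, the example counts, and the choice to return 0.0 when a denominator is zero are assumptions for illustration.

```python
def precision_recall(tp, fp, fn):
    """Compute precision and recall from confusion-matrix counts.

    Assumption: a metric with a zero denominator is reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts, made up for illustration only.
precision, recall = precision_recall(tp=30, fp=10, fn=20)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Note that true negatives appear in neither formula, so a large majority class cannot inflate either score.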

What is the F beta score and how is it related to the F1 score?

-The F beta score is a measure that combines precision and recall, with a parameter beta that allows for weighting the importance of recall over precision (or vice versa). When beta is equal to 1, the F beta score is known as the F1 score, which gives equal importance to both recall and precision.
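
The standard formula is F_beta = (1 + beta^2) * precision * recall / (beta^2 * precision + recall). The sketch below evaluates it for three beta values; the function name and the sample precision and recall are illustrative assumptions.

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score: weighted harmonic mean of precision and recall.

    beta > 1 weights recall more heavily; beta < 1 weights precision more.
    Assumption: the score is reported as 0.0 when the denominator is zero.
    """
    b2 = beta ** 2
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0
    return (1 + b2) * precision * recall / denominator


# Hypothetical values, made up for illustration only.
p, r = 0.75, 0.6
f1 = f_beta(p, r)                # equal weight on precision and recall
f2 = f_beta(p, r, beta=2.0)      # emphasizes recall
f_half = f_beta(p, r, beta=0.5)  # emphasizes precision
print(f"F1={f1:.3f}, F2={f2:.3f}, F0.5={f_half:.3f}")
```

Because recall is the lower of the two values in this example, the recall-weighted F2 comes out below F1, while the precision-weighted F0.5 comes out above it.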

What are the additional metrics that will be discussed in the next part of the video?

-In the next part of the video, the presenter plans to discuss Cohen's Kappa, ROC curve, AUC score, and PR curve, along with two more metrics.

How will the presenter demonstrate the application of these metrics in the third part of the video?

-In the third part of the video, the presenter will implement a practical problem statement using an imbalanced dataset and apply the discussed metrics to show how they affect the evaluation of a machine learning model.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

Lecture 1.6 | well defined learning problems | Machine Learning Techniques | #aktu #mlt #unit1 #ml

Python Exercise on kNN and PCA

Why Deep Learning Is Becoming So Popular?🔥🔥🔥🔥🔥🔥

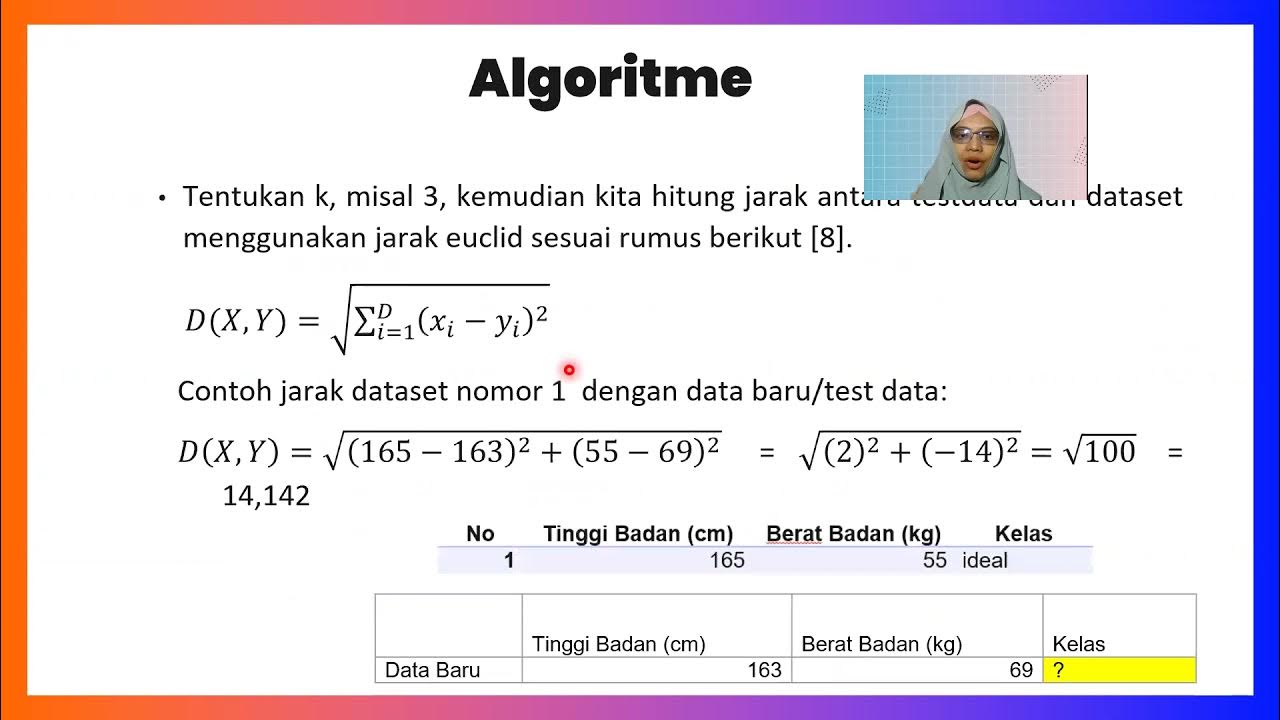

K-Nearest Neighbors Classifier_Medhanita Dewi Renanti

Machine Learning Interview Questions 2024 | ML Interview Questions And Answers 2024 | Simplilearn

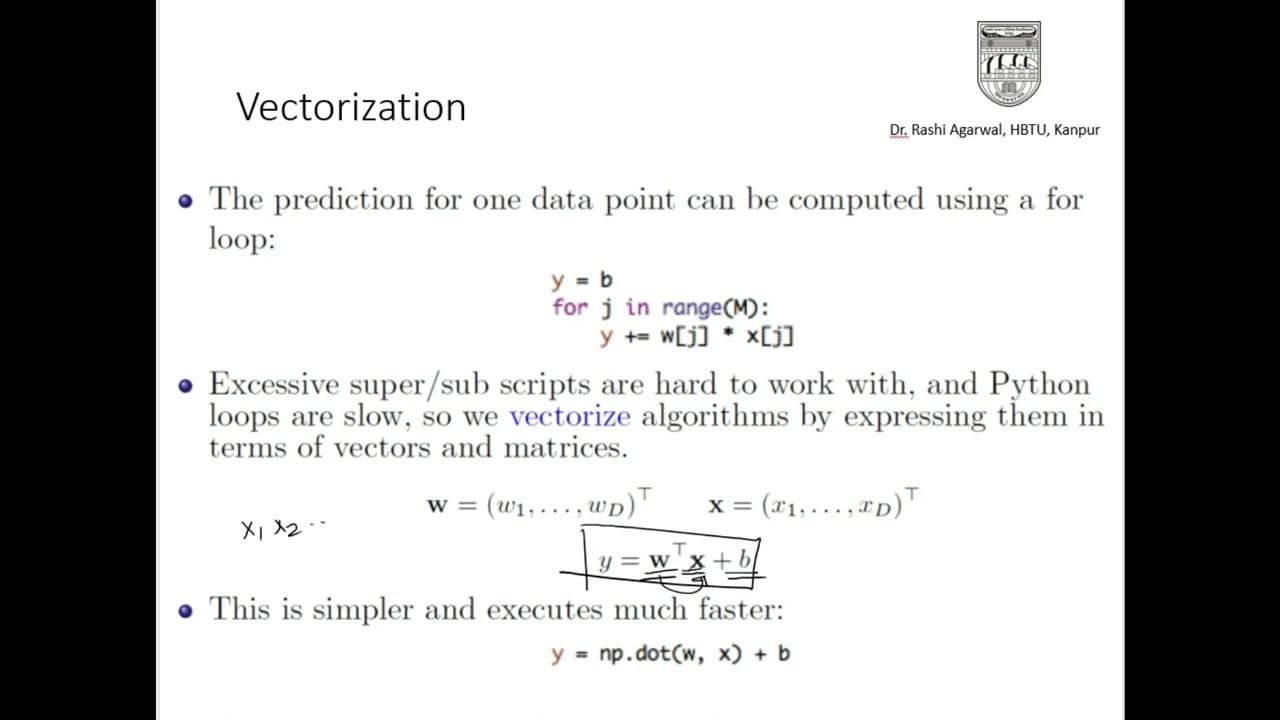

GEOMETRIC MODELS ML(Lecture 7)

5.0 / 5 (0 votes)