EfficientML.ai Lecture 5 - Quantization Part I (MIT 6.5940, Fall 2024)

Summary

TLDRThis lecture delves into the principles of linear quantization in neural networks, emphasizing the advantages of utilizing low-precision integer calculations over floating-point operations. It explores the simplification of mathematical equations governing weights and biases, highlighting how these adjustments facilitate efficient computations while preserving accuracy. The discussion underscores the maturity of 8-bit quantization as a solution for optimizing neural networks on edge devices. Additionally, the lecture foreshadows future explorations into binary and ternary quantization methods, positioning these advancements as critical for further enhancing performance and efficiency in neural network applications.

Takeaways

- 😀 Quantization is essential for reducing model size and computational load in deep learning.

- 😀 The weight and activation distributions are assumed to follow a normal distribution, simplifying calculations.

- 😀 Setting ZW and ZB to zero allows for simpler scaling and calculations, reducing complexity.

- 😀 Integer multiplication with 8-bit integers is sufficient for most operations, leading to efficiency gains.

- 😀 The bias can also be quantized, allowing for further simplification in the computations.

- 😀 Summations are performed in 32-bit registers to prevent overflow, ensuring numerical stability.

- 😀 Quantization improves both storage efficiency and computational speed compared to floating-point operations.

- 😀 The lecture emphasizes the maturity of 8-bit quantization for deployment on edge devices.

- 😀 Future discussions will explore even lower precision quantization techniques, such as binary and ternary quantization.

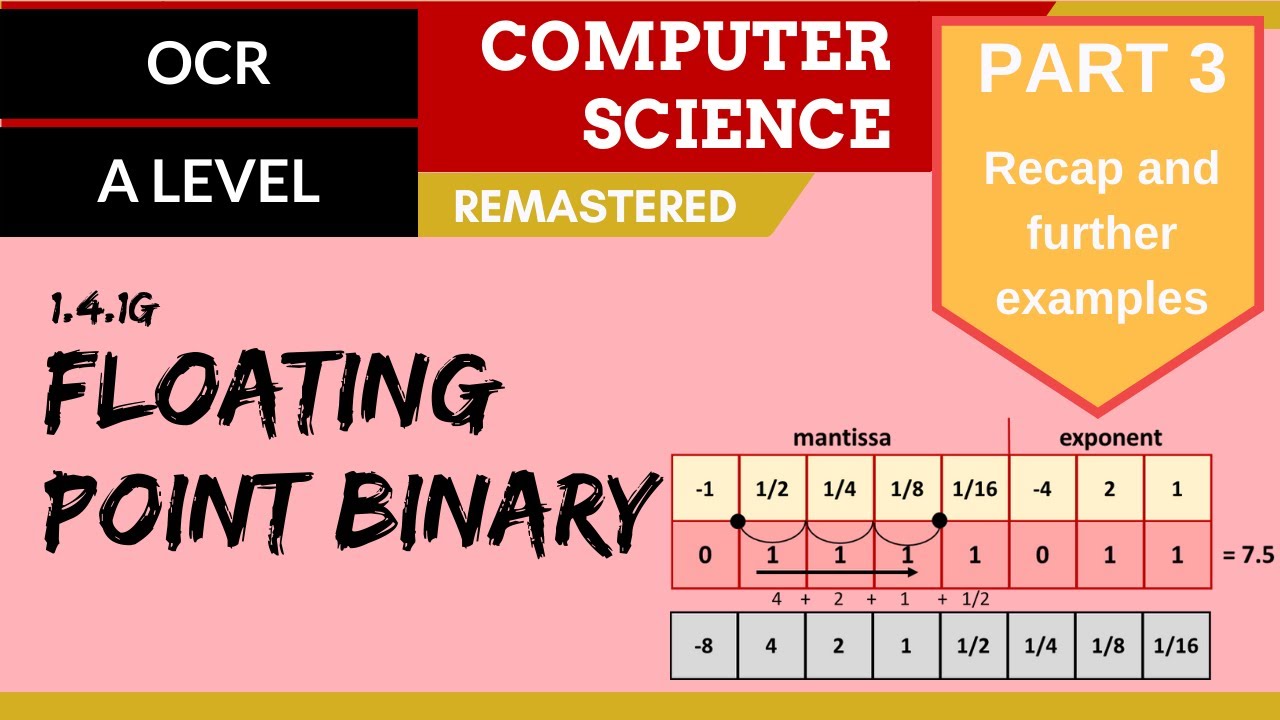

- 😀 Understanding floating-point representation is crucial for implementing quantization effectively.

Q & A

What is the significance of setting ZW and ZB to zero in the quantization process?

-Setting ZW and ZB to zero simplifies calculations by assuming symmetry in the weight and bias distributions, allowing for more straightforward integer arithmetic.

How does linear quantization differ from k-means quantization?

-Linear quantization reduces both storage and computation requirements, whereas k-means quantization primarily focuses on reducing storage without significant computational savings.

What role do scaling factors play in the quantization of neural networks?

-Scaling factors normalize the weights and biases to facilitate integer representation, ensuring that the quantized values maintain the necessary range and precision.

Why is integer computation preferred over floating-point computation in neural networks for edge devices?

-Integer computations are more efficient in terms of both storage and processing speed, which is crucial for resource-constrained environments like mobile devices and microcontrollers.

What is the computational advantage of using 8-bit integers?

-Using 8-bit integers reduces memory usage and accelerates processing times compared to higher precision data types, enabling faster execution of neural network operations.

How does the architecture of convolutional layers impact quantization?

-Convolutional layers can utilize similar quantization strategies as fully connected layers, but they replace multiplication operations with convolution operations, maintaining linearity in computations.

What are the potential future directions in quantization techniques discussed in the lecture?

-Future directions include exploring binary and ternary quantization, which use even fewer bits to represent weights and biases, potentially leading to greater reductions in model size and computation.

How does the latency versus accuracy trade-off compare between floating-point and integer computations?

-The lecture suggests that 8-bit quantization typically achieves better accuracy for similar latencies compared to floating-point computations, indicating a favorable trade-off.

What implications does quantization have for the deployment of neural networks?

-Quantization enables the deployment of neural networks on devices with limited computational resources by reducing the model size and enhancing processing efficiency.

What basic concepts of floating-point representation were revisited in the lecture?

-The lecture revisited concepts such as the structure of floating-point numbers, including exponent bits, mantissa bits, and how they represent large numbers.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

EfficientML.ai Lecture 6 - Quantization Part II (MIT 6.5940, Fall 2024)

Quantization: How LLMs survive in low precision

TIPE DATA PADA JAVASCRIPT : ANGKA

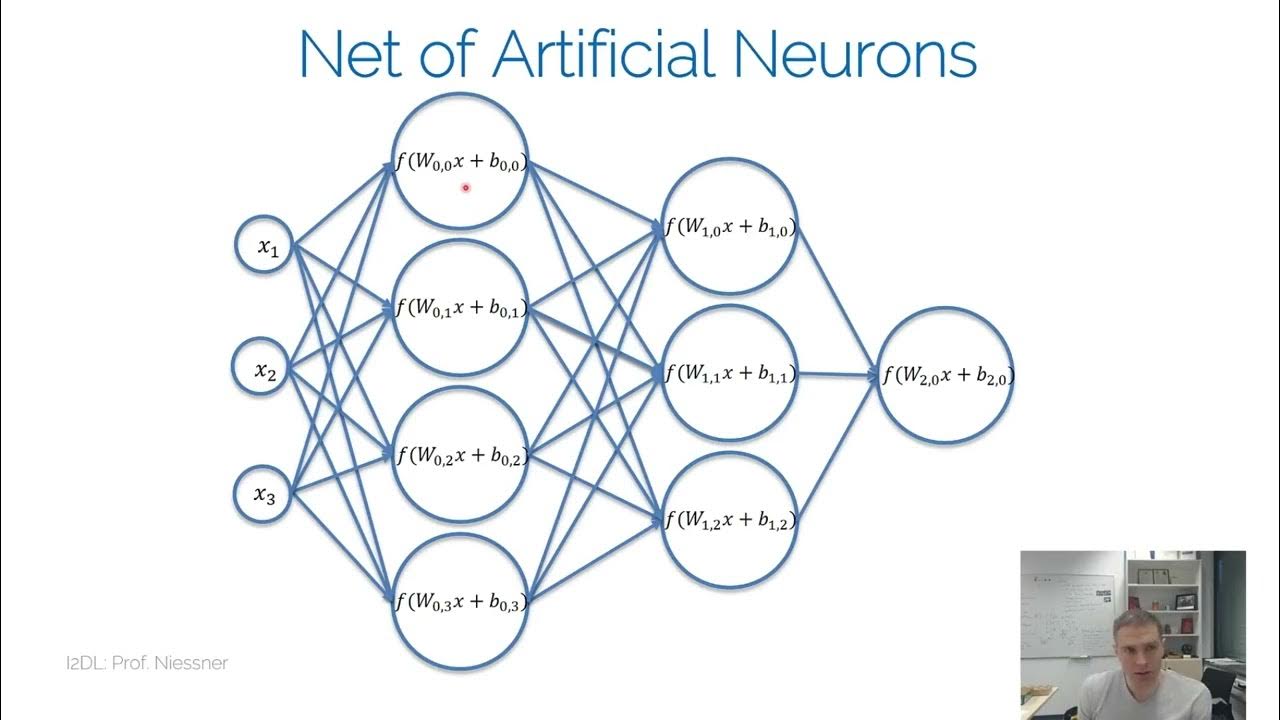

I2DL NN

81. OCR A Level (H446) SLR13 - 1.4 Floating point binary part 3 - Recap and further examples

82. OCR A Level (H446) SLR13 - 1.4 Floating point arithmetic

5.0 / 5 (0 votes)