What is HDFS | Name Node vs Data Node | Replication factor | Rack Awareness | Hadoop🐘🐘Framework

Summary

TLDR: This video explains the role of HDFS (Hadoop Distributed File System) in big data management. It covers how HDFS stores large datasets across multiple nodes by splitting files into blocks and replicating those blocks for fault tolerance. The video also discusses the structure of HDFS, including the roles of the NameNode (master) and DataNodes (workers), and key concepts such as block size, replication factor, and rack awareness. Finally, it covers the Secondary NameNode and the heartbeat messages DataNodes use to report their health.

Takeaways

- 📁 HDFS (Hadoop Distributed File System) is designed to store and manage large amounts of data efficiently.

- 🗂️ HDFS divides large files into smaller blocks (128 MB by default since Hadoop 2; 64 MB in Hadoop 1) and distributes them across DataNodes.

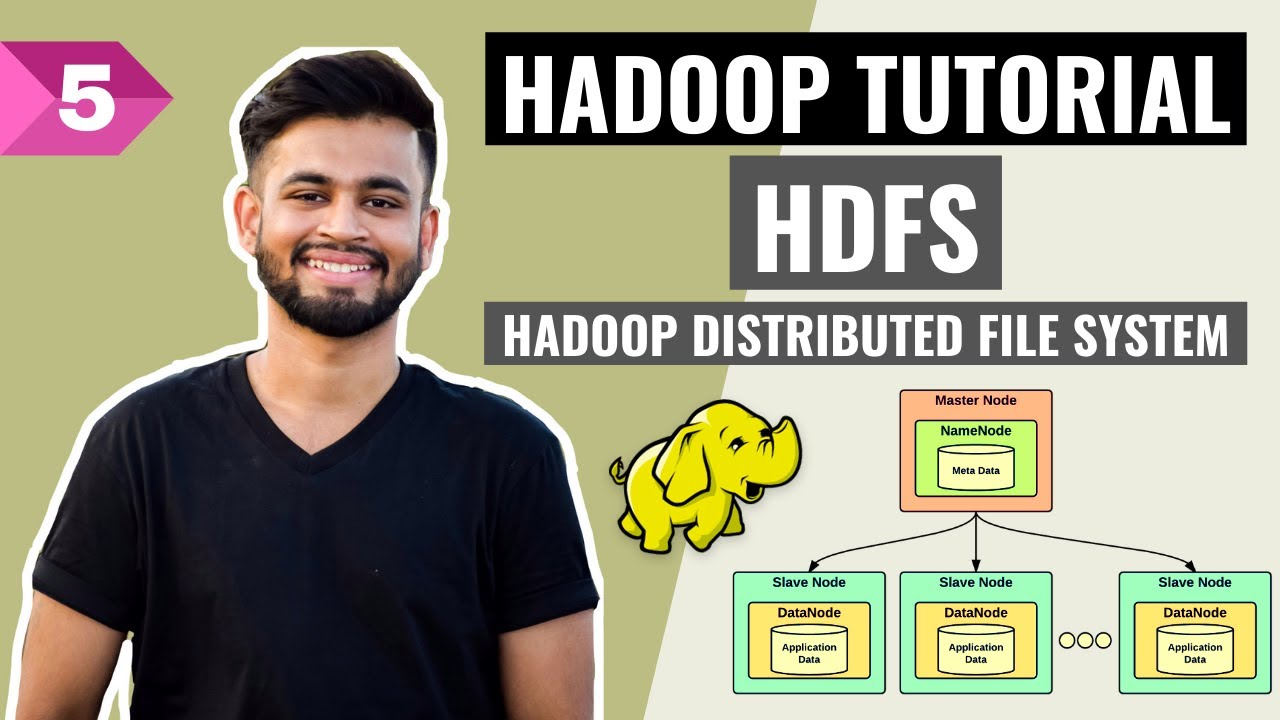

- 🛠️ HDFS consists of two main components: the NameNode (master) and the DataNodes (workers). The NameNode manages file metadata, while the DataNodes store the actual data.

- 📊 The NameNode acts like the 'boss,' deciding where data is stored, and DataNodes are the 'employees' where the data resides.

- 🔄 Replication is a key feature of HDFS: by default, each data block is stored on three different DataNodes, which provides fault tolerance.

- ⚠️ If a DataNode fails, clients read the data from the remaining replicas, and the NameNode re-replicates the affected blocks to restore the replication factor, so the data stays available and is not lost.

- 🛡️ Rack awareness lets HDFS spread the replicas of each block across more than one rack, so the data survives the failure of an entire rack, not just a single DataNode.

- 📥 NameNode maintains metadata for files, including their locations, names, permissions, and replication details.

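- 🗒️ The Secondary NameNode assists the NameNode by periodically merging the edit log into the file system metadata image (FSImage), a process called checkpointing. This keeps the edit log small and NameNode restarts fast; it is not a standby that takes over when the NameNode fails.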

- 💡 The heartbeat mechanism ensures that DataNodes send regular status updates to NameNode, indicating that they are operational and healthy.
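
The block arithmetic from the takeaways can be checked with a short Python sketch. It assumes the 128 MB default (`dfs.blocksize` = 134217728 bytes) and uses a hypothetical helper, `split_into_blocks`; it only computes block sizes and does not talk to a real cluster.

```python
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default dfs.blocksize in Hadoop 2.x and later


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size in bytes of each HDFS block a file of `file_size` bytes needs."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block holds only the leftover bytes; it is not padded to 128 MB on disk.
        sizes.append(remainder)
    return sizes


blocks = split_into_blocks(500 * MB)
print(len(blocks), [b // MB for b in blocks])  # 4 [128, 128, 128, 116]
print("raw storage with 3 replicas (MB):", 3 * sum(blocks) // MB)
```

A 500 MB file therefore occupies four blocks and, with the default replication factor of 3, about 1500 MB of raw disk across the cluster.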

Q & A

What is the role of the `hdfs-site.xml` file in Big Data?

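- `hdfs-site.xml` is the configuration file for the Hadoop Distributed File System (HDFS), the Hadoop component that stores and manages large amounts of data. Its properties, such as the replication factor (`dfs.replication`) and the block size (`dfs.blocksize`), define how HDFS stores data.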
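
Hadoop configuration files such as `hdfs-site.xml` use a `<configuration>` root holding `<property>` entries, each with a `<name>` and a `<value>`. The Python sketch below builds and re-reads such a file with the standard library. The helper names are hypothetical, and the two values shown are simply the defaults written out explicitly; a real cluster reads this file from Hadoop's configuration directory.

```python
import xml.etree.ElementTree as ET


def build_hdfs_site(properties: dict[str, str]) -> str:
    """Render name/value pairs in the <configuration>/<property> layout Hadoop config files use."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


def read_hdfs_site(xml_text: str) -> dict[str, str]:
    """Parse the same layout back into a dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


xml_text = build_hdfs_site({
    "dfs.replication": "3",        # number of copies kept of each block
    "dfs.blocksize": "134217728",  # 128 MB, expressed in bytes
})
print(xml_text)
print(read_hdfs_site(xml_text))
```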

What are the two main challenges in data processing as mentioned in the video?

- The two main challenges are 1) storing huge amounts of data efficiently and 2) processing that data to extract meaningful information. HDFS handles storage, and MapReduce handles processing.
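
To make the storage/processing split concrete, here is a single-process Python sketch of the MapReduce word-count pattern (map, shuffle, reduce). It runs in memory on a list of lines; on a real cluster the input would come from HDFS blocks and the map and reduce tasks would run in parallel on many nodes, which this sketch does not model.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group every value emitted for the same key."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["HDFS stores data", "MapReduce processes data"])
print(counts)
```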

What is HDFS and how does it differ from traditional file systems?

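- HDFS (Hadoop Distributed File System) is a distributed file system that splits large files into blocks and spreads them across many machines. Traditional file systems such as NTFS manage the disks of a single machine, and network file systems such as NFS typically serve files from one server, so their capacity and throughput are bounded by that machine. HDFS scales by adding machines and tolerates failures by replicating every block.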

What are the two main components of HDFS?

- The two main components are the NameNode, which acts as the master or 'boss' controlling metadata and file storage locations, and the DataNodes, which store the actual data across distributed servers.

What is the role of the NameNode in HDFS?

- The NameNode manages the file system namespace and metadata: it decides which DataNodes store each block's replicas and regulates client access to files. It also tracks file information such as permissions and replication factors.
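
The NameNode's bookkeeping can be pictured as two maps: file path to file metadata, and block ID to the DataNodes holding that block. The Python sketch below models just that. `NameNodeSketch`, `FileMeta`, and the block IDs are hypothetical illustrations, not Hadoop classes; in real HDFS the namespace is persisted in the FSImage, while block locations are rebuilt from DataNode block reports.

```python
from dataclasses import dataclass, field


@dataclass
class FileMeta:
    permission: str                                      # e.g. "rw-r--r--"
    replication: int                                     # target number of replicas per block
    block_ids: list[int] = field(default_factory=list)  # blocks in file order


@dataclass
class NameNodeSketch:
    # Namespace: file path -> metadata (persisted in the FSImage on a real NameNode).
    namespace: dict[str, FileMeta] = field(default_factory=dict)
    # Block id -> DataNodes holding a replica (rebuilt from block reports, not stored on disk).
    block_locations: dict[int, set[str]] = field(default_factory=dict)

    def locate(self, path: str) -> list[tuple[int, set[str]]]:
        """Answer a client's question: which blocks make up `path`, and where are they?"""
        meta = self.namespace[path]
        return [(b, self.block_locations.get(b, set())) for b in meta.block_ids]


nn = NameNodeSketch()
nn.namespace["/logs/app.log"] = FileMeta("rw-r--r--", 3, [1001, 1002])
nn.block_locations[1001] = {"dn1", "dn2", "dn3"}
nn.block_locations[1002] = {"dn2", "dn4", "dn5"}
print(nn.locate("/logs/app.log"))
```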

How does HDFS achieve fault tolerance?

- HDFS achieves fault tolerance by replicating each block of data across multiple DataNodes (the default replication factor is 3). If one DataNode fails, the data can still be accessed from the other nodes that store a replica.
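
The effect of a DataNode failure on replicated blocks can be sketched in a few lines of Python. The helpers below are hypothetical: they drop replicas on failed nodes, list blocks that are still readable but below the target replication factor (the ones the NameNode would re-replicate), and list blocks with no surviving copy.

```python
BlockMap = dict[int, set[str]]  # block id -> DataNodes holding a replica


def live_replicas(block_locations: BlockMap, dead: set[str]) -> BlockMap:
    """Drop replicas that sit on failed DataNodes."""
    return {blk: nodes - dead for blk, nodes in block_locations.items()}


def under_replicated(block_locations: BlockMap, dead: set[str], replication: int = 3) -> dict[int, int]:
    """Still-readable blocks mapped to how many extra copies they need."""
    live = live_replicas(block_locations, dead)
    return {blk: replication - len(n) for blk, n in live.items() if 0 < len(n) < replication}


def lost_blocks(block_locations: BlockMap, dead: set[str]) -> set[int]:
    """Blocks with no surviving replica."""
    return {blk for blk, n in live_replicas(block_locations, dead).items() if not n}


blocks = {1001: {"dn1", "dn2", "dn3"}, 1002: {"dn2", "dn4", "dn5"}}
dead = {"dn2"}
print(live_replicas(blocks, dead))
print(under_replicated(blocks, dead))  # {1001: 1, 1002: 1}
print(lost_blocks(blocks, dead))       # set()
```

With three replicas, losing one DataNode leaves every block readable; data is lost only if all nodes holding a block fail before re-replication catches up.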

What is the concept of Rack Awareness in HDFS?

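- Rack Awareness makes the NameNode place the replicas of each block on DataNodes in more than one rack. With the default replication factor of 3, one replica goes on one rack and the other two go on different nodes of a second rack, so if one rack fails, the data can still be accessed from the other rack.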
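
The default three-replica placement can be sketched as follows, assuming the writer runs on a DataNode and that a hypothetical `topology` dict maps each DataNode to its rack (in a real cluster this mapping comes from an administrator-supplied topology script). The sketch picks eligible nodes in sorted order so its result is deterministic; HDFS picks among them randomly and also weighs load and free space.

```python
def place_replicas(writer: str, topology: dict[str, str]) -> list[str]:
    """Pick DataNodes for the 3 replicas of a new block, rack-aware.

    topology maps DataNode name -> rack id. Eligible nodes are taken in sorted
    order to keep this sketch deterministic.
    """
    first = writer                                   # replica 1: the writer's own node
    local_rack = topology[first]
    remote_nodes = sorted(n for n, rack in topology.items() if rack != local_rack)
    if not remote_nodes:
        raise ValueError("rack-aware placement needs at least two racks")
    second = remote_nodes[0]                         # replica 2: a node on another rack
    remote_rack = topology[second]
    same_remote = sorted(n for n, rack in topology.items() if rack == remote_rack and n != second)
    if not same_remote:
        raise ValueError("the remote rack needs at least two DataNodes")
    third = same_remote[0]                           # replica 3: another node on that same remote rack
    return [first, second, third]


topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
replicas = place_replicas("dn1", topology)
print(replicas)                          # ['dn1', 'dn3', 'dn4']
print({topology[n] for n in replicas})   # two distinct racks
```

Putting two replicas on the same remote rack limits cross-rack write traffic while still surviving the loss of either rack.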

What is the function of the Secondary NameNode?

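- The Secondary NameNode periodically downloads the NameNode's FSImage and edit log, merges the recorded changes into a new FSImage (a checkpoint), and sends it back. This keeps the edit log from growing without bound and shortens NameNode restarts. Despite its name, it is an assistant rather than a backup: it does not take over if the NameNode fails.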
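
A checkpoint is essentially "replay the edit log onto the last image, then start a fresh log". The Python sketch below shows that idea on a tiny in-memory image. The edit operations (`mkfile`, `setrep`, `delete`) are a hypothetical format for illustration and do not match the real HDFS edit-log opcodes.

```python
import copy
from typing import Any

FsImage = dict[str, dict[str, Any]]  # path -> file attributes


def checkpoint(fsimage: FsImage, edit_log: list[tuple]) -> tuple[FsImage, list[tuple]]:
    """Replay the edit log onto a copy of the FSImage; return the new image and an empty log.

    Hypothetical op format: ("mkfile", path, replication),
    ("setrep", path, replication), ("delete", path).
    """
    image = copy.deepcopy(fsimage)  # the old image stays valid until the new one is complete
    for op, path, *args in edit_log:
        if op == "mkfile":
            image[path] = {"replication": args[0]}
        elif op == "setrep":
            image[path]["replication"] = args[0]
        elif op == "delete":
            image.pop(path, None)
        else:
            raise ValueError(f"unknown edit op: {op!r}")
    return image, []


fsimage = {"/data/a.csv": {"replication": 3}}
edits = [("mkfile", "/data/b.csv", 3), ("setrep", "/data/a.csv", 2), ("delete", "/data/b.csv")]
new_image, new_log = checkpoint(fsimage, edits)
print(new_image)  # {'/data/a.csv': {'replication': 2}}
print(new_log)    # []
```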

How does HDFS handle read and write operations?

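- For a read, the client asks the NameNode which DataNodes hold the file's blocks, then reads the data directly from those DataNodes. Writes are more involved: the NameNode chooses the DataNodes for each block, and the client streams data to the first DataNode, which forwards it to the second, which forwards it to the third. Acknowledgments travel back along this pipeline, so the client knows a packet has been received by the DataNodes in the pipeline.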
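
The write pipeline can be modeled with a small recursive Python sketch: each DataNode stores the packet, forwards it downstream, and acknowledges only after everything downstream has acknowledged. This is a simplified, single-process illustration with hypothetical function names; a real DataNode forwards packets while still receiving them and also handles pipeline failures, neither of which is modeled here.

```python
def _forward(packet: bytes, pipeline: list[str], storage: dict[str, list[bytes]], acks: list[str]) -> None:
    if not pipeline:
        return
    head, downstream = pipeline[0], pipeline[1:]
    storage.setdefault(head, []).append(packet)  # this DataNode keeps its replica
    _forward(packet, downstream, storage, acks)   # ...and passes the packet down the pipeline
    acks.append(head)                             # it acks only after everything downstream has


def write_packet(packet: bytes, pipeline: list[str], storage: dict[str, list[bytes]]) -> list[str]:
    """Send one packet through a DataNode pipeline; return the order in which acks arrive."""
    acks: list[str] = []
    _forward(packet, pipeline, storage, acks)
    return acks


storage: dict[str, list[bytes]] = {}
acks = write_packet(b"packet-0", ["dn1", "dn3", "dn4"], storage)
print(acks)     # ['dn4', 'dn3', 'dn1']
print(storage)  # every DataNode in the pipeline holds b'packet-0'
```

The client sends each packet once, to the first DataNode only; the pipeline, not the client, fans the data out to the other replicas.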

What is the purpose of the Heartbeat message in HDFS?

- Each DataNode sends a Heartbeat message to the NameNode every three seconds by default. It tells the NameNode that the DataNode is functioning; if heartbeats stop for long enough (roughly 10 minutes by default), the NameNode marks the DataNode as dead and re-replicates its blocks on other nodes.
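
The NameNode side of the heartbeat mechanism can be sketched as a table of "last seen" timestamps. In this Python sketch, `HeartbeatMonitor` is a hypothetical class and time is passed in explicitly (in seconds) so the behavior is easy to test. The constants are the documented Hadoop defaults, and the dead-node timeout follows the formula the NameNode uses, to the best of our knowledge: twice the recheck interval plus ten heartbeat intervals.

```python
HEARTBEAT_INTERVAL_S = 3   # dfs.heartbeat.interval default
RECHECK_INTERVAL_S = 300   # dfs.namenode.heartbeat.recheck-interval default (300000 ms)
# Dead-node timeout: 2 * recheck + 10 * heartbeat = 630 s (10.5 minutes).
DEAD_TIMEOUT_S = 2 * RECHECK_INTERVAL_S + 10 * HEARTBEAT_INTERVAL_S


class HeartbeatMonitor:
    """Track the last heartbeat from each DataNode and report the ones presumed dead."""

    def __init__(self) -> None:
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def dead_nodes(self, now: float) -> set[str]:
        return {n for n, t in self.last_seen.items() if now - t > DEAD_TIMEOUT_S}


monitor = HeartbeatMonitor()
monitor.heartbeat("dn1", now=0)
monitor.heartbeat("dn2", now=600)
print(monitor.dead_nodes(now=631))  # {'dn1'}
```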

