Markov Chains and Text Generation

Summary

TL;DR: This video introduces Markov chains, illustrating the concept with a car that is either working or broken. It explains how to model states and transition probabilities and how to represent them as a table or matrix. The video then demonstrates text generation with Markov chains, showing how to create a state for each word and calculate the transition probabilities between words. It uses 'the quick brown fox jumps over the lazy dog' as a simple example and then scales up to a chain trained on 5,000 Wikipedia articles. The video also touches on more advanced text generation using n-grams and concludes with an unusual application of Markov chains: generating novel, human-like pen strokes.

Takeaways

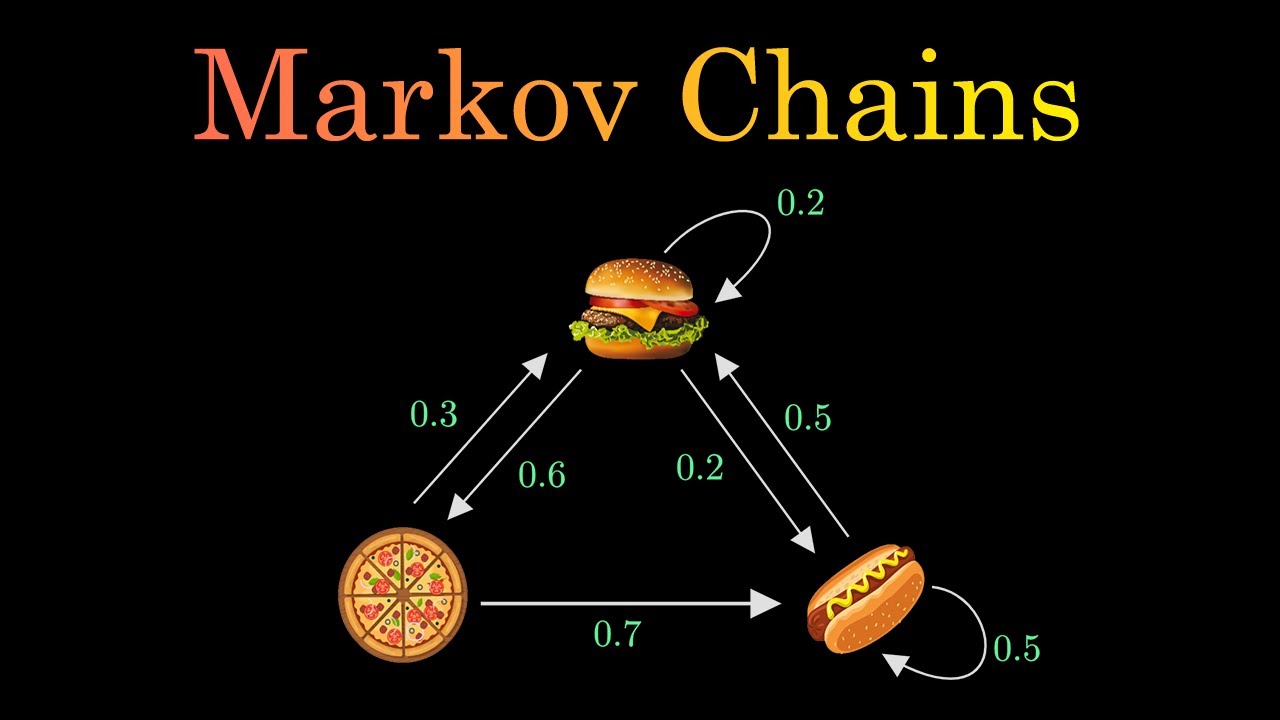

- 🔄 Markov chains are used to model transitions between states, with each state representing a condition or an event.

- 🚗 The script uses the example of a car's condition (working or broken) to explain how Markov chains can model real-life situations.

- 📊 Transition probabilities in a Markov chain can be represented as a table or matrix, showing the likelihood of moving from one state to another.

- 📈 Data collection and analysis are crucial for determining the probabilities within a Markov chain, such as observing the frequency of car breakdowns over time.

- 📝 Markov chains can be applied to text generation by treating each word as a state and modeling the likelihood of subsequent words.

- 📖 The script demonstrates how to create a Markov chain from a simple sentence, showing the transition probabilities between words.

- 🌐 Training a Markov chain on a large dataset, like Wikipedia articles, can generate more complex and realistic text predictions.

- 🔡 Text generation using Markov chains involves selecting the next word based on the current word's state, using probabilities to bias the choice.

- 🔗 Markov chains can be extended to consider n-grams (multiple words) instead of single words, improving the coherence of generated text.

- 🎨 Beyond text, Markov chains can model and generate other types of sequences, such as drawing strokes, creating unique and human-like patterns.

Q & A

What is a Markov chain?

- A Markov chain is a mathematical system that models a sequence of possible events where the probability of each event depends only on the state attained in the previous event.

How are states and transitions represented in a Markov chain?

- In a diagram of a Markov chain, states are drawn as nodes or circles, and transitions are drawn as arrows pointing from one state to another. Each arrow is labeled with the probability of moving from the state at its tail to the state at its head.

What is the significance of the car example used in the script?

- The car example illustrates a simple Markov chain with two states: the car working or the car broken. It demonstrates how probabilities are used to model the likelihood of transitioning between these states.

How can the data from a Markov chain be represented?

- The transition probabilities of a Markov chain can be represented as a table or a matrix, where rows represent the current state and columns represent the next state. Each value is the probability of moving from the row's state to the column's state, so the values in each row sum to 1.
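The table described above can be sketched in a few lines of Python. This is only an illustration: the probabilities are made-up values rather than numbers taken from the video, and the names `TRANSITIONS`, `is_valid_chain`, and `step_distribution` are hypothetical.

```python
# Hypothetical probabilities for illustration only; not the video's figures.
# Outer keys are the current state (rows); inner keys are the next state (columns).
TRANSITIONS = {
    "working": {"working": 0.9, "broken": 0.1},
    "broken": {"working": 0.6, "broken": 0.4},
}


def is_valid_chain(transitions, tol=1e-9):
    """Return True if every row is non-negative and sums to 1."""
    return all(
        all(p >= 0 for p in row.values()) and abs(sum(row.values()) - 1.0) < tol
        for row in transitions.values()
    )


def step_distribution(dist, transitions):
    """Advance a probability distribution over states by one step of the chain."""
    nxt = {state: 0.0 for state in transitions}
    for current, p_current in dist.items():
        for new_state, p in transitions[current].items():
            nxt[new_state] += p_current * p
    return nxt


# Start with a car that is certainly working and look one step ahead.
tomorrow = step_distribution({"working": 1.0, "broken": 0.0}, TRANSITIONS)
print(tomorrow)
```

A nested dictionary is used here instead of a numeric matrix so that the state names stay visible; a 2x2 array would hold the same information.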

What is the process for converting raw data into probabilities in a Markov chain?

- To convert raw data into probabilities, count how often each transition was observed, sum the counts for each current state, and divide each count by the total for that state. The result is one probability per transition, and the probabilities leaving any state sum to 1.
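As a rough sketch of that division step, the function below turns a table of observed transition counts into probabilities. The counts in `observed` are invented for the example, and `normalize_counts` is a hypothetical helper name.

```python
def normalize_counts(counts):
    """Divide each transition count by the total count for its current state."""
    probabilities = {}
    for state, row in counts.items():
        total = sum(row.values())
        if total == 0:
            continue  # no observations for this state, so no probabilities to report
        probabilities[state] = {nxt: n / total for nxt, n in row.items()}
    return probabilities


# Hypothetical observations: how often each transition was seen.
observed = {
    "working": {"working": 90, "broken": 10},
    "broken": {"working": 3, "broken": 2},
}

probabilities = normalize_counts(observed)
print(probabilities)
```

States with no observations are skipped rather than assigned a guess, which is one possible design choice among several.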

How does the Markov chain model handle text generation?

- In text generation, each word is treated as a state, and the transitions are the probabilities of one word following another. This is used to predict and generate the next word in a sequence based on the previous word or words.

What is the significance of the word 'the' in the Markov chain text generation example?

- The word 'the' is significant because it appears twice in the example sentence, followed once by 'quick' and once by 'lazy'. It is therefore the only state with more than one possible next word, whereas every other word appears once and has a single successor.
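To make the role of 'the' concrete, here is a minimal sketch that builds a word-level chain from the example sentence. The function name `build_word_chain` is a hypothetical choice, and splitting on whitespace is a simplifying assumption rather than the video's tokenization.

```python
from collections import Counter, defaultdict


def build_word_chain(text):
    """Map each word to the probabilities of the words that follow it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    # Count every adjacent (current word, next word) pair.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return {
        word: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for word, c in counts.items()
    }


chain = build_word_chain("the quick brown fox jumps over the lazy dog")
print(chain["the"])    # {'quick': 0.5, 'lazy': 0.5}
print(chain["quick"])  # {'brown': 1.0}
```

The last word, 'dog', never has a successor in this sentence, so it has no outgoing transitions in the resulting chain.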

How can the randomness in text generated by a Markov chain be controlled?

- The next word is chosen at random, but the choice is weighted by the transition probabilities defined by the chain, so words that often follow the current word are more likely to be picked than words that rarely do.
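One way to sketch this biased selection is with `random.choices` from Python's standard library, which draws an item according to supplied weights. The chain below is written out by hand from the example sentence; `FOX_CHAIN` and `generate` are hypothetical names.

```python
import random

# Word-level chain for 'the quick brown fox jumps over the lazy dog'.
FOX_CHAIN = {
    "the": {"quick": 0.5, "lazy": 0.5},
    "quick": {"brown": 1.0},
    "brown": {"fox": 1.0},
    "fox": {"jumps": 1.0},
    "jumps": {"over": 1.0},
    "over": {"the": 1.0},
    "lazy": {"dog": 1.0},
}


def generate(chain, start, max_words=10, rng=None):
    """Walk the chain from `start`, picking each next word by its probability."""
    rng = rng or random.Random()
    word = start
    output = [word]
    for _ in range(max_words - 1):
        options = chain.get(word)
        if not options:
            break  # dead end: this word has no known successor
        word = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        output.append(word)
    return " ".join(output)


print(generate(FOX_CHAIN, "the", max_words=12))
```

Passing a seeded `random.Random` instance as `rng` makes a run repeatable, which can help when comparing outputs.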

What is the limitation of a Markov chain when generating text based on single-word states?

- With single-word states, the generated text can drift in meaning very quickly, because each word depends only on the immediately preceding word.

How can the coherence of generated text be improved in a Markov chain model?

- Coherence can be improved by making each word depend on the last two or three words instead of just the last one, so that each state is a word pair or triplet (an n-gram) rather than a single word.
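The idea of multi-word states can be sketched by using tuples of words as dictionary keys. This is an illustrative assumption about how such a chain might be stored, not the video's implementation; `build_ngram_chain` and its `order` parameter are hypothetical names.

```python
from collections import Counter, defaultdict


def build_ngram_chain(text, order=2):
    """Map each tuple of `order` consecutive words to next-word probabilities."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])   # the last `order` words seen
        counts[state][words[i + order]] += 1  # the word that followed them
    return {
        state: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for state, c in counts.items()
    }


pairs = build_ngram_chain("the quick brown fox jumps over the lazy dog", order=2)
print(pairs[("over", "the")])  # {'lazy': 1.0}
```

With single-word states, 'the' could be followed by either 'quick' or 'lazy'; with two-word states, the pair ('over', 'the') leaves only 'lazy', which shows how the longer context narrows the choice.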

What other applications of Markov chains are mentioned in the script?

- Apart from text generation, the script mentions using Markov chains to model and generate pen strokes for drawing, which can emulate human-like scribbles and create new, never-before-seen images.
