[1hr Talk] Intro to Large Language Models

Summary

TLDREl video presenta los modelos de lenguaje a gran escala: qué son, cómo se entrenan, su promesa y desafíos. Explica que son dos archivos: parámetros y código para ejecutarlos. Se entrenan con grandes conjuntos de datos y clústers de GPUs. Prometen ser como sistemas operativos con interfaces de lenguaje natural. Tienen desafíos de seguridad como ataques para sortear restricciones, inyección de indicaciones y datos envenenados.

Takeaways

- 😃 Los modelos de lenguaje predictivos aprenden comprimir grandes cantidades de texto en internet en archivos de parámetros

- 📚 Los modelos entrenados predicen la siguiente palabra en una secuencia dado un contexto

- 🔎 Los modelos de lenguaje utilizan herramientas como calculadoras y navegadores web para resolver problemas

- 👥 Los humanos escriben datos de entrenamiento de alta calidad en la etapa de ajuste fino

- ⚙️ Los modelos de lenguaje se están convirtiendo en sistemas operativos con interfaces en lenguaje natural

- 🔒 Existen varios tipos de ataques informáticos contra los modelos de lenguaje

- 📈 El rendimiento de los modelos de lenguaje mejora sistemáticamente al aumentar su tamaño

- 🤖 Es posible que los modelos de lenguaje mejoren por sí mismos en dominios acotados con medidas de recompensa

- 🎯 Es probable que haya modelos de lenguaje personalizados expertos en tareas específicas

- 😊 Los modelos de lenguaje prometen una nueva era de computación conversational

Q & A

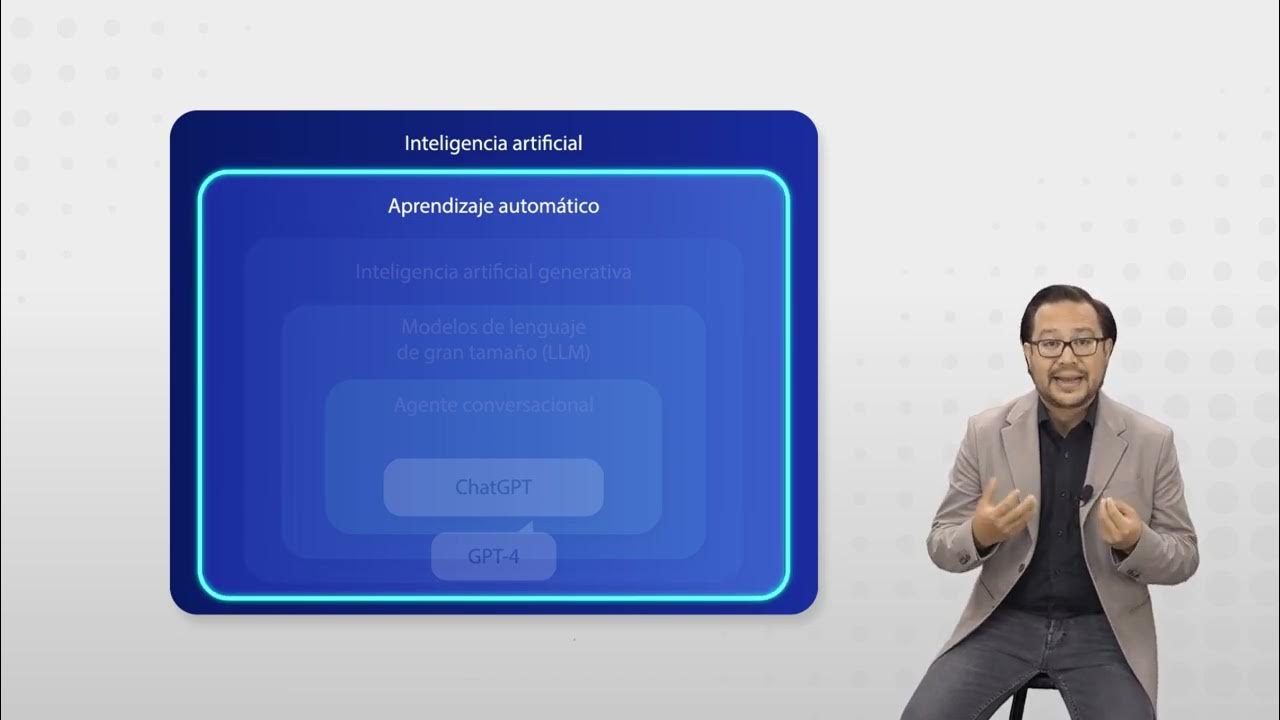

¿Qué son exactamente los modelos de lenguaje de gran escala?

-Los modelos de lenguaje de gran escala son redes neuronales entrenadas en enormes conjuntos de datos de texto para predecir la siguiente palabra en una secuencia. Típicamente predicen la próxima palabra en función del contexto de las palabras anteriores.

¿Cómo se entrenan los modelos de lenguaje de gran escala?

-Se entrenan en dos etapas principales. Primero, en la etapa de pre-entrenamiento, se entrenan para predecir la siguiente palabra en grandes conjuntos de datos de texto de Internet. Luego, en la etapa de fine-tuning, se ajustan en conjuntos de datos más pequeños de preguntas y respuestas para convertirlos en modelos asistentes.

¿Cuáles son los componentes clave para ejecutar un modelo de lenguaje de gran escala?

-Solo se necesitan dos archivos: el archivo de parámetros, que contiene los pesos del modelo, y el archivo de ejecución, que implementa la arquitectura de red neuronal para usar esos parámetros. No se necesita conectividad a Internet.

¿Cómo mejoran los modelos de lenguaje con el tiempo?

-Mejoran principalmente escalando, es decir, entrenando modelos más grandes en más datos. No se necesita progreso algorítmico, simplemente más capacidad computacional y datos.

¿Cómo se utilizan herramientas en los modelos de lenguaje para resolver problemas?

-Los modelos de lenguaje no intentan resolver problemas solo dentro de su espacio interno, utilizan activamente herramientas externas como calculadoras, intérpretes de Python, motores de búsqueda y generadores de imágenes para ayudar en la resolución.

¿Cuál es la promesa de los modelos de lenguaje?

-Ofrecen la promesa de un nuevo paradigma informático accesible a través de una interfaz de lenguaje natural, coordinando recursos para resolver problemas de manera similar a como lo hacemos los humanos.

¿Cuáles son algunos de los desafíos en seguridad de los modelos de lenguaje?

-Algunos desafíos son los ataques de falsificación de identidad para eludir restricciones de seguridad, los ataques de inyección de indicaciones para secuestrar el modelo, y los ataques de envenenamiento de datos para insertar vulnerabilidades.

¿Cómo se pueden personalizar los modelos de lenguaje?

-Se pueden personalizar proporcionando instrucciones especiales, cargando archivos de conocimiento adicionales, y potencialmente ajustando el modelo en sus propios conjuntos de datos para crear expertos en tareas específicas.

¿Cuál es la tendencia actual en los ecosistemas de modelos de lenguaje?

-Actualmente, los modelos propietarios cerrados como GPT tienen el mejor rendimiento, pero hay un ecosistema de código abierto de rápido crecimiento basado en modelos como LaMDA que ofrece más flexibilidad.

¿Hacia dónde se dirigen los modelos de lenguaje en el futuro?

-Algunas direcciones incluyen agregar capacidades multimodales como ver imágenes y escuchar audio, pensar durante períodos de tiempo más largos, y potencialmente mejorarse a sí mismos en dominios acotados que tienen una función de recompensa bien definida.

Outlines

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraMindmap

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraKeywords

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraHighlights

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraTranscripts

Esta sección está disponible solo para usuarios con suscripción. Por favor, mejora tu plan para acceder a esta parte.

Mejorar ahoraVer Más Videos Relacionados

DERIVING What are the major language models?

RAG, semantic search, embedding, vector... Find out what the terms used with Generative AI mean!

Qué es Inteligencia Artificial Generativa?

What is economics? 📊 Concept and Origin | Economics

🟢 Modelos en la Ciencia Física Secundaria 📚 🔶

Un Paper Ha Roto un Pilar Central de la IA.

CURSO PROMPT Engineering para CHATGPT y otros modelos - Aprende las MEJORES prácticas - Nivel BÁSICO

5.0 / 5 (0 votes)