mod04lec22 - Quantum Generative Adversarial Networks (QGANs)

Summary

TLDR: The video covers quantum machine learning, focusing on quantum generative adversarial networks (QGANs) applied to option pricing in finance. It explains how a GAN pits a generator against a discriminator so that the generator learns to produce data indistinguishable from real samples. To address the data loading problem, the quantum approach pairs a quantum generator with a classical discriminator. The framework is applied to European call option pricing, where it is shown to converge faster than classical Monte Carlo simulation. The video also includes a demo of QGANs for finance and a molecular simulation using the Variational Quantum Eigensolver (VQE), illustrating practical applications of quantum computing in several fields.

Takeaways

- 🧠 The script discusses the application of Quantum Generative Adversarial Networks (QGANs) in finance, specifically for option pricing.

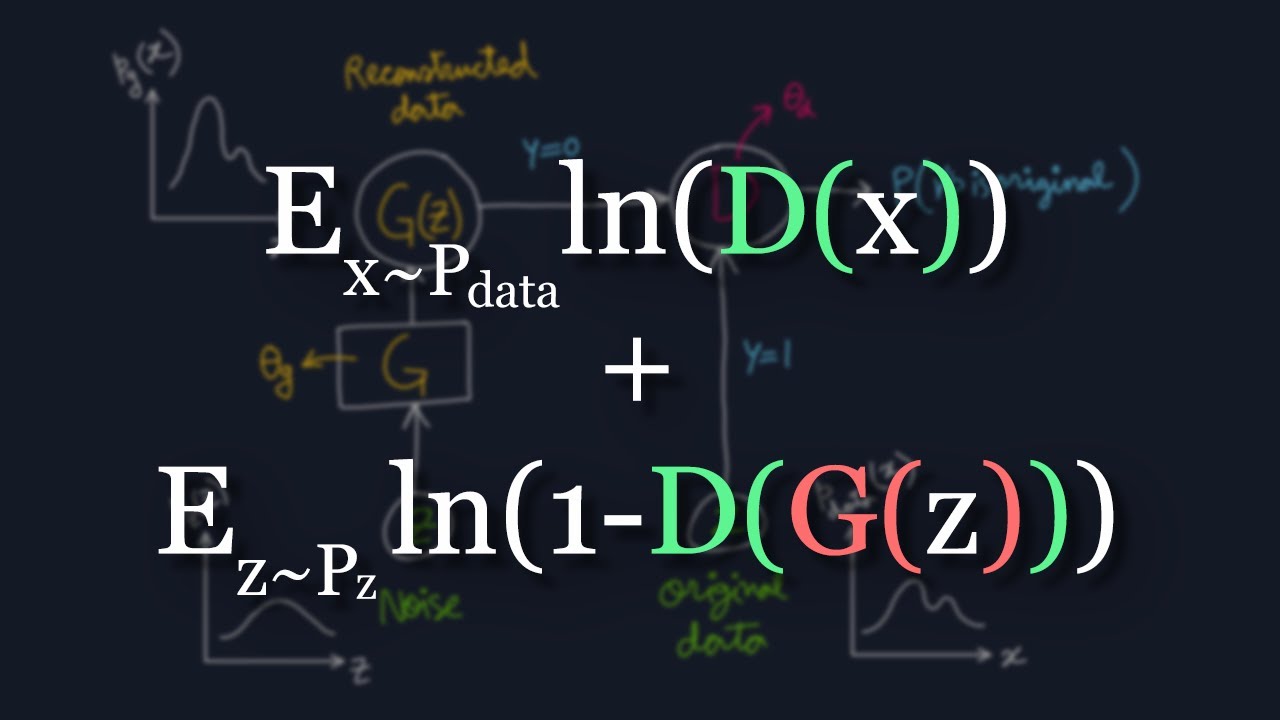

- 🤖 It explains the concept of GANs, which involve two neural networks: a generator that creates data samples and a discriminator that tries to distinguish between real and generated samples.

- 📈 The goal of the generator is to produce samples that are indistinguishable from real data, thus 'fooling' the discriminator.

- 💡 The script highlights a paper that addresses the 'data loading problem' in quantum computing, aiming to mimic data distributions without needing to load the exact classical data into quantum states.

- 🌐 It introduces a quantum framework where the generator is quantum (using variational quantum circuits) and the discriminator remains classical.

- 💼 The application demonstrated is European call option pricing, where the quantum approach is used to simulate the distribution of spot prices and calculate expected payoffs.

- 📊 The script shows a comparison between quantum computing and Monte Carlo simulations, with quantum showing faster convergence and lower estimation errors.

- 🔬 The demo includes a practical example of using the quantum GAN framework to price options, with the potential for significant efficiency gains over classical methods.

- 🔄 The script also touches on the broader context of quantum computing, including the NISQ (Noisy Intermediate-Scale Quantum) era and the importance of variational quantum algorithms.

- 🚀 Lastly, it emphasizes the rapid evolution of quantum hardware and the active research in areas like cost function optimization, Hamiltonian mapping, and quantum-aware classical optimizers.

Q & A

What is the main concept behind Generative Adversarial Networks (GANs)?

- The main concept behind GANs is to have two neural networks, a generator and a discriminator, competing against each other. The generator creates data samples, while the discriminator tries to distinguish between these generated samples and real data samples. The generator's goal is to produce samples that are indistinguishable from real data, effectively 'fooling' the discriminator.
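
The competition described above is commonly written as two opposing loss functions. The following is a minimal sketch, assuming a binary cross-entropy objective and the non-saturating generator loss; the video does not specify its loss, and the discriminator outputs below are hypothetical numbers chosen for illustration.

```python
import numpy as np

def bce(predictions, targets, eps=1e-12):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    p = np.clip(predictions, eps, 1.0 - eps)
    return float(-np.mean(targets * np.log(p) + (1.0 - targets) * np.log(1.0 - p)))

def discriminator_loss(d_real, d_fake):
    # The discriminator wants D(real) close to 1 and D(fake) close to 0.
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    # Non-saturating form: the generator wants D(fake) close to 1.
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = np.array([0.9, 0.8, 0.95])
d_fake_poor = np.array([0.1, 0.2, 0.05])  # generated samples are easily detected
d_fake_good = np.array([0.5, 0.5, 0.5])   # discriminator can only guess

print("generator loss, poor fakes:", generator_loss(d_fake_poor))
print("generator loss, good fakes:", generator_loss(d_fake_good))
print("discriminator loss, poor fakes:", discriminator_loss(d_real, d_fake_poor))
print("discriminator loss, good fakes:", discriminator_loss(d_real, d_fake_good))
```

As the generator improves, its own loss falls while the discriminator's loss rises, which is the sense in which the generator "fools" the discriminator.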

How does the quantum version of a GAN differ from the classical one?

- In the quantum version of a GAN, the generator is a quantum circuit that produces quantum states approximating the distribution of the training data, while the discriminator remains a classical neural network. The quantum generator uses parameterized quantum circuits to generate states that encode the probability distribution of the data.

What is the significance of the data loading problem in quantum computing?

- The data loading problem refers to the challenge of efficiently translating classical data into quantum states. It matters because a quantum algorithm's speedup, even an exponential one, is lost if loading the classical data into a quantum state itself requires an exponential number of gates. The paper discussed in the script addresses this problem by aiming to mimic the data distribution rather than loading the exact data.

How does the quantum GAN framework handle the data loading problem?

- The quantum GAN framework handles the data loading problem by learning to mimic the distribution of the data rather than loading the exact classical data into quantum states. The trained generator circuit then prepares an approximation of the distribution directly, sidestepping exact state preparation and its potentially exponential gate count.

What is the role of the variational quantum circuit in the quantum GAN?

- The variational quantum circuit in the quantum GAN serves as the quantum generator. It is a parameterized quantum circuit that is trained to generate quantum states whose probability distributions closely resemble the training data. This circuit is essential for encoding the data distribution into quantum amplitudes.
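
To make the idea of a parameterized circuit concrete, here is a small NumPy statevector sketch. The two-qubit layout (a layer of RY rotations, a CZ entangling gate, another layer of RY rotations) and the function name `generator_probs` are illustrative assumptions, not the ansatz used in the video or the paper.

```python
import numpy as np

def ry(theta):
    """Single-qubit RY rotation matrix."""
    c, s = np.cos(theta / 2.0), np.sin(theta / 2.0)
    return np.array([[c, -s], [s, c]])

# Controlled-Z gate on two qubits (flips the sign of |11>).
CZ = np.diag([1.0, 1.0, 1.0, -1.0])

def generator_probs(params):
    """Return the measurement probabilities of |00>, |01>, |10>, |11>.

    params holds four rotation angles: two for the first RY layer,
    two for the second. This layout is a hypothetical toy ansatz.
    """
    state = np.zeros(4)
    state[0] = 1.0  # start in |00>
    layer1 = np.kron(ry(params[0]), ry(params[1]))
    layer2 = np.kron(ry(params[2]), ry(params[3]))
    state = layer2 @ CZ @ layer1 @ state
    return state ** 2  # amplitudes are real here, so squaring gives probabilities

print(generator_probs(np.array([0.3, 1.1, -0.4, 0.8])))
```

Training the generator amounts to adjusting `params` so that these probabilities move toward the distribution of the training data, with the classical discriminator supplying the feedback signal.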

How is the payoff in a European call option calculated?

- The payoff of a European call option is max(S_T - K, 0), where S_T is the spot price at maturity and K is the strike price. If the spot price at maturity exceeds the strike price, the payoff is S_T - K; otherwise, it is zero.
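
The payoff rule can be written in one line. This is a small sketch; the function name `call_payoff` and the example prices are chosen here for illustration.

```python
import numpy as np

def call_payoff(spot_at_maturity, strike):
    """European call payoff: max(S_T - K, 0), applied element-wise."""
    return np.maximum(spot_at_maturity - strike, 0.0)

# Hypothetical spot prices at maturity, with a strike of 2.0.
example_spots = np.array([1.5, 2.0, 2.5])
example_payoffs = call_payoff(example_spots, strike=2.0)
print(example_payoffs)
```

Only the last spot price lies above the strike, so only it produces a non-zero payoff.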

What is the advantage of using a quantum approach over classical Monte Carlo simulations for option pricing?

- The estimation error of classical Monte Carlo shrinks as 1/√n in the number of samples n. The quantum approach converges polynomially faster: with quantum amplitude estimation, the error typically shrinks as roughly 1/n, a quadratic speedup. The quantum approach can therefore reach the same accuracy with significantly fewer samples, offering a potential quantum advantage in computational efficiency.
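
The classical 1/√n behaviour can be checked numerically. The sketch below covers only the Monte Carlo side; the log-normal parameters, the strike, and the helper names are assumptions made for illustration, and no discounting is applied.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
STRIKE = 2.0  # hypothetical strike price

def mc_estimate(n_samples):
    """One Monte Carlo estimate of the expected call payoff."""
    # Assumed log-normal spot price at maturity (parameters are illustrative).
    spots = rng.lognormal(mean=0.7, sigma=0.2, size=n_samples)
    return np.maximum(spots - STRIKE, 0.0).mean()

def estimator_spread(n_samples, n_trials=200):
    """Standard deviation of the estimate across repeated independent trials."""
    return float(np.std([mc_estimate(n_samples) for _ in range(n_trials)]))

spread_small = estimator_spread(100)
spread_large = estimator_spread(10_000)
ratio = spread_small / spread_large
print(f"spread at n=100: {spread_small:.4f}")
print(f"spread at n=10000: {spread_large:.4f}")
print(f"ratio: {ratio:.1f}")
```

Using 100 times more samples reduces the spread by a factor near √100 = 10. Under 1/n scaling, the same accuracy gain would need only about 10 times more samples.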

What is the key takeaway from the paper on quantum GANs for finance?

- The key takeaway is that the quantum GAN framework can effectively mimic the distribution of financial data, allowing for more efficient computation of expected payoffs in option pricing. This approach demonstrates a potential quantum advantage over classical methods, particularly in the context of complex financial simulations.

How does the quantum GAN framework encode the spot price information?

- The quantum GAN framework encodes the spot price in the basis states of the quantum register: each basis state represents one value in the discretized domain of the data. The probability distribution of the spot price is embedded in the amplitudes, so that the squared amplitude of a basis state is the probability of the corresponding price. The resulting state can then be used for financial calculations such as expected payoffs.
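
The encoding can be illustrated classically with NumPy. This is a sketch under stated assumptions: the three-qubit register, the price range, the log-normal target, and the strike are all hypothetical, and the expected payoff is computed here by a direct sum rather than on a quantum device.

```python
import numpy as np

n_qubits = 3
n_states = 2 ** n_qubits            # 8 basis states |000> ... |111>
low, high = 0.5, 4.0                # hypothetical price range
grid = np.linspace(low, high, n_states)  # price represented by each basis state

# Hypothetical target: a log-normal density evaluated on the grid.
mu, sigma = 0.7, 0.4
pdf = np.exp(-(np.log(grid) - mu) ** 2 / (2 * sigma ** 2)) / (grid * sigma * np.sqrt(2 * np.pi))
probs = pdf / pdf.sum()             # discretized, normalized distribution

# State |psi> = sum_i sqrt(p_i) |i>: squared amplitudes reproduce the probabilities.
amplitudes = np.sqrt(probs)

strike = 2.0                        # hypothetical strike price
expected_payoff = float(np.sum(probs * np.maximum(grid - strike, 0.0)))

for i in range(n_states):
    print(f"|{i:0{n_qubits}b}>  price={grid[i]:.2f}  amplitude={amplitudes[i]:.3f}")
print("expected payoff:", expected_payoff)
```

With n qubits the register distinguishes 2^n price levels, so the resolution of the discretized distribution doubles with each added qubit.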

What are some of the active research areas in variational quantum algorithms?

- Active research areas in variational quantum algorithms include defining cost functions, mapping Hamiltonians, exploring useful ansatze, utilizing gradients, and developing quantum-aware classical optimizers. These areas are crucial for improving the efficiency and applicability of variational quantum algorithms.
