Model Monitoring with Sagemaker

Summary

TLDRThis webinar discusses model monitoring in production environments using AWS SageMaker. It covers the importance of monitoring model performance, the concept of model drift, and how SageMaker helps detect data quality and model accuracy issues. The session also includes a case study on implementing custom model monitoring for a security application.

Takeaways

- 😀 Model monitoring is crucial for tracking the performance of machine learning models in production environments and identifying when they are not performing as expected.

- 🔍 Model drift refers to the decline in a model's performance over time due to changes in data or the environment, which can be categorized into data drift, bias drift, or feature attribution drift.

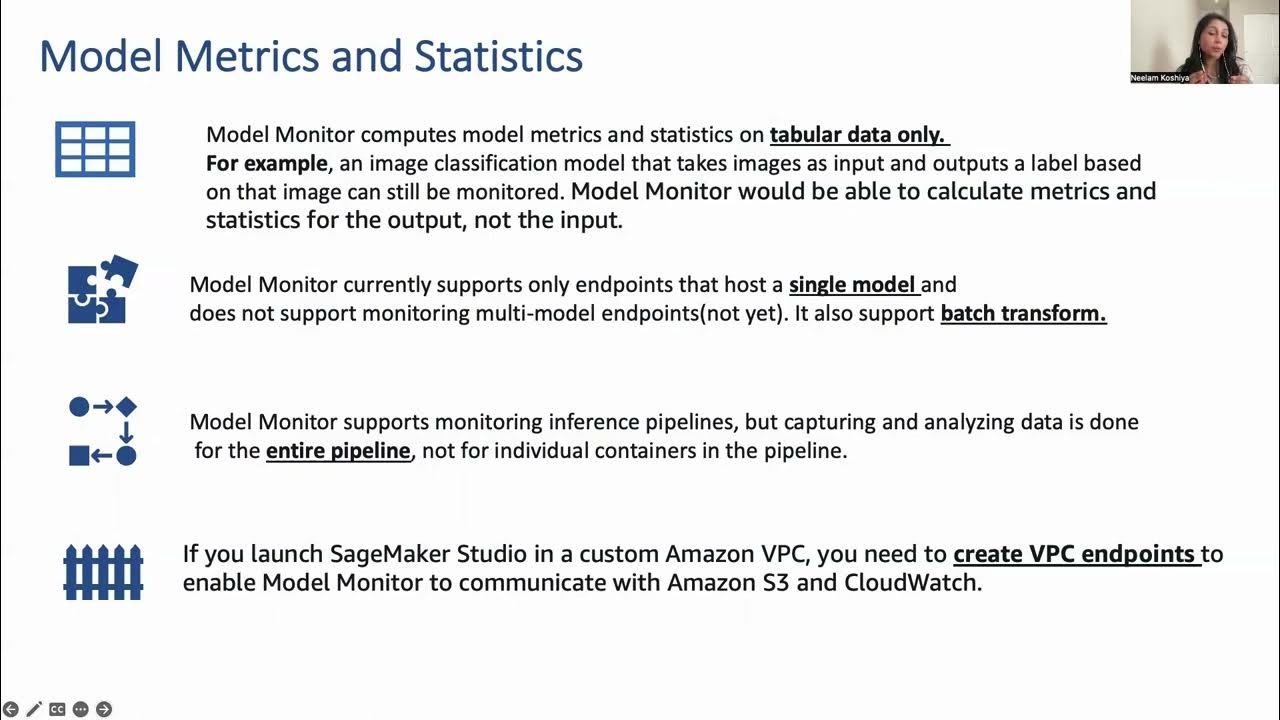

- 🛠 AWS SageMaker offers tools for model monitoring that can help detect issues like data quality, model quality, bias, and explainability, with SageMaker Clarify being a key tool for bias and explainability.

- 📈 Establishing a baseline for model performance metrics is essential for model monitoring, allowing for the comparison of current performance against expected standards.

- 🔒 Data capture is a critical component of model monitoring, involving the collection of both the data sent to the model and the ground truth labels for model quality monitoring.

- 📊 Model monitoring jobs can be scheduled to detect data quality drift and model accuracy, with results and alerts made available in S3, SageMaker Studio, and CloudWatch for action.

- 👷♂️ Best practices for model monitoring include keeping instance utilization below 70% to ensure effective data capture and maintaining data in the same region as the model monitoring.

- 📝 The architecture of data capture involves a training job, a model endpoint, a baseline processing job, and a monitoring job that captures and analyzes data quality and model predictions.

- 📉 Monitoring for data quality involves checking for violations such as missing or extra columns, unexpected data types, or a high number of null values, which can trigger CloudWatch alerts.

- 🎯 Model quality monitoring requires ground truth labels to compare predictions with reality, using metrics like RMSC or F1 score to evaluate accuracy and prediction quality.

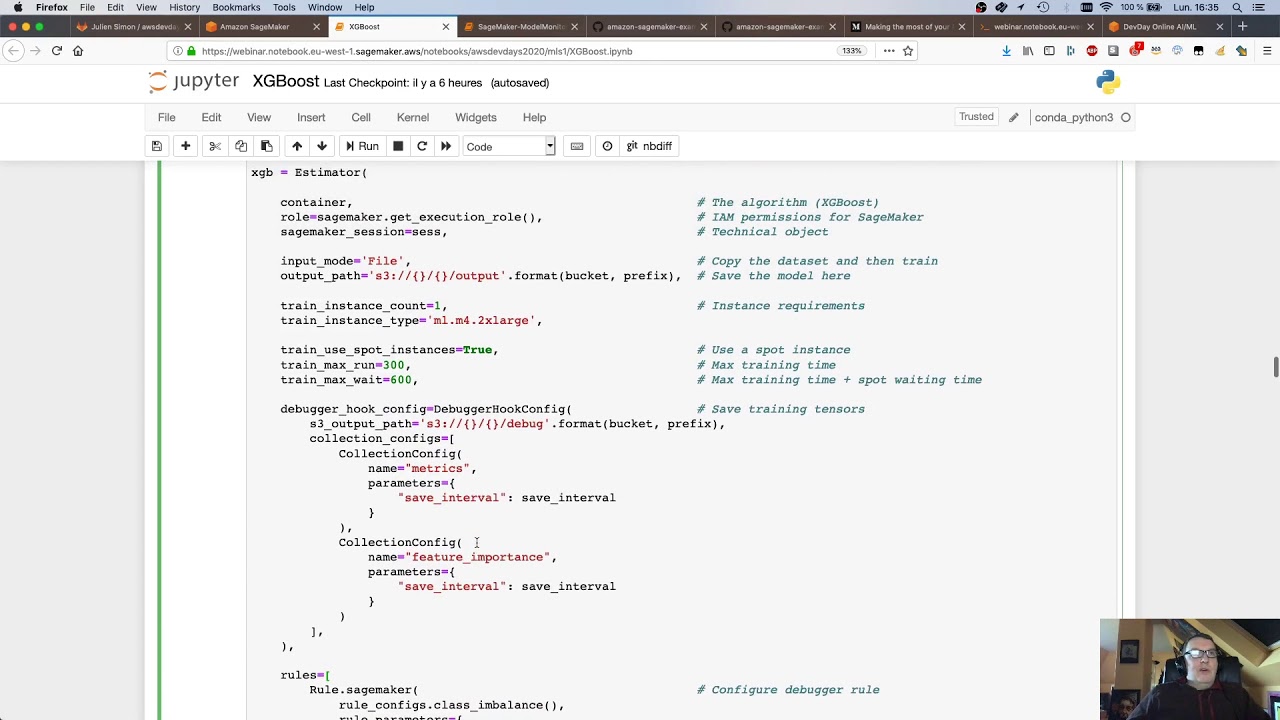

- 🔧 Custom model monitor scripts can be created for specific needs, deployed as Docker containers in AWS ECR, and scheduled to run at intervals to capture and analyze custom metrics.

Q & A

What is model monitoring?

-Model monitoring is the process of monitoring your model's performance in a production-level environment. It involves capturing key performance indicators that can indicate when a model is performing well or not, helping to detect issues like model drift.

Why is model monitoring necessary?

-Model monitoring is necessary because when machine learning models are deployed to production, factors can change over time, causing the model's performance to drift from the expected levels. Monitoring helps detect these changes and ensures the model continues to perform as intended.

What is model drift?

-Model drift refers to the decay of a model's performance over time after it has been deployed to production. It can be caused by changes in the data distribution (data drift), changes in the data that the model sees compared to the training data (bias drift), or changes in the features and their attribution scores (feature attribution drift).

What are the different types of model monitoring?

-The different types of model monitoring include data quality monitoring, model quality monitoring, bias monitoring, and explainability monitoring. These aspects help ensure the model's predictions are accurate and unbiased, and that the model's decisions can be explained.

How does SageMaker help with model monitoring?

-SageMaker provides tools and services for model monitoring, including the ability to capture data, establish baselines, and schedule model monitoring jobs. It can detect data quality drift, model accuracy, and other issues, with findings made available in S3 and visualized in SageMaker Studio.

What is the lifecycle of model monitoring in SageMaker?

-The lifecycle of model monitoring in SageMaker involves deploying a model, enabling data capture, collecting ground truth data, generating a baseline, scheduling model monitoring jobs, and taking action based on the findings, such as retraining the model if necessary.

What are some best practices for model monitoring in production?

-Best practices include keeping instance utilization below 70% to avoid reduced data capture, ensuring data captured for monitoring is in the same region as the model monitoring, and using lowercase variables with underscores to ease parsing in JSON and Spark jobs.

How can custom metrics be used in SageMaker model monitoring?

-Custom metrics can be used by developing a script, packaging it in a Docker container, and deploying it as a custom model monitor metric in AWS. This allows for monitoring specific aspects of model performance that are not covered by standard metrics.

What is the role of CloudWatch in SageMaker model monitoring?

-CloudWatch plays a crucial role in SageMaker model monitoring by receiving alerts and metrics from the monitoring jobs. These alerts can trigger actions, such as retraining the model or adjusting the model's parameters, based on the detected issues.

How can AWS Proof of Concept (PoC) funding support machine learning initiatives?

-AWS offers PoC funding for machine learning projects in partnership with aligned partners. This funding can cover up to 10% of the annual reoccurring revenue, with a one-time cap of $25,000, to support the evaluation and development of machine learning solutions.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

AWS re:Invent 2020: Detect machine learning (ML) model drift in production

Sagemaker Model Monitor - Best Practices and gotchas

AWS DevDays 2020 - Deep Dive on Amazon SageMaker Debugger & Amazon SageMaker Model Monitor

#1 Generative AI On AWS-Getting Started With First Project- Problem Statement With Demo

Estimating Healthcare Receivables using Amazon SageMaker Canvas | Amazon Web Services

What is Amazon SageMaker?

5.0 / 5 (0 votes)