[CS 70] Markov Chains – Finding Stationary Distributions

Summary

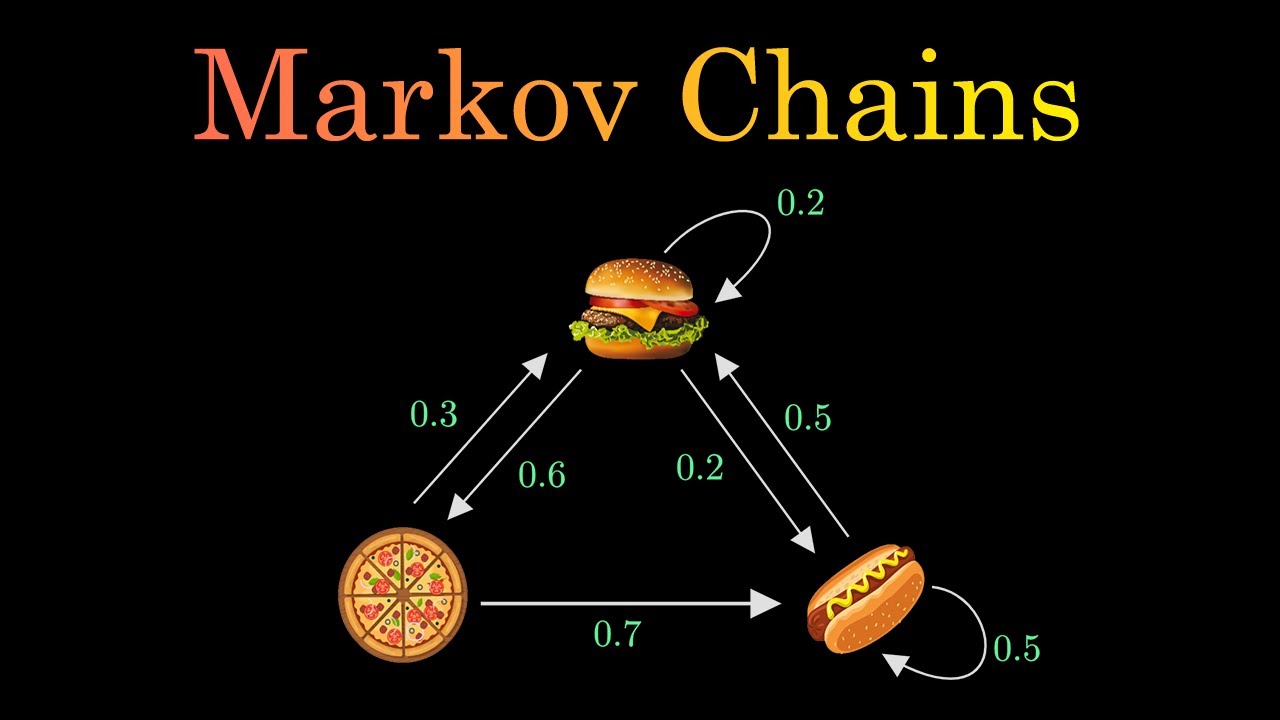

TLDRThe video script explains the concept of a stationary or invariant distribution in a Markov chain. It demonstrates how to find such a distribution by solving a system of linear equations where the multiplication of the transition matrix and the row vector of probabilities yields the same distribution. The example involves a simple Markov chain with two states, A and B, with given transition probabilities. The solution involves simplifying the equations and using the property that the probabilities must sum to 1, leading to the stationary distribution vector (4/11, 7/11).

Takeaways

- 📚 Pi sub n is the probability distribution at time step n, calculated as Pi sub 0 times P to the power of n.

- 🔄 The row vector Pi changes at each time step in a Markov chain, but there is a special case where it remains constant.

- 🌟 A stationary or invariant distribution is when multiplying the transition matrix P with Pi results in the same Pi.

- 🧩 To find a stationary distribution, solve the system of linear equations Pi P = Pi.

- 🔍 An example Markov chain is provided with two states A and B, with specific transition probabilities.

- 📉 The transition matrix is a 2x2 matrix, and rows must sum to 1 for the matrix to be correct.

- 🔢 The equations derived from Pi P = Pi are 0.3 Pi A + 0.4 Pi B = Pi A and 0.7 Pi A + 0.6 Pi B = Pi B.

- 🔄 Simplifying the equations leads to redundant equations, indicating a need for additional constraints.

- 🔄 The sum of elements in Pi must equal 1, which provides the necessary second equation to solve for Pi A and Pi B.

- 🔑 The solution to the equations is Pi A = 4/11 and Pi B = 7/11, forming the stationary distribution for the given Markov chain.

Q & A

What is a stationary distribution in the context of a Markov chain?

-A stationary distribution, also known as an invariant distribution, is a probability distribution that remains unchanged when multiplied by the transition matrix of a Markov chain. This means that if you apply the transition probabilities to the distribution, you get the same distribution back.

How do you determine if a distribution is stationary in a Markov chain?

-To determine if a distribution is stationary, you solve the system of linear equations where the product of the transition matrix and the distribution vector equals the distribution vector itself. If such a solution exists, the distribution is stationary.

What is the condition for the rows of a transition matrix to be valid?

-The rows of a transition matrix must sum to 1. This ensures that the probabilities of transitioning from one state to all possible states add up to the certainty of a transition occurring.

What is the significance of the transition matrix in a Markov chain?

-The transition matrix in a Markov chain represents the probabilities of moving from one state to another in the chain. Each element in the matrix corresponds to the probability of transitioning from one specific state to another.

How many states does the Markov chain in the example have?

-The Markov chain in the example has two states, labeled as 'a' and 'b'.

What are the probabilities of transitioning from state 'a' to state 'b' and vice versa in the given example?

-In the example, the probability of transitioning from state 'a' to state 'b' is 0.7, and the probability of transitioning from state 'b' to state 'a' is 0.4.

What is the self-loop probability for state 'a' in the example Markov chain?

-The self-loop probability for state 'a', which is the probability of staying in state 'a', is 0.3.

What is the self-loop probability for state 'b' in the example Markov chain?

-The self-loop probability for state 'b', which is the probability of staying in state 'b', is 0.6.

Why can't the system of equations be solved directly in the example provided?

-The system of equations in the example is redundant, meaning that the equations do not provide enough independent information to solve for both unknowns (PI_a and PI_b). This redundancy occurs because the equations essentially state the same relationship.

How is the second equation used to solve for the stationary distribution in the example?

-The second equation is derived from the requirement that the elements of the probability distribution must sum to 1. This non-redundant equation, combined with the relationship between PI_a and PI_b, allows for the calculation of the stationary distribution.

What is the final stationary distribution for the Markov chain in the example?

-The final stationary distribution for the Markov chain in the example is a row vector with elements 4/11 and 7/11, representing the probabilities of being in states 'a' and 'b', respectively.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

Markov Chains Clearly Explained! Part - 1

Markov Chain Monte Carlo (MCMC) : Data Science Concepts

Bayes: Markov chain Monte Carlo

Markov Chains and Text Generation

Value Chain Management - Meaning, Definition, Differences with Supply Chain & Porter's VC | AIMS UK

POSTULAT EINSTEIN | RELATIVITAS KHUSUS | FISIKA KELAS XII SMA

5.0 / 5 (0 votes)