Google SWE teaches systems design | EP21: Hadoop File System Design

Summary

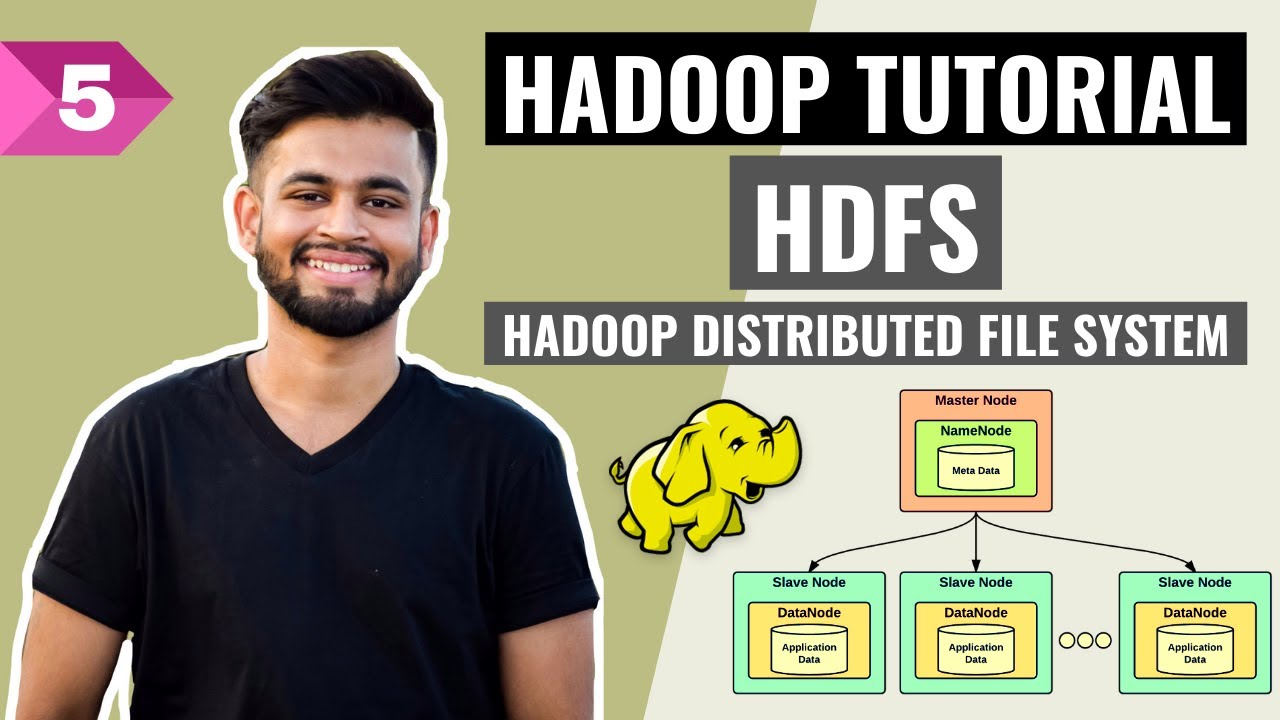

TLDR: This video delves into the architecture of the Hadoop Distributed File System (HDFS), explaining its design for high throughput in both reading and writing large-scale data. It covers HDFS's distributed nature, chunk storage, metadata management by the Name Node, and the importance of replication for data availability. It also touches on the evolution of HDFS to include high availability through coordination services, addressing the single point of failure issue. The video promises to connect these concepts to databases built on top of HDFS in a subsequent video.

Takeaways

- 🌞 The video discusses the architecture of Hadoop Distributed File System (HDFS), focusing on its design for high throughput reads and writes.

- 📚 HDFS is based on the Google File System and is popular because it runs on clusters of ordinary commodity machines rather than specialized hardware, making it accessible for large-scale distributed systems.

- 🔍 HDFS is designed for a write-once, read-many access pattern, storing each file as chunks spread across multiple data nodes so that large files can be read and written in parallel.

- 🗃️ The Name Node is a critical component of HDFS, storing all metadata about files and their chunks, and is kept in memory for quick access.

- 🔄 HDFS uses a write-ahead log (edit log) and an fsimage file for persistent storage of metadata changes, ensuring data is not lost on Name Node failure.

- 🔁 HDFS employs rack-aware replication to enhance data availability and throughput, placing replicas in different racks to minimize the risk of simultaneous node failures.

- 🔄 Pipelining is used in replication to ensure all replicas acknowledge write operations, maintaining strong consistency despite potential failures.

- 📖 Reading from HDFS involves querying the Name Node for the location of data chunks and selecting the data node with the least network latency for the client.

- 🖊️ Writing to HDFS, especially appending, involves a process of selecting a primary replica and ensuring data is propagated through the replication pipeline.

- ⚠️ The Name Node is a single point of failure; High Availability (HA) HDFS addresses this with a Quorum Journal Manager and ZooKeeper for failover.

- 🔑 The video concludes by highlighting HDFS's strengths for large-scale compute and data storage, and its role as a foundation for databases that provide more complex querying capabilities.
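The read path from the takeaways above (ask the Name Node where a chunk lives, then read from the closest data node) can be sketched roughly as follows. This is a minimal illustration, not HDFS client code: `choose_replica`, the `topology` mapping, and the distance values 0, 2, and 4 are assumptions standing in for "same node", "same rack", and "different rack".

```python
def choose_replica(client, replicas, topology):
    """Pick the replica that is closest to the client.

    topology maps a node name to its rack name (hypothetical layout).
    Distances are illustrative: 0 = same node, 2 = same rack, 4 = other rack.
    """
    client_rack = topology.get(client)

    def distance(node):
        if node == client:
            return 0
        if client_rack is not None and topology.get(node) == client_rack:
            return 2
        return 4

    # Break ties by node name so the choice is deterministic.
    return min(replicas, key=lambda node: (distance(node), node))


topology = {"client": "rackA", "dn1": "rackB", "dn2": "rackA", "dn3": "rackB"}
print(choose_replica("client", ["dn1", "dn2", "dn3"], topology))  # dn2
```

A client that runs on a data node holding the chunk would read locally, since that replica has distance 0.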

Q & A

What is the primary purpose of the Hadoop Distributed File System (HDFS)?

-The primary purpose of HDFS is to store large data sets reliably across clusters of commodity hardware, providing high throughput access to the data for distributed processing.

Why is HDFS designed to be written once and then read many times?

-HDFS is designed this way to optimize for large-scale data processing workloads, where data is often written once and then processed or analyzed multiple times.

What is the typical size of the chunks in which HDFS stores files?

-Typically, the chunks in HDFS are 64 to 128 megabytes in size: 64 MB was the default in early Hadoop releases, and 128 MB is the default from Hadoop 2 onward.
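To make the chunking arithmetic concrete, here is a small sketch that splits a file of a given size into fixed-size chunks. The function name `split_into_blocks` is hypothetical, and the 128 MB default is used only as an example value.

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size=128 * MB):
    """Return (offset, length) pairs that cover a file of file_size bytes."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        # The last chunk may be shorter than block_size.
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


blocks = split_into_blocks(300 * MB)
print(len(blocks))          # 3
print(blocks[-1][1] // MB)  # 44
```

A 300 MB file therefore occupies two full 128 MB chunks plus one 44 MB chunk, and each chunk can be stored on, and read from, a different data node.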

What is the role of the NameNode in HDFS?

-The NameNode in HDFS is responsible for storing all the metadata regarding files, including the mapping of file blocks to the DataNodes where they are stored.

How does HDFS handle file system metadata changes?

-HDFS handles file system metadata changes by using an edit log, which is a write-ahead log for the NameNode, and periodically checkpointing the in-memory state to an fsimage file on disk.
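The interplay between the edit log and the fsimage checkpoint can be illustrated with a toy in-memory model. Everything here is a simplified assumption: `TinyNameNode`, its operation names, and the JSON encoding are made up for illustration, and real HDFS persists both structures to disk in its own formats.

```python
import json


class TinyNameNode:
    """Toy model: in-memory namespace + write-ahead edit log + checkpoint."""

    def __init__(self):
        self.namespace = {}  # path -> list of block ids (in-memory state)
        self.edit_log = []   # stand-in for the on-disk edit log
        self.fsimage = "{}"  # stand-in for the on-disk fsimage

    def _apply(self, op):
        kind, path, block_id = op
        if kind == "create":
            self.namespace[path] = []
        elif kind == "add_block":
            self.namespace[path].append(block_id)
        elif kind == "delete":
            self.namespace.pop(path, None)

    def mutate(self, kind, path, block_id=None):
        op = (kind, path, block_id)
        self.edit_log.append(json.dumps(op))  # log the change first...
        self._apply(op)                       # ...then update memory

    def checkpoint(self):
        # Snapshot the in-memory state and truncate the log.
        self.fsimage = json.dumps(self.namespace)
        self.edit_log.clear()

    @classmethod
    def recover(cls, fsimage, edit_log):
        # Rebuild state: load the last checkpoint, then replay the log.
        node = cls()
        node.namespace = json.loads(fsimage)
        for line in edit_log:
            node._apply(tuple(json.loads(line)))
        node.fsimage = fsimage
        node.edit_log = list(edit_log)
        return node


nn = TinyNameNode()
nn.mutate("create", "/logs/a")
nn.mutate("add_block", "/logs/a", "blk_1")
nn.checkpoint()
nn.mutate("add_block", "/logs/a", "blk_2")

restored = TinyNameNode.recover(nn.fsimage, nn.edit_log)
print(restored.namespace)  # {'/logs/a': ['blk_1', 'blk_2']}
```

Checkpointing keeps recovery fast: only the edits made since the last snapshot need to be replayed.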

What is the significance of the DataNode's block report in HDFS?

-The block report from a DataNode informs the NameNode about the blocks it holds, allowing the NameNode to maintain an up-to-date map of file blocks and their locations.
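As a rough sketch of how block reports could keep the location map current, the following toy `BlockMap` class (a hypothetical name, not an HDFS class) replaces whatever it previously knew about a data node with the contents of that node's latest report.

```python
from collections import defaultdict


class BlockMap:
    """Toy block-to-location map maintained from data node block reports."""

    def __init__(self):
        self.locations = defaultdict(set)  # block id -> set of data node ids

    def process_block_report(self, datanode_id, reported_blocks):
        reported = set(reported_blocks)
        # Forget blocks this node no longer reports.
        for block_id, nodes in list(self.locations.items()):
            if datanode_id in nodes and block_id not in reported:
                nodes.discard(datanode_id)
                if not nodes:
                    del self.locations[block_id]
        # Record every block the node currently holds.
        for block_id in reported:
            self.locations[block_id].add(datanode_id)

    def under_replicated(self, replication_factor=3):
        return sorted(
            block_id
            for block_id, nodes in self.locations.items()
            if len(nodes) < replication_factor
        )


bm = BlockMap()
bm.process_block_report("dn1", ["b1", "b2"])
bm.process_block_report("dn2", ["b1"])
bm.process_block_report("dn3", ["b1"])
print(bm.under_replicated())  # ['b2']
```

Because the map is rebuilt from reports, it can double as the input for deciding which chunks need additional replicas.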

What does rack-aware replication mean in the context of HDFS?

-Rack-aware replication in HDFS means that data chunks are replicated in a way that considers the physical location of the nodes, typically placing one replica in the same rack as the writer and others in remote racks to maximize availability and minimize network traffic.
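A placement rule in this spirit can be sketched as below. This is an illustrative simplification, assuming the writer itself is a data node: the first replica stays on the writer, the second goes to a node on a different rack, and further replicas prefer that same remote rack. The function `place_replicas` and the `topology` mapping are hypothetical, and nodes are picked in sorted order (rather than randomly) only to keep the example deterministic.

```python
def place_replicas(writer, topology, replication=3):
    """Choose data nodes for a chunk. topology maps node name -> rack name."""
    if writer not in topology:
        raise ValueError("writer must be a known data node in this sketch")
    local_rack = topology[writer]
    chosen = [writer]  # first replica: local to the writer

    remote_nodes = sorted(n for n, rack in topology.items() if rack != local_rack)
    if remote_nodes:
        second = remote_nodes[0]
        chosen.append(second)  # second replica: a different rack
        remote_rack = topology[second]
        # Further replicas: other nodes on that same remote rack.
        chosen.extend(n for n in remote_nodes[1:] if topology[n] == remote_rack)

    # Fall back to any remaining node if the preferred racks are too small.
    for node in sorted(topology):
        if node not in chosen:
            chosen.append(node)
    return chosen[:replication]


topology = {
    "dn1": "rackA", "dn2": "rackA",
    "dn3": "rackB", "dn4": "rackB",
    "dn5": "rackC",
}
print(place_replicas("dn1", topology))  # ['dn1', 'dn3', 'dn4']
```

The result spans two racks, so losing either rack still leaves at least one replica, while only one copy has to cross the inter-rack link from the writer.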

How does the replication process in HDFS ensure data consistency?

-The replication process in HDFS ensures data consistency by using a pipeline approach where data is written to a primary replica and then propagated to secondary replicas. A write is only considered successful if all replicas in the pipeline acknowledge it.
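The all-replicas-must-acknowledge rule can be modeled with a short sketch. The names `pipeline_write`, `stores`, and `failed` are assumptions for illustration; each node stores the packet, forwards it to the next node, and passes the downstream acknowledgement back up the chain.

```python
def pipeline_write(packet, replicas, stores, failed=frozenset()):
    """Forward packet along replicas in order.

    Returns True only if every replica in the pipeline acknowledges.
    stores maps node name -> list of packets held by that node.
    failed is the set of nodes that are down (simulated failures).
    """

    def send(index):
        if index == len(replicas):
            return True  # past the last replica: nothing left to wait for
        node = replicas[index]
        if node in failed:
            return False  # no ack from this node
        stores.setdefault(node, []).append(packet)
        # This node's ack depends on the ack from everything downstream.
        return send(index + 1)

    return send(0)


stores = {}
print(pipeline_write(b"data", ["dn1", "dn2", "dn3"], stores))  # True

partial = {}
print(pipeline_write(b"data", ["dn1", "dn2", "dn3"], partial, failed={"dn2"}))  # False
print(sorted(partial))  # ['dn1']
```

The second call shows why a failed write can still leave data on some replicas: nodes upstream of the failure have already stored the packet, even though the client is told the write did not succeed.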

What is the client's strategy when it encounters a write failure in HDFS?

-When a client encounters a write failure in HDFS, it should keep retrying the write operation until it receives a success message.
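A client-side retry loop along these lines might look like the following. The cap on attempts and the exponential backoff are assumptions added to keep the sketch well-behaved; the summary itself only says the client retries until it sees a success message. `write_once` is a hypothetical callable that returns `True` when the write is acknowledged.

```python
import time


def write_with_retry(write_once, max_attempts=5, base_delay=0.1):
    """Call write_once() until it succeeds; return the attempt number."""
    for attempt in range(1, max_attempts + 1):
        if write_once():
            return attempt
        if attempt < max_attempts:
            # Back off before the next try: base_delay, 2x, 4x, ...
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise IOError(f"write failed after {max_attempts} attempts")


outcomes = iter([False, False, True])
print(write_with_retry(lambda: next(outcomes), base_delay=0))  # 3
```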

What is the main issue with the original design of HDFS in terms of fault tolerance?

-The main issue with the original design of HDFS in terms of fault tolerance is the single point of failure represented by the NameNode. If the NameNode goes down, the whole file system becomes unavailable, because no client can look up where a file's blocks are stored.

How does High Availability (HA) in HDFS address the single NameNode issue?

-High Availability in HDFS addresses the single NameNode issue by using a backup NameNode that stays updated with the state of the primary NameNode through a replicated edit log, allowing for a failover to the backup NameNode in case the primary one goes down.
