What is LoRA? Low-Rank Adaptation for finetuning LLMs EXPLAINED

Summary

TLDRLoRA (Low-Rank Adaptation) is a powerful technique for efficiently fine-tuning large language models like GPT-3 or LLaMA. It works by freezing the original model's weights and introducing small, trainable weight matrices, reducing computational costs. By using low-rank decomposition, LoRA optimizes the fine-tuning process, making it more memory-efficient compared to alternatives like adapters and prefix tuning. LoRA allows for task specialization without overwhelming hardware, offering a significant advantage for deploying large models with limited resources. This method has gained popularity due to its simplicity, effectiveness, and lower memory demands during both training and inference.

Takeaways

- 😀 LoRA (Low-Rank Adaptation) is a method for fine-tuning large language models efficiently by adding smaller, specialized weights rather than modifying the entire model.

- 😀 LoRA allows fine-tuning with less computational cost, making it more feasible to adapt large models like GPT-3 or LLaMA to specific tasks without needing immense GPU memory.

- 😀 Large language models (LLMs) are powerful but require significant resources due to their large number of parameters. Fine-tuning on small datasets can be difficult because of memory constraints.

- 😀 During LoRA fine-tuning, the original model weights are frozen, and only additional low-rank weights are adjusted, reducing the computational overhead during training and inference.

- 😀 LoRA optimizes the weight changes using two smaller matrices (A and B), which significantly reduces the number of parameters that need to be tuned compared to traditional methods.

- 😀 The key idea behind LoRA is using a low-rank decomposition to replace large weight matrices with smaller, more manageable ones that retain the same functional performance after adjustment.

- 😀 The rank (r) in the decomposition is a crucial hyperparameter. Too low a rank can cause loss of important information, while too high a rank can waste computation by keeping unnecessary parameters.

- 😀 Unlike methods like Adapter Layers and Prefix Tuning, LoRA maintains inference efficiency by not requiring additional memory during inference, only modifying a smaller set of weights.

- 😀 Adapter Layers are compute-efficient during training but introduce latency during inference because they must be processed sequentially, which is not ideal for large models.

- 😀 Prefix Tuning, which modifies input vectors, is harder to optimize and can reduce the effective input size, but LoRA is preferred for its simplicity and efficient adaptation without such limitations.

- 😀 LoRA is gaining popularity over other model adaptation techniques because it balances memory efficiency, flexibility, and minimal impact on inference performance.

Q & A

What is LoRA and how does it help in finetuning large language models?

-LoRA (Low-Rank Adaptation) is a technique that allows us to finetune large language models (LLMs) like GPT-3 or LLaMA in a more memory- and computationally-efficient manner. Instead of modifying the original model weights, LoRA freezes these weights and introduces smaller, trainable low-rank matrices, allowing for a reduction in the number of parameters needing adjustment during finetuning.

What problem does LoRA solve when finetuning large language models?

-Finetuning large language models typically requires significant GPU memory and computation due to the immense number of parameters in models like GPT-3 or LLaMA. LoRA addresses this by reducing the number of parameters that need to be adjusted, making the finetuning process more efficient and less resource-intensive.

How does LoRA differ from traditional finetuning methods for large models?

-Traditional finetuning methods adjust the entire set of parameters in the model, which can be very computationally expensive. LoRA, on the other hand, freezes the original model weights and only trains small, low-rank matrices, significantly reducing the computational burden while still allowing the model to adapt to new tasks.

What is the concept of low-rank decomposition in LoRA?

-Low-rank decomposition in LoRA involves representing the large weight matrices in the model as the product of two smaller matrices (A and B). This reduces the dimensionality of the matrices, meaning fewer parameters need to be adjusted, leading to improved efficiency in both computation and memory usage.

How do the matrices A and B in LoRA help in reducing computational cost?

-The matrices A and B in LoRA represent a low-rank approximation of the original weight matrix. Since A and B have fewer parameters than the full weight matrix, this allows for more efficient training, as fewer parameters need to be optimized. The larger the original weight matrix, the greater the computational savings achieved through low-rank decomposition.

What is the role of the rank 'r' in LoRA?

-The rank 'r' is a hyperparameter that controls the size of the low-rank matrices A and B. A low rank may lead to loss of important information, while a high rank could result in unnecessary computational overhead. Choosing the right rank is crucial for balancing efficiency and performance.

What happens during inference when using LoRA?

-During inference, LoRA adds the finetuned low-rank matrices (A and B) to the original pre-trained model weights. This allows the model to perform the specialized task without increasing the GPU memory usage, as the original weights remain frozen, and only the smaller matrices are adjusted.

How does LoRA compare to Adapter Layers as a finetuning approach?

-Adapter Layers introduce small layers into the model and only finetune the parameters of those layers. While adapter layers are computationally efficient during training, they can introduce latency during inference because they must be processed sequentially. In contrast, LoRA doesn’t add layers but instead optimizes small matrices, offering efficiency without the latency penalty.

What are the drawbacks of Prefix Tuning compared to LoRA?

-Prefix Tuning involves adding trainable input vectors to the model, but these vectors do not correspond to words and instead serve to adjust the model's behavior. The downsides of prefix tuning include reducing the effective input size and the difficulty of optimizing the number of trainable parameters. LoRA, on the other hand, directly modifies smaller matrices and avoids these issues.

Why has LoRA become increasingly popular for finetuning large models?

-LoRA has gained popularity because it provides a highly efficient way to finetune large models without increasing memory usage during inference or requiring excessive computational resources. Compared to other methods like Adapter Layers and Prefix Tuning, LoRA offers better scalability and performance for handling large language models.

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

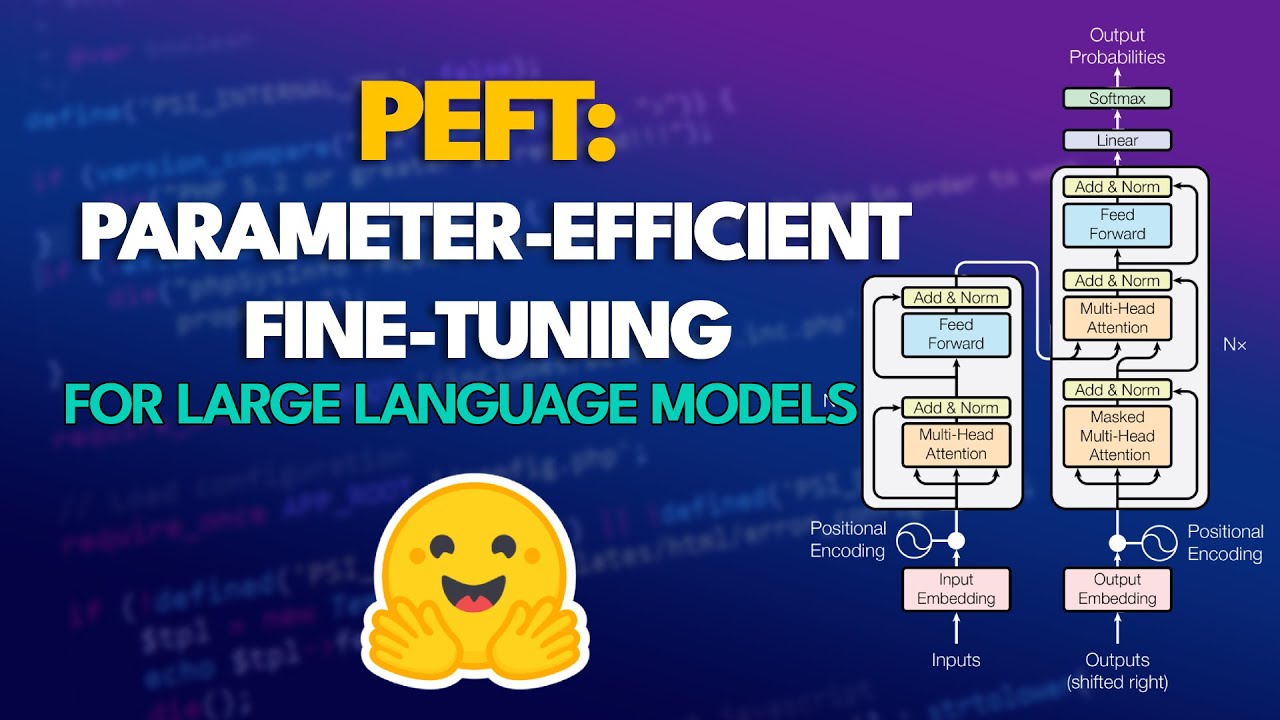

Fine-tuning LLMs with PEFT and LoRA

Lessons From Fine-Tuning Llama-2

Fine-tuning Large Language Models (LLMs) | w/ Example Code

EfficientML.ai 2024 | Introduction to SVDQuant for 4-bit Diffusion Models

Stanford XCS224U: NLU I Contextual Word Representations, Part 1: Guiding Ideas I Spring 2023

EASIEST Way to Fine-Tune LLAMA-3.2 and Run it in Ollama

5.0 / 5 (0 votes)