What is Transfer Learning? | With code in Keras

Summary

TLDR: This video introduces the concept of transfer learning: reusing a model pre-trained on one task for a new task, which saves time and compute. It covers fine-tuning, in which a model's layers are adjusted for a specific task, such as adapting a model trained on human faces to recognize dog faces. The video then walks through using a pre-trained EfficientNet model to classify flower species, demonstrating how to prepare the datasets, modify the model architecture, freeze layers, and fine-tune the model. It also highlights the benefits of transfer learning, especially for tasks with limited data.

Takeaways

- 😀 Transfer learning allows you to use a pre-trained model on one task and apply it to a new, related task.

- 😀 A pre-trained model is a base model that has already been trained on a large dataset and can be fine-tuned for new tasks.

- 😀 Fine-tuning adapts a pre-trained model to a new task by adding or modifying layers; how many layers to change depends on how similar the new task is to the original one.

- 😀 Transfer learning can save time and resources by avoiding the need to train a model from scratch, especially when there is a lack of data.

- 😀 An example of transfer learning is training a model to recognize human faces and then adapting it to recognize dog faces.

- 😀 Transfer learning is particularly useful when you have limited data for a specific task but abundant data for a related task.

- 😀 Pre-trained models are available from many deep learning frameworks like TensorFlow, Keras, and PyTorch, and can be used for various tasks.

- 😀 To apply transfer learning, you can either replace only the output layer of the pre-trained model or replace more of its top layers, depending on how similar the two tasks are.

- 😀 Freezing the base model's layers while the newly added layers are trained ensures that the pre-trained knowledge isn't overwritten.

- 😀 After the new top layers have been trained, you can optionally unfreeze all or part of the base model and continue training with a low learning rate to improve performance further.
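
The second training phase from the last two takeaways can be sketched in Keras as follows. This is a minimal sketch, not the video's code: it assumes a Keras model whose pre-trained base (for example an EfficientNetB0 from keras.applications) was frozen during the first phase, integer class labels, and illustrative values of 20 unfrozen layers and a 1e-5 learning rate. The helper name `unfreeze_top_layers` is hypothetical.

```python
from tensorflow import keras


def unfreeze_top_layers(model, base_model, num_layers=20, learning_rate=1e-5):
    """Hypothetical helper for phase 2: unfreeze the top `num_layers` layers of
    `base_model` (except BatchNormalization) and recompile `model`."""
    # Setting trainable=True on the base propagates to all of its layers...
    base_model.trainable = True
    # ...so re-freeze everything below the top `num_layers` layers.
    for layer in base_model.layers[:-num_layers]:
        layer.trainable = False
    # Keep BatchNormalization layers frozen so their learned statistics stay intact.
    for layer in base_model.layers[-num_layers:]:
        if isinstance(layer, keras.layers.BatchNormalization):
            layer.trainable = False
    # Changes to `trainable` only take effect after recompiling; a small learning
    # rate keeps the updates to the pre-trained weights small.
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",  # assumes integer class labels
        metrics=["accuracy"],
    )
    return model
```

After the new head has been trained with the base frozen, calling `unfreeze_top_layers(model, base_model)` and running `model.fit` again for a few epochs gives the fine-tuning phase. Leaving BatchNormalization layers frozen is a common recommendation when fine-tuning EfficientNet in Keras.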

Q & A

What is transfer learning in deep learning?

-Transfer learning involves taking a pre-trained model, which has already learned patterns from one task, and adapting it to perform well on a new, often related task. This method helps in utilizing previously learned knowledge to solve new problems more efficiently.

What is fine-tuning in the context of transfer learning?

-Fine-tuning is the process of adjusting a pre-trained model to perform a new task by retraining it with a new dataset. Fine-tuning typically involves modifying or adding new layers to the model to specialize it for the new task.

How does a pre-trained model help in transfer learning?

-A pre-trained model provides a solid starting point by having already learned useful features and representations from large datasets. This reduces the need for training from scratch, saving time and computational resources, especially when data is scarce.
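
One way to reuse those learned features directly is feature extraction: run the data through the pre-trained base once and train only a small classifier on the resulting vectors. A minimal sketch, assuming EfficientNetB0 (the exact EfficientNet variant is an assumption), raw pixel values in [0, 255], and integer labels; `extract_features` and `train_head_on_features` are hypothetical helper names.

```python
import numpy as np
from tensorflow import keras


def extract_features(images, weights="imagenet"):
    """Run images through a pre-trained base (inference only, so its weights
    never change) and return one feature vector per image."""
    images = np.asarray(images, dtype="float32")  # raw pixels in [0, 255]
    base = keras.applications.EfficientNetB0(
        include_top=False,             # drop the original ImageNet classifier
        weights=weights,               # "imagenet" downloads the pre-trained weights
        input_shape=images.shape[1:],
        pooling="avg",                 # global average pooling -> (num_images, 1280)
    )
    # Keras' EfficientNet models rescale inputs internally, so no extra preprocessing.
    return base.predict(images, verbose=0)


def train_head_on_features(features, labels, num_classes, epochs=10):
    """Fit a single softmax layer on the pre-computed features."""
    head = keras.Sequential([
        keras.Input(shape=features.shape[1:]),
        keras.layers.Dense(num_classes, activation="softmax"),
    ])
    head.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                 metrics=["accuracy"])
    head.fit(features, labels, epochs=epochs, verbose=0)
    return head
```

This is a cheaper variant of training a new head on a frozen base: the expensive base runs once rather than on every epoch, at the cost of not being able to apply data augmentation inside the model.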

Why might transfer learning be more beneficial than training a model from scratch?

-Transfer learning is beneficial when there is little data for the new task, because it leverages knowledge learned on a related task. Starting from a pre-trained model reduces the amount of task-specific data and compute needed to train an effective model.

What are the two primary ways of implementing transfer learning?

-The two main approaches to transfer learning are: 1) Removing the output layer of the pre-trained model and adding a new output layer specific to the new task, and 2) Removing more layers of the pre-trained model and adding new layers to further specialize the model for the new task.
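
Both approaches can be sketched with keras.applications. This is a minimal sketch under stated assumptions: EfficientNetB0 as the base, a softmax classification head, and an extra Conv2D layer in approach 2 chosen purely for illustration. The function names and the `cut_layer_name` argument are hypothetical. Passing `weights=None` builds the architecture without downloading the ImageNet weights.

```python
from tensorflow import keras


def replace_output_layer(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Approach 1: keep all pre-trained feature layers, add a new output layer."""
    # include_top=False drops the original ImageNet pooling/classification layers.
    base = keras.applications.EfficientNetB0(
        include_top=False, weights=weights, input_shape=input_shape)
    inputs = keras.Input(shape=input_shape)
    x = keras.layers.GlobalAveragePooling2D()(base(inputs))
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)


def cut_deeper(num_classes, cut_layer_name, input_shape=(224, 224, 3), weights="imagenet"):
    """Approach 2: also discard the layers above `cut_layer_name`, then add new ones."""
    base = keras.applications.EfficientNetB0(
        include_top=False, weights=weights, input_shape=input_shape)
    # Sub-model that ends at an intermediate layer, keeping only the lower,
    # more generic feature extractors.
    trunk = keras.Model(base.input, base.get_layer(cut_layer_name).output)
    inputs = keras.Input(shape=input_shape)
    x = trunk(inputs)
    # New task-specific layers take the place of the removed upper layers.
    x = keras.layers.Conv2D(128, 3, padding="same", activation="relu")(x)
    x = keras.layers.GlobalAveragePooling2D()(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)
```

Candidate values for `cut_layer_name` can be read from `base.summary()`. Cutting lower keeps more generic features and leaves more for the new layers to learn.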

How do the layers in a deep neural network help in transfer learning?

-In deep neural networks, earlier layers typically capture low-level features like edges and textures, while deeper layers capture more abstract, high-level features. Transfer learning often involves keeping the lower layers intact while modifying or adding higher layers to adapt to the new task.

What is the benefit of freezing layers in a pre-trained model during fine-tuning?

-Freezing layers means that those layers will not be updated during training, allowing the newly added layers to learn and adapt to the new task while maintaining the knowledge learned by the frozen layers from the original task.
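
The effect of freezing can be checked directly: after setting `trainable = False`, training updates only the new layers. This minimal sketch uses a tiny Dense layer and random data as a stand-in for a large pre-trained base such as EfficientNet, purely so that it runs quickly; the helper `frozen_base_stays_fixed` is hypothetical.

```python
import numpy as np
from tensorflow import keras


def frozen_base_stays_fixed(seed=0):
    """Train a model whose base is frozen; report (base_changed, head_changed)."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(64, 8)).astype("float32")
    y = rng.integers(0, 3, size=(64,))

    # Stand-in for a pre-trained base (EfficientNet plays this role in the video).
    base = keras.Sequential([keras.Input(shape=(8,)), keras.layers.Dense(16, activation="relu")])
    base.trainable = False  # freeze before compile(): the optimizer skips these weights

    # New head stacked on top of the frozen base.
    model = keras.Sequential([keras.Input(shape=(8,)), base,
                              keras.layers.Dense(3, activation="softmax")])
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

    base_before = [w.copy() for w in base.get_weights()]
    head_before = [w.copy() for w in model.layers[-1].get_weights()]
    model.fit(x, y, epochs=3, verbose=0)

    base_changed = any(not np.array_equal(a, b)
                       for a, b in zip(base_before, base.get_weights()))
    head_changed = any(not np.array_equal(a, b)
                       for a, b in zip(head_before, model.layers[-1].get_weights()))
    return base_changed, head_changed
```

Calling `frozen_base_stays_fixed()` returns `(False, True)`: the base's weights are unchanged while the head's weights have moved.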

Why is it important to check the licensing of a pre-trained model from sources like GitHub?

-It is crucial to check the license of a pre-trained model to ensure that you are allowed to use and modify it according to the terms provided. Failing to do so could lead to legal issues or misuse of the model.

What role does the Keras library play in implementing transfer learning?

-Keras provides ready-to-use pre-trained models (in its keras.applications module) along with the tools needed for transfer learning, such as functions to preprocess input data, assemble models, and fine-tune them, which makes adapting a model to a new task quick.

What steps are involved in setting up a transfer learning project using Keras?

-The steps include: 1) Importing necessary libraries (e.g., TensorFlow, Keras), 2) Loading and preparing the dataset, 3) Choosing a pre-trained model, 4) Removing or modifying the top layers, 5) Freezing the base layers, 6) Adding new layers specific to the new task, 7) Compiling and fine-tuning the model with the new dataset, and 8) Evaluating the model's performance.
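
Steps 2 through 8 can be sketched end to end as follows. This is a minimal sketch, not the video's code: it assumes the flower images arrive as an unbatched tf.data.Dataset of (image, integer label) pairs (for example, TensorFlow Datasets' tf_flowers loaded with as_supervised=True), EfficientNetB0 at 224x224, and illustrative hyperparameters. All helper names are hypothetical.

```python
import tensorflow as tf
from tensorflow import keras

IMAGE_SIZE = (224, 224)  # EfficientNetB0's default input resolution
BATCH_SIZE = 32          # illustrative value


def prepare(ds, image_size=IMAGE_SIZE, batch_size=BATCH_SIZE):
    """Step 2: resize unbatched (image, label) pairs, then batch and prefetch."""
    ds = ds.map(lambda image, label: (tf.image.resize(image, image_size), label),
                num_parallel_calls=tf.data.AUTOTUNE)
    return ds.batch(batch_size).prefetch(tf.data.AUTOTUNE)


def build_model(num_classes, image_size=IMAGE_SIZE, weights="imagenet"):
    """Steps 3-6: pre-trained base without its top, frozen, plus a new output layer."""
    input_shape = tuple(image_size) + (3,)
    base = keras.applications.EfficientNetB0(
        include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False                   # step 5: freeze the base
    inputs = keras.Input(shape=input_shape)
    x = base(inputs, training=False)         # keep BatchNorm in inference mode
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dropout(0.2)(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)  # step 6
    return keras.Model(inputs, outputs)


def train_and_evaluate(model, train_ds, val_ds, epochs=5):
    """Steps 7-8: compile, train the new layers, and evaluate on held-out data."""
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-3),
                  loss="sparse_categorical_crossentropy",  # integer labels assumed
                  metrics=["accuracy"])
    model.fit(train_ds, validation_data=val_ds, epochs=epochs, verbose=0)
    loss, accuracy = model.evaluate(val_ds, verbose=0)
    return loss, accuracy
```

With training and validation splits passed through `prepare`, `train_and_evaluate(build_model(num_classes), train_ds, val_ds)` covers the head-only phase; the base can then be partially unfrozen for a second, low-learning-rate phase.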

Outlines

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنMindmap

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنKeywords

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنHighlights

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنTranscripts

هذا القسم متوفر فقط للمشتركين. يرجى الترقية للوصول إلى هذه الميزة.

قم بالترقية الآنتصفح المزيد من مقاطع الفيديو ذات الصلة

What Is Transfer Learning? | Transfer Learning in Deep Learning | Deep Learning Tutorial|Simplilearn

What is Transfer Learning? [Explained in 3 minutes]

Course 4 (113520) - Lesson 1

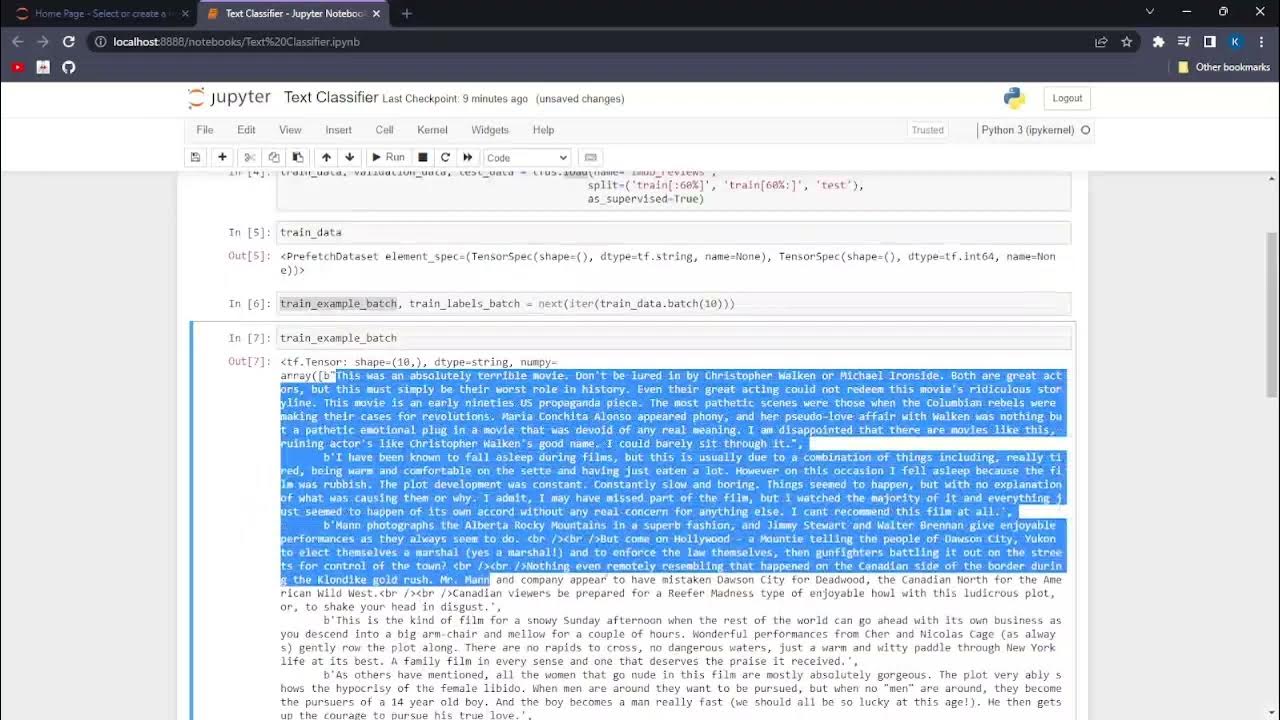

AIP.NP1.Text Classification with TensorFlow

Object Detection and Classification of Cars by Make using MMDetection

Lecture 3: Pretraining LLMs vs Finetuning LLMs

5.0 / 5 (0 votes)