Why is Linear Algebra Useful?

Summary

TLDR: This lesson explores three key applications of linear algebra: vectorized code, image recognition, and dimensionality reduction. It begins with vectorized code, showing how linear algebra simplifies tasks like calculating house prices, making it faster and more efficient. In image recognition, deep neural networks, particularly convolutional networks, process images by transforming them into numerical matrices. Finally, dimensionality reduction is introduced as a method to simplify complex datasets by reducing variables, illustrated through real-world examples like surveys. The lesson emphasizes the power of linear algebra in data science and machine learning.

Takeaways

- 🔢 Linear algebra is crucial in data science, particularly in vectorized code, image recognition, and dimensionality reduction.

- 🏠 Vectorized code, or array programming, allows for efficient computation by treating data as arrays rather than individual elements, significantly speeding up calculations.

- 💻 In machine learning, especially linear regression, inputs are often matrices, and parameters are vectors, with the output being a matrix of predictions.

- 🏡 The example of house pricing illustrates how linear algebra can simplify the process of calculating prices based on house sizes using a linear model.

- 🖼️ Image recognition leverages deep learning and convolutional neural networks (CNNs), which require converting images into numerical formats that computers can process.

- 📊 A grayscale image can be represented as a matrix where each element corresponds to a shade of gray, while colored images are represented as 3D tensors in the RGB color space.

- 📈 Dimensionality reduction is a technique that transforms data from a high-dimensional space to a lower-dimensional one, preserving essential information and simplifying analysis.

- 📉 Reducing the number of variables in a dataset can help in focusing on the most relevant features, making the data easier to interpret and visualize.

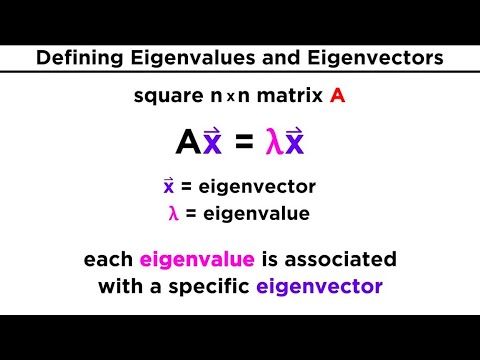

- 🔍 Linear algebra provides mathematical tools like eigenvalues and eigenvectors that are instrumental in dimensionality reduction techniques.

- 📊 The script uses the analogy of survey questions to explain how combining similar variables through dimensionality reduction can capture the essence of the data with fewer dimensions.

Q & A

What is vectorized code and why is it important?

- Vectorized code, also known as array programming, computes many values at once instead of one at a time. It is much faster than iterating over data with loops, especially on large datasets. Libraries like NumPy are optimized for this type of operation, which increases computational efficiency.
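As a minimal sketch of the difference, the snippet below applies the same arithmetic once with an explicit Python loop and once as a single array expression. The data and the function names `scale_with_loop` and `scale_vectorized` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical data: one million values to be doubled and shifted by one.
values = np.arange(1_000_000, dtype=np.float64)


def scale_with_loop(data):
    """Process one element at a time in a Python loop."""
    result = np.empty_like(data)
    for i in range(len(data)):
        result[i] = 2.0 * data[i] + 1.0
    return result


def scale_vectorized(data):
    """Apply the same arithmetic to the whole array in one expression."""
    return 2.0 * data + 1.0


# The loop runs on a small slice only, so the example stays quick.
loop_result = scale_with_loop(values[:1000])
vec_result = scale_vectorized(values)
```

Both functions return the same numbers. The vectorized version hands the whole array to NumPy's compiled routines, which is where the speed-up on large inputs typically comes from.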

How does linear algebra relate to the calculation of house prices in the given example?

- Linear algebra simplifies the calculation of house prices by turning the problem into matrix multiplication. By representing the inputs (house sizes) and the coefficients (price factors) as matrices, we can calculate the prices of multiple houses with a single matrix operation, rather than iterating through each size manually.
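The idea can be sketched with NumPy as follows. The house sizes, the intercept, and the price per square foot are made-up values for illustration, not the figures used in the lesson.

```python
import numpy as np

# Hypothetical house sizes in square feet (not taken from the lesson).
sizes = np.array([700.0, 1000.0, 1300.0])

# Hypothetical linear model: price = intercept + price_per_sqft * size.
intercept = 10_000.0
price_per_sqft = 200.0

# Inputs as a 3x2 matrix: a column of ones for the intercept, then the sizes.
X = np.column_stack([np.ones_like(sizes), sizes])

# Coefficients as a 2x1 matrix.
w = np.array([[intercept], [price_per_sqft]])

# One matrix multiplication yields all three prices as a 3x1 matrix.
prices = X @ w
print(prices.ravel())
```

The column of ones lets the intercept be folded into the same multiplication, so adding more houses only adds rows to `X` and leaves the rest of the code unchanged.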

What role does linear algebra play in machine learning algorithms like linear regression?

- In linear regression, linear algebra is used to calculate the relationship between inputs (features) and outputs (predictions). The inputs are represented as a matrix, the weights (or coefficients) as another matrix, and the resulting predictions as an output matrix, facilitating efficient computation even for large datasets.
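One way to see this in practice is to estimate the weights with a least-squares solver and then compute all predictions with one matrix product. This is a sketch under assumptions: the training data is invented (generated from y = 2 + 3x with no noise), and `np.linalg.lstsq` is just one of several ways to fit such a model.

```python
import numpy as np

# Hypothetical training data generated from y = 2 + 3x, with no noise.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([5.0, 8.0, 11.0, 14.0])

# Input matrix: a column of ones for the intercept, then the feature.
X = np.column_stack([np.ones_like(x), x])

# Least-squares estimate of the weights that best map X to y.
weights, residuals, rank, singular_values = np.linalg.lstsq(X, y, rcond=None)

# Predictions for every row of X in a single matrix-vector product.
predictions = X @ weights
```

Because the data lies exactly on a line, the recovered weights are approximately 2 (intercept) and 3 (slope), and the predictions match `y`.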

Why is it necessary to convert images into numbers for image recognition tasks?

- Computers cannot 'see' images as we do. Therefore, we convert an image into numbers using matrices, where each element represents the pixel intensity (in grayscale) or the color values (in RGB). This numerical representation allows algorithms to process and classify images.

What is the difference between a grayscale and colored photo in terms of matrix representation?

- A grayscale photo is represented as a two-dimensional matrix, where each element is a number between 0 and 255 representing the intensity of gray. A colored photo, on the other hand, is represented as a three-dimensional tensor (3x400x400 for a 400x400-pixel image), where each 2D matrix corresponds to one color channel: red, green, and blue (RGB).
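The two representations can be sketched as NumPy arrays. The pixel values below are synthetic, and the channels-first layout (3x400x400) simply follows the shape quoted above. Many image libraries store the same data as 400x400x3 instead.

```python
import numpy as np

height, width = 400, 400

# Grayscale: one 2D matrix of intensities from 0 (black) to 255 (white).
grayscale = np.zeros((height, width), dtype=np.uint8)
grayscale[100:300, 100:300] = 255  # a white square on a black background

# RGB: three 2D matrices stacked into one 3D tensor, one per color channel.
rgb = np.zeros((3, height, width), dtype=np.uint8)
rgb[0] = 255  # red channel at full intensity, green and blue stay at zero

print(grayscale.shape)  # (400, 400)
print(rgb.shape)        # (3, 400, 400)
```

Each entry of `rgb[0]`, `rgb[1]`, and `rgb[2]` holds the red, green, and blue intensity of one pixel, so the array above describes a uniformly red image.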

What are convolutional neural networks (CNNs), and how do they apply linear algebra to image recognition?

- Convolutional neural networks (CNNs) are deep learning models that are widely used for image recognition tasks. They operate on images represented as matrices or tensors and apply successive layers of transformations to classify the contents of the images.

What is dimensionality reduction and why is it useful?

- Dimensionality reduction is the process of reducing the number of variables in a dataset while retaining most of the relevant information. This technique simplifies the problem and reduces computational complexity, which is especially useful when many variables are redundant or highly correlated.

How can linear algebra help in dimensionality reduction?

- Linear algebra provides methods like Principal Component Analysis (PCA) to transform high-dimensional data into lower-dimensional representations by finding new axes (or components) that capture the most variance in the data, thus reducing the number of variables.
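A minimal PCA sketch using the eigenvalues and eigenvectors of the covariance matrix is shown below. The dataset is randomly generated for illustration (three variables, two of which are nearly identical), and keeping `k = 2` components is an arbitrary choice for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dataset: 200 samples, 3 variables.
# The second variable is almost a copy of the first, so it is redundant.
base = rng.normal(size=200)
data = np.column_stack([
    base,
    base + 0.05 * rng.normal(size=200),
    rng.normal(size=200),
])

# Center each variable, then compute the 3x3 covariance matrix.
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)

# eigh handles symmetric matrices and returns eigenvalues in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(cov)

# Reorder so the directions with the most variance come first.
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
eigenvectors = eigenvectors[:, order]

# Keep the top k components and project the data onto them.
k = 2
components = eigenvectors[:, :k]
reduced = centered @ components  # shape (200, 2)

# Share of the total variance retained by the k components.
explained = eigenvalues[:k].sum() / eigenvalues.sum()
print(reduced.shape, round(float(explained), 4))
```

Because two of the three variables carry almost the same information, two components retain nearly all of the variance here. On real data the retained share depends on how correlated the variables are.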

Why is it often beneficial to reduce the number of variables in a dataset?

- Reducing the number of variables simplifies the problem, making the data easier to analyze and visualize. It also reduces the risk of overfitting in machine learning models and improves computational efficiency, especially when some variables provide redundant information.

Can you provide an example where dimensionality reduction would make sense in a real-world scenario?

- In a survey with 50 questions, some questions may measure similar traits, such as extroversion. Rather than treating each question as a separate variable, dimensionality reduction techniques can combine related questions, simplifying the dataset while retaining the core information.
