Is YouTube radicalizing you?

Summary

TLDR: The video explores the dangers of YouTube's recommendation algorithm, which has been linked to radicalizing users by pushing them toward extreme content such as conspiracy theories, white supremacist views, and other extremist ideologies. Techno-sociologist Zeynep Tufekci argues that YouTube's autoplay feature exploits human vulnerabilities to keep users glued to sensational content. The discussion emphasizes how this problem affects young people and draws attention to the risks of unsupervised viewing. Despite YouTube's claims that it has adjusted the algorithm to improve user satisfaction, Tufekci warns that the platform still prioritizes watch time, which allows harmful content to proliferate.

Takeaways

- 📺 YouTube is one of the largest video platforms in the world, with over a billion users watching a billion hours of content daily.

- ⚠️ Reports show that ads from major brands such as Adidas, Amazon, and Hershey ran alongside extreme content, including Nazi propaganda, pedophilia-related videos, and conspiracy theories.

- 🧠 YouTube's recommendation algorithm uses AI to keep viewers engaged, often by suggesting increasingly extreme or sensational content.

- 🎯 Studies suggest the platform can act as an 'engine of extremism' by promoting more radical content over time based on viewing behavior.

- 🔄 Autoplay functions like a feedback loop, continuously serving content that exploits human psychological vulnerabilities to keep users watching.

- 🥗 The algorithm tends to amplify more extreme versions of a topic. For example, videos about vegetarianism can lead to suggestions about veganism and then to even more extreme content.

- 🚸 Children and young users are particularly vulnerable, often encountering conspiracy theories or misleading content even when starting with educational videos.

- 💻 While not all content on YouTube is harmful, the recommendation system exhibits a bias toward sensational and shocking material to maximize engagement.

- 🛠️ Because the algorithm is just a computer program, experts believe it can be adjusted or controlled; as currently designed, however, it rewards extreme content because that content drives profit.

- 📈 YouTube has recently stated that it is shifting focus from pure watch time to user satisfaction in response to concerns about harmful content.

Q & A

What is the main concern raised in the script about YouTube's content?

-The main concern is that YouTube's algorithm promotes extreme and harmful content, such as videos related to Nazis, pedophilia, conspiracy theories, and North Korean propaganda, which often runs alongside ads from well-known brands like Adidas, Amazon, and Hershey.

What does Zeynep Tufekci mean by calling YouTube a 'radicalization machine'?

-Zeynep Tufekci argues that YouTube's algorithm pushes users toward increasingly extreme content by recommending videos whose views become progressively more radical. This contributes to the spread of extremism and can radicalize viewers, particularly around major events such as the 2016 election.

How does YouTube's algorithm influence users' behavior on the platform?

-YouTube's algorithm recommends videos based on what users watch, leading them down a rabbit hole of increasingly extreme content. For example, after a user watches a video on a particular topic, the algorithm suggests more radical content on the same or related subjects, keeping the user engaged for longer.

What personal experiment did Zeynep Tufekci conduct to study YouTube's algorithm?

-Zeynep Tufekci watched videos on specific political topics, such as Donald Trump rallies, Hillary Clinton, and Bernie Sanders. She observed that YouTube then began auto-playing increasingly extreme and radical content related to those topics, such as white supremacist videos.

How does the algorithm contribute to users encountering extremist content?

-The algorithm promotes sensational and shocking content because it engages users, keeping them on the platform for longer. This often leads to the recommendation of conspiracy theories or radical ideas, as they tend to generate more views and engagement.

What analogy does Zeynep Tufekci use to explain the harm of YouTube's recommendation system?

-Tufekci compares YouTube's recommendation algorithm to a cafeteria that keeps serving children unhealthy food such as sugary or salty snacks. Just as a cafeteria should not keep serving children such food simply because they crave it, YouTube's algorithm should not keep recommending increasingly extreme content that exploits users' vulnerabilities.

What impact does YouTube's algorithm have on young users, according to the script?

-Young users are particularly susceptible to the dangers of YouTube's algorithm, which can lead them into conspiracy theories or harmful content. For example, a child watching a science video may end up exposed to content promoting the idea that the moon landing was fake or that vaccines cause illnesses.

What did YouTube say in response to the criticisms of its algorithm?

-In response to the criticisms, YouTube acknowledged that it had previously optimized its algorithm for watch time but said it has since shifted its focus to users' satisfaction with the content they watch, rather than simply maximizing the time they spend on the platform.

What other kinds of content does YouTube host besides extreme videos?

-Besides extreme content, YouTube also hosts educational and instructional videos, such as tutorials and explainers, which many users find helpful for practical purposes like learning how to fix something or understanding a complex topic.

What is the primary concern regarding YouTube's influence on democracy?

-The primary concern is that YouTube's recommendation algorithm can perpetuate misinformation, extremist views, and conspiracy theories, which can distort public opinion and harm democratic processes by radicalizing users and spreading divisive content.


Browse More Related Videos

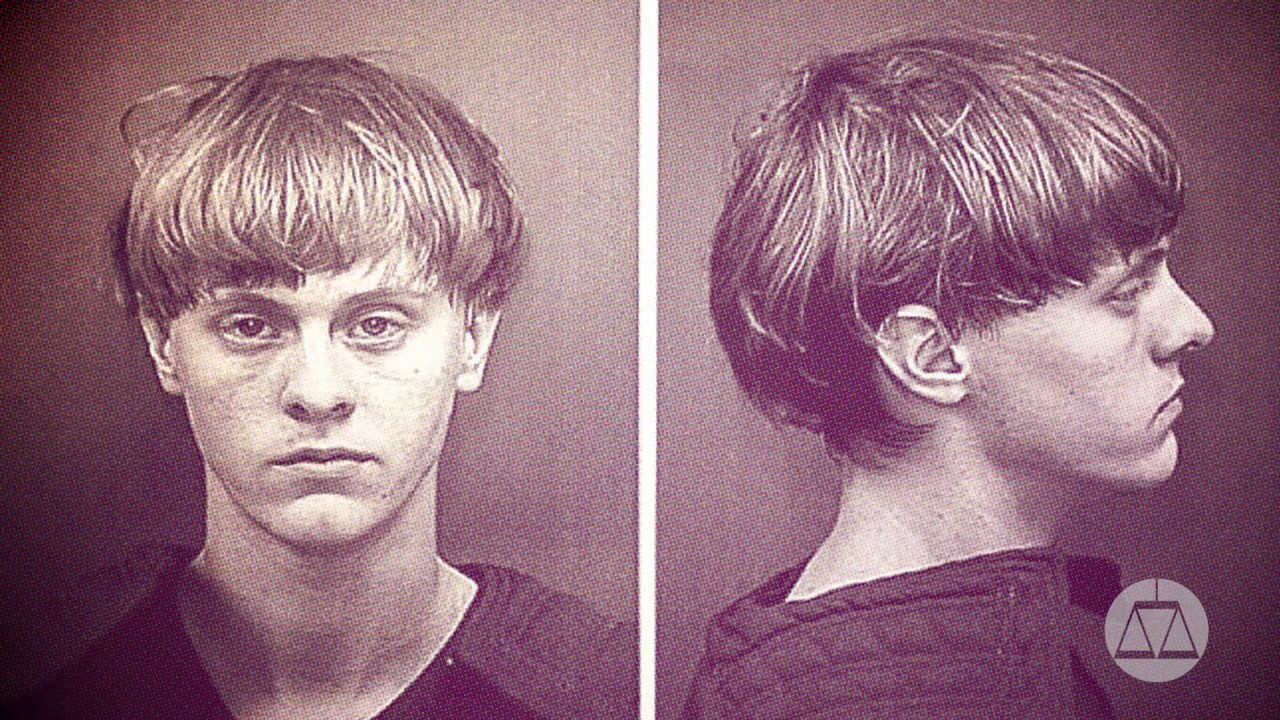

The Miseducation of Dylann Roof

Down The YouTube Rabbit Hole

The GIRL Who "HACKED" YOUTUBE... @chiaraavalentine

Is Humanity Getting DUMBER?! LGBT+ for Radical Muslims. Decolonizers for Empires. The UN for Aggression.

Top 5 FREE Ways To Promote Your YT Channel To GUARANTEE Views

Why Does YouTube Keep Recommending You Videos You’ve Already watched?