Spark Out of Memory Issue | Spark Memory Tuning | Spark Memory Management | Part 1

Summary

TLDRIn this video, we explore common out-of-memory issues in Apache Spark, focusing on both driver and executor memory problems. Driver issues often arise from operations like `collect()` and broadcast joins, while executor memory can be affected by high concurrency and large partitions. Solutions include adjusting driver memory, increasing YARN memory overhead, and balancing executor core assignments. Proper partitioning of data is also essential for optimizing memory usage. Understanding and addressing these issues will help prevent out-of-memory errors and improve Spark application performance.

Takeaways

- 😀 Out-of-memory (OOM) issues in Spark are commonly faced by developers, either at the driver or executor level.

- 😀 A common driver-side memory issue is caused by the `collect()` operation, which gathers large datasets onto the driver.

- 😀 Broadcasting large files in a broadcast join can cause the driver to run out of memory if the file exceeds the driver's capacity.

- 😀 To avoid driver memory issues, you can increase driver memory or reduce the size threshold for broadcast tables.

- 😀 Executor memory issues often occur due to insufficient Yarn memory overhead, which holds off-heap objects like internal Spark strings.

- 😀 Increasing Yarn memory overhead can help avoid OOM errors caused by off-heap memory limitations in the executor.

- 😀 High concurrency in executors, when too many cores are assigned to a single executor, can result in OOM errors.

- 😀 Assigning 4-5 cores per executor is recommended to ensure each core has enough memory to handle its task efficiently.

- 😀 Large partitions can cause executors to run out of memory while processing; reducing partition sizes can help mitigate this problem.

- 😀 Reducing the size of partitions by dividing large ones into smaller parts can prevent executor memory issues during data processing.

Q & A

What are the two main reasons Spark might run out of memory?

-Spark can run out of memory due to issues with either the driver or the executor. The driver can run out of memory when operations like `collect` or broadcast joins are performed, while executors can run out of memory due to large partitions, high concurrency, or insufficient Yarn memory overhead.

How does the `collect` operation cause the driver to run out of memory?

-The `collect` operation causes the driver to run out of memory by trying to gather all partitions of a large file stored across multiple machines, merging them into a single file. If the resulting file is too large, it exceeds the driver's memory capacity and leads to an out-of-memory issue.

What is the impact of a broadcast join on driver memory?

-In a broadcast join, if one file is large and another is small, Spark tries to broadcast the small file across all nodes. However, if the broadcasted file becomes too large and exceeds the driver's memory capacity, it can cause the driver to run out of memory.

How can you avoid driver memory issues when performing a broadcast join?

-You can avoid driver memory issues in a broadcast join by either increasing the driver's memory or reducing the threshold size for broadcasting, ensuring that only smaller tables are broadcasted.

What is Yarn memory overhead, and how does it contribute to out-of-memory issues on executors?

-Yarn memory overhead is the off-heap memory allocated for Spark's internal objects, including interned strings and language-specific objects (like Python or R objects). If this overhead is too small, it can cause executors to run out of memory, especially when handling a lot of Spark internal data or when running Spark jobs in languages like Python or R.

How can you address an out-of-memory issue caused by Yarn memory overhead?

-To address Yarn memory overhead issues, you should increase the size of the Yarn memory overhead, which is typically set to 10% of the total memory allocated to the executor. This helps accommodate larger Spark internal objects and language-specific objects.

What is the recommended number of cores to assign to each executor?

-It is recommended to assign 4 to 5 cores to each executor to ensure each core has enough memory to process its partitions. Assigning too many cores can cause memory issues due to insufficient memory per core.

How does high concurrency affect executor memory?

-High concurrency can cause executors to run out of memory when too many cores are assigned to each executor. This results in each core having less memory available to process its partitions, leading to memory overflow.

What happens when an executor encounters a large partition, and how can this be avoided?

-When an executor encounters a large partition, it may run out of memory due to the large amount of metadata and overhead created when reading and decompressing the data. This issue can be avoided by ensuring that partitions are of an appropriate size, ideally by dividing larger partitions into smaller ones.

How can partition size affect memory usage in Spark?

-Partition size affects memory usage because larger partitions require more memory to process. If a partition is too large, it may cause memory issues on the executor. To prevent this, partitions should be split into smaller, more manageable sizes.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Spark [Driver and Executor] Memory Management Deep Dive

What exactly is Apache Spark? | Big Data Tools

Ballooning - Georgia Tech - Advanced Operating Systems

Openstax Psychology - Ch8 - Memory

Learn Apache Spark in 10 Minutes | Step by Step Guide

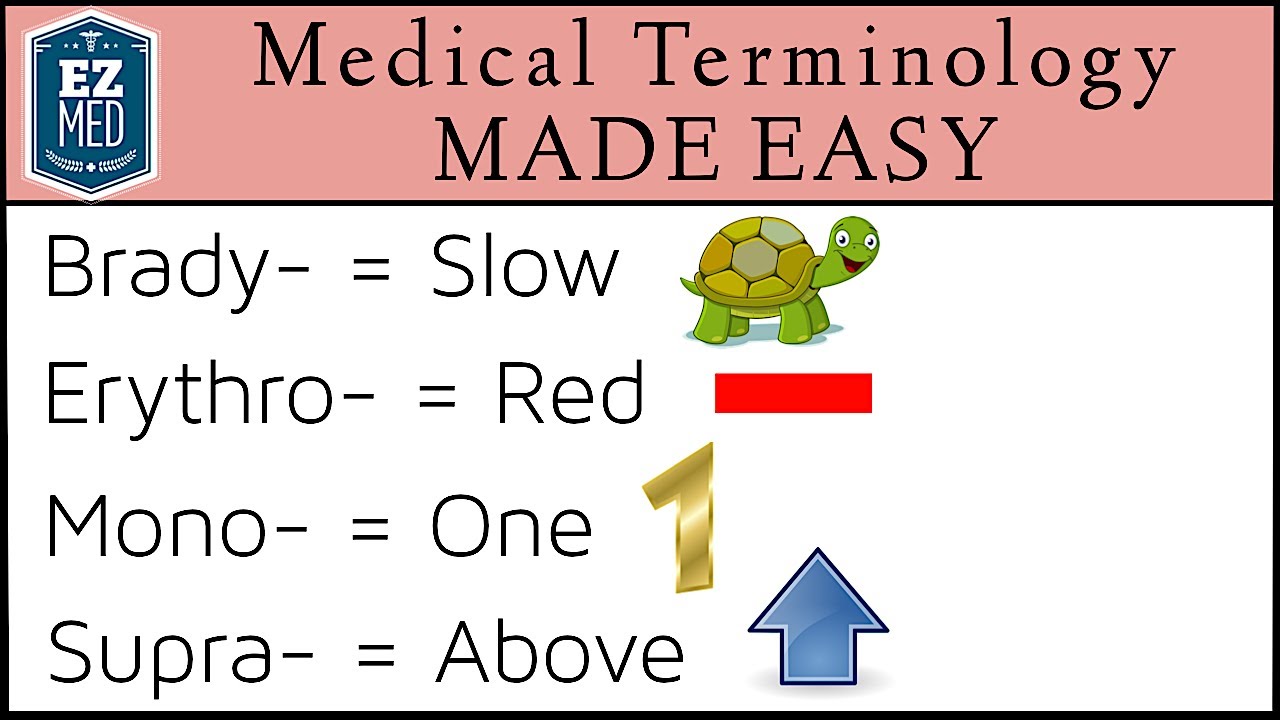

Medical and Nursing Terminology MADE EASY: Prefixes [Flashcard Tables]

5.0 / 5 (0 votes)