0. Introduction to Parallel Programming || OpenMP || MPI ||

Summary

TLDR: In this video, Joseph Sedan introduces parallel programming with C, focusing on the essentials of multi-core and distributed memory systems. Key concepts include breaking a problem down into smaller tasks that execute simultaneously, managing concurrency, and handling process communication and synchronization. The video presents MPI (Message Passing Interface) and OpenMP (Open Multi-Processing) as the two main APIs for parallel CPU computing, with MPI suited to distributed memory systems and OpenMP to shared-memory multi-core systems. The next video will explore communication techniques within multi-core CPUs using MPI.

Takeaways

- 😀 Parallel programming involves decomposing a problem into smaller tasks that can be executed simultaneously across multiple computing resources.

- 😀 The video assumes viewers have a basic understanding of Linux, C programming, and parallel computing concepts.

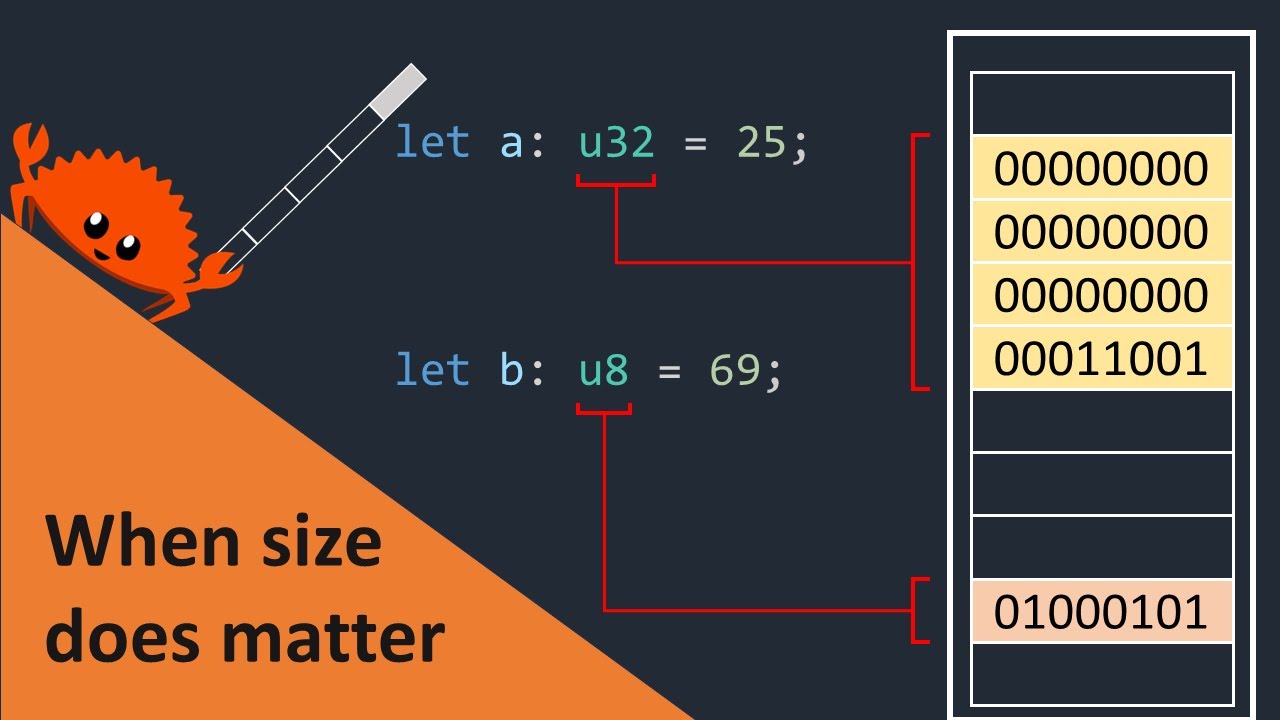

- 😀 One core or processor is assumed to handle only one process at a time, whether in a multi-core CPU or a distributed memory system.

- 😀 Parallel programming requires identifying which parts of your code can be executed concurrently to improve performance.

- 😀 Managing concurrency means arranging parallel tasks so that they actually run at the same time instead of stalling while they wait for other tasks to finish.

- 😀 Process communication is crucial when multiple processes or systems need to exchange data during parallel execution.

- 😀 Synchronization ensures the smooth integration of results from parallel tasks once they are completed.

- 😀 APIs like MPI (Message Passing Interface) and OpenMP (Open Multi-Processing) help address challenges in parallel programming for CPU-based systems.

- 😀 MPI enables communication between processes across distributed memory systems, connecting multiple machines over a network.

- 😀 OpenMP focuses on parallelism within a single machine, utilizing multi-threading capabilities to leverage multiple CPU cores.

- 😀 For parallel tasks that involve GPUs, the video names OpenCL, OpenGL, and CUDA (OpenCL and CUDA target general-purpose GPU computing, while OpenGL is primarily a graphics API), though this series focuses on CPU-based parallelism with MPI and OpenMP.

Q & A

What is parallel programming, as described in the video?

-Parallel programming is the process of breaking down a problem into smaller tasks that can be executed simultaneously using multiple computing resources.

What assumptions does the video make about the viewer's knowledge?

-The video assumes the viewer has some knowledge of Linux, C programming, and parallel computing concepts. It also assumes the viewer is familiar with multi-core processors and basic computing terminology.

What does the term 'concurrency' refer to in parallel programming?

-Concurrency in parallel programming refers to the challenge of ensuring that tasks run simultaneously without one task having to wait for another to complete before proceeding.

What is the role of 'process communication' in parallel programming?

-Process communication refers to how multiple processes, especially in a distributed system, can exchange data and ensure synchronization during execution.

Why is synchronization important in parallel programming?

-Synchronization ensures that all parallel tasks are properly coordinated and that the data shared between them is consistent, preventing race conditions or incorrect results.

What is MPI (Message Passing Interface) and what is its primary use?

-MPI is an API for parallelism between processes in a distributed memory model. Each process has its own memory, and processes exchange data by sending messages, including between systems connected over a network, which makes MPI well suited to multi-machine environments.

How does OpenMP differ from MPI?

-OpenMP is an API used for parallelism within a processor in a shared memory model. It focuses on multi-threading on a single system with multiple CPU cores, while MPI is for communication across distributed systems.

What does 'multi-threading' mean in the context of parallel programming?

-Multi-threading refers to running multiple threads (lightweight units of execution within a process that share its memory) concurrently on a single CPU, which lets a program use the multiple cores of modern processors for parallel computing.

What kind of systems does the OpenMP API target?

-The OpenMP API is designed for systems with multiple cores, where it helps parallelize tasks within a single machine using shared memory.

What kind of parallel computing does the video focus on?

-The video focuses on parallel CPU computing, specifically using MPI and OpenMP to parallelize tasks either within a processor or across distributed systems, primarily leveraging CPU resources.
