EfficientML.ai Lecture 6 - Quantization Part II (MIT 6.5940, Fall 2024)

Summary

TLDR: This lecture delves into quantization techniques for neural networks, focusing on the balance between model efficiency and accuracy. It discusses binary quantization, utilizing XOR and pop count operations for faster computations, while highlighting trade-offs in accuracy. Various strategies, including mixed-precision quantization and layer sensitivity analysis, are presented to optimize performance. The importance of hardware-aware quantization is emphasized, showcasing how automated approaches can enhance model design by considering latency and energy efficiency. Overall, the lecture provides insights into improving neural network implementation in resource-constrained environments.

Takeaways

- 😀 Quantization in neural networks reduces the precision of weights and activations to improve computational efficiency.

- 😀 Binary quantization replaces multiply-accumulate with bitwise XNOR (or, equivalently, XOR) and pop count, which simplifies computation and reduces memory usage.

- 😀 The trade-off between reduced precision and model accuracy is critical; achieving a balance is essential for optimal performance.

- 😀 Mixed precision quantization allows different layers of a neural network to use varying levels of precision based on their sensitivity.

- 😀 Automated search methods can optimize quantization strategies, improving latency and energy efficiency in hardware implementations.

- 😀 The straight-through estimator passes gradients through the non-differentiable quantization step, which makes it possible to train a quantized model and recover accuracy lost to quantization.

- 😀 Binary and ternary quantization represent weights with fewer bits, resulting in significant reductions in memory footprint and computation time.

- 😀 Pop count counts the set bits in a binary word, so a binary dot product reduces to one bitwise operation followed by one count.

- 😀 Understanding the distribution of weights can inform thresholds for quantization, allowing for more effective scaling and accuracy retention.

- 😀 Overall, leveraging a combination of quantization techniques and hardware-aware strategies can lead to significant improvements in neural network performance.

Q & A

What is the primary focus of the lecture on quantization in neural networks?

- The lecture primarily focuses on various quantization techniques used to optimize neural network performance, particularly in terms of reducing memory usage and computation requirements while maintaining accuracy.

How does the lecture define binary and ternary quantization?

- Binary quantization represents weights and activations using two values (typically -1 and +1), while ternary quantization includes a third value, zero, to represent weights that are near zero, allowing for a more nuanced representation of the model.

What operations are highlighted as efficient for hardware implementation in binary quantization?

- The lecture highlights XOR (or, equivalently, XNOR) and pop count operations as efficient replacements for traditional multiplication and accumulation, significantly reducing computational complexity.

What is the significance of the pop count operation in the context of binary neural networks?

- Pop count counts the number of ones in a binary word. Applied to the output of the bitwise operation, it yields the dot product of two binary vectors at low hardware cost.
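The bitwise dot product described above can be sketched as follows. This is a minimal illustration, not the lecture's code: it assumes +1 is encoded as bit 1 and -1 as bit 0, and the helper names `pack_bits` and `binary_dot` are hypothetical.

```python
def pack_bits(values):
    """Pack a list of +1/-1 values into an int (+1 -> bit 1, -1 -> bit 0)."""
    word = 0
    for i, v in enumerate(values):
        if v == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two length-n {-1, +1} vectors given as packed bits."""
    mask = (1 << n) - 1
    xnor = ~(a_bits ^ b_bits) & mask   # bit is 1 where the two signs agree
    matches = bin(xnor).count("1")     # pop count
    return 2 * matches - n             # (#agreements) - (#disagreements)


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]
reference = sum(x * y for x, y in zip(a, b))  # ordinary multiply-accumulate
result = binary_dot(pack_bits(a), pack_bits(b), len(a))
assert result == reference
```

With XOR instead of XNOR the count gives the disagreements, so the same value is `n - 2 * popcount(a ^ b)`.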

What strategies does the lecture suggest for improving the accuracy of quantized models?

- To improve accuracy, the lecture suggests techniques such as using learnable scaling factors, mixed-precision quantization, and fine-tuning with quantization-aware training.
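Quantization-aware training relies on the straight-through estimator to get a gradient past the quantizer. The toy sketch below shows the idea for a single binarized weight. The function names, the clipping range, the learning rate, and the data are all assumptions made for illustration; clipping the gradient where |w| > 1 is one common variant, not necessarily the one used in the lecture.

```python
def binarize(w):
    """Forward pass: map a real-valued latent weight to -1 or +1."""
    return 1.0 if w >= 0 else -1.0


def ste_grad(w, upstream_grad, clip=1.0):
    """Backward pass: treat binarize() as the identity, but block the
    gradient when the latent weight lies outside [-clip, clip]."""
    return upstream_grad if abs(w) <= clip else 0.0


# Toy problem: learn w so that binarize(w) * x matches the target y.
x, y = 2.0, -2.0
w = 0.3        # latent full-precision weight, updated by the optimizer
lr = 0.05      # hypothetical learning rate
for _ in range(20):
    q = binarize(w)
    pred = q * x
    grad_q = 2 * (pred - y) * x    # d(squared error) / dq
    w -= lr * ste_grad(w, grad_q)  # gradient w.r.t. q is reused for w

final_sign = binarize(w)
```

The latent weight stays in full precision during training; only its binarized value is used in the forward pass and at inference.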

What challenges are associated with lower precision in quantization, according to the lecture?

- Lower precision can lead to a significant degradation in model accuracy, particularly when transitioning from floating-point representations to binary or ternary formats.

How does mixed-precision quantization differ from uniform quantization?

- Mixed-precision quantization allows different layers of a neural network to use varying bit-widths based on their sensitivity to accuracy, whereas uniform quantization applies the same bit-width across the entire model.
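To make the difference concrete, the sketch below compares the weight storage of a uniform 8-bit assignment with a mixed-precision one. The layer names, parameter counts, and bit-widths are hypothetical values chosen only for the arithmetic.

```python
def weight_bits(layer_params, bit_widths):
    """Total bits needed to store all weights under a per-layer bit-width plan."""
    return sum(layer_params[name] * bit_widths[name] for name in layer_params)


# Hypothetical model: parameter count per layer.
layer_params = {"conv1": 1_000, "conv2": 50_000, "fc": 200_000}

uniform = {name: 8 for name in layer_params}   # same bit-width everywhere
mixed = {"conv1": 8, "conv2": 6, "fc": 4}      # fewer bits for the large layers

uniform_bits = weight_bits(layer_params, uniform)
mixed_bits = weight_bits(layer_params, mixed)
savings = 1 - mixed_bits / uniform_bits        # fraction of storage saved
```

Whether the large layers can actually tolerate fewer bits depends on their sensitivity, which has to be measured rather than assumed.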

What role does sensitivity analysis play in quantization, as discussed in the lecture?

- Sensitivity analysis helps identify which layers are more sensitive to quantization effects, allowing for targeted application of higher precision where necessary and optimizing overall model performance.
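A rough sketch of the idea follows. It is a simplification: a real sensitivity analysis would quantize one layer at a time and measure the drop in validation accuracy, whereas this example uses the mean squared quantization error of the weights as a stand-in. The function names, candidate bit-widths, tolerance, and weights are all hypothetical.

```python
def quantize_uniform(weights, bits):
    """Symmetric uniform quantization; returns the dequantized values."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def quant_error(weights, bits):
    """Mean squared error introduced by quantizing to the given bit-width."""
    q = quantize_uniform(weights, bits)
    return sum((w - v) ** 2 for w, v in zip(weights, q)) / len(weights)


def assign_bits(layers, candidates=(2, 4, 8), tolerance=1e-3):
    """Give each layer the smallest candidate bit-width within tolerance,
    falling back to the largest candidate if none qualifies."""
    plan = {}
    for name, weights in layers.items():
        plan[name] = max(candidates)
        for bits in sorted(candidates):
            if quant_error(weights, bits) <= tolerance:
                plan[name] = bits
                break
    return plan


# Hypothetical layers: one with a narrow weight range, one with a wide range.
layers = {
    "narrow": [i / 1000 for i in range(-10, 11)],
    "wide": [i / 10 for i in range(-30, 31)],
}
plan = assign_bits(layers)
```

Here the wide-range layer ends up with more bits than the narrow one, which is the kind of per-layer decision mixed-precision quantization automates.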

What is the empirical approach suggested for determining the threshold in ternary quantization?

- The empirical approach takes the mean absolute value of the weights and multiplies it by a factor (0.7) to obtain a threshold. Weights above the threshold become positive, weights below its negative become negative, and the rest are set to zero.
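The thresholding rule can be sketched as below. The 0.7 factor comes from the summary above; the function name `ternarize`, the sample weights, and the choice of scale (mean magnitude of the weights that survive thresholding) are assumptions added for illustration.

```python
def ternarize(weights, factor=0.7):
    """Map weights to {-1, 0, +1} using threshold = factor * mean(|w|)."""
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = factor * mean_abs
    codes = [1 if w > threshold else -1 if w < -threshold else 0
             for w in weights]
    # Assumed scale: average magnitude of the weights that were not zeroed.
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    scale = sum(kept) / len(kept) if kept else 0.0
    return codes, threshold, scale


weights = [0.9, -0.05, 0.4, -0.7, 0.1, -0.3]   # hypothetical layer weights
codes, threshold, scale = ternarize(weights)
approx = [scale * c for c in codes]             # dequantized approximation
```

Small weights such as -0.05 and 0.1 fall inside the threshold and are zeroed, while the remaining weights keep only their sign and share one scale.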

What overall conclusion does the lecture reach regarding the trade-offs in quantization?

- The lecture concludes that while reducing precision can decrease memory usage and computational costs, there is a critical balance to be struck to avoid significant accuracy loss, advocating for a thoughtful application of quantization techniques.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

EfficientML.ai Lecture 5 - Quantization Part I (MIT 6.5940, Fall 2024)

EfficientML.ai Lecture 4 - Pruning and Sparsity Part II (MIT 6.5940, Fall 2024)

EfficientML.ai Lecture 2 - Basics of Neural Networks (MIT 6.5940, Fall 2024)

Backpropagation in Neural Networks | Back Propagation Algorithm with Examples | Simplilearn

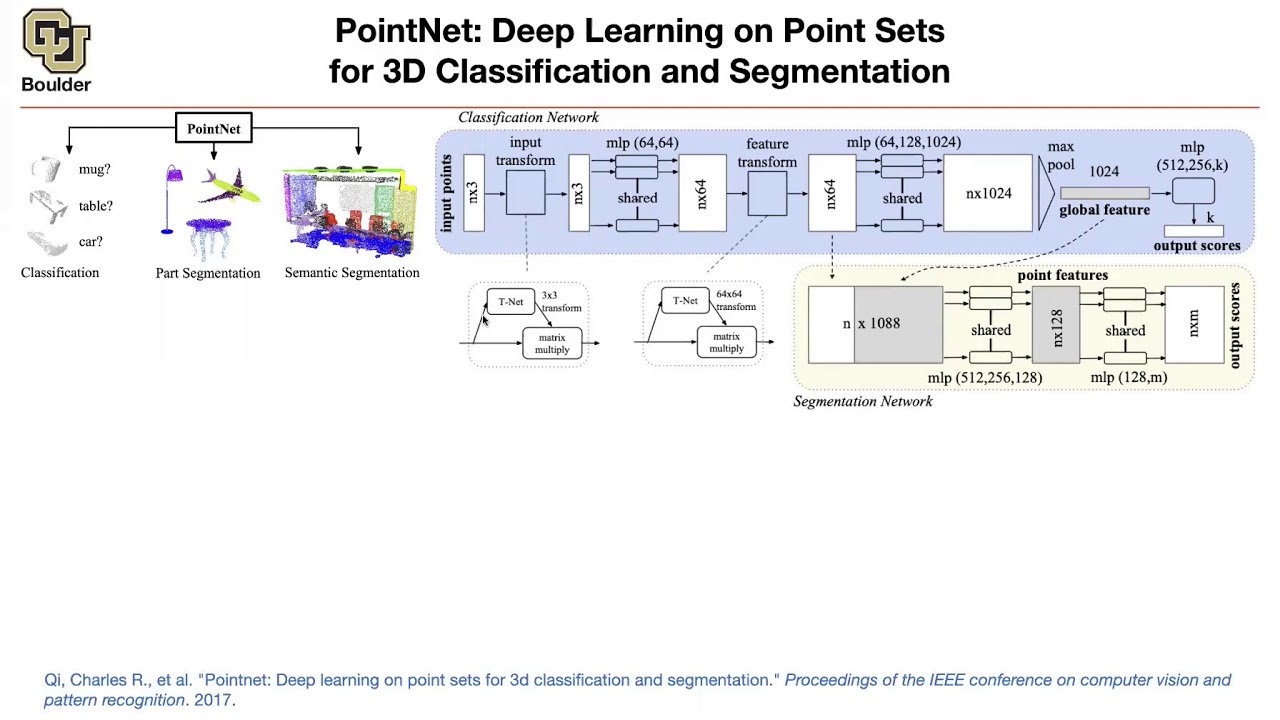

PointNet | Lecture 43 (Part 1) | Applied Deep Learning

NDSS 2024 - Timing Channels in Adaptive Neural Networks

5.0 / 5 (0 votes)