75 Regularization Methods - Early Stopping, Dropout, and Data Augmentation for Deep Learning

Summary

TLDR: This video discusses regularization techniques that combat overfitting in neural networks. Key methods include early stopping, which halts training once validation loss begins to rise, and dropout, which randomly sets a fraction of units to zero during training to promote robust learning. Data augmentation generates new training samples through techniques such as image transformations and GANs, which helps the model generalize. Meta-learning uses one neural network to optimize another, tuning its hyperparameters and architecture. Together, these strategies help build models that perform well on unseen data.

Takeaways

- 😀 Early stopping is a validation-based strategy that halts training when performance on a held-out validation set starts to decline, preventing overfitting.

- 📈 Monitoring validation loss during training helps identify the optimal point to stop training before performance worsens.

- 🚫 A large gap between training and validation errors (high variance) indicates overfitting, making early stopping crucial.

- 🔄 Dropout is a regularization technique that randomly sets a fraction of units (neuron activations) to zero during training to prevent reliance on specific neurons.

- ⚙️ The dropout rate, typically between 0.2 and 0.5, controls what fraction of units is dropped to enhance model generalization.

- 📊 Data augmentation increases the number of training samples by generating new data from existing samples, which helps reduce overfitting.

- 📸 Geometric transformations, such as flipping and rotating images, are common methods used in data augmentation.

- ✂️ Cutout and mixing images are additional techniques that improve model robustness by altering training data.

- 🔍 Feature space augmentation involves creating new features from existing data to enrich the dataset.

- 🤖 Generative Adversarial Networks (GANs) can be utilized for augmentation by generating new images from existing ones, enhancing data variety.

Q & A

What is early stopping in neural networks?

-Early stopping is a regularization technique that halts training when the model's performance on a validation dataset starts to degrade, preventing overfitting.

How does early stopping determine when to stop training?

-It monitors the validation loss during training. Once the validation loss reaches its minimum and starts increasing, training is stopped at that point.

What is the significance of the dotted line in the validation loss plot?

-The dotted line indicates the point where training should stop because the validation loss has started to increase, suggesting overfitting.
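The stopping rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the video's own code: the `patience` and `min_delta` parameters are assumptions added here, since practical implementations usually wait a few epochs before stopping so that a single noisy epoch does not end training.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    `patience` and `min_delta` are illustrative assumptions, not from the video:
    training stops once the loss has failed to improve by more than `min_delta`
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New minimum: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch

    # The loss never stalled for `patience` epochs; training ran to the end.
    return len(val_losses) - 1, best_epoch


# Hypothetical validation losses: they fall, reach a minimum, then rise.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_epoch)
```

In a real training loop the model weights from `best_epoch` would typically be saved and restored, so the final model is the one at the validation minimum rather than the one at the stopping point.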

What is dropout in the context of neural networks?

-Dropout is a technique where a random fraction of units (neuron activations) in a neural network is set to zero during training, helping to prevent the model from memorizing training data.

What is the typical range for the dropout rate?

-The dropout rate typically ranges from 0.2 to 0.5, which controls the proportion of units set to zero.
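As a rough sketch of how the dropout rate is applied, the function below implements "inverted" dropout in its usual form, where the random mask is applied to a layer's activations. The function name, the `rng` argument, and the rescaling by `1 / (1 - rate)` are assumptions chosen for illustration; the rescaling is a common convention that keeps the expected activation unchanged so that nothing needs adjusting at inference time.

```python
import random


def dropout(activations, rate=0.5, training=True, rng=None):
    """Apply inverted dropout to a list of activations (illustrative sketch).

    Each value is zeroed with probability `rate`; survivors are scaled by
    1 / (1 - rate). With training=False the input is returned unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)

    rng = rng or random.Random()
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


# Hypothetical layer output; roughly 20% of the values become zero.
layer_output = [1.0] * 10
print(dropout(layer_output, rate=0.2, rng=random.Random(0)))
print(dropout(layer_output, rate=0.2, training=False))
```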

How does data augmentation help reduce overfitting?

-Data augmentation generates new training samples from existing data, increasing the diversity of the training set and helping the model generalize better.

What types of transformations are involved in data augmentation?

-Data augmentation can involve geometric transformations such as flipping, rotating, and cropping images, as well as mixing images together.
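The transformations named above can be illustrated on a tiny image stored as a nested list of pixel values. This is a minimal sketch under simplifying assumptions: the helper names are hypothetical, images are single-channel, and real pipelines would normally use an image library and apply the transformations at random during training.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def center_crop(image, height, width):
    """Keep a height x width window from the middle of the image."""
    top = (len(image) - height) // 2
    left = (len(image[0]) - width) // 2
    return [row[left:left + width] for row in image[top:top + height]]


def mix_images(image_a, image_b, weight=0.5):
    """Blend two same-sized images pixel by pixel."""
    return [[weight * a + (1.0 - weight) * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(image_a, image_b)]


image = [[1, 2, 3],
         [4, 5, 6]]

print(horizontal_flip(image))              # [[3, 2, 1], [6, 5, 4]]
print(rotate_90_clockwise(image))          # [[4, 1], [5, 2], [6, 3]]
print(center_crop(image, 2, 1))            # [[2], [5]]
print(mix_images([[0, 2]], [[4, 6]], 0.5)) # [[2.0, 4.0]]
```

When two images are mixed, methods such as mixup also blend the two labels with the same weight, so the target stays consistent with the blended input.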

What role do Generative Adversarial Networks (GANs) play in data augmentation?

-GANs can be used to generate synthetic images from existing data, thereby increasing the number of training samples and enhancing model training.

What is feature space augmentation?

-Feature space augmentation involves creating new features from existing data points, such as deriving age from the date of birth, to increase the feature set available for training.
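The age-from-date-of-birth example can be sketched as follows. The record layout and the field names `date_of_birth` and `age` are hypothetical, chosen only to illustrate deriving a new feature from an existing one.

```python
from datetime import date


def age_in_years(date_of_birth, on_date):
    """Whole years elapsed between date_of_birth and on_date."""
    had_birthday = (on_date.month, on_date.day) >= (
        date_of_birth.month, date_of_birth.day)
    return on_date.year - date_of_birth.year - (0 if had_birthday else 1)


def add_age_feature(records, on_date):
    """Return copies of the records with a derived 'age' field added."""
    return [{**r, "age": age_in_years(r["date_of_birth"], on_date)}
            for r in records]


# Hypothetical records; the reference date is fixed so results are repeatable.
records = [{"date_of_birth": date(1990, 6, 15)},
           {"date_of_birth": date(2000, 1, 1)}]
enriched = add_age_feature(records, on_date=date(2024, 6, 14))
print([r["age"] for r in enriched])  # [33, 24]
```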

How does meta-learning contribute to regularization in neural networks?

-Meta-learning optimizes one neural network by using another, adjusting hyperparameters and enhancing the network's architecture to improve the overall performance of the model.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Machine Learning Tutorial Python - 17: L1 and L2 Regularization | Lasso, Ridge Regression

Neural Networks Demystified [Part 7: Overfitting, Testing, and Regularization]

Deep Learning(CS7015): Lec 1.5 Faster, higher, stronger

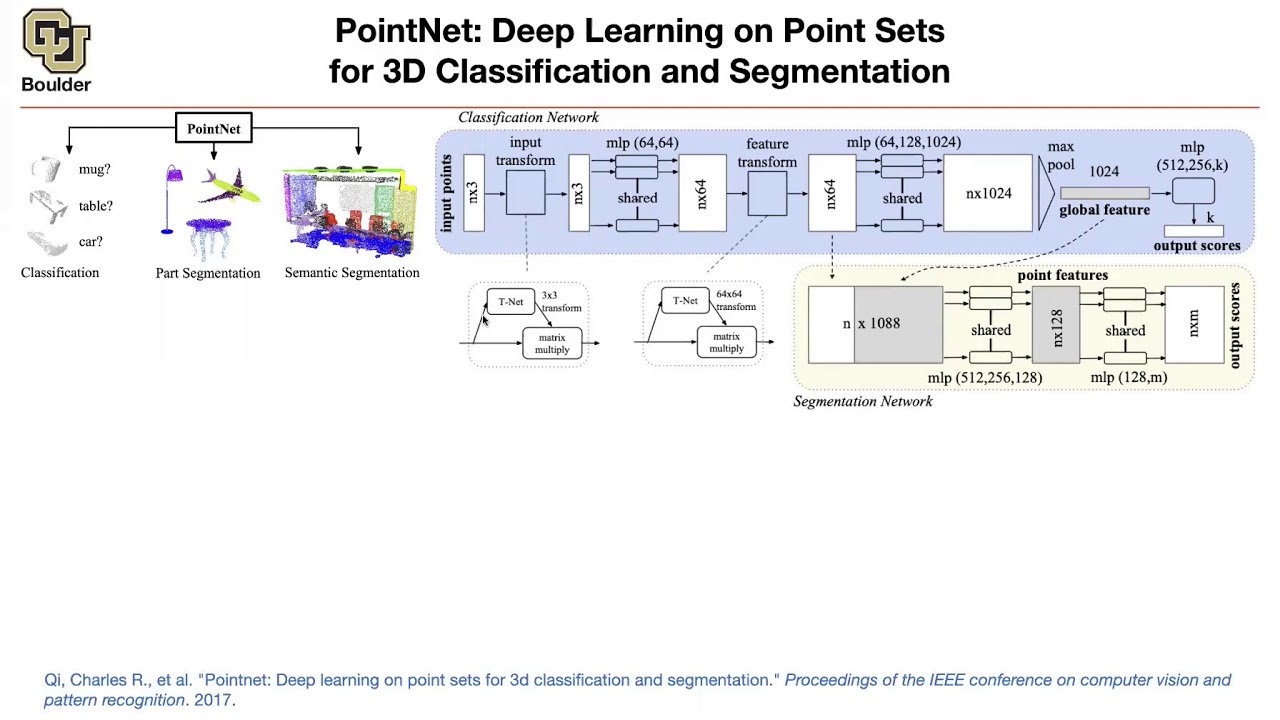

PointNet | Lecture 43 (Part 1) | Applied Deep Learning

How to Build Classification Models (Weka Tutorial #2)

Apa itu Overfitting dan Underfitting dan Solusinya!

5.0 / 5 (0 votes)