Week 5 -- Capsule 1 -- From linear classification to Neural Networks

Summary

TLDRCe script introduit les réseaux de neurones en commençant par la classification linéaire et en montrant comment passer à un réseau de neurones pour gérer les données non séparables linéairement. Il explique comment combiner plusieurs classifieurs linéaires pour obtenir une décision non linéaire. Le script utilise un exemple graphique avec des neurones pour illustrer la manière dont les réseaux de neurones fonctionnent, comment ils sont constitués de couches d'entrée, de couches cachées et de sortie, et comment ils peuvent être utilisés pour différentes tâches d'apprentissage supervisé, en soulignant leur rôle clé dans la révolution du deep learning.

Takeaways

- 🧠 Les réseaux neuronaux sont une extension des modèles de classification linéaire permettant de gérer des données non séparables linéairement.

- 🔍 La classification linéaire utilise une régression linéaire avec un seuil pour séparer les classes, mais elle a des limites avec les données non linéaires.

- 🤖 Pour traiter les données non linéaires, on peut combiner plusieurs classifieurs linéaires pour obtenir une décision non linéaire.

- 🔗 Chaque classifieur linéaire est représenté par un modèle avec des poids et un biais, et leur combinaison forme la base d'un réseau neuronal.

- 📈 Les réseaux neuronaux peuvent être visualisés graphiquement avec des noeuds (ou neurones) qui reçoivent des signaux et effectuent des calculs.

- 💡 Les neurones effectuent un produit scalaire entre les poids et les entrées, suivi d'une fonction d'activation non linéaire pour produire une sortie.

- 🌐 Les réseaux neuronaux sont composés de couches, y compris une couche d'entrée, une ou plusieurs couches cachées, et une couche de sortie.

- 🔑 Le nombre de couches et la taille de chaque couche sont des hyperparamètres qui influencent la capacité du modèle à apprendre des données complexes.

- 📊 Les réseaux neuronaux sont flexibles et peuvent être adaptés à de nombreuses tâches d'apprentissage supervisé, comme la régression, la classification et l'estimation de densité.

- ⏳ L'essor des réseaux neuronaux est au cœur de la révolution du deep learning, avec des progrès considérables au cours des dix dernières années dans la capacité à résoudre des problèmes complexes.

Q & A

Quel est le problème typique abordé dans le script?

-Le script aborde le problème de classification linéaire binaire, où l'objectif est de classer des données en deux classes distinctes, représentées par des points verts et bleus dans un espace bidimensionnel.

Comment est défini le modèle de régression linéaire dans le script?

-Le modèle de régression linéaire est défini comme un produit scalaire entre w et x, plus un terme de biais w0, qui sert de base pour établir une frontière de décision.

Quelle est la limitation du modèle de régression linéaire mentionnée dans le script?

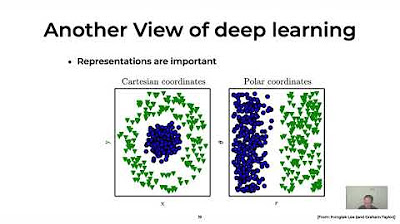

-La limitation du modèle de régression linéaire est qu'il ne peut obtenir zéro erreur que si les données sont séparables linéairement. Il échoue avec des données non séparables linéairement.

Quelle est la solution proposée pour traiter les données non séparables linéairement?

-Pour traiter les données non séparables linéairement, le script suggère d'utiliser des réseaux neuronaux qui combinent la décision de plusieurs classifieurs linéaires pour obtenir une frontière de décision non linéaire.

Comment les deux classifieurs linéaires sont-ils combinés pour former un réseau neuronal?

-Les deux classifieurs linéaires sont combinés en utilisant leurs sorties respectives comme entrées pour un troisième modèle, qui est également un classifieur linéaire, pour prendre une décision finale.

Quels sont les éléments clés d'un neurone dans un réseau neuronal?

-Les éléments clés d'un neurone dans un réseau neuronal incluent les connexions associées aux poids, le sommet de la fonction d'activation non linéaire, et la capacité de calculer une sortie basée sur les entrées.

Pourquoi les réseaux neuronaux sont-ils considérés comme des modèles flexibles?

-Les réseaux neuronaux sont considérés comme des modèles flexibles car ils permettent d'ajouter des neurones, des couches et même différents types de connexions pour créer des modèles plus puissants capables de modéliser divers types de données.

Quels sont les types de tâches supervisées auxquelles les réseaux neuronaux sont bons?

-Les réseaux neuronaux sont particulièrement efficaces pour des tâches supervisées telles que la régression, la classification et l'estimation de densité.

Quel est le lien entre les réseaux neuronaux et la 'révolution de l'apprentissage profond'?

-Les réseaux neuronaux sont au cœur de la 'révolution de l'apprentissage profond' car ils sont les modèles derrière les techniques d'apprentissage profond, qui ont permis de réaliser des progrès considérables dans le domaine de l'intelligence artificielle.

Quelle est la signification des couches dans un réseau neuronal?

-Dans un réseau neuronal, les couches représentent les différentes étapes de traitement des informations. Il y a généralement une couche d'entrée, une ou plusieurs couches cachées, et une couche de sortie, chacune contenant un certain nombre de neurones.

Outlines

此内容仅限付费用户访问。 请升级后访问。

立即升级Mindmap

此内容仅限付费用户访问。 请升级后访问。

立即升级Keywords

此内容仅限付费用户访问。 请升级后访问。

立即升级Highlights

此内容仅限付费用户访问。 请升级后访问。

立即升级Transcripts

此内容仅限付费用户访问。 请升级后访问。

立即升级浏览更多相关视频

Week 3 -- Capsule 2 -- Linear Classification

Week 5 -- Capsule 3 -- Learning representations

RÉSEAU DE NEURONES (2 COUCHES) - DEEP LEARNING 7

Qu'est-ce que les réseaux de communication ? Comment ça marche Internet ? 🤔🌐

Le deep learning

33 - La mémoire est une question de neurones - Magistère mémorisation (2/8)

5.0 / 5 (0 votes)