Logistic Regression Part 2 | Perceptron Trick Code

Summary

TLDR: In this YouTube tutorial, the presenter continues a series on logistic regression, following up on the previous video's discussion of the perceptron trick. They convert the algorithm into code and use a dataset to illustrate the classification process. The video explains the data plotting, the algorithm's logic, and how to interpret the resulting weights and intercept. The presenter also covers the limitations of the perceptron trick and compares it with logistic regression, which places the decision boundary more symmetrically between the classes and therefore tends to generalize better.

Takeaways

- 🧑‍🏫 The video is focused on teaching logistic regression and contrasts it with the Perceptron algorithm, which was covered in the previous video.

- 💻 The speaker has already written code implementing the algorithm, centered on classifying a dataset using logistic regression.

- 📊 A classification dataset was generated and visualized, with inputs consisting of two columns and outputs represented by two classes (0 and 1), color-coded as blue and green.

- 🛠️ A core function, called `scan`, was created to process the data, returning weights and intercepts after running the algorithm.

- 📝 The code involves adding a bias term to the input features, creating a weight matrix, and updating it iteratively using a loop for 1,000 iterations.

- 🎯 The algorithm is demonstrated by selecting random samples from the dataset, computing the dot product of the weights and each sample, and updating the weights based on the classification result.

- 🔍 The video demonstrates how Perceptron updates weights based on misclassified points, while logistic regression continues improving the classification boundary until an optimal solution is found.

- 🚩 The speaker highlights the limitations of the Perceptron algorithm: it stops at the first line that separates the classes, so it may not find the best line even when the data is linearly separable.

- 🔄 An animation is shown in the video to demonstrate how the Perceptron algorithm adjusts the decision boundary as more misclassified points are identified.

- 💡 Logistic regression, unlike Perceptron, seeks a decision boundary that is symmetrically placed between the two classes, resulting in better generalization and less overfitting.
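
The loop described in the takeaways above can be sketched roughly as follows. This is a minimal illustration, not the presenter's code: it uses only the Python standard library, the function names `perceptron_trick` and `predict` are hypothetical (the video's function is reported as `scan`), and the zero initial weights, the learning rate of 0.1, the fixed seed, and the hand-made dataset are all assumptions. Only the bias term, the random sampling, and the 1,000 iterations come from the summary.

```python
import random


def perceptron_trick(X, y, iterations=1000, lr=0.1, seed=0):
    """Sketch of the perceptron trick; returns (intercept, coefficients).

    X is a list of feature rows and y a list of 0/1 labels.
    lr and seed are illustrative assumptions, not values from the video.
    """
    rng = random.Random(seed)
    # Prepend a constant 1 to every row so the intercept is learned as weights[0].
    rows = [[1.0] + list(row) for row in X]
    # Assumption: start from all-zero weights.
    weights = [0.0] * len(rows[0])

    for _ in range(iterations):
        i = rng.randrange(len(rows))  # pick one random sample
        z = sum(w * x for w, x in zip(weights, rows[i]))  # dot product
        y_hat = 1 if z > 0 else 0  # step function
        error = y[i] - y_hat  # 0 when the point is classified correctly
        weights = [w + lr * error * x for w, x in zip(weights, rows[i])]

    return weights[0], weights[1:]


def predict(intercept, coef, row):
    """Classify one row with the learned line (hypothetical helper)."""
    z = intercept + sum(w * x for w, x in zip(coef, row))
    return 1 if z > 0 else 0


# Tiny hand-made, linearly separable dataset (hypothetical data).
X = [[-2.0, -1.0], [-1.0, -2.0], [-1.5, -1.5], [1.0, 2.0], [2.0, 1.0], [1.5, 1.5]]
y = [0, 0, 0, 1, 1, 1]

intercept, coef = perceptron_trick(X, y)
predictions = [predict(intercept, coef, row) for row in X]
print(intercept, coef, predictions)
```

Because the update is zero for every correctly classified point, the weights stop changing as soon as all points fall on the right side of the line, wherever that line happens to be.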

Q & A

What is the main topic discussed in the video?

-The video discusses logistic regression and how to implement the Perceptron algorithm in code, focusing on classifying data points.

What was covered in the previous video?

-The previous video covered the Perceptron Trick, which is a method used in machine learning for classification.

What is the dataset used in this video?

-The dataset is a two-dimensional classification dataset with input features represented by two columns and target values being either 1 or 0.

How are the data points visually represented in the plot?

-The data points are represented by different colors: green for class 1 and blue for class 0.

What is the function created in the video used for?

-The function, named `scan`, takes input data (X and Y) and returns the weights and intercepts needed for classification.

What does the algorithm do after generating weights?

-The algorithm selects a random data point and performs classification, updating the weights if the data point is misclassified.

How is misclassification handled in the Perceptron Trick?

-If a point is misclassified, the algorithm updates the weights to adjust the decision boundary until all points are correctly classified.
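
One common way to write this update is shown below. The summary does not state the formula, so treat it as the standard textbook form rather than the presenter's exact notation; the learning rate $\eta$ and the convention of folding the bias into $\mathbf{x}_i$ as a leading 1 are assumptions.

```latex
\hat{y}_i =
\begin{cases}
1 & \text{if } \mathbf{w}^\top \mathbf{x}_i > 0 \\
0 & \text{otherwise}
\end{cases}
\qquad
\mathbf{w} \leftarrow \mathbf{w} + \eta \,(y_i - \hat{y}_i)\, \mathbf{x}_i
```

When the point is classified correctly, $y_i - \hat{y}_i = 0$ and the weights do not change. A class 1 point predicted as 0 adds $\eta\,\mathbf{x}_i$ to the weights, and a class 0 point predicted as 1 subtracts it.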

What is the key difference between Perceptron and Logistic Regression discussed?

-Perceptron stops updating the decision boundary once all points are correctly classified, while Logistic Regression continues optimizing to find the best model by minimizing error, leading to a more symmetric and generalized boundary.

What is the issue with the Perceptron Trick as discussed in the video?

-The Perceptron Trick might not find the best decision boundary because it only focuses on correctly classifying points, ignoring further optimization that could improve the model's generalization.

How does Logistic Regression improve over the Perceptron Trick?

-Logistic Regression continues to optimize the decision boundary even after points are classified correctly, ensuring better generalization and performance on new data.
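
The difference can be made concrete with a single update step. The sketch below assumes the logistic regression update is the standard gradient step for log loss, where a sigmoid replaces the step function; the summary does not spell this out, and the sample, weights, and learning rate are hypothetical values chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def step(z):
    return 1 if z > 0 else 0


# One hypothetical sample (bias term prepended) that the current weights
# already classify correctly: z > 0 and the label is 1.
x = [1.0, 2.0, 1.0]
label = 1
weights = [0.1, 0.2, 0.1]
lr = 0.1  # illustrative learning rate

z = sum(w * xi for w, xi in zip(weights, x))

# Perceptron trick: the error is exactly 0 for a correctly classified point.
perceptron_error = label - step(z)

# Sigmoid-based update: the error is small but non-zero, so the boundary
# keeps moving away from the point.
sigmoid_error = label - sigmoid(z)

perceptron_weights = [w + lr * perceptron_error * xi for w, xi in zip(weights, x)]
sigmoid_weights = [w + lr * sigmoid_error * xi for w, xi in zip(weights, x)]

print(perceptron_weights)
print(sigmoid_weights)
```

The perceptron weights come out unchanged, while the sigmoid-based weights shift slightly even though the point was already on the correct side.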

Browse More Related Videos

Machine Learning Tutorial Python - 8 Logistic Regression (Multiclass Classification)

Machine Learning Tutorial Python - 8: Logistic Regression (Binary Classification)

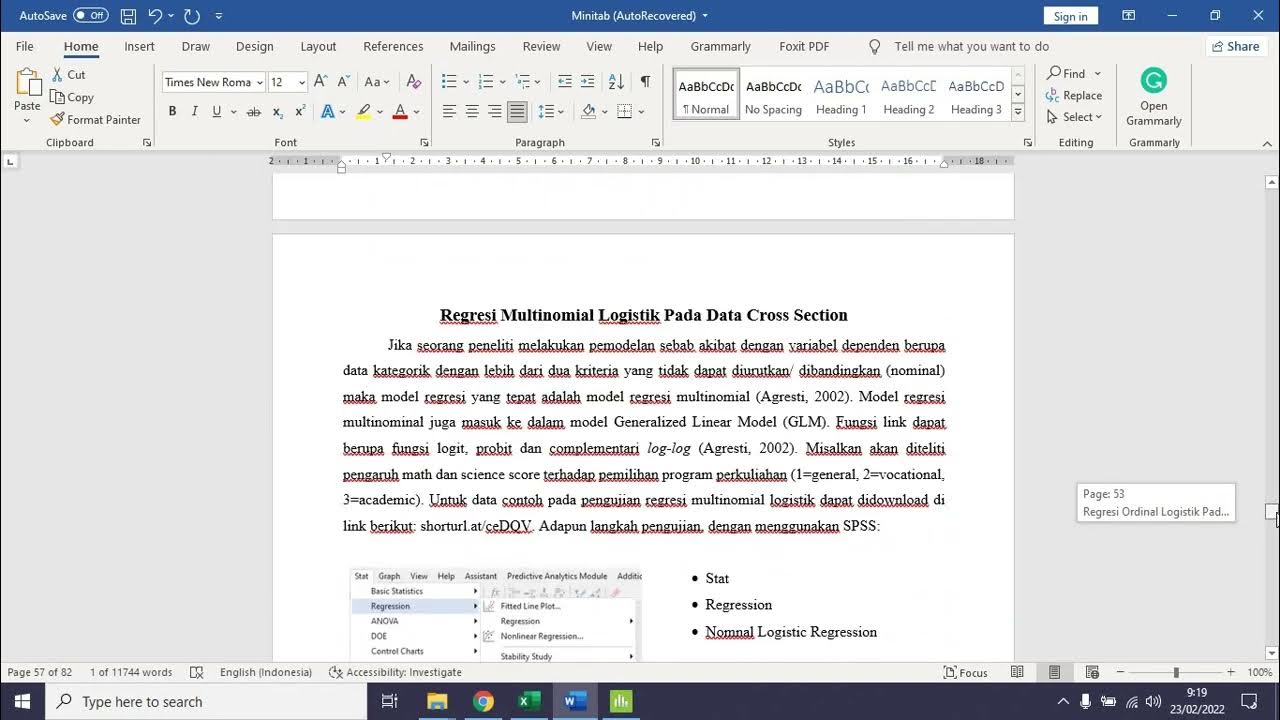

Regresi Ordinal dan Multinomial Logistik Pada Data Crosssection dengan Minitab

Lec-5: Logistic Regression with Simplest & Easiest Example | Machine Learning

Statistik Terapan: Regresi Logistik penjelasan singkat

24-10-2024 Logistic Regression Perhitungan Manual dan Sintaks Pythonnya
