Direct Memory Mapping

Summary

TLDR: This educational video script explains direct memory mapping in computer systems. It begins with how secondary memory and main memory are organized: a process is divided into pages, main memory is divided into frames, and a page is the same size as a frame. The script then covers how blocks of main memory are brought into cache memory, noting that main memory blocks and cache lines are organized the same way and have the same size. It discusses the physical address bits and how they split into the block (or line) offset, the block number, and the tag bits. The session ends by explaining the round-robin assignment of blocks to lines and why direct mapping is a strict technique, and it promises numerical problems in upcoming lessons to solidify understanding.

Takeaways

- 😀 The video introduces different cache memory mapping techniques, starting with direct memory mapping.

- 🔍 Secondary memory and main memory are shown in a conceptual diagram: programs stored in secondary memory become processes when executed, each process is divided into pages, and main memory is divided into frames of the same size as a page.

- 💾 The operating system is responsible for managing the subdivision of processes into pages and their loading into main memory, as detailed in a separate course on operating systems.

- 📚 Cache and main memory are organized similarly: main memory is divided into 'blocks' and the cache into 'lines', and a block is the same size as a line.

- 📝 The smallest addressable memory unit is a 'word', and in a byte-addressable memory, each word is one byte in size.

- 📉 The script explains how to calculate and number the blocks of main memory, using a 64-word main memory with a block size of four words, which gives 64 / 4 = 16 blocks numbered 0 to 15.

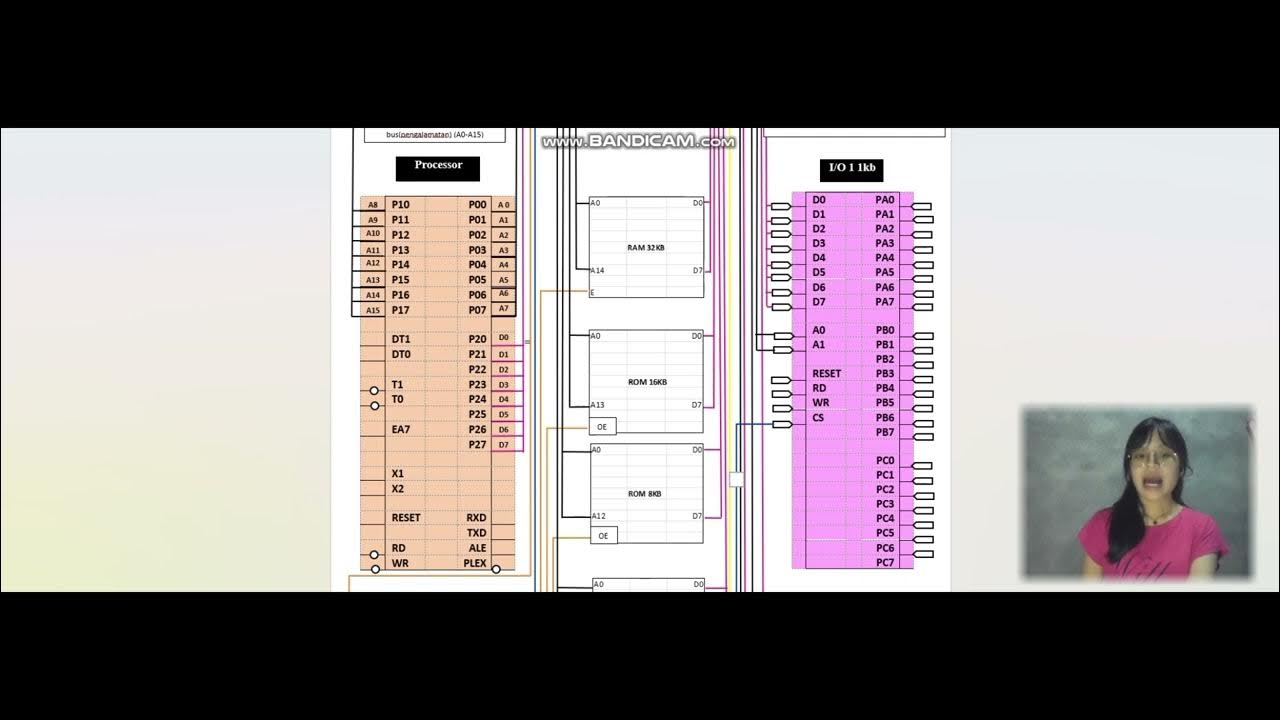

- 📍 It details the physical address bits (PA bits), which address every word in main memory: the most significant bits give the block number and the least significant bits give the word offset within the block.

- 🔄 Main memory blocks are mapped to cache lines in a round-robin manner (block j goes to line j mod the number of lines), a many-to-one relationship in which several blocks share each line.

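- 🔑 For direct mapping, the PA bits are split into the block (or line) offset, which selects a word within the block, and the block number; the block number is further divided into line-number bits, which select the cache line, and tag bits, which identify which block currently occupies that line.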

- 🔍 Direct mapping is a strict technique: each main memory block can be placed in exactly one cache line, whereas other mapping techniques allow a block more than one possible location.

- 📈 The video concludes by explaining why the tag bits are so named: they act as identifiers, or 'tags', for the blocks present in the cache.
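
The arithmetic behind these takeaways can be sketched in Python. This is a minimal sketch, assuming the example's 64-word byte-addressable main memory and four-word blocks; the cache size of four lines is an assumption (it is consistent with the two line-selecting bits mentioned in the Q&A but is not stated in the summary), and the helpers `log2_exact` and `direct_mapped_layout` are hypothetical names.

```python
def log2_exact(n: int) -> int:
    """Return log2(n) for a positive power of two, else raise ValueError."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a positive power of two")
    return n.bit_length() - 1


def direct_mapped_layout(mm_words: int, block_words: int, cache_lines: int) -> dict[str, int]:
    """Field widths of a physical address for a direct-mapped cache (sizes in words)."""
    pa_bits = log2_exact(mm_words)         # bits needed to address every word
    offset_bits = log2_exact(block_words)  # word within a block (same as within a line)
    line_bits = log2_exact(cache_lines)    # low-order block-number bits pick the line
    block_bits = pa_bits - offset_bits     # bits that name a block
    tag_bits = block_bits - line_bits      # remaining high-order bits form the tag
    if tag_bits < 0:
        raise ValueError("cache has more lines than main memory has blocks")
    return {
        "pa_bits": pa_bits,
        "num_blocks": mm_words // block_words,  # blocks numbered 0 .. num_blocks - 1
        "block_bits": block_bits,
        "offset_bits": offset_bits,
        "line_bits": line_bits,
        "tag_bits": tag_bits,
    }


# 64-word memory and 4-word blocks as in the video; 4 cache lines is an assumption.
print(direct_mapped_layout(64, 4, 4))
# {'pa_bits': 6, 'num_blocks': 16, 'block_bits': 4, 'offset_bits': 2, 'line_bits': 2, 'tag_bits': 2}
```

Changing `cache_lines` shows the trade-off: with the memory and block sizes fixed, a larger cache uses more line bits and leaves fewer tag bits.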

Q & A

What is the main focus of the session described in the transcript?

-The main focus of the session is to explain different cache memory mapping techniques, starting with direct memory mapping.

How is the secondary memory related to the main memory in terms of program execution?

-Programs reside permanently in secondary storage and become processes when they are executed. The operating system divides each process into equal-sized pages and brings them into main memory.

What is the term used for the smallest addressable memory unit?

-The smallest addressable memory unit is called a 'word'.

What does the size of each block and frame represent in the context of the script?

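-Frames are the equal-sized divisions of main memory that hold the pages of a process (a page is the same size as a frame), while blocks are the equal-sized divisions of main memory used for transfers into the cache, each the same size as a cache line. Dividing memory into equal units is what makes these correspondences straightforward.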

How are the main memory and cache memory organized in terms of blocks and lines?

-Parts of main memory are called 'blocks' and parts of the cache are called 'lines'. The block size equals the line size, so one block fits exactly into one line.

What is the significance of the term 'byte addressable memory' in the script?

-Byte-addressable memory means that each word, the smallest addressable unit, is one byte, so every byte has its own address and the number of addresses equals the memory size in bytes.

How many bits are required to address 64 words in the main memory?

-To address 64 words in the main memory, 6 bits are required, as log2(64) equals 6.
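
The same count can be checked programmatically. A minimal sketch, with `address_bits` as a hypothetical helper: the number of address bits is the smallest n with 2^n at least the number of words, which for a power-of-two memory size is exactly log2 of that size.

```python
def address_bits(num_words: int) -> int:
    """Smallest n such that 2**n >= num_words, i.e. ceil(log2(num_words))."""
    if num_words < 1:
        raise ValueError("memory must contain at least one word")
    return (num_words - 1).bit_length()


print(address_bits(64))  # 6, because 2**6 == 64
```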

What are the different parts of a physical address according to the script?

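-A physical address is split into two fields: the most significant bits give the block number, and the least significant bits give the word offset within that block. In the example (64 words, four-word blocks), the upper 4 bits select one of the 16 blocks and the lower 2 bits select one of the 4 words in it.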
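
To make the split concrete, the sketch below decodes one physical address under the example's geometry (6-bit addresses, four-word blocks, so 2 offset bits). The address 46 is an arbitrary illustrative value, and `split_address` is a hypothetical helper.

```python
def split_address(pa: int, offset_bits: int) -> tuple[int, int]:
    """Split a physical address into (block number, word offset)."""
    block = pa >> offset_bits             # most significant bits: block number
    word = pa & ((1 << offset_bits) - 1)  # least significant bits: word in block
    return block, word


pa = 0b101110  # address 46 in a 6-bit address space
block, word = split_address(pa, offset_bits=2)
print(f"address {pa:06b} -> block {block} (0b{block:04b}), word {word} (0b{word:02b})")
# address 101110 -> block 11 (0b1011), word 2 (0b10)
```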

What is the purpose of the round-robin mapping technique mentioned in the script?

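-Round-robin mapping assigns main memory blocks to cache lines in cyclic order: block 0 goes to line 0, block 1 to line 1, and so on, and after the last line the assignment wraps back to line 0. As a result, block j always maps to line j mod (number of lines). Each block has exactly one possible line, while several blocks share each line.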
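
The cyclic assignment can be tabulated. This sketch uses the example's 16 blocks and assumes a 4-line cache (the cache size is not stated in the summary); `round_robin_mapping` is a hypothetical name.

```python
def round_robin_mapping(num_blocks: int, num_lines: int) -> dict[int, list[int]]:
    """Return, for each cache line, the main memory blocks that map onto it."""
    mapping: dict[int, list[int]] = {line: [] for line in range(num_lines)}
    for block in range(num_blocks):
        mapping[block % num_lines].append(block)  # block j -> line j mod num_lines
    return mapping


for line, blocks in round_robin_mapping(16, 4).items():
    print(f"line {line}: blocks {blocks}")
# line 0: blocks [0, 4, 8, 12]
# line 1: blocks [1, 5, 9, 13]
# line 2: blocks [2, 6, 10, 14]
# line 3: blocks [3, 7, 11, 15]
```

Reading a row shows the many-to-one relationship: line 0 can hold block 0, 4, 8, or 12, but only one of them at a time.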

What is the role of 'tag bits' in direct memory mapping?

-Tag bits identify which block is currently present in a cache line. Because several blocks map to the same line, the tag stored with the line records which of them is there; it acts as an identifier, or 'tag', for that block.
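
A small simulation shows the tag at work. This is a minimal sketch, assuming four-word blocks as in the example plus a hypothetical 4-line cache; each line stores a valid flag and a tag, and a lookup is a hit only when the stored tag matches the tag bits of the address. The class names and the access sequence are illustrative, and data contents are not modelled.

```python
from dataclasses import dataclass


@dataclass
class Line:
    valid: bool = False
    tag: int = 0


class DirectMappedCache:
    """Tracks which block occupies each line; only tags are kept, not data."""

    def __init__(self, num_lines: int = 4, offset_bits: int = 2) -> None:
        self.num_lines = num_lines
        self.offset_bits = offset_bits
        self.lines = [Line() for _ in range(num_lines)]

    def access(self, pa: int) -> bool:
        """Return True on a hit; on a miss, load the block into its only line."""
        block = pa >> self.offset_bits
        index = block % self.num_lines  # the one line this block may use
        tag = block // self.num_lines   # which of the competing blocks it is
        line = self.lines[index]
        if line.valid and line.tag == tag:
            return True
        line.valid, line.tag = True, tag  # direct mapping: no choice of victim
        return False


cache = DirectMappedCache()
# Addresses 0 and 1 share block 0; address 16 is block 4, which also maps to line 0.
for pa in (0, 1, 16, 0):
    print(pa, "hit" if cache.access(pa) else "miss")
# 0 miss
# 1 hit
# 16 miss
# 0 miss
```

The last access misses even though block 0 was loaded earlier, because block 4 (address 16) replaced it in line 0; such conflict misses are the cost of direct mapping's strictness.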

What is the significance of the least significant two bits in block numbering?

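-The two least significant bits of the block number determine which cache line the block maps onto in direct mapping. Two bits suffice when the cache has 2^2 = 4 lines, because taking a block number modulo 4 leaves exactly its two lowest bits; the remaining higher bits of the block number form the tag.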
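
The sketch below checks, for all 16 blocks of the example, that masking the two low bits gives the same result as "block mod 4", then decodes address 46 into tag, line, and offset. It assumes a 4-line cache (2 line bits) and four-word blocks (2 offset bits); `decode` is a hypothetical helper.

```python
LINE_BITS = 2    # assumed: 4 cache lines = 2**2
OFFSET_BITS = 2  # four-word blocks = 2**2 words


def decode(pa: int) -> tuple[int, int, int]:
    """Split a 6-bit physical address into (tag, line, word offset)."""
    offset = pa & ((1 << OFFSET_BITS) - 1)
    block = pa >> OFFSET_BITS
    line = block & ((1 << LINE_BITS) - 1)  # two least significant bits of the block number
    tag = block >> LINE_BITS               # remaining high-order bits
    return tag, line, offset


# For every block, the low two bits equal "block mod 4" and the rest equal "block // 4".
assert all((b & 0b11) == b % 4 and (b >> 2) == b // 4 for b in range(16))

print(decode(0b10_11_10))  # (2, 3, 2): tag 0b10, line 0b11, offset 0b10
```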
