Driver Drowsiness Detection System using OpenCV | Graduation final year Project with Source Code

Summary

TLDR: This video script guides viewers through building a project that detects eye blinks using OpenCV and dlib. It covers installing the necessary modules, initializing the face and landmark detectors, calculating eye aspect ratios, and playing an alarm when the eyes stay closed for too long. The tutorial also demonstrates drawing contours and convex hulls around the eyes, and concludes by playing the alarm sound with the pygame module.

Takeaways

- 😀 The video script describes how to build an application that detects eye blinks and plays an alarm when the eyes stay closed for a sustained period.

- 🛠️ The project requires installing several Python modules, including 'opencv-python', 'RPi.GPIO', 'imutils', 'dlib', and 'cmake' (CMake is needed to build dlib during installation).

- 🔍 The 'dlib' library is used for face detection and to detect 68 facial landmarks, which are essential for tracking eye movements.

- 📚 The script introduces the concept of the Eye Aspect Ratio (EAR), which is calculated using the coordinates of the facial landmarks around the eyes to detect blinks.

- 📉 The EAR value stays roughly constant while the eyes are open and drops sharply toward zero when they close, which makes it a reliable metric for detecting eye blinks.

- 👁️ The script details how to calculate the EAR for both the left and right eyes and then averages them to determine if a blink has occurred.

- 🔶 The convex hull, which is the smallest convex polygon that can enclose a set of points, is used to simplify the shape of the eyes for contour analysis.

- 🔲 The script includes a method to draw the convex hull and contours on the video frames to visualize the eye shapes.

- ⏰ An alarm is triggered when the EAR is below a certain threshold for a specified number of frames, indicating a sustained eye closure.

- 📈 The script mentions adjusting the threshold value based on the user's setup and the frame rate of the webcam.

- 🎶 Towards the end, the script adds the ability to play an alarm sound with the 'pygame' module when a sustained eye closure is detected.

Q & A

What is the purpose of the application being discussed in the script?

-The purpose of the application is to detect eye blinks using facial landmarks and to play an alarm when the eyes stay closed for too long, a sign of drowsiness.

Which modules are required to be installed for this project?

-The required modules are opencv-python, imutils, dlib, and pygame.

Why is dlib's frontal face detector used instead of OpenCV's traditional Haar Cascade detector?

-The video presents dlib's HOG-based frontal face detector as faster and more accurate than the traditional Haar Cascade detector.

What is the significance of the EAR (Eye Aspect Ratio) in this project?

-EAR is used to detect eye blinks. Its value stays roughly constant while the eyes are open and falls sharply when they close, which makes it an effective blink signal.

How is the EAR calculated in the script?

-EAR is calculated from the distances between six landmarks around each eye. It is the sum of the two vertical distances divided by twice the horizontal distance: EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖), where p1 and p4 are the eye corners.
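The formula can be sketched in plain Python as follows. This is a minimal illustration. It assumes the six points of one eye arrive as (x, y) tuples in dlib's order: a corner, two upper-lid points, the opposite corner, and then two lower-lid points. The function name and the toy coordinates are hypothetical and are not taken from the video's source code.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye Aspect Ratio for six eye landmarks ordered p1..p6.

    p1 and p4 are the two eye corners; (p2, p6) and (p3, p5) are the
    vertical pairs spanning the upper and lower eyelids.
    """
    if len(eye) != 6:
        raise ValueError("expected exactly 6 eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical_a = math.dist(p2, p6)  # first upper/lower lid distance
    vertical_b = math.dist(p3, p5)  # second upper/lower lid distance
    horizontal = math.dist(p1, p4)  # corner-to-corner width
    return (vertical_a + vertical_b) / (2.0 * horizontal)


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3))    # 0.667
print(round(eye_aspect_ratio(closed_eye), 3))  # 0.067
```

For an open eye the ratio stays well above zero. It falls sharply as the two vertical distances collapse when the eyelid closes, while the horizontal width barely changes.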

What are the facial landmarks used for in this project?

-The landmark predictor locates 68 points on the face, covering the eyes, eyebrows, jawline, lips, and nose. The points around the eyes serve as reference points for detecting eye blinks.
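Picking the eyes out of the 68 landmarks is a matter of slicing by index. The sketch below assumes the common 0-based iBUG 68-point layout, which dlib's `shape_predictor_68_face_landmarks.dat` model follows. It uses the same half-open ranges that imutils exposes as `face_utils.FACIAL_LANDMARKS_IDXS`. The `region` helper and the `LANDMARK_RANGES` name are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[int, int]

# Half-open [start, end) index ranges into the 68-point landmark model.
# "right"/"left" refer to the subject's own eyes (the right eye appears
# on the left side of an unmirrored image).
LANDMARK_RANGES: Dict[str, Tuple[int, int]] = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def region(landmarks: Sequence[Point], name: str) -> List[Point]:
    """Return the landmark points that belong to one facial region."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    start, end = LANDMARK_RANGES[name]
    return list(landmarks[start:end])
```

With dlib, the input list could be built as `[(p.x, p.y) for p in shape.parts()]`, where `shape` is the object that the landmark predictor returns for a detected face. Each eye region then yields the six points the EAR formula expects.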

What is the role of the convex hull in this project?

-The convex hull is the smallest convex boundary that completely encloses a set of points, in this case the eye landmarks. It is used to draw an outline around each eye.
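OpenCV provides this operation as `cv2.convexHull`. To show what the hull actually is, the sketch below implements Andrew's monotone chain algorithm in pure Python. This is a standard substitute technique chosen for illustration, not necessarily what OpenCV does internally.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    # z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point]) -> List[Point]:
    """Andrew's monotone chain: hull vertices, counter-clockwise for y-up axes.

    In image coordinates (y grows downward) the same order appears clockwise
    on screen. Collinear points on an edge are dropped.
    """
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:  # build the lower chain left to right
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):  # build the upper chain right to left
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]
```

In the OpenCV version of the script, each eye's hull would typically be drawn with a call such as `cv2.drawContours(frame, [hull], -1, (0, 255, 0), 1)`.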

How does the script handle the detection of eye blinks?

-The script calculates the EAR for the left and right eyes, averages the two values, and checks whether the average stays below a threshold for a specified number of consecutive frames. If it does, an alarm is played.
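The decision logic can be sketched as a small stateful class that receives the two per-eye EAR values once per frame. The 0.25 threshold comes from the video. The default of 20 consecutive frames and the class and method names are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class DrowsinessDetector:
    """Flags sustained eye closure from per-frame EAR values.

    threshold comes from the video; consec_frames is an assumed default.
    Tune both for your camera, lighting, and frame rate.
    """

    threshold: float = 0.25
    consec_frames: int = 20
    _counter: int = field(default=0, init=False, repr=False)

    def update(self, left_ear: float, right_ear: float) -> bool:
        """Feed one frame; return True while the alarm should sound."""
        ear = (left_ear + right_ear) / 2.0  # average both eyes
        if ear < self.threshold:
            self._counter += 1  # one more frame with eyes closed
        else:
            self._counter = 0  # eyes reopened: reset the streak
        return self._counter >= self.consec_frames
```

Resetting the counter whenever the average rises back above the threshold separates a normal blink, which lasts only a few frames, from a sustained closure.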

What is the threshold value used for detecting eye blinks and how can it be adjusted?

-The threshold value is initially set to 0.25, but it can be adjusted between 0.25 and 0.5 depending on the user's setup and the frame rate of the video or webcam.
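The closure check is measured in frames, so the same closure duration needs a different frame count at a different frame rate. The `frames_for_duration` helper below is hypothetical. It converts a chosen duration into a frame count and assumes you know the webcam's frames per second.

```python
import math


def frames_for_duration(fps: float, seconds: float) -> int:
    """Number of consecutive frames that span `seconds` of video at `fps`."""
    if fps <= 0 or seconds <= 0:
        raise ValueError("fps and seconds must be positive")
    # Round first so float noise (e.g. 30 * 0.1 == 3.0000000000000004)
    # does not push ceil() up by one whole frame.
    return max(1, math.ceil(round(fps * seconds, 6)))


print(frames_for_duration(30, 0.5))  # 15
print(frames_for_duration(15, 0.5))  # 8
```

Half a second of closed eyes is 15 frames at 30 fps but only 8 frames at 15 fps. A frame count tuned on one camera can therefore be too strict or too lenient on another.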

How is the music played in the application?

-Music is played using the pygame module. The file is loaded with pygame.mixer.music.load and played with pygame.mixer.music.play.
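Calling the play function on every drowsy frame would restart the sound from the beginning each time. A common pattern is to start the alarm once when drowsiness begins and stop it once when the eyes reopen. The `AlarmLatch` class below is a hypothetical sketch of that pattern. The playback functions are passed in as callables, so the logic can run without audio hardware.

```python
from typing import Callable


class AlarmLatch:
    """Start the alarm once when drowsiness begins; stop it once when it ends."""

    def __init__(self, start: Callable[[], None], stop: Callable[[], None]) -> None:
        self._start = start  # e.g. a function that begins playback
        self._stop = stop    # e.g. a function that halts playback
        self.active = False

    def update(self, drowsy: bool) -> None:
        """Call once per frame with the current drowsiness decision."""
        if drowsy and not self.active:
            self._start()
            self.active = True
        elif not drowsy and self.active:
            self._stop()
            self.active = False
```

With pygame, after `pygame.mixer.init()` and `pygame.mixer.music.load(...)`, `start` could be `lambda: pygame.mixer.music.play(-1)` (loop until stopped) and `stop` could be `pygame.mixer.music.stop`.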

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

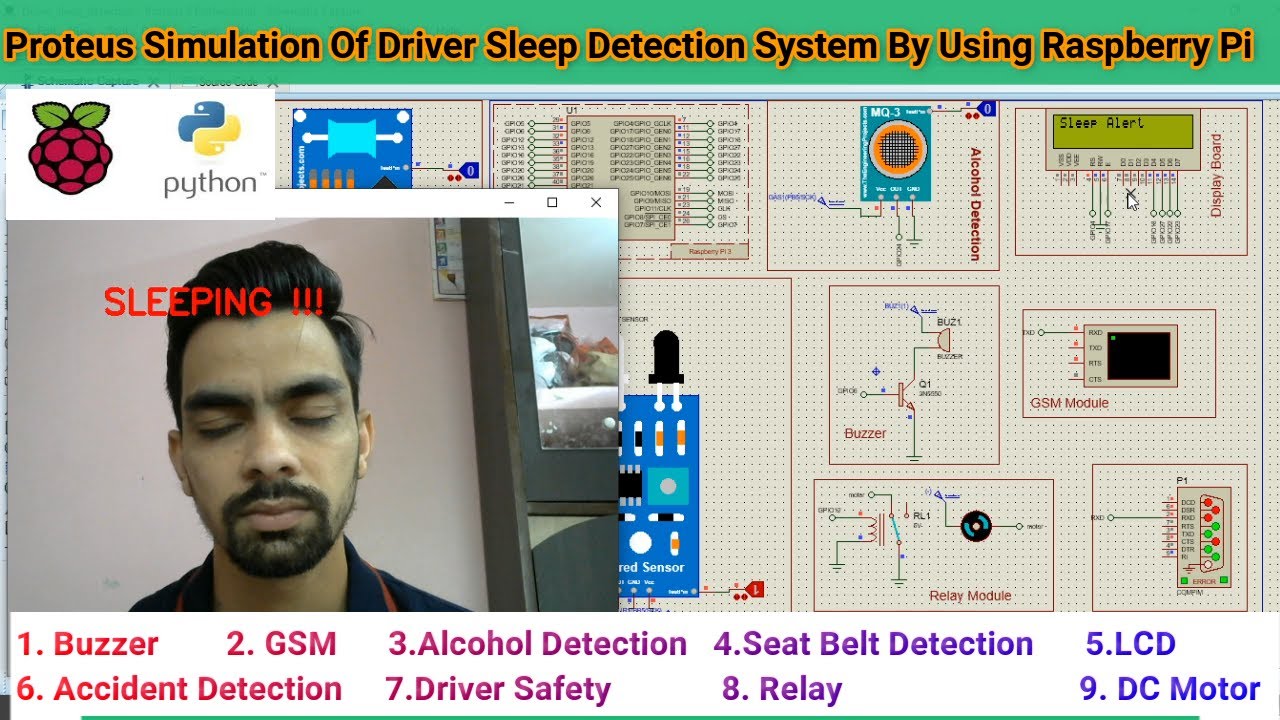

Driver Drowsiness Detection System

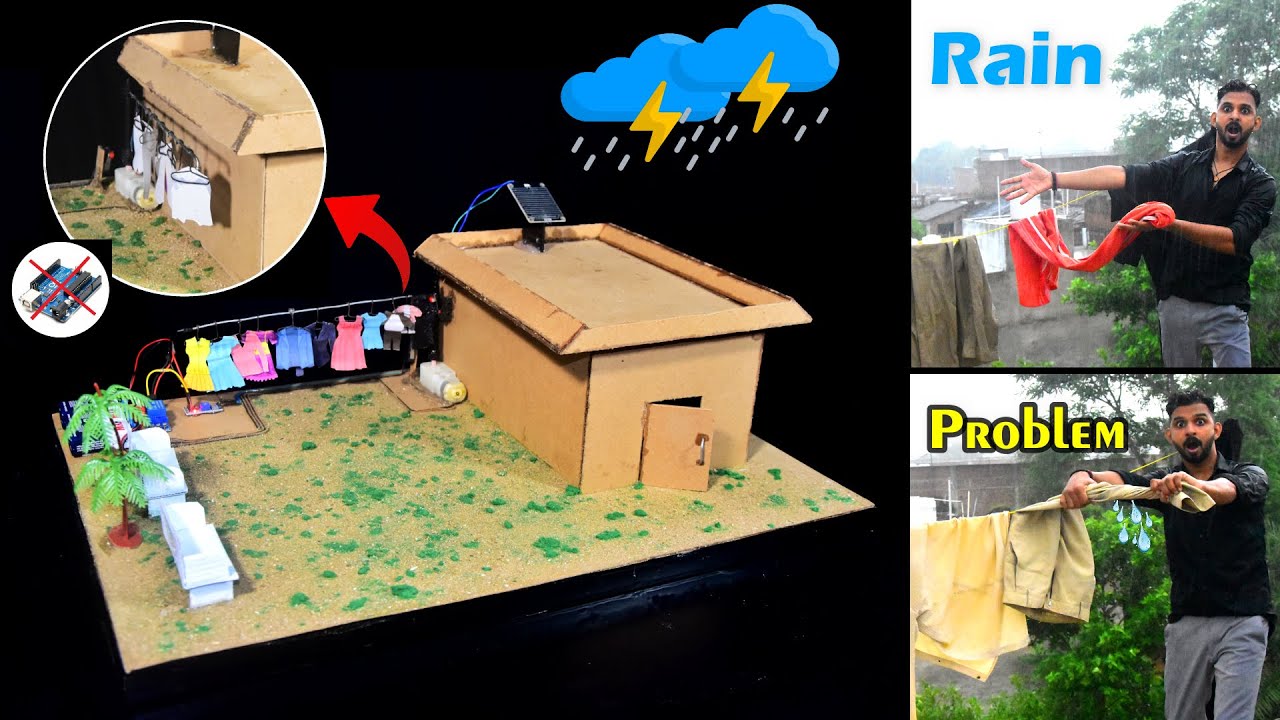

Automatic Rain Detection Smart Roof For Cloth Protection | New Project Idea

Cyberbullying Detection Using Machine Learning | Python Final Year IEEE Project

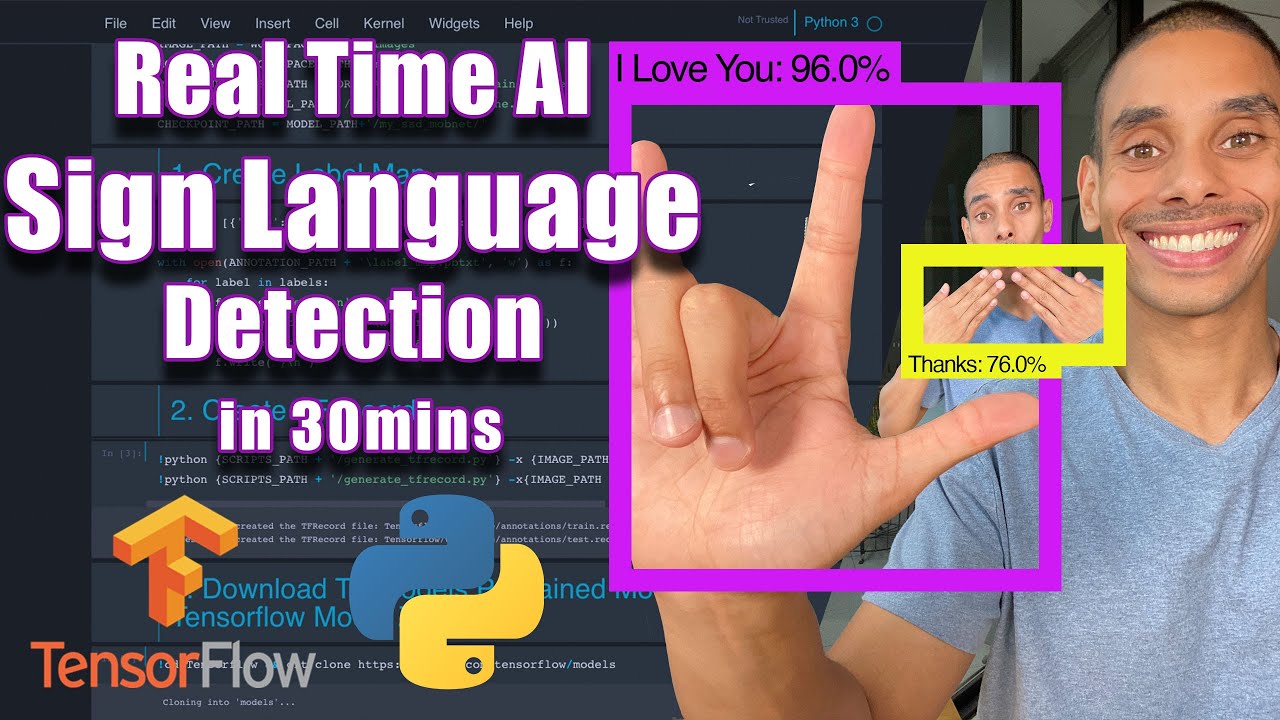

Real Time Sign Language Detection with Tensorflow Object Detection and Python | Deep Learning SSD

How to install OpenCV on Windows 10 (2021)

Prediksi Penyakit Serangan Jantung | Machine Learning Project 11

5.0 / 5 (0 votes)