Introduction to Generative AI and LLMs [Pt 1] | Generative AI for Beginners

Summary

TLDR: In this introductory lesson of the 'Generative AI for Beginners' course, Carlotta Castelluccio, a Cloud Advocate at Microsoft, presents an open-source curriculum that explores generative AI and large language models (LLMs). These models, built on the Transformer architecture, have the potential to transform education by improving accessibility and personalizing learning experiences. The course also examines the challenges and limitations of these technologies.

Takeaways

- 📘 The course is an introduction to Generative AI for beginners, based on an open-source curriculum available on GitHub.

- 👋 The instructor, Carlotta Castelluccio, is a Cloud Advocate at Microsoft with a focus on artificial intelligence technologies.

- 🧠 Generative AI and large language models are at the forefront of AI technology, achieving human-level performance in various tasks.

- 🌟 Large language models have capabilities and applications that are revolutionizing education and improving accessibility and personalized learning experiences.

- 🔍 The course will explore how a fictional startup uses generative AI to innovate in education and address social and technological challenges.

- 📚 The origins of generative AI date back to the 1950s and 1960s, evolving from rule-based chatbots to statistical machine learning algorithms.

- 💡 The breakthrough in AI came with the introduction of neural networks and the Transformer architecture, which improved natural language processing significantly.

- 🔢 Large language models work with tokens, breaking text into chunks that are easier for the model to process and understand.

- 🔮 Generation is iterative: each predicted token is appended to the input, so the context window expands step by step, which helps the model produce coherent and contextually relevant responses.

- 🎲 A degree of randomness is introduced in the selection of output tokens to simulate creative thinking and ensure variability in output.

- 📝 Examples of using large language models include generating assignments, answering questions, and providing writing assistance in an educational context.

Q & A

What is the main focus of the 'Generative AI for Beginners' course?

-The course focuses on introducing generative AI and large language models, exploring their capabilities and applications, particularly in revolutionizing education through a fictional startup.

Who is Carlotta Castelluccio and what is her role?

-Carlotta Castelluccio is a Cloud Advocate at Microsoft who specializes in artificial intelligence technologies. She introduces the concept of generative AI in the course.

What is the ambitious mission of the fictional startup mentioned in the script?

-The startup's mission is to improve accessibility in learning on a global scale, ensuring equitable access to education and providing personalized learning experiences to every learner according to their needs.

How does the course plan to address the challenges associated with generative AI?

-The course will examine the social impact of the technology and its technological limitations, discussing how the fictional startup harnesses the power of generative AI while addressing these challenges.

What is the significance of the 1990s in the development of AI technology as mentioned in the script?

-The 1990s marked a significant turning point with the application of a statistical approach to text analysis, leading to the birth of machine learning algorithms that could learn patterns from data without explicit programming.

What advancements in hardware technology allowed for the development of advanced machine learning algorithms?

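-Hardware advances made it possible to handle larger amounts of data and more complex computations, which enabled more advanced machine learning algorithms known as neural networks (deep learning models). These significantly improved natural language processing capabilities.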

What is the Transformer architecture and its role in generative AI?

-The Transformer is a neural network architecture that emerged after decades of AI research. It can take longer text sequences as input and is based on the attention mechanism, which lets the model focus on the most relevant information in the input text.
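To make the attention idea more concrete, here is a minimal sketch of scaled dot-product attention (the form described in the original Transformer paper) for a single query vector. The toy vectors are made up for illustration; real models learn the query, key and value projections and run many attention heads in parallel, which this sketch leaves out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each key is scored against the query, the scores become weights,
    and the output is the weighted average of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy example: three input positions with 2-dimensional keys and values.
# The query is most similar to the second key, so that position gets the most weight.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [0.0, 2.0]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
print([round(x, 3) for x in output])
```

The output vector is pulled toward the value of the position the query "attends" to most, which is the sense in which the model focuses on the most relevant information.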

What is tokenization and why is it important in large language models?

-Tokenization is the process of breaking down input text into an array of tokens, which are then mapped to token indices. This process is crucial as it converts text into a numerical format that the model can process and understand more efficiently.
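As a rough illustration of turning text into token indices, the sketch below uses a toy tokenizer that splits on words and punctuation and builds its vocabulary from the example text itself. This is an assumption for illustration only: production LLMs use learned subword tokenizers (for example byte-pair encoding), so a single word may become several tokens.

```python
import re

def tokenize(text):
    """Split text into lowercase words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(texts):
    """Assign an integer index to every distinct token seen in the texts."""
    vocab = {"<unk>": 0}  # index 0 is reserved for unknown tokens
    for text in texts:
        for token in tokenize(text):
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Map each token to its index, falling back to <unk> for unseen tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokenize(text)]

vocab = build_vocab(["The cat sat on the mat."])
print(tokenize("The cat sat on the mat."))  # ['the', 'cat', 'sat', 'on', 'the', 'mat', '.']
print(encode("The cat sat on the mat.", vocab))  # [1, 2, 3, 4, 1, 5, 6]
print(encode("The dog sat.", vocab))  # [1, 0, 3, 6]  ('dog' is unknown)
```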

How does a large language model predict the output token?

-The model computes a probability distribution over possible next tokens, based on what it learned from its training data. Rather than always choosing the token with the highest probability, it introduces a degree of randomness into the selection to simulate creative thinking, so the same input can produce different outputs.
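The selection step can be sketched as sampling from the predicted distribution. The probabilities below are hypothetical, and the `temperature` knob is a common way to control how much randomness is used; it is an assumption here, not something the lesson describes. A temperature of 0 falls back to always taking the most likely token.

```python
import math
import random

def sample_next_token(probs, temperature=1.0, rng=random):
    """Pick the next token from a probability distribution.

    temperature == 0 means greedy decoding (always the most likely token);
    higher temperatures flatten the distribution and add more randomness.
    Assumes every probability is strictly positive.
    """
    if temperature == 0:
        return max(probs, key=probs.get)
    tokens = list(probs)
    # Rescale log-probabilities by the temperature, then turn them back into weights.
    logits = [math.log(probs[t]) / temperature for t in tokens]
    m = max(logits)
    weights = [math.exp(l - m) for l in logits]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical probabilities for the word following "The students opened their ..."
next_token_probs = {"books": 0.6, "laptops": 0.3, "minds": 0.1}

rng = random.Random(42)  # fixed seed so the run is reproducible
print(sample_next_token(next_token_probs, temperature=0))  # always 'books'
print([sample_next_token(next_token_probs, temperature=1.0, rng=rng) for _ in range(10)])
```

With sampling, "books" is still picked most often, but less likely tokens appear from time to time, which is what gives the output its variability.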

What are the different types of textual inputs and outputs for a large language model?

-The input is known as a 'prompt', and the output is known as a 'completion'. Prompts can include instructions, questions, or text to complete, and the model generates the completion one token at a time, each new token continuing the current input.
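The prompt-to-completion loop, with its expanding window, can be sketched as follows. The "model" here is a hypothetical lookup table that only looks at the last token; a real LLM would score the whole context. The point is the loop: each predicted token is appended to the context before the next prediction, until an end marker or a length limit is reached.

```python
# Hypothetical stand-in for a trained model: maps the last token to the next one.
TOY_NEXT_TOKEN = {
    "generative": "AI",
    "AI": "creates",
    "creates": "new",
    "new": "content",
    "content": "<end>",
}

def predict_next(context):
    """Return the next token for the context (toy lookup, not a real model)."""
    return TOY_NEXT_TOKEN.get(context[-1], "<end>")

def complete(prompt_tokens, max_new_tokens=10):
    """Generate a completion by appending one predicted token at a time.

    Each new token is added to the context, so the window the model
    sees expands with every step.
    """
    context = list(prompt_tokens)
    completion = []
    for _ in range(max_new_tokens):
        token = predict_next(context)
        if token == "<end>":
            break
        context.append(token)
        completion.append(token)
    return completion

print(complete(["generative"]))  # ['AI', 'creates', 'new', 'content']
```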

What will be covered in the following lessons of the course?

-In the following lessons, the course will explore different types of generative AI models, how to test, iterate, and improve performance, and compare different models to find the most suitable one for specific use cases.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Open Source Models and Hugging Face [Pt 16] | Generative AI for Beginners

What Skillsets Takes You To Become a Pro Generative AI Engineer #genai

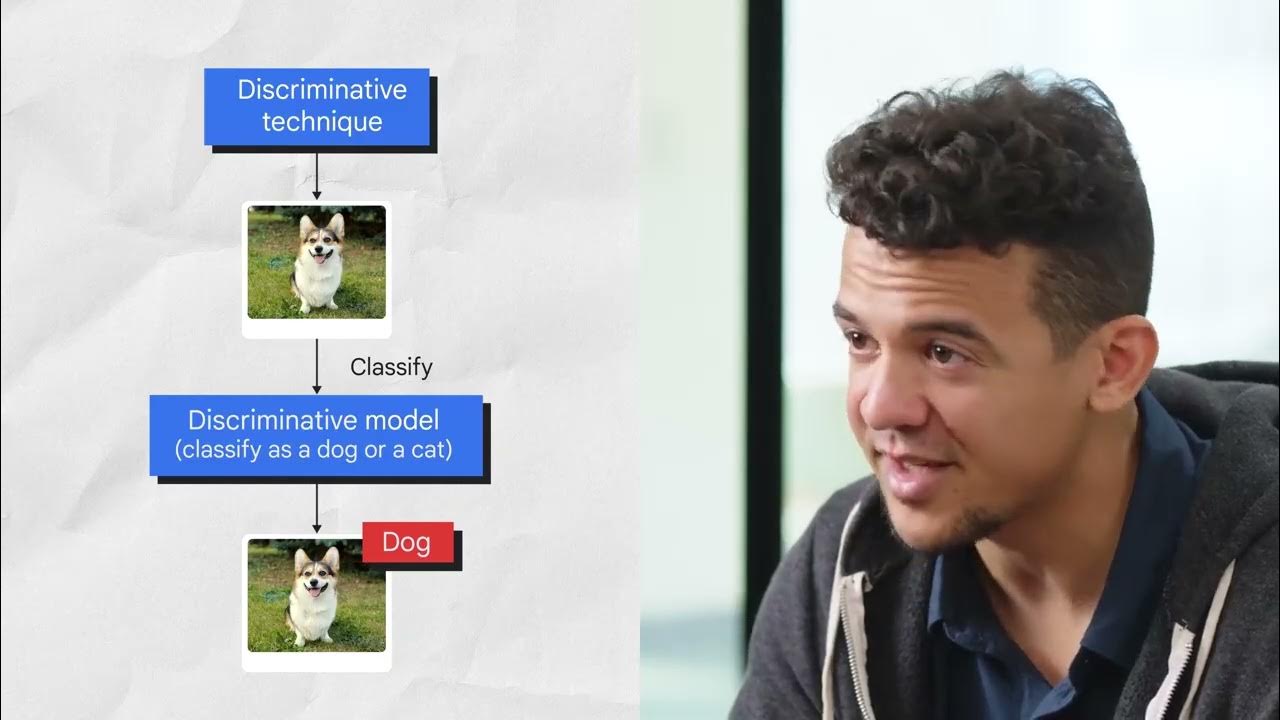

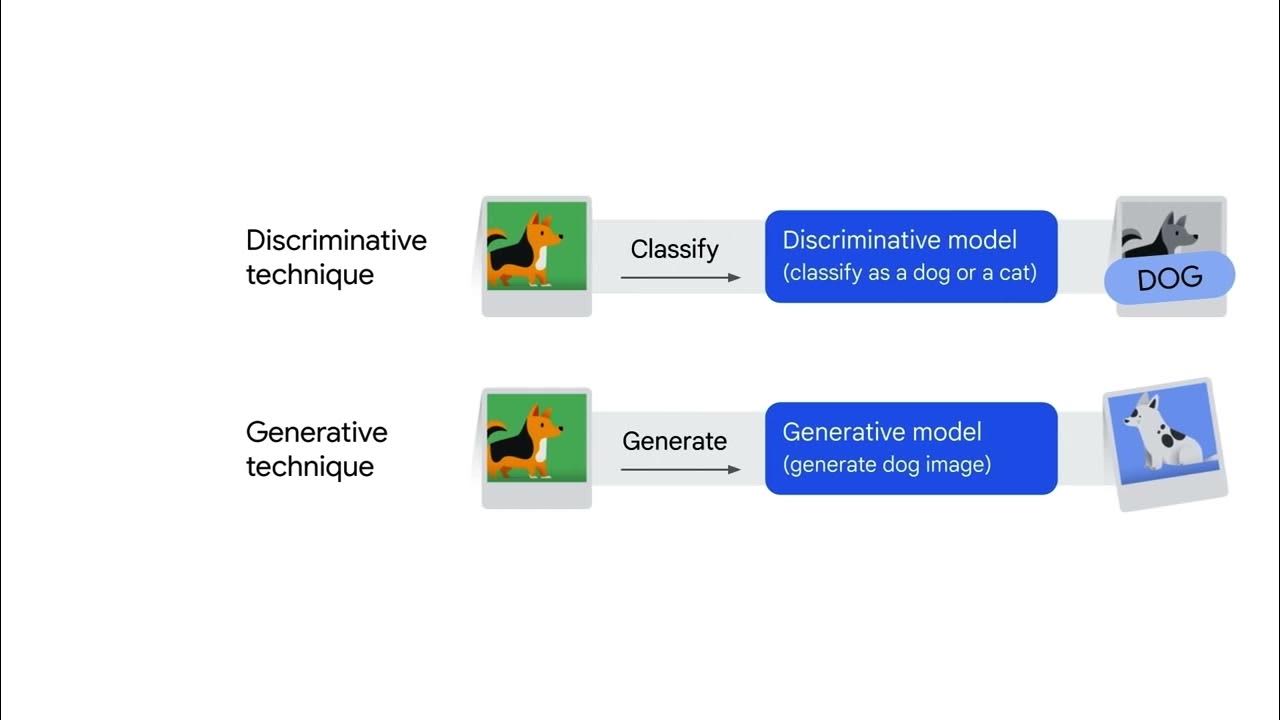

Introduction to Generative AI

Introduction to Generative AI

Generative AI Vs NLP Vs LLM - Explained in less than 2 min !!!

Exploring Job Market Of Generative AI Engineers- Must Skillset Required By Companies

5.0 / 5 (0 votes)