AI4E V3 Module 4

Summary

TLDR: This course module examines the limitations of AI, emphasizing the distinction between today's 'narrow AI' and the still-hypothetical 'general AI'. It explains why AI must be trained on accurate, unbiased data so that it does not perpetuate societal biases. The script also addresses ethical concerns, the potential for AI misuse, and the importance of explainability and transparency in AI decision-making. Finally, it highlights the role of AI governance frameworks in ensuring ethical AI deployment, and the impact of AI on society and business.

Takeaways

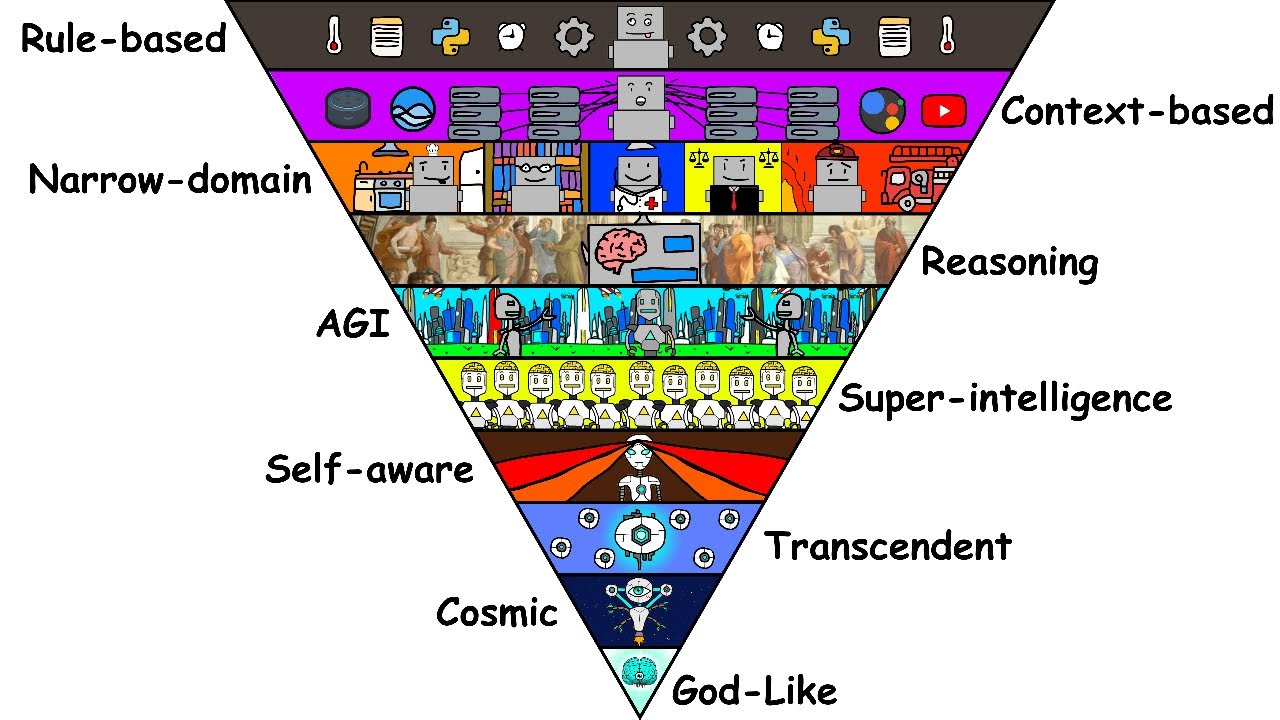

- 🧠 AI has limitations: The script emphasizes that AI, particularly 'narrow AI,' is limited to the tasks it has been trained for and cannot think or act like humans autonomously.

- 🤖 Hollywood portrayal is unrealistic: Movies often depict AI as sentient beings, but in reality we are far from creating AI that can think independently or act against humans.

- 🔮 AGI is a distant goal: Artificial General Intelligence (AGI), where AI has human-like intelligence, is not yet achievable with current technology.

- 🔍 AI is a pattern recognizer: AI's capabilities are best described as advanced pattern recognition, rather than human-like cognition.

- 🚫 Garbage in, garbage out: The quality of AI predictions is directly affected by the quality of the input data; bad data leads to inaccurate outcomes.

- 📈 Data can introduce bias: AI systems can perpetuate and even amplify existing biases if trained on biased data sets.

- 👁️ Vision systems can be fooled: AI-based computer vision systems can be misled by images unlike those they were trained on, including deliberately manipulated ones, which shows how brittle they are.

- 🔏 Ethical considerations in AI: AI itself is neutral, but it can be used to implement or amplify unethical practices, policies, or decisions.

- 🛑 The Trolley Problem in AI: AI does not have ethics; it's a tool that can be influenced by the ethical considerations of its creators and users.

- 🚫 AI gone wrong: The script provides examples of AI projects that failed due to issues like bias, racism, and misinformation.

- 🌐 Global AI governance frameworks: Various countries and organizations are developing frameworks to guide the ethical and responsible use of AI.

Q & A

What is the primary distinction between AI as depicted in movies and the current state of AI technology?

-The primary distinction is that movies often show AI as robots that can think for themselves and may even turn against humans, while in reality, AI today is known as 'narrow AI,' which is only effective at specific tasks it has been trained for and cannot think or act like humans.

What is 'narrow AI' and how does it differ from 'Artificial General Intelligence' (AGI)?

-Narrow AI refers to AI systems that are highly specialized and can only perform well in the specific tasks they have been trained for. AGI, on the other hand, is a theoretical form of AI that would possess intelligence comparable to humans, capable of understanding, learning, and applying knowledge across a wide range of tasks.
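To make the 'narrow AI' idea concrete, here is a minimal Python sketch of a pattern recognizer: a 1-nearest-neighbour classifier trained on a tiny cat/dog data set. The features, values, and labels are hypothetical and chosen only for illustration. The point is that the model can only answer with the patterns it was trained on; it has no notion of "this is outside what I know".

```python
import math

# Hypothetical training data: (features, label). The two made-up features
# might be, say, "ear pointiness" and "snout length", each scaled to 0..1.
TRAINING_DATA = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.9), "dog"),
    ((0.2, 0.8), "dog"),
]


def predict(features):
    """1-nearest-neighbour: return the label of the closest training example."""
    _, label = min(TRAINING_DATA, key=lambda ex: math.dist(ex[0], features))
    return label


# An input similar to the training data gets a sensible answer.
print(predict((0.85, 0.25)))  # cat

# An input from a completely different domain (e.g. features taken from a
# photo of a car) is still forced into one of the only two known labels.
print(predict((5.0, 5.0)))  # dog
```

Real systems are far larger, but the same limitation applies: a model trained for one task has no built-in way to recognize that an input falls outside that task.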

Why is it important to ensure the data used to train AI systems is unbiased?

-It is important because if the training data is biased, the AI system will learn and perpetuate those biases, leading to unfair and potentially harmful outcomes. For example, an AI system trained to recommend salaries based on biased historical data may continue to recommend lower salaries for women.
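The salary example can be sketched in a few lines of Python. This is a deliberately naive "model" that learns the average historical salary per gender; the records are invented, and a real salary model would use many more features. Still, any model that takes gender (or a proxy for it) as an input can learn the same gap from such data.

```python
from statistics import mean

# Hypothetical historical records: (gender, years_of_experience, monthly_salary).
# Everyone has the same experience, but the history contains a pay gap.
HISTORY = [
    ("F", 5, 4000),
    ("F", 5, 4200),
    ("M", 5, 5000),
    ("M", 5, 5200),
]


def train(records):
    """'Learn' the average historical salary for each gender."""
    salaries_by_gender = {}
    for gender, _years, salary in records:
        salaries_by_gender.setdefault(gender, []).append(salary)
    return {g: mean(s) for g, s in salaries_by_gender.items()}


model = train(HISTORY)


def recommend_salary(gender):
    """Recommend a salary by looking up what the model learned from history."""
    return model[gender]


print("F:", recommend_salary("F"))
print("M:", recommend_salary("M"))
```

With this data the model recommends 4100 for women and 5100 for men at identical experience: it has learned the historical gap, not what the work is worth.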

How can AI systems be fooled or manipulated by external factors?

-AI systems can be fooled by carefully crafted inputs designed to trick them, such as small colored stickers placed on a stop sign that can cause a self-driving car to misread it as a different sign, or specific patterns worn by people to evade detection in CCTV footage.
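Inputs like these are known as adversarial examples. The sketch below shows the idea on a toy linear classifier, using a fast-gradient-sign-style perturbation (a well-known technique for crafting adversarial inputs, not necessarily the one used in the attacks described above). The weights, bias, and three-number "image" are hypothetical stand-ins for real pixel data.

```python
# Hypothetical linear "stop sign" detector: score > 0 means "stop sign".
WEIGHTS = [0.5, -0.3, 0.8]
BIAS = -0.2


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def classify(x):
    return "stop sign" if score(x) > 0 else "other sign"


def sign(v):
    """Return 1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(x, epsilon):
    """Fast-gradient-sign-style attack on a linear model.

    For a linear score the gradient with respect to x is just WEIGHTS, so
    moving each feature by epsilon against the sign of its weight lowers
    the score as much as possible when each feature may change by at most
    epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


image = [0.6, 0.4, 0.5]          # hypothetical feature values
attacked = perturb(image, 0.25)  # each feature shifted by at most 0.25

print(classify(image))     # stop sign
print(classify(attacked))  # other sign
```

Because the total change in score grows with the number of features, a real image with hundreds of thousands of pixels can be pushed across the decision boundary by changes that are tiny per pixel; physical attacks such as stickers are a harder, real-world variant of the same idea.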

What is the 'garbage in, garbage out' principle in the context of AI?

-The 'garbage in, garbage out' principle means that if the data input into an AI system is of poor quality, incomplete, or incorrect, the AI's predictions and outputs will also be of poor quality and accuracy.
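A minimal Python sketch of "garbage in, garbage out", assuming a trivially simple model that predicts the average of past delivery times (all numbers are invented). One record was logged in seconds instead of minutes and a missing value was stored as 0; the model cannot tell, so its prediction is badly off. The hypothetical `validate` function shows one basic safeguard: checking inputs against a plausible range before training.

```python
from statistics import mean

# Hypothetical delivery times in minutes.
clean = [30, 32, 28, 31, 29]

# The same kind of records with "garbage": one trip was logged in seconds
# (1680 s = 28 min) and one missing value was stored as 0.
dirty = [30, 32, 1680, 31, 0]


def predict_delivery_time(history):
    """A deliberately simple 'model': predict the historical average."""
    return mean(history)


def validate(history, low=1, high=180):
    """Hypothetical safeguard: drop values outside a plausible range (minutes)."""
    return [t for t in history if low <= t <= high]


print(predict_delivery_time(clean))
print(predict_delivery_time(dirty))
print(predict_delivery_time(validate(dirty)))
```

The three predictions are 30, 354.6 and 31 minutes: two bad records inflate the estimate more than tenfold, while a simple range check recovers a sensible value.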

What is the main ethical concern regarding the deployment of AI systems?

-The main ethical concern is that AI systems can amplify and scale the improper implementation of policies or biases present in the data they are trained on, leading to unfair or harmful consequences for certain groups of people.
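Amplification can happen even without anyone intending it. In this hypothetical sketch, historical loan decisions approved 60% of group A and 40% of group B. A naive model that predicts the more likely outcome for each group turns that 20-point gap into an all-or-nothing rule, then applies it to every new applicant. The data and the per-group rule are invented for illustration.

```python
# Hypothetical historical loan decisions: (group, approved).
history = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 4 + [("B", False)] * 6
)


def approval_rate(records, group):
    outcomes = [approved for g, approved in records if g == group]
    return sum(outcomes) / len(outcomes)


def train(records):
    """Naive rule: approve a group if approval was historically more likely than not."""
    groups = {g for g, _ in records}
    return {g: approval_rate(records, g) > 0.5 for g in groups}


model = train(history)

# Apply the learned rule at scale to 1,000 new applicants from each group.
decisions = [(g, model[g]) for g in ["A"] * 1000 + ["B"] * 1000]

for group in ("A", "B"):
    print(group, approval_rate(history, group), "->", approval_rate(decisions, group))
```

Historical approval rates of 0.6 and 0.4 become 1.0 and 0.0: every applicant from group A is approved and every applicant from group B is declined, at whatever scale the system is deployed.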

What are some examples of AI projects that have gone wrong due to ethical or bias issues?

-Examples include Microsoft's chatbot Tay, which learned from its interactions with users to post racist and bigoted comments, and AI systems claiming to identify criminals from facial features, whose outputs can be biased depending on where their training data came from.

What is the 'Trolley Problem' in the context of AI and autonomous vehicles?

-The Trolley Problem is a thought experiment that poses a moral dilemma about choosing between two harmful outcomes. In the context of AI, it is used to discuss the ethical decisions autonomous vehicles might have to make, such as choosing between two groups of people to minimize casualties in an unavoidable accident.
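One way to see why "AI does not have ethics" is to write the decision down. In this hypothetical sketch, the system simply picks the maneuver with the lowest weighted harm; the weights are a value judgement that people supply. This is an illustration only, not how real autonomous vehicles are programmed.

```python
# Hypothetical outcomes of two possible maneuvers in an unavoidable crash.
OPTIONS = {
    "stay_in_lane": {"pedestrians_harmed": 2, "passengers_harmed": 0},
    "swerve": {"pedestrians_harmed": 0, "passengers_harmed": 1},
}


def choose(options, weights):
    """Pick the option with the lowest weighted harm.

    The weights encode a value judgement made by people; the code just
    minimizes whatever it is given.
    """
    def cost(outcome):
        return sum(weights[kind] * count for kind, count in outcome.items())

    return min(options, key=lambda name: cost(options[name]))


equal = {"pedestrians_harmed": 1.0, "passengers_harmed": 1.0}
protect_passengers = {"pedestrians_harmed": 1.0, "passengers_harmed": 3.0}

print(choose(OPTIONS, equal))               # swerve
print(choose(OPTIONS, protect_passengers))  # stay_in_lane
```

The same code makes opposite choices depending on the weights, which is exactly where the ethical responsibility of the system's creators lies.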

What are the two guiding principles of the Model AI Governance Framework proposed by Singapore's Personal Data Protection Commission (PDPC) and Infocomm Media Development Authority (IMDA)?

-The two guiding principles are that decisions made by AI should be explainable, transparent, and fair, and that AI systems should be human-centric, focusing on the benefit to humanity before other purposes.
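Explainability is easiest to see with a transparent model. The sketch below assumes a simple linear scoring model with hand-set, hypothetical weights; because the score is just a sum of per-feature contributions, each decision can be explained by listing those contributions. More complex models need dedicated explanation techniques, which this sketch does not cover.

```python
# Hypothetical, hand-set weights for a transparent loan-scoring model.
WEIGHTS = {"income_k": 0.04, "existing_debt_k": -0.05, "years_employed": 0.1}
BIAS = -2.0


def score_with_explanation(applicant):
    """Return the score and each feature's contribution to it."""
    contributions = {f: w * applicant[f] for f, w in WEIGHTS.items()}
    return BIAS + sum(contributions.values()), contributions


applicant = {"income_k": 60, "existing_debt_k": 20, "years_employed": 3}
score, contributions = score_with_explanation(applicant)

print("decision:", "approve" if score > 0 else "decline", f"(score {score:+.2f})")
# List contributions from most to least influential.
for feature, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {feature}: {value:+.2f}")
```

Because the explanation lists every input, it also makes fairness checks easier: a reviewer can see immediately whether a sensitive attribute, or an obvious proxy for one, is driving the decision.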

How does the AI Model Audit Framework help in ensuring the ethical and responsible use of AI?

-The AI Model Audit Framework provides a holistic view of what it takes to get an AI model to market safely and ethically, covering aspects such as internal governance, human involvement, decision making, operations management, and stakeholder interactions.

What role do quality and standards play in the adoption and implementation of AI technologies?

-Quality and standards set a bar for the industry to meet, ensuring that AI technologies are developed and implemented in a way that is efficient, secure, and reliable. They also facilitate trade and strengthen competitiveness by providing a benchmark for quality and performance.
