How AI tells Israel who to bomb

Summary

TLDR

Heba, a resident of northern Gaza, reflects on the loss of her home and the devastating impact of AI-directed bombing in the region. The Israel Defense Forces have used AI systems such as Gospel and Lavender to identify and target locations in Gaza, contributing to significant civilian casualties. These systems rely on extensive data collection and predictive algorithms, but their accuracy is limited, which results in high collateral damage. The use of AI in warfare raises ethical concerns and highlights the need for stringent oversight and accountability.

Takeaways

- 🌍 Heba's family evacuated from northern Gaza on October 11th, 2023, and by February 2024 her home had been destroyed.

- 📸 The Israel Defense Forces (IDF) use AI systems for targeting in Gaza, causing significant destruction.

- 💥 The AI system Gospel identifies bombing targets in Gaza by analyzing large volumes of data on the locations of Palestinians and suspected militants.

- 🔍 AI systems such as Alchemist and Fire Factory collect and categorize data that feeds into target generation.

- 🏢 "Power targets" include residential and high-rise buildings housing many civilians; striking them is intended to exert civil pressure on Hamas.

- ⚠️ Lavender, another AI system, targets specific individuals, often resulting in significant civilian casualties.

- 👨‍🔬 The effectiveness and accuracy of AI systems depend heavily on the quality of their data, on how well that data is understood, and on human oversight.

- 📜 Human analysts conduct independent examinations before targets are selected, but sometimes check only the target's gender.

- 🤖 Gaza is seen as an unwilling testing ground for future AI warfare technologies, with insufficient oversight and accountability.

- 🕊️ There is concern that faster, AI-driven warfighting will not bring global security and peace and may instead increase civilian casualties.

Q & A

What was Heba's reaction when she saw the picture of her house?

-Heba and her family were in shock upon seeing the picture of their destroyed home.

What role has AI played in the destruction of Gaza since October 7th, 2023?

-AI systems have been used to identify and direct bombing targets in Gaza, significantly shaping the scale of destruction.

What is the Iron Dome and how does it use AI?

-The Iron Dome is an air-defense system that uses AI to intercept incoming rockets, protecting Israel from aerial threats.

How does the SMASH system work?

-SMASH is an AI-powered precision sight for assault rifles that uses image-processing algorithms to help soldiers hit targets accurately.

What is the Gospel system?

-Gospel is an AI system that produces bombing targets in Gaza by analyzing surveillance and historical data.

What are 'power targets' according to the IDF?

-Power targets are residential and high-rise buildings housing civilians, which the IDF strikes to exert civil pressure on Hamas.

What is the Lavender system and its function?

-Lavender is an AI system that targets specific individuals, identifying Hamas and Islamic Jihad operatives based on historical data and surveillance.

What was the human approval process for AI-selected targets?

-In some cases, human approval consisted only of confirming that the AI-selected target was male before the homes of suspected junior militants were bombed.

What are some concerns experts have about using AI in warfare?

-Experts are concerned that target selection is being automated in imprecise and biased ways, increasing civilian casualties without sufficient oversight.

Why has Israel not signed the US-led international framework for the responsible use of AI in war?

-The video does not say why Israel has not signed it, but it highlights the need for more oversight and accountability in the use of AI in warfare.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

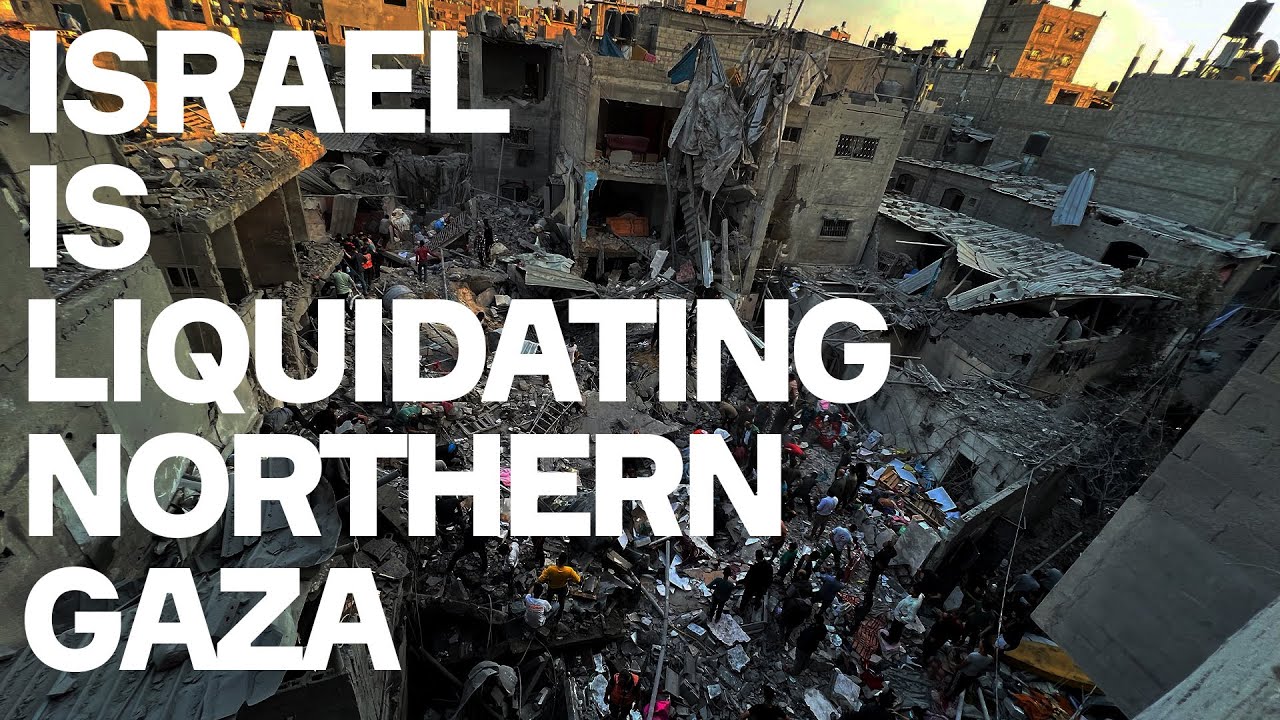

Israel Is Liquidating Northern Gaza - And Boasting About It

Sangue e farina: Gaza attraverso gli occhi di Amal Khayal

US says “policy of starvation” in Gaza would be “horrific and unacceptable” | BBC News

Palestinian ambassador to the UN addresses General Assembly

Penampakan Hiroshima-Nagasaki Dibom AS pada 79 Tahun Lalu

Controversy over the decision to drop atomic bombs on Japan still lingers

5.0 / 5 (0 votes)