COS 333: Chapter 6, Part 3

Summary

TLDRThis lecture delves into advanced data types, focusing on unions, pointers, and references, and their implications on memory management and type safety. It distinguishes between free and discriminated unions, highlighting the dangers of type-unsafe operations in C and C++. The lecture also explores strong versus weak typing, type equivalence, and the role of type checking in preventing errors, concluding with a brief introduction to data type theory and its significance in programming language design.

Takeaways

- 📚 The lecture concludes the discussion on Chapter Six, covering advanced topics like union types, pointer and reference types, type checking, strong versus weak typing, type equivalence, and data type theory.

- 🔄 Union types allow a variable to store different data types at different times but only one value can be set at a time, sharing the same memory space for all fields.

- 🛠️ Unions are useful for conserving memory space when only one of multiple variables is in use at a time, but they are less useful in modern programming due to the abundance and affordability of memory.

- ⚠️ Free unions do not support type checking and can lead to unsafe programming practices, while discriminated unions, supported by languages like Ada, include type checking for safety.

- 🔬 Discriminated unions use a 'discriminant' or 'tag' to track the type of data stored, preventing errors associated with accessing the wrong fields.

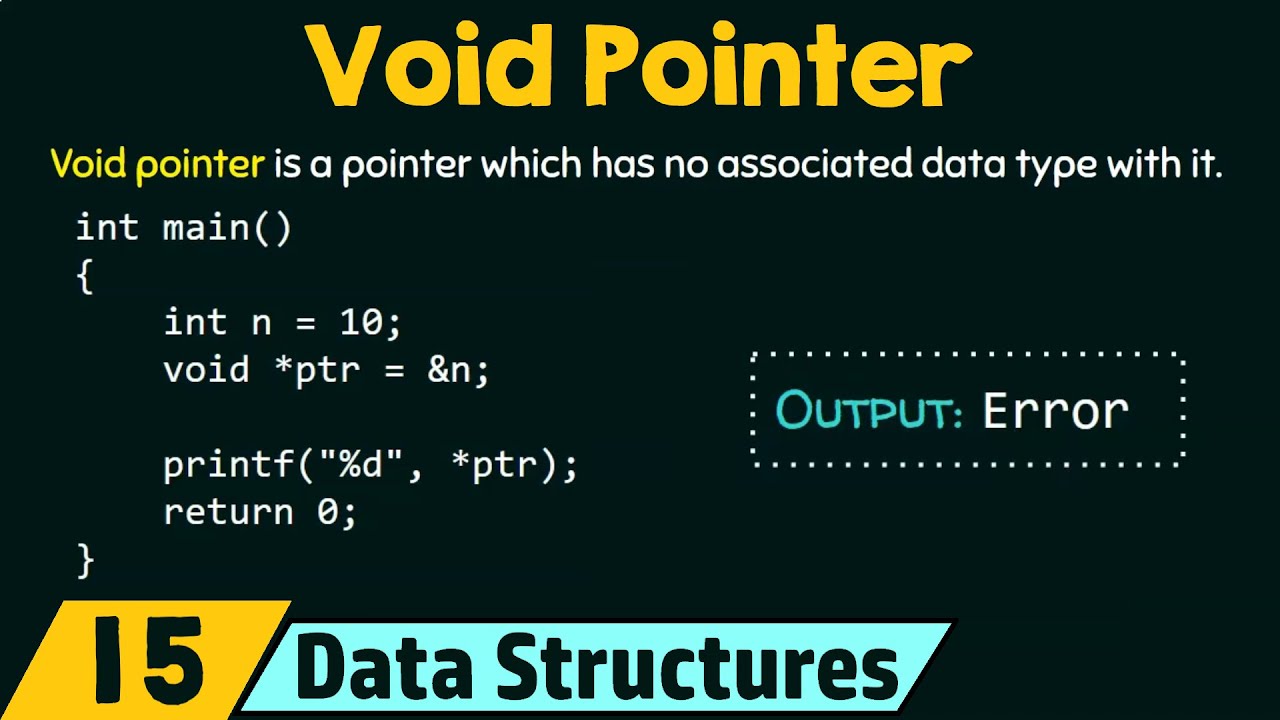

- 👀 Pointers store memory addresses and can be used for indirect addressing and dynamic memory management, but they can introduce complexity and potential errors like dangling pointers and memory leaks.

- ➡️ Pointer arithmetic allows for the manipulation of memory addresses to access different parts of memory but requires careful handling to avoid out-of-bounds access.

- 🔐 References in languages like C++ and Java provide a safer alternative to pointers for indirect addressing, as they cannot perform pointer arithmetic and must always be initialized.

- 🔄 Coercion can weaken typing in programming languages by automatically converting types to match operators, potentially masking type mismatches.

- ⚖️ Strong typing ensures that type errors are always detected, leading to more reliable code, while weak typing may allow certain type errors to go undetected.

- 📘 The lecture touches on type theory, distinguishing between practical type theory relevant to commercial programming languages and abstract type theory, which is of more interest to theoretical computer scientists.

Q & A

What is a union type in programming?

-A union type is a type that can store values of different types at different times during execution. It is characterized by the fact that only one of its fields may contain a value at a time, and all fields within a union share the same storage space.

Why are unions considered less useful in modern programming languages?

-Unions are considered less useful in modern programming languages because they were particularly useful in the early days of computing when memory was scarce and expensive. Today, with large and comparatively cheap memory, the need for conserving memory space through unions has diminished.

What are the two design issues that arise when implementing unions in a programming language?

-The two design issues are whether type checking should be required for unions and whether unions should be embedded within records or be a separate type on their own.

What is the difference between free unions and discriminated unions?

-Free unions do not support type checking, leaving it to the programmer to track which type is stored. Discriminated unions, on the other hand, support type checking through the use of a discriminant or tag that helps check the type of the union and detect errors when invalid fields are accessed.

Why are pointers considered dangerous in programming?

-Pointers are considered dangerous because they can lead to issues like dangling pointers and lost heap dynamic variables. They also increase the number of routes to access a specific line in a program, similar to the 'go-to' statement, which can complicate program flow and make it harder to understand.

What are the two fundamental operations that must be supported for pointers in a programming language?

-The two fundamental operations for pointers are assignment, which allows setting a pointer's value to a memory address, and dereferencing, which retrieves the value stored at the location a pointer refers to.

What is pointer arithmetic, and how is it used?

-Pointer arithmetic allows the addition of values to pointers to compute offsets from a specific memory location. It is used to access elements in arrays or to navigate through memory addresses.

What is the difference between a pointer and a reference in programming?

-A pointer stores the memory address of a value it refers to, while a reference is an alias for another value. Pointers can be manipulated as addresses, whereas references are treated as the values they refer to and cannot perform pointer arithmetic.

Why are references considered safer than pointers?

-References are considered safer than pointers because they cannot be used for pointer arithmetic, which prevents errors like accessing memory beyond the bounds of an array. Additionally, references must always be initialized to an existing object, avoiding the issue of uninitialized or dangling references.

What is type checking, and why is it important?

-Type checking is the process of verifying that the operands of an operator are of compatible types. It is important because it helps ensure that operations are performed on the correct types of data, preventing type errors that could lead to unexpected behavior or program crashes.

What is the difference between static and dynamic type checking?

-Static type checking is performed at compile-time and can catch type errors before the program runs. Dynamic type checking occurs at runtime and may result in runtime type errors if the types are not compatible.

What does it mean for a programming language to be strongly typed?

-A strongly typed programming language is one that always detects type errors, ensuring that all variable misuses causing type errors are caught, typically at compile-time.

What is type equivalence in the context of programming languages?

-Type equivalence defines when operands of two types can be substitutable with no coercion taking place. It sets the rules for which types are acceptable for all operators and can be based on either name type equivalence or structure type equivalence.

What are the potential drawbacks of implementing structure type equivalence in a programming language?

-Implementing structure type equivalence can be complex and may lead to potential problems such as difficulty in differentiating between types with the same structure but different meanings, and the need to answer a multitude of questions regarding how types should be considered equivalent.

What is data type theory, and what are its branches?

-Data type theory is a broad area of study concerned with data types and their properties. It has two main branches: practical type theory, which deals with data types in commercial and real-world programming languages, and abstract type theory, which often involves typed lambda calculus and is of interest mainly to theoretical computer scientists.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

5.0 / 5 (0 votes)