What is Semi-Supervised Learning?

Summary

TLDR: This video explains semi-supervised learning, a method that combines a small amount of labeled data with abundant unlabeled data to improve AI model performance. Using the example of classifying cats and dogs, it highlights the challenges of manual labeling, overfitting, and limited datasets. The video covers key techniques including the wrapper method with pseudo-labels, unsupervised pre-processing with autoencoders, clustering-based methods, and active learning with human input. By combining these approaches, models can learn more effectively while reducing annotation effort. The process is likened to raising a pet: balancing guidance, freedom, and continuous learning for optimal results.

Takeaways

- 🐱 Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data to train AI models effectively.

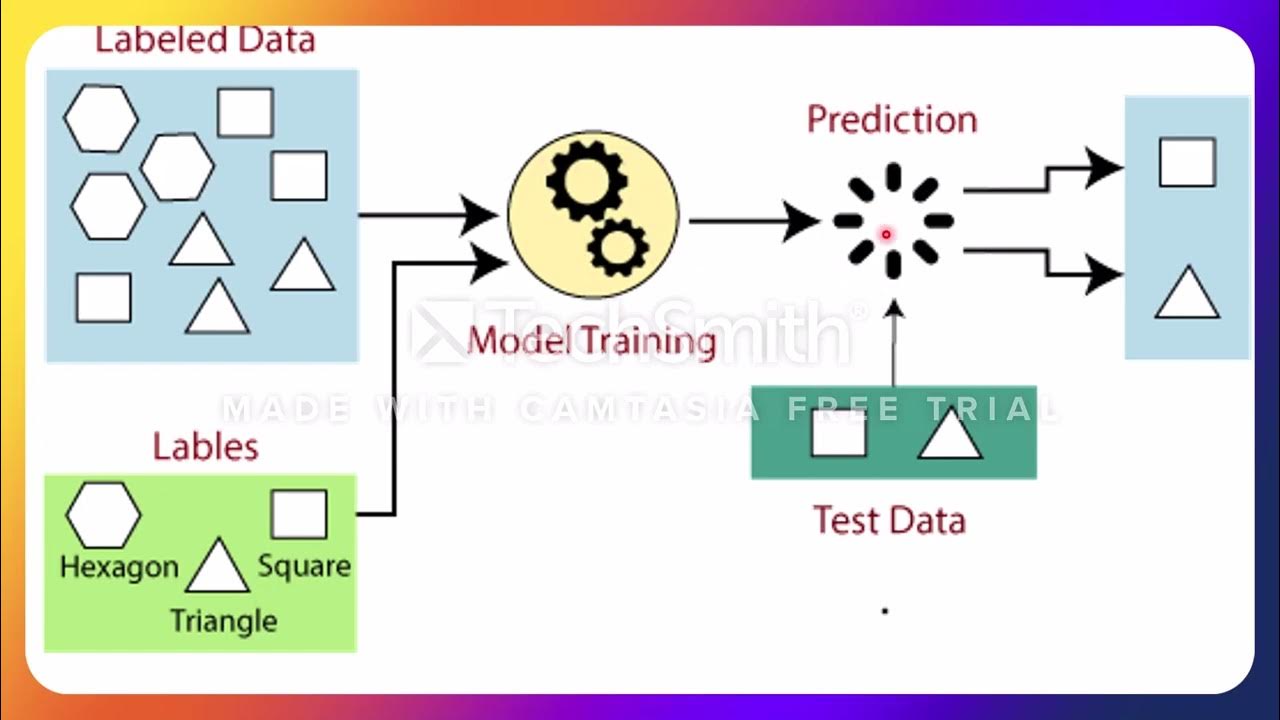

- 📊 Supervised learning requires fully labeled datasets, which can be time-consuming and tedious to create.

- ⏳ Labeling data, especially in specialized domains like genetics or protein classification, requires both time and domain expertise.

- ⚠️ Using only a limited amount of labeled data can lead to overfitting, where the model performs well on training data but poorly on new data.

- 📈 Incorporating unlabeled data into training expands the dataset, helping the model generalize better.

- 📝 The wrapper method uses a trained model to assign pseudo-labels to unlabeled data, which can then be added to the training set for iterative retraining.

- 🤖 Unsupervised pre-processing, like using autoencoders, extracts meaningful features from unlabeled data to improve supervised model performance.

- 🔗 Clustering-based methods group similar data points together, allowing unlabeled points in a cluster to adopt labels from nearby labeled examples.

- 👥 Active learning involves human annotators labeling only low-confidence or ambiguous samples to maximize efficiency.

- 🔄 Semi-supervised techniques can be combined in one workflow for better results, for example pre-processing, clustering, pseudo-labeling, and active learning.

- 🎯 The ultimate goal is to create a better-fitting AI model with minimal labeling effort, leveraging both structure and flexibility in learning.

Q & A

What is semi-supervised learning?

- Semi-supervised learning is a machine learning approach that combines a small amount of labeled data with a larger amount of unlabeled data to improve model performance and generalization.

Why is labeling data considered challenging?

- Labeling data can be time-consuming, tedious, and often requires domain expertise, especially in specialized areas like genetics or protein classification.

How does supervised learning work in image classification?

- Supervised learning uses a labeled dataset where each image is tagged with a class, like 'cat' or 'dog.' The model learns patterns from these labeled examples and adjusts its parameters to correctly predict labels on new images.
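
To make "adjusts its parameters" concrete, here is a minimal Python sketch. It assumes each image has already been reduced to a hypothetical two-number feature vector, and it uses logistic regression trained by gradient descent as a stand-in classifier; the video does not name a model, and the data below is invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.5, epochs=500):
    """Fit weights w and bias b by full-batch gradient descent on the log-loss."""
    w = [0.0] * len(xs[0])
    b = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the log-loss with respect to the logit
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
            grad_b += err
        # The "adjust parameters" step: move against the average gradient.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0

# Hypothetical features (e.g. "ear pointiness", "snout length"); 0 = cat, 1 = dog.
X = [(0.9, 0.2), (0.8, 0.3), (0.85, 0.25), (0.2, 0.9), (0.3, 0.8), (0.25, 0.85)]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1, 1, 1]
print(predict(w, b, (0.7, 0.35)), predict(w, b, (0.35, 0.7)))  # 0 1
```

With only six labeled examples, a model like this can fit the training set perfectly, which is exactly the small-data situation where overfitting becomes a concern.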

What is overfitting and why is it a concern in supervised learning?

- Overfitting occurs when a model performs well on the training data but poorly on unseen data. It happens when the dataset is small or unrepresentative, causing the model to learn irrelevant patterns rather than meaningful features.

How does semi-supervised learning help reduce overfitting?

- By incorporating a large amount of unlabeled data, semi-supervised learning expands the data the model learns from, either directly through pseudo-labels or indirectly by revealing the structure of the inputs. This gives the model more context and improves its ability to generalize to new examples.

What is the wrapper method in semi-supervised learning?

- The wrapper method trains a base model on labeled data, predicts labels for unlabeled data as pseudo-labels, and retrains the model on the combined dataset iteratively to improve accuracy.
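
The wrapper (self-training) loop can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the base model is a nearest-centroid classifier, confidence is a softmax over negative squared distances, and the 0.9 threshold and toy 2-D points are hypothetical choices, not values from the video.

```python
import math

def fit_centroids(xs, ys):
    """'Train' a nearest-centroid classifier: one mean vector per class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        acc = sums.setdefault(y, [0.0] * len(x))
        for j, v in enumerate(x):
            acc[j] += v
        counts[y] = counts.get(y, 0) + 1
    return {c: [v / counts[c] for v in s] for c, s in sums.items()}

def predict_proba(centroids, x):
    """Softmax over negative squared distances -> (label, confidence)."""
    scores = {c: -sum((a - m) ** 2 for a, m in zip(x, mu)) for c, mu in centroids.items()}
    top = max(scores.values())
    exps = {c: math.exp(s - top) for c, s in scores.items()}  # shift for stability
    label = max(exps, key=exps.get)
    return label, exps[label] / sum(exps.values())

def self_train(labeled_x, labeled_y, unlabeled, threshold=0.9, max_rounds=5):
    xs, ys, pool = list(labeled_x), list(labeled_y), list(unlabeled)
    for _ in range(max_rounds):
        model = fit_centroids(xs, ys)
        confident, remaining = [], []
        for x in pool:
            label, conf = predict_proba(model, x)
            (confident if conf >= threshold else remaining).append((x, label))
        if not confident:
            break  # nothing new passed the threshold, so stop iterating
        for x, label in confident:
            xs.append(x)
            ys.append(label)  # pseudo-label is treated as ground truth from now on
        pool = [x for x, _ in remaining]
    return fit_centroids(xs, ys), pool

labeled_x = [(0.0, 0.0), (10.0, 10.0)]
labeled_y = ["cat", "dog"]
unlabeled = [(1.0, 1.0), (2.0, 0.0), (9.0, 9.0), (10.0, 8.0), (5.0, 5.0)]
model, still_unlabeled = self_train(labeled_x, labeled_y, unlabeled)
print(still_unlabeled)  # [(5.0, 5.0)]
```

The ambiguous midpoint never clears the threshold, so it stays out of the training set instead of being added with a coin-flip label.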

How do autoencoders assist in semi-supervised learning?

- Autoencoders extract compact and meaningful features from unlabeled data, capturing essential patterns like shapes and textures. These features help train supervised models more effectively and improve generalization.
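
As a minimal sketch of the idea, the Python below trains a tiny linear autoencoder (2-D input, 1-D code) by gradient descent on unlabeled points only. Real autoencoders for images are deep nonlinear networks; the linear model, learning rate, initial weights, and toy data are simplifying assumptions. The learned 1-D code is the compact feature a downstream supervised model could consume.

```python
def encode(e, x):
    """Encoder: project the input onto weights e to get a 1-D code."""
    return sum(ei * xi for ei, xi in zip(e, x))

def decode(d, z):
    """Decoder: expand the 1-D code back to input space."""
    return [di * z for di in d]

def loss(e, d, data):
    """Mean squared reconstruction error."""
    total = 0.0
    for x in data:
        r = decode(d, encode(e, x))
        total += sum((xi - ri) ** 2 for xi, ri in zip(x, r))
    return total / len(data)

def train_autoencoder(data, e, d, lr=0.05, epochs=1000):
    n = len(data)
    for _ in range(epochs):
        ge = [0.0] * len(e)
        gd = [0.0] * len(d)
        for x in data:
            z = encode(e, x)
            resid = [xi - di * z for xi, di in zip(x, d)]  # x minus reconstruction
            d_dot_r = sum(di * ri for di, ri in zip(d, resid))
            for j in range(len(x)):
                gd[j] += -2.0 * resid[j] * z       # dL/dd_j
                ge[j] += -2.0 * d_dot_r * x[j]     # dL/de_j
        d = [dj - lr * g / n for dj, g in zip(d, gd)]
        e = [ej - lr * g / n for ej, g in zip(e, ge)]
    return e, d

# Hypothetical unlabeled feature vectors that mostly vary along one direction.
unlabeled = [(-2.0, -2.1), (-1.0, -0.9), (0.0, 0.1), (1.0, 0.9), (2.0, 2.1)]
e0, d0 = [0.5, 0.3], [0.5, 0.3]
before = loss(e0, d0, unlabeled)
e, d = train_autoencoder(unlabeled, e0, d0)
after = loss(e, d, unlabeled)
codes = [encode(e, x) for x in unlabeled]  # compact features for a later classifier
print(round(before, 3), round(after, 3))
```

No labels are used anywhere in training; the network learns which direction in the data carries the information simply by trying to reconstruct its own inputs.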

What role does clustering play in semi-supervised learning?

- Clustering groups similar data points together based on their features. Unlabeled data in a cluster can be assigned pseudo-labels based on the labeled examples within the same cluster, helping expand the training dataset.
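
A cluster-then-label step might look like the Python sketch below: run k-means over labeled and unlabeled points together, then give each unlabeled point the majority label of the labeled points in its cluster. Seeding the centroids with labeled points, the value of k, and the toy data are assumptions for illustration; clusters with no labeled member are left without a pseudo-label.

```python
from collections import Counter

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, init_centroids, iters=20):
    """Plain Lloyd's k-means starting from the given centroids."""
    centroids = [list(c) for c in init_centroids]
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
                  for p in points]
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assign) if a == k]
            if members:  # keep the old centroid if a cluster empties out
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return assign, centroids

def cluster_then_label(labeled, unlabeled, k):
    """labeled: list of (point, label); unlabeled: list of points."""
    points = [p for p, _ in labeled] + list(unlabeled)
    # Assumption: seed centroids with the first k labeled points.
    assign, _ = kmeans(points, [p for p, _ in labeled[:k]])
    votes = {}
    for (_, label), a in zip(labeled, assign):
        votes.setdefault(a, Counter())[label] += 1
    pseudo = []
    for p, a in zip(unlabeled, assign[len(labeled):]):
        if a in votes:  # only clusters that contain a labeled example vote
            pseudo.append((p, votes[a].most_common(1)[0][0]))
    return pseudo

labeled = [((0.0, 0.0), "cat"), ((10.0, 10.0), "dog")]
unlabeled = [(1.0, 0.5), (0.5, 1.5), (9.0, 9.5), (10.5, 9.0)]
pseudo = cluster_then_label(labeled, unlabeled, k=2)
print(pseudo)
```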

What is active learning and how is it used in semi-supervised learning (SSL)?

- Active learning involves humans labeling only low-confidence or ambiguous examples. It ensures human effort is focused on data points where the model is unsure, improving overall training efficiency.
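
One common way to pick "low-confidence" samples is margin sampling: rank unlabeled items by the gap between the model's two most likely classes and send the smallest gaps to a human annotator. The Python sketch below assumes the model already outputs per-class probabilities; the item ids, probabilities, and labeling budget are hypothetical.

```python
def margin(probs):
    """Gap between the two most likely classes; a small gap means the model is unsure."""
    top = sorted(probs.values(), reverse=True)
    return top[0] - (top[1] if len(top) > 1 else 0.0)

def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` least-confident samples (margin sampling)."""
    ranked = sorted(predictions, key=lambda item_id: margin(predictions[item_id]))
    return ranked[:budget]

predictions = {
    "img_01": {"cat": 0.97, "dog": 0.03},
    "img_02": {"cat": 0.52, "dog": 0.48},
    "img_03": {"cat": 0.10, "dog": 0.90},
    "img_04": {"cat": 0.45, "dog": 0.55},
}
to_label = select_for_annotation(predictions, budget=2)
print(to_label)  # ['img_02', 'img_04']
```

The annotated items join the labeled set, while the confident remainder can still go through pseudo-labeling as in the wrapper method.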

Can multiple semi-supervised learning techniques be combined?

- Yes, techniques can be combined. For example, autoencoders can extract features from unlabeled data, clustering can group similar points, the wrapper method can refine pseudo-labels, and active learning can target uncertain samples for human labeling.

Why is semi-supervised learning especially valuable in specialized domains?

- In specialized domains like genetic sequencing or protein classification, labeled data is scarce and costly to obtain. Semi-supervised learning allows models to leverage abundant unlabeled data to improve performance without extensive labeling.

What is a pseudo-label in the context of semi-supervised learning?

- A pseudo-label is a label assigned to an unlabeled data point by a model, typically with a probability indicating the model's confidence. High-confidence pseudo-labels are treated as ground truth for retraining.
