Top Ten MLOps Interview questions|| ML and AI #mlops #machinelearning # artificial intelligence

Summary

TLDR: In this video, Pradeep walks through the top 10 MLOps interview questions, covering essential concepts such as MLOps pipelines, data pipelines, training, testing, and model inference. He explains the importance of integrating tools like Apache Spark, Airflow, and AWS services for managing machine learning models in production. Data drift, model drift, and continuous monitoring are also discussed as ways to keep models effective over time. The session offers practical insights into building and maintaining efficient MLOps systems, which makes it useful for anyone preparing for MLOps roles.

Takeaways

- MLOps is a paradigm for deploying and maintaining machine learning models in production reliably and efficiently, addressing challenges such as data drift and model drift.

- Only about 10% of ML models make it to production, which is why a well-defined MLOps pipeline matters for deployment success.

- An MLOps system consists of five key pipelines: Data Pipeline, Training Pipeline, Testing Pipeline, Model Inference Pipeline, and Model Monitoring Pipeline.

- MLOps extends DevOps with two additional elements, DataOps and ModelOps, which focus on managing data drift and model drift within the production pipeline.

- Data Pipelines collect and process raw data and transform it into features for model training, using tools like Apache Spark and AWS SageMaker.

- Training Pipelines load models from the development environment, experiment with hyperparameters, and retrain the models with new data, using tools like MLflow and Airflow.

- The Testing Pipeline validates models with unseen data and checks integration and performance across multiple stages: validation, unit testing, user acceptance testing, and deployment.

- In the Inference Pipeline, trained models are deployed to production environments, where they make predictions on live data with frameworks like FastAPI or MLflow.

- Model and Data Monitoring Pipelines track performance and detect drift in data or model behavior, triggering actions to retrain or adapt the models.

- Managing data drift involves detecting significant deviations in data patterns, which can trigger automatic actions such as model retraining or a return to the development environment for further experiments.

Q & A

What is MLOps and why is it important?

- MLOps is a paradigm for deploying and maintaining machine learning models in production reliably and efficiently. It matters because only about 10% of machine learning models make it to production, and a well-defined MLOps pipeline helps models get deployed successfully even in the face of challenges like data drift and model drift.

What are the different components in an MLOps system design?

- An MLOps system can be designed around five main pipelines: data pipeline, training pipeline, testing pipeline, model inference pipeline, and model monitoring pipeline. Together they manage the entire lifecycle of an ML model, from development through deployment to monitoring in production.

What is the role of a data pipeline in MLOps?

- A data pipeline in MLOps collects, processes, and prepares raw data for training models. It involves stages such as data ingestion, feature engineering, and storing features in a physical store, from which they are later read to train the model. Tools like Apache Spark, Airflow, and AWS SageMaker are commonly used for managing data pipelines.
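As a rough illustration of the stages named above (ingestion, feature engineering, storing features), the sketch below uses only the Python standard library. The record fields (`user_id`, `amount`), the function names, the cleaning rule, and the dictionary standing in for a feature store are all assumptions made for illustration; the video does not specify them, and a production pipeline would more likely run on tools such as Apache Spark or Airflow.

```python
from statistics import mean


def ingest(raw_rows):
    """Ingestion: keep only rows with no missing values (hypothetical rule)."""
    return [row for row in raw_rows if None not in row.values()]


def engineer_features(rows):
    """Feature engineering: derive per-user aggregates from cleaned rows."""
    amounts_by_user = {}
    for row in rows:
        amounts_by_user.setdefault(row["user_id"], []).append(row["amount"])
    return {
        user: {"avg_amount": mean(amounts), "n_orders": len(amounts)}
        for user, amounts in amounts_by_user.items()
    }


def run_data_pipeline(raw_rows, feature_store):
    """Run ingestion and feature engineering, then write to the store.

    `feature_store` is a plain dict standing in for a real feature store.
    """
    features = engineer_features(ingest(raw_rows))
    feature_store.update(features)
    return features
```

The training pipeline would then read from the same store rather than from the raw data.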

How does the training pipeline work in MLOps?

- The training pipeline loads models from the development environment and retrains them on new data, either with the existing hyperparameters or while experimenting with new ones. It is also connected to an MLflow tracking server, which logs every model run so that different models can be tracked and compared during training.
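To make the idea of logging every run concrete, here is a minimal sketch that trains a one-parameter model under several hyperparameter settings and records each run. The list of run records is only a stand-in for a tracking server such as MLflow, and the model, function names, and hyperparameter grid are assumptions chosen to keep the example self-contained.

```python
def train(xs, ys, learning_rate, epochs):
    """Fit y ~ w * x by gradient descent; return (w, mean squared error)."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    mse = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, mse


def training_pipeline(xs, ys, grid):
    """Train once per hyperparameter setting and log every run.

    Returns (best_run, all_runs); `all_runs` plays the role of the
    tracking server's run history.
    """
    runs = []
    for params in grid:
        w, mse = train(xs, ys, **params)
        runs.append({
            "run_id": len(runs) + 1,
            "params": dict(params),
            "metrics": {"w": w, "mse": mse},
        })
    best_run = min(runs, key=lambda run: run["metrics"]["mse"])
    return best_run, runs
```

Because every run is kept, the comparison between settings can be repeated later without retraining.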

What is a testing pipeline and why is it important?

- The testing pipeline checks that the model performs as expected before it is deployed to production. It validates the model with unseen data, runs unit and integration tests, and passes the model through stages such as staging and user acceptance testing. Once the model passes these tests, it moves to the inference pipeline for deployment.
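The gating behaviour described above can be sketched as an ordered list of checks, where a model is promoted only if every stage passes. The stage names, the helper functions, and the idea of representing a model as a plain callable are assumptions for illustration, not details from the video.

```python
def holdout_accuracy(model, examples):
    """Fraction of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for x, label in examples if model(x) == label)
    return correct / len(examples)


def run_testing_pipeline(model, stages):
    """Run (name, check) stages in order and stop at the first failure.

    Each check is a callable that takes the model and returns True or False.
    """
    passed = []
    for name, check in stages:
        if not check(model):
            return {"promoted": False, "failed_stage": name, "passed": passed}
        passed.append(name)
    return {"promoted": True, "failed_stage": None, "passed": passed}
```

A caller might supply stages such as `("validation", lambda m: holdout_accuracy(m, unseen_data) >= 0.9)` followed by unit, integration, and acceptance checks, where `unseen_data` and the 0.9 cutoff are again hypothetical.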

What is the purpose of the model inference pipeline?

- The model inference pipeline deploys the trained model into a production environment, where it makes predictions on live data. It uses frameworks like FastAPI and MLflow for deployment, and once the model is deployed, it feeds the model's performance into the monitoring pipeline for ongoing evaluation.
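The following sketch shows the core of such a serving step without any web framework: a handler that validates a request, calls the model, and records the prediction for the monitoring pipeline. The handler factory, the payload shape, and the list used as a monitoring log are all assumptions; in practice a function like this would sit behind an endpoint in a framework such as FastAPI.

```python
import time


def make_predict_handler(model, feature_names, monitoring_log):
    """Build a predict function for a model given as a plain callable.

    `monitoring_log` is a list standing in for whatever the monitoring
    pipeline actually consumes.
    """
    def predict(payload):
        missing = [name for name in feature_names if name not in payload]
        if missing:
            raise ValueError(f"missing features: {missing}")
        features = [float(payload[name]) for name in feature_names]
        prediction = model(features)
        # Record every prediction so monitoring can evaluate it later.
        monitoring_log.append({
            "timestamp": time.time(),
            "features": features,
            "prediction": prediction,
        })
        return {"prediction": prediction}

    return predict
```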

How do data and model monitoring work in MLOps?

- The data and model monitoring pipeline keeps models performing well over time. It tracks data drift and model drift, that is, changes in the input data or degradation in the model's own performance. Alerts are triggered when deviations are detected, and corrective actions are taken to retrain the model or address data issues.
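One simple way to picture the alerting step is shown below. The video does not say how drift is measured, so both metrics here are assumptions: data drift is approximated by the relative shift in a feature's mean, and model drift by the drop in accuracy against a baseline. The default thresholds are likewise placeholders.

```python
from statistics import mean


def relative_mean_shift(reference, live):
    """Data drift proxy: relative change in the mean of one feature."""
    ref_mean = mean(reference)
    if ref_mean == 0:
        raise ValueError("reference mean is zero; relative shift is undefined")
    return abs(mean(live) - ref_mean) / abs(ref_mean)


def accuracy_drop(baseline_accuracy, live_accuracy):
    """Model drift proxy: how far live accuracy fell below the baseline."""
    return max(0.0, baseline_accuracy - live_accuracy)


def check_drift(reference, live, baseline_accuracy, live_accuracy,
                data_threshold=0.05, model_threshold=0.05):
    """Return the list of alerts raised by the two drift proxies."""
    alerts = []
    if relative_mean_shift(reference, live) > data_threshold:
        alerts.append("data_drift")
    if accuracy_drop(baseline_accuracy, live_accuracy) > model_threshold:
        alerts.append("model_drift")
    return alerts
```

Real monitoring setups often use distribution-level tests rather than a mean shift; the point here is only the shape of the check, with a metric compared against a threshold to produce an alert.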

What is data drift and how is it handled in MLOps?

- Data drift occurs when the distribution of input data changes over time, which can affect model performance. In MLOps, triggers are set to detect deviations in data patterns. For minor deviations (1-2%), no action is taken; for larger deviations (5-8%), the training pipeline is triggered to retrain the model; and for significant changes (over 10%), the model may need to return to the development environment for further experimentation.
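The tiered response above can be expressed as a small decision function. Note that the quoted bands leave gaps (between 2% and 5%, and between 8% and 10%), so the cutoffs below are an assumption: anything under 5% is treated as no action, 5% up to and including 10% triggers retraining, and anything above 10% sends the model back to development. The function name and action labels are hypothetical.

```python
def drift_action(deviation_pct, retrain_at=5.0, reexperiment_above=10.0):
    """Map a measured data deviation (in percent) to a pipeline action.

    The default cutoffs are assumed values that fill the gaps between
    the bands quoted in the text.
    """
    if deviation_pct < 0:
        raise ValueError("deviation_pct must be non-negative")
    if deviation_pct > reexperiment_above:
        return "re-experiment in development"
    if deviation_pct >= retrain_at:
        return "trigger training pipeline"
    return "no action"
```

Keeping the cutoffs as parameters makes it easy to tune them per model instead of hard-coding one policy.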

What are the two ways to implement MLOps?

- MLOps can be implemented in two main ways: on-premises and in the cloud. On-premises solutions typically use open-source tools like MLflow, Apache Airflow, and PyCaret, while cloud-based solutions rely on managed services like AWS SageMaker, Google Cloud AI, and Azure ML, which provide scalable infrastructure and built-in features for deploying and managing models.

What are the advantages of implementing an MLOps system over a simple model deployment?

- An MLOps system offers several advantages over simple model deployment: less lag between model development and deployment, continuous training on new data, and automated monitoring of model performance. It also enables effective experiment tracking and keeps models regularly updated as the data changes, leading to improved accuracy and reliability over time.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

MLOps System Architecture|| MLOps|| #mlops #machinelearning # MLOps System Architecture

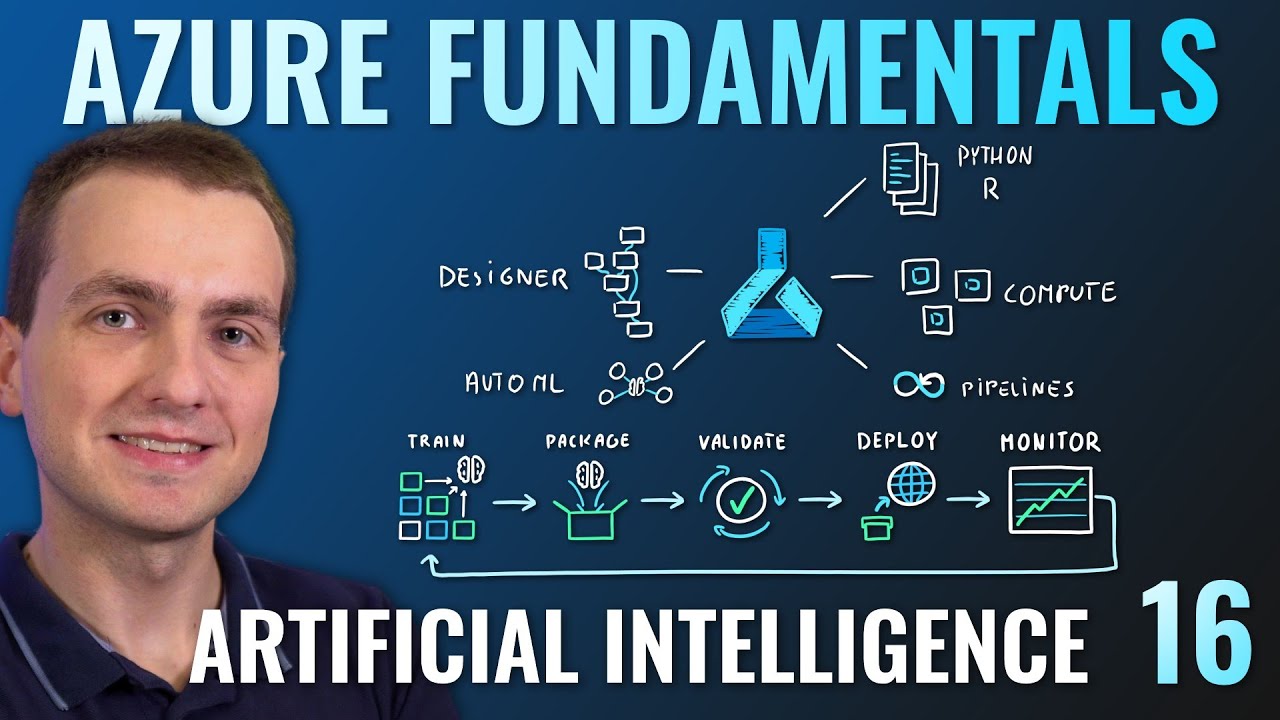

AZ-900 Episode 16 | Azure Artificial Intelligence (AI) Services | Machine Learning Studio & Service

Course 4 (113520) - Lesson 1

3 Must-Know Trends for Data Engineers | DataOps

Data Science Jobs Explained in 5 Minutes

ML Engineering is Not What You Think - ML jobs Explained

5.0 / 5 (0 votes)