SOSP '23 | Efficient Memory Management for Large Language Model Serving with PagedAttention

Summary

TL;DR: This presentation discusses the challenge of serving large language models (LLMs) efficiently on GPUs, focusing on memory fragmentation in the KV cache. The proposed solution, PagedAttention, partitions the KV cache into blocks and virtualizes them, which sharply reduces fragmentation and improves throughput. Because blocks can be shared between sequences, it also saves memory during parallel sampling and beam search. When GPU memory runs out, the system preempts requests and restores their KV cache by swapping or recomputation. The authors report up to 4x higher throughput and 2.5-5x better memory utilization, demonstrate the system on real-world workloads, and note that open-source adoption is already in progress.

Takeaways

- 😀 PagedAttention improves memory efficiency for serving large language models (LLMs) by reducing fragmentation and enabling memory sharing.

- 😀 Serving large language models on GPUs can be slow and costly: a single GPU may handle less than one request per second for a moderately sized model.

- 😀 Batching multiple requests together helps improve GPU parallelism, but inefficient memory management for KV cache (key-value cache) remains a major bottleneck.

- 😀 KV cache is a critical part of the inference process, but traditional methods of memory allocation for KV cache are highly inefficient, leading to significant memory waste due to fragmentation.

- 😀 PagedAttention works by partitioning the KV cache into small fixed-size blocks and virtualizing them, similar to how operating systems use paging to reduce fragmentation.

- 😀 The system uses a block table to map logical and physical KV blocks, reducing memory waste by efficiently reusing memory across different requests.

- 😀 Although the memory indirection adds a slight overhead of about 10-15%, PagedAttention delivers significant memory savings and higher throughput.

- 😀 PagedAttention enables memory sharing between parallel samples during decoding, reducing both computation and memory usage, especially in tasks like beam search.

- 😀 With the use of recomputation and swapping techniques, the system efficiently manages memory even when all physical blocks are filled, preempting requests as needed.

- 😀 The system supports various preemption strategies, including swapping to CPU RAM and recomputing KV cache, with recomputation being faster and more efficient in most cases.

- 😀 vLLM, the serving system built on PagedAttention, was implemented using PyTorch and Hugging Face Transformers, with Ray and Megatron-LM for distributed execution. In the evaluation it outperformed state-of-the-art serving systems by up to 4x in throughput.
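
The block-table idea from the takeaways above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `BlockAllocator`, `Sequence`, and `locate` are hypothetical names, and the pool holds only block ids rather than real GPU tensors. It shows a sequence acquiring a new physical block only when its last block is full, and a token position being resolved through the table.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV block (the block size the summary reports)


class BlockAllocator:
    """Hands out physical block ids from a fixed pool (hypothetical helper)."""

    def __init__(self, num_blocks: int) -> None:
        self.free = list(range(num_blocks))

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("no free physical KV blocks")
        return self.free.pop()

    def release(self, block_id: int) -> None:
        self.free.append(block_id)


@dataclass
class Sequence:
    num_tokens: int = 0
    # Block table: index = logical block number, value = physical block id.
    block_table: list = field(default_factory=list)

    def append_token(self, allocator: BlockAllocator) -> None:
        # A new physical block is needed only when every existing block is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(allocator.allocate())
        self.num_tokens += 1

    def locate(self, token_idx: int) -> tuple:
        """Translate a token position into (physical block id, offset in block)."""
        if not 0 <= token_idx < self.num_tokens:
            raise IndexError(token_idx)
        return self.block_table[token_idx // BLOCK_SIZE], token_idx % BLOCK_SIZE


allocator = BlockAllocator(num_blocks=8)
seq_a, seq_b = Sequence(), Sequence()
for _ in range(17):  # interleave two requests so their blocks are not adjacent
    seq_a.append_token(allocator)
    seq_b.append_token(allocator)
print(seq_a.block_table, seq_b.block_table)
```

Because each request only ever holds the blocks it has actually filled (plus one partially filled block), the physical blocks of a sequence do not need to be contiguous, and a finished request's blocks can be handed to any other request.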

Q & A

What is the main problem the paper addresses?

-The paper addresses inefficient memory management when serving large language models (LLMs), specifically the management of the key-value (KV) cache, which makes inference slow and expensive.

What is the KV cache, and why is it important in LLM inference?

-The KV cache stores the intermediate state of each token (its attention keys and values) during LLM inference. It is crucial because the model must retain this state to generate the next token in the sequence, and managing this memory inefficiently can cause significant performance problems.
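
To give a sense of scale, the KV cache footprint per token can be estimated as 2 (keys and values) × layers × hidden size × bytes per element. The sketch below uses assumed, illustrative dimensions (40 layers, hidden size 5120, 16-bit values, roughly what a 13B-parameter decoder might use); these figures are not taken from the summary above.

```python
def kv_bytes_per_token(num_layers: int, hidden_size: int, bytes_per_elem: int = 2) -> int:
    """Bytes of KV cache one token occupies: a key and a value vector per layer."""
    return 2 * num_layers * hidden_size * bytes_per_elem


# Assumed, illustrative model dimensions (not from the summary).
per_token = kv_bytes_per_token(num_layers=40, hidden_size=5120)
print(per_token / 1024, "KiB per token")                 # 800.0 KiB per token
print(per_token * 2048 / 1024**3, "GiB for 2048 tokens")  # 1.5625 GiB for 2048 tokens
```

Under these assumptions a single long sequence can occupy more than a gigabyte, which is why wasted KV cache slots translate directly into fewer requests per batch.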

Why is traditional memory management for KV cache inefficient?

-Traditional memory management pre-allocates a contiguous block of memory for the entire maximum sequence length. This leads to significant internal and external fragmentation, wasting a large portion of memory, as the actual sequence length varies and cannot be predicted.
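
A small calculation makes the waste concrete. The request lengths and the 2048-token maximum below are hypothetical, chosen only for illustration. The sketch compares slots reserved under contiguous pre-allocation with slots reserved when memory is handed out in 16-token blocks; it models only reservation waste inside each request's region, not external fragmentation between regions.

```python
import math


def contiguous_slots(lengths, max_len):
    """Slots reserved when every request gets a max_len-sized contiguous region."""
    return len(lengths) * max_len


def paged_slots(lengths, block_size=16):
    """Slots reserved when each request gets just enough fixed-size blocks."""
    return sum(math.ceil(n / block_size) for n in lengths) * block_size


lengths = [100, 350, 1200, 40]  # hypothetical final sequence lengths, in tokens
MAX_LEN = 2048                  # hypothetical maximum sequence length
used = sum(lengths)

print(f"contiguous utilization: {used / contiguous_slots(lengths, MAX_LEN):.1%}")  # 20.6%
print(f"paged utilization:      {used / paged_slots(lengths):.1%}")                # 98.7%
```

With block-granular allocation, each request wastes at most one partially filled block, so the unused space is bounded by the block size rather than by the maximum sequence length.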

How does PagedAttention improve memory management for KV cache?

-PagedAttention applies memory paging and virtualization to the KV cache. It partitions the cache into fixed-size blocks and allocates those blocks dynamically as they are needed, reducing fragmentation and improving memory efficiency.

What is the key benefit of using PagedAttention over traditional methods?

-PagedAttention minimizes both internal and external fragmentation, leading to up to 96% utilization of KV cache, which represents a 2.5x to 5x improvement over traditional systems.

What is the impact of using smaller block sizes in PagedAttention?

-Smaller blocks improve memory efficiency by minimizing internal fragmentation, but they can also add overhead because data is transferred in more, smaller pieces. The block size the paper found to work best is 16 tokens.

What role does memory sharing play in PagedAttention?

-Memory sharing allows multiple parallel output samples to share the same KV cache memory, which reduces both compute and memory usage. This technique works well for scenarios like parallel sampling and beam search decoding.
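
One common way to implement this kind of sharing is reference counting with copy-on-write, which is assumed in the sketch below. The class and method names (`SharedBlockPool`, `fork`, `writable`) are hypothetical, and the pool tracks block ids only; a real system would also copy the KV data when a shared block is split.

```python
class SharedBlockPool:
    """Physical KV blocks with reference counts (hypothetical illustration)."""

    def __init__(self, num_blocks: int) -> None:
        self.free = list(range(num_blocks))
        self.ref_count = {}

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("no free physical KV blocks")
        block = self.free.pop()
        self.ref_count[block] = 1
        return block

    def fork(self, block_table: list) -> list:
        """Create a new sample that shares every block of an existing one."""
        for block in block_table:
            self.ref_count[block] += 1
        return list(block_table)

    def writable(self, block_table: list, logical_idx: int) -> int:
        """Return a block that is safe to write, copying it first if shared."""
        block = block_table[logical_idx]
        if self.ref_count[block] > 1:
            self.ref_count[block] -= 1
            new_block = self.allocate()
            # A real system would copy the KV data from `block` to `new_block` here.
            block_table[logical_idx] = new_block
            return new_block
        return block


pool = SharedBlockPool(num_blocks=8)
parent = [pool.allocate(), pool.allocate()]  # blocks holding the shared prompt
child = pool.fork(parent)                    # second sample shares both blocks
pool.writable(child, 1)                      # child diverges: last block is copied
print(parent, child)
```

The prompt's blocks are stored once no matter how many samples are generated from it, and a copy is made only for the block in which two samples start to diverge.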

How does PagedAttention handle the situation when KV cache runs out of memory?

-When the KV cache is exhausted, PagedAttention uses one of two strategies: swapping KV blocks to CPU memory or recomputing the KV cache from scratch. Recomputing is often faster than swapping, particularly with smaller block sizes.
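
The two recovery strategies can be sketched as a single request-level preemption step. The policy shown here (evict the most recently admitted request and free all of its blocks at once) is an assumption made for illustration, and `Request` and `preempt_latest` are hypothetical names; no real data is moved, only bookkeeping is updated.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Request:
    req_id: int
    gpu_blocks: list = field(default_factory=list)  # physical block ids on the GPU
    cpu_blocks: int = 0  # number of blocks parked in CPU memory after a swap


def preempt_latest(running: list, waiting: deque, free_blocks: list,
                   mode: str = "recompute") -> Request:
    """Evict the most recently admitted request and free all of its GPU blocks."""
    if mode not in ("recompute", "swap"):
        raise ValueError(f"unknown preemption mode: {mode}")
    victim = running.pop()
    if mode == "swap":
        # Swapping: the KV data would be copied to CPU memory and restored later.
        victim.cpu_blocks = len(victim.gpu_blocks)
    # Recompute: the KV data is simply dropped; when the request resumes, its
    # prompt and already generated tokens are run through the model again.
    free_blocks.extend(victim.gpu_blocks)
    victim.gpu_blocks = []
    waiting.appendleft(victim)  # resume the victim before newer arrivals
    return victim


running = [Request(0, [0, 1]), Request(1, [2, 3, 4])]
waiting, free_blocks = deque(), []
victim = preempt_latest(running, waiting, free_blocks, mode="recompute")
print(victim.req_id, free_blocks)
```

Freeing a whole request at once matches the observation that a partial KV cache does not help generate new tokens: every earlier token's keys and values are needed for the next decoding step.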

What is the significance of recomputation in PagedAttention?

-Recomputation allows for the regeneration of KV cache by recomputing the sequence of tokens, which can be done efficiently in parallel on GPUs, avoiding the need to retain partial KV cache that wouldn't help in generating new tokens.

How does PagedAttention compare to virtual memory in operating systems?

-Both use paging to reduce fragmentation and allow memory sharing. However, PagedAttention is designed specifically for LLM inference: it uses a simple block table and supports request-level preemption and recomputation, which sets it apart from the more complex paging systems in OS memory management.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

EfficientML.ai 2024 | Introduction to SVDQuant for 4-bit Diffusion Models

The KV Cache: Memory Usage in Transformers

Why LLMs get dumb (Context Windows Explained)

The Dark Matter of AI [Mechanistic Interpretability]

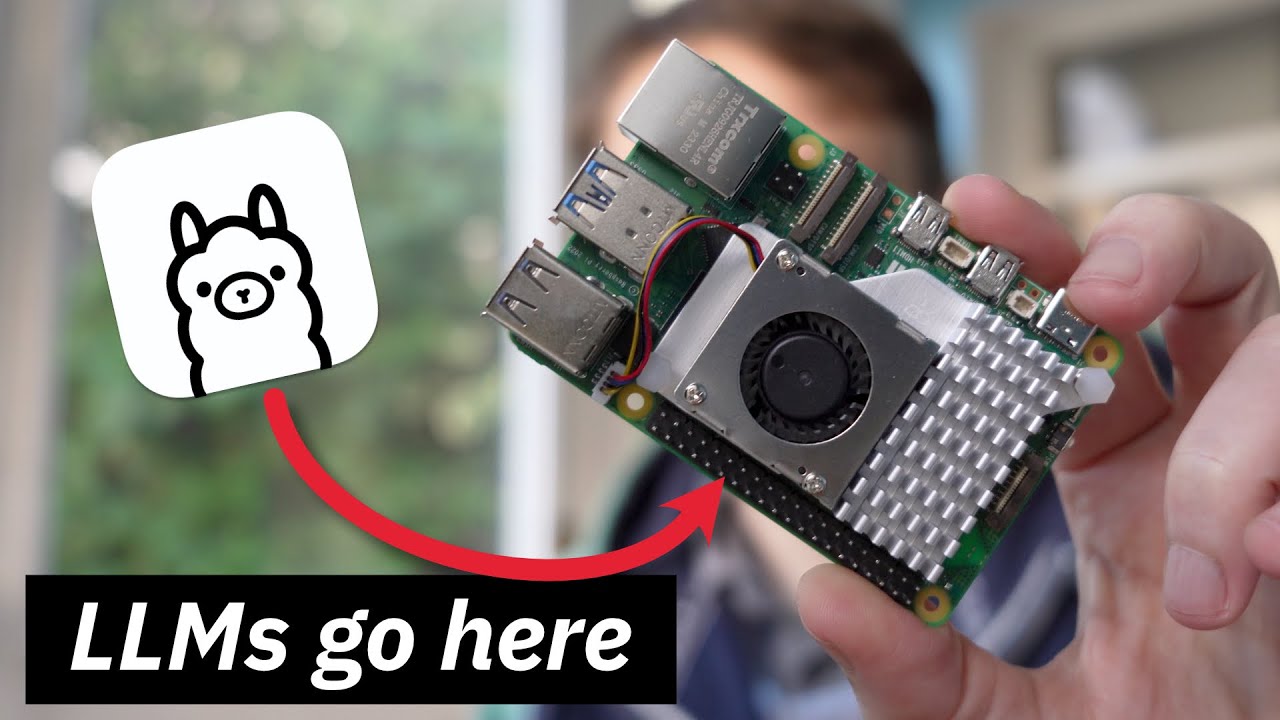

Using Ollama to Run Local LLMs on the Raspberry Pi 5

LLMs are not superintelligent | Yann LeCun and Lex Fridman

5.0 / 5 (0 votes)