How Sonrai Analytics leverages ML to accelerate Precision Medicine (L300) | AWS Events

Summary

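TLDR: In this presentation, Jonah Craig, a Startup Solutions Architect based in Ireland, describes how he supports Sonrai Analytics, a startup that uses AWS services to make cancer treatment more efficient. Sonrai Analytics handles large volumes of medical data and applies machine learning to shorten the time needed for cancer diagnosis and treatment. Although the team is small, it relies on AWS managed services to work with leading-edge technology and pursue innovation in healthcare. The presentation also explains how SageMaker, an AWS service, is used to train, deploy, and monitor machine learning models.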

Takeaways

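- 🌟 Sonrai Analytics uses AWS technology to shorten clinical trial times for cancer treatments and to help make healthcare systems more efficient.

- 👔 Jonah Craig is a Startup Solutions Architect in Ireland who supports startups of all sizes.

- 🛠️ By using AWS managed services, startup teams can make effective use of limited resources and focus on their technical challenges.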

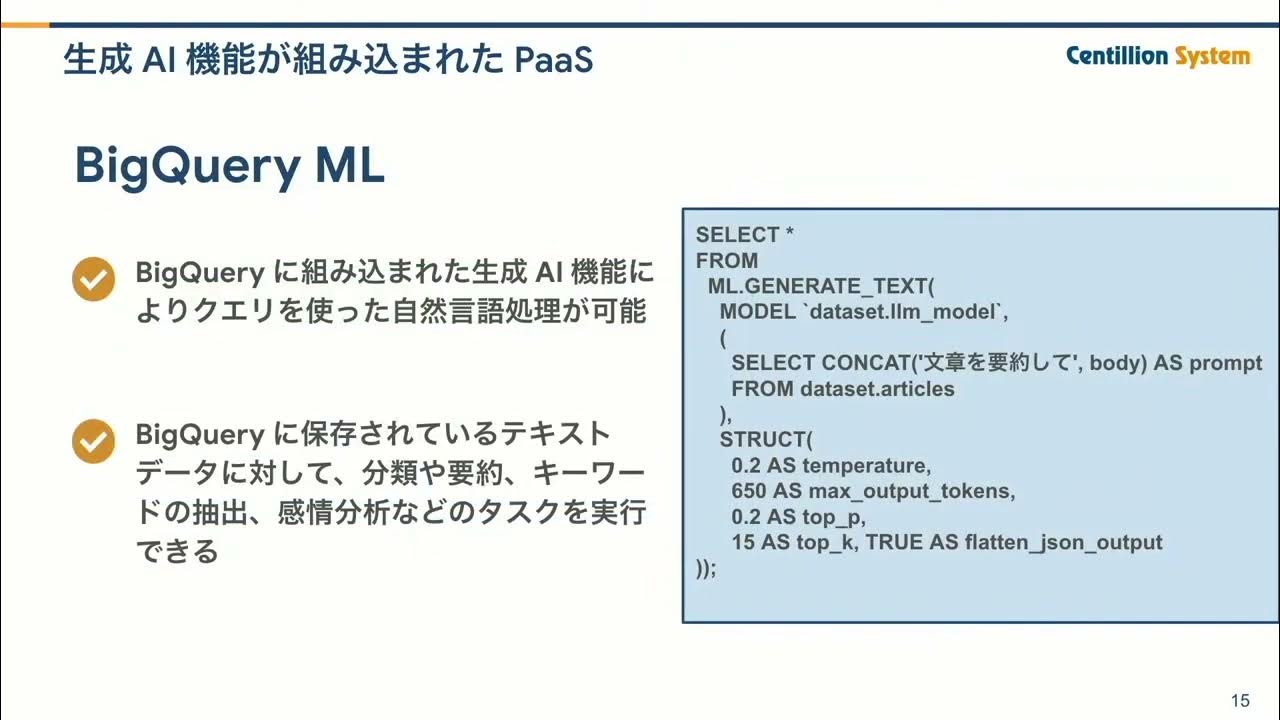

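- 🔧 The machine learning loop consists of four basic steps: data preparation, model training, model deployment, and monitoring and orchestration.

- 💾 Because Sonrai Analytics handles petabytes of data, it needs effective cost management and a scalable architecture.

- 🧬 Their clients are developing new drugs to treat cancer and use AI to identify the appropriate treatment.

- 🔬 In one computer vision use case, they are developing AI that detects cancer cells in microscope images.

- 🚀 They use the AWS service stack to streamline the process from training to inference and to optimize model performance.

- 🌐 Global deployment is possible: using AWS data centers, they provide segmented instances for each customer.

- 🛡️ Using AWS services built with data protection and privacy in mind helps them comply with regulations such as GDPR.

- 🔑 The AWS Activate program enabled them to move quickly on early business development and technical validation.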
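
The four-step machine learning loop listed in the takeaways (prepare, train, deploy, monitor) can be illustrated with a toy example. This is only a minimal sketch in plain Python, not Sonrai Analytics' pipeline and not the SageMaker API: the function names, the single-feature threshold model, and the `min_accuracy` cutoff are all assumptions made for illustration.

```python
def prepare(raw):
    """Step 1, data preparation: drop rows with a missing feature, split features and labels."""
    rows = [(x, y) for x, y in raw if x is not None]
    return [x for x, _ in rows], [y for _, y in rows]


def train(xs, ys):
    """Step 2, training: place a threshold midway between the two class means.

    Assumes label 1 tends to have larger feature values than label 0.
    """
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def deploy(threshold):
    """Step 3, deployment: expose the trained model as a callable predictor."""
    return lambda x: 1 if x > threshold else 0


def monitor(predict, xs, ys, min_accuracy=0.8):
    """Step 4, monitoring: measure accuracy on fresh data and flag when retraining is needed."""
    correct = sum(1 for x, y in zip(xs, ys) if predict(x) == y)
    accuracy = correct / len(xs)
    return accuracy, accuracy < min_accuracy


# Hypothetical data: (feature, label) pairs, one with a missing feature.
raw = [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1)]
xs, ys = prepare(raw)
predict = deploy(train(xs, ys))
accuracy, needs_retraining = monitor(predict, [2.0, 7.0, 4.0, 6.0], [0, 1, 1, 0])
```

When `needs_retraining` is true, the loop starts again from data preparation, which is the part that orchestration automates in a managed setup.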

Q & A

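What is the AWS Certification Challenge?

-The AWS Certification Challenge is a program for learning AWS services and features, in which participants can work toward various AWS certifications, including machine learning and solutions architecture.

What kind of company is Sonrai Analytics?

-Sonrai Analytics is a startup that aims to use AWS technology to shorten cancer drug trial times, contributing to greater efficiency in healthcare.

What is Jonah Craig's current role?

-Jonah Craig is a Startup Solutions Architect based in Ireland who supports startups such as Sonrai Analytics.

What kinds of data does Sonrai Analytics work with?

-Sonrai Analytics works with healthcare and life sciences data and needs to process petabytes of it.

Which AWS services does Sonrai Analytics use?

-Sonrai Analytics uses a wide range of AWS services, including SageMaker, HealthOmics, Athena, Glue, Lambda, Fargate, and ECS.

What is the key point of the cancer treatment algorithms Sonrai Analytics is working on?

-Sonrai Analytics works on cancer treatment through precision medicine, with an emphasis on using algorithms to match patients with the appropriate treatment.

What does AWS SageMaker Studio do?

-SageMaker Studio manages the workflow from data preparation through model deployment as a single process and provides an environment where machine learning engineers and data scientists can collaborate.

What is the AWS serverless compute that Sonrai Analytics uses?

-AWS serverless compute refers to compute services that run code without requiring you to manage servers, such as Lambda and Fargate.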
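
As a rough illustration of what "running code without managing servers" looks like, here is a minimal sketch of a Python Lambda handler. The summary does not say how Sonrai Analytics uses Lambda, so the trigger (an S3 upload notification) and the return shape are assumptions; only the `lambda_handler(event, context)` entry-point convention and the S3 event record layout come from AWS.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Entry point that Lambda invokes; collects the objects named in an S3 event."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        uploads.append({
            "bucket": s3["bucket"]["name"],
            # Object keys arrive URL-encoded in S3 event notifications.
            "key": unquote_plus(s3["object"]["key"]),
        })
    # Hypothetical return shape: a real handler would start processing here.
    return {"count": len(uploads), "uploads": uploads}


# Local invocation with a hand-written event (hypothetical bucket and key).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "slides/sample+1.tiff"}}}
    ]
}
result = lambda_handler(sample_event, None)
```

Because the handler is an ordinary function, it can be exercised locally as shown before being deployed.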

What data storage challenges does Sonrai Analytics face?

-Sonrai Analytics needs to manage petabytes of data efficiently and optimize costs, and it uses S3 lifecycle management to move data between hot and cold storage.
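
A lifecycle rule of the kind described in this answer might look like the following. This is a sketch under stated assumptions: the prefix, the day thresholds, the rule ID, and the choice of `STANDARD_IA` and `GLACIER` as the "cold" tiers are illustrative, not values from the presentation. The dictionary follows the structure that boto3's `put_bucket_lifecycle_configuration` accepts.

```python
def build_lifecycle_configuration(prefix, warm_after_days=30, cold_after_days=180):
    """Build an S3 lifecycle configuration that moves objects to colder storage over time."""
    # S3 does not transition objects to STANDARD_IA before they are 30 days old.
    if warm_after_days < 30:
        raise ValueError("warm_after_days must be at least 30")
    if cold_after_days <= warm_after_days:
        raise ValueError("cold_after_days must be greater than warm_after_days")
    return {
        "Rules": [
            {
                "ID": "tier-down-" + prefix.strip("/"),  # hypothetical naming scheme
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": warm_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": cold_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = build_lifecycle_configuration("raw-slides/")

# To apply it (requires boto3 and AWS credentials; the bucket name is hypothetical):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=config)
```

Keeping the rule as data makes it easy to review the thresholds before anything is applied to a bucket.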

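What is the purpose of the AI algorithms Sonrai Analytics is developing?

-Sonrai Analytics is developing AI algorithms to speed up cancer treatment and assist pathologists, with the aim of shortening the time to diagnose and treat patients.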
