Max Tegmark | On superhuman AI, future architectures, and the meaning of human existence

Summary

TLDR: The speaker reflects on their journey from pondering the cosmos to delving into artificial intelligence and neuroscience at MIT. They draw parallels between the unexpected advancements in AI and the history of flight, emphasizing the importance of humility in the face of rapid technological progress. The discussion explores the potential of AI to revolutionize education, the need for safety standards in AI development, and the future where AI could help solve humanity's most pressing issues. The speaker advocates for a future where humans remain in control, with AI as a tool for societal betterment, and stresses the importance of creating meaning in a universe that doesn't inherently provide it.

Takeaways

- 🌌 The speaker's lifelong fascination with the mysteries of the universe and the human mind led them to a career in AI and neuroscience research at MIT.

- 🤖 The rapid development of AI, particularly in language mastery, has surprised many experts, highlighting the importance of remaining humble and open to unexpected advancements.

- 🔄 The speaker compares the evolution of AI to the development of flight, suggesting that simpler engineered solutions can emerge before their complex biological counterparts are fully understood.

- 💡 AI research can benefit from a broader perspective that includes both the vastness of cosmology and the intricacies of the human brain.

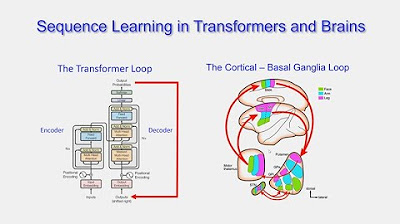

- 🔗 The speaker believes that future AI advancements will likely incorporate elements from the brain's structure, such as loops and recurrence, which are missing in current transformer models.

- 🧠 Human brains are efficient, using significantly less power than current AI data centers, indicating a need for more efficient AI models that can learn from fewer examples.

- 🔑 The speaker suggests that the key to creating superhuman AI lies in combining neural networks with symbolic reasoning, similar to human cognitive processes.

- 🌐 AI's ability to make analogies and generalize from one domain to another is a significant area of research, with implications for how models understand and translate knowledge.

- 🚀 The potential for AI to revolutionize education by personalizing learning and providing deep insights is an exciting prospect for the future.

- ⚠️ There is a call for safety standards and regulations in AI development to ensure that powerful technologies are used responsibly and ethically.

- 🛠️ The speaker emphasizes the need for AI systems to be more interpretable and trustworthy, suggesting that AI could help us understand itself through automated reasoning and symbolic regression.

Q & A

What were the two biggest mysteries that inspired the speaker's career?

- The speaker was inspired by the mysteries of the universe and the universe within the mind, which refers to intelligence and the workings of the human brain.

How has the speaker's research focus evolved over time?

- The speaker started with researching the outer universe and then shifted their focus to artificial intelligence and neuroscience, conducting research at MIT for the past eight years.

What comparison does the speaker make between the development of AI and the history of flight?

- The speaker compares the unexpected advancements in AI to the development of flight, noting that just as mechanical birds were not necessary to invent flying machines, understanding the human brain may not be necessary to create advanced AI.

What does the speaker think about the current state of AI and its potential future developments?

- The speaker believes that current AI technologies, like transformers, will be seen as primitive in the future, and that we will develop much better AI architectures that require less data and less electrical power.

How does the speaker view the relationship between the human brain and AI systems?

- The speaker suggests that while AI systems like transformers are powerful, the human brain operates differently, using loops and less data. They believe future AI will incorporate elements of how the brain works.

What role do analogies play in the development of AI according to the speaker?

- Analogies are crucial for both human reasoning and AI development. They allow AI models to derive higher abstractions and perform transfer learning between different domains.

How does the speaker perceive the future of AI in terms of its ability to supersede human knowledge?

- The speaker is confident that AI will not only match but surpass human knowledge, especially with its ability to draw analogies across different disciplines and integrate vast amounts of data.

What potential changes in AI architecture does the speaker foresee?

- The speaker anticipates a shift from the current focus on transformers to new AI architectures that may be more efficient and powerful, possibly incorporating symbolic reasoning and other elements of human cognition.

What is the speaker's perspective on the integration of AI with tools and its implications for the future?

- The speaker sees the integration of AI with tools as a way to enhance AI capabilities, allowing AI to perform tasks and make decisions that combine the strengths of both AI and traditional software systems.

How does the speaker view the role of AI in education and knowledge dissemination?

- The speaker believes AI can revolutionize education by providing personalized learning experiences, understanding students' knowledge gaps, and presenting information in engaging ways.

What is the speaker's stance on the importance of safety and ethical considerations in AI development?

- The speaker emphasizes the need for safety standards and ethical considerations in AI development, advocating for regulations similar to those in other industries to ensure the responsible advancement of AI.

Which historical event does the speaker compare to the current state of AI development?

- The speaker compares the current state of AI to the moment when the first nuclear reactor was built, suggesting that significant advancements and potential risks are on the horizon.

What does the speaker believe the best-case scenario for the future of AI looks like?

- In the best-case scenario, the speaker envisions a future where humans are still in control, major problems like famine and wars are solved, and AI is used responsibly for the betterment of society.
