Multimodel, Multimodal, and Multiagent Innovation with Azure AI | BRK104

Summary

TLDR: Marco Casalaina, VP of Products for Azure AI, presents a series of futuristic AI demos showcasing multimodal and multilingual capabilities and AI agents. He introduces Azure AI Studio, OpenAI's GPT models on Azure, and the new video translation service. The session highlights the use of AI for accessibility, content safety, and responsible AI practices. It also features Unity's integration of AI into game development, demonstrating Muse's ability to generate code and animations and emphasizing AI's transformative impact on creativity and efficiency.

Takeaways

- 🌟 Marco Casalaina, VP of Products for Azure AI, opened the session with a series of futuristic AI demonstrations highlighting recent advances in Azure AI's capabilities.

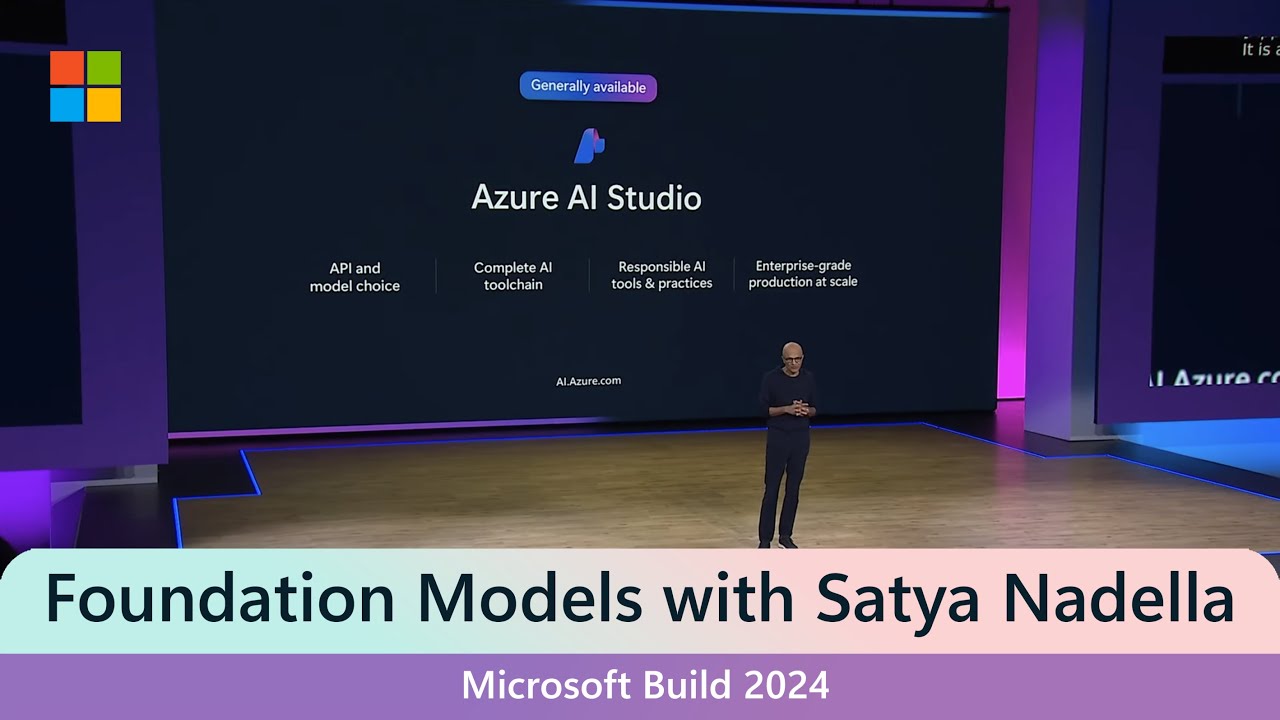

- 🔍 Azure AI Studio is now generally available, offering a single platform for exploring and using Azure AI services, including Azure Machine Learning and responsible AI tooling.

- 🌐 Azure AI partners closely with OpenAI, providing access to some of the world's best foundation models through Azure OpenAI Service; the session emphasized the general availability of GPT-4o.

- 🚀 GPT-4o is an "omni" model designed to handle speech, vision, and language in a single model. Speech support was not yet available at the time of the session, but GPT-4o can be combined with Azure Speech for full functionality.

- 🌐 The session showcased GPT-4o's vision capabilities, demonstrating how it understands and acts on visual input, for example explaining how to filter a Power BI report from a screenshot and reading menus aloud for accessibility.

- 🌐 A new video translation service was introduced, capable of translating videos into other languages while maintaining the original speaker's voice and intonation.

- 📚 Azure AI Studio's Model Catalog was highlighted, featuring more than 1,600 models, including the recently released Phi-3 models, which offer low latency and can run locally on edge devices.

- 🛡️ Responsible AI was a key theme, with discussions of the Responsible AI Transparency Report, content filtering, and Prompt Shields, which help block jailbreak attacks on AI systems.

- 🤖 AI agents were showcased, demonstrating their ability to perform complex tasks, such as analyzing spreadsheets and generating code, with examples including GitHub Copilot Workspace and Muse Chat.

- 🎮 Unity's integration with AI was demonstrated through Muse Chat, which generates code for Unity games, and Muse Texture, which creates texture maps for 3D models in Unity.

Q & A

Who is Marco Casalaina and what is his role at Azure AI?

-Marco Casalaina is the VP of Products for Azure AI. He is also Azure AI's designated AI futurist, responsible for showcasing and discussing futuristic AI capabilities.

What is the significance of Azure AI Studio's general availability mentioned in the presentation?

-Azure AI Studio's general availability signifies that it is now fully launched and accessible to users. It is a platform where users can utilize various AI services and capabilities, including the latest models and tools.

What is the relationship between Azure AI and OpenAI as discussed in the presentation?

-The presenter described Azure AI and OpenAI as "best friends", indicating a close partnership. Azure AI offers OpenAI's foundation models through Azure OpenAI Service, providing access to some of the world's best and most thoroughly tested models.

What does the "o" in GPT-4o stand for, and what are its capabilities?

-The "o" stands for "omni": GPT-4o is designed to handle speech, vision, and language within a single model. However, at the time of the presentation, speech support was not yet available in the model.

How does GPT-4o's vision capability help users filter data?

-GPT-4o can interpret screenshots, such as one of a Power BI report, and give instructions for filtering the data, for example narrowing it down to a single country such as Canada.
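The screenshot interaction described above corresponds to a chat request in which one user message carries both text and an image. The sketch below only builds that request body; it assumes the OpenAI-style chat message format (a `"text"` content part plus an `"image_url"` part holding a base64 data URL), and the function name `build_screenshot_question` and the system prompt are illustrative, not taken from the session.

```python
import base64
import json


def build_screenshot_question(image_bytes: bytes, question: str, mime: str = "image/png") -> list:
    """Return a chat 'messages' list that pairs a question with an inline screenshot."""
    # Encode the image as a base64 data URL so it can travel inside the JSON body.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    return [
        # Hypothetical system prompt; adjust to the application being explained.
        {"role": "system", "content": "You explain how to use the application shown in screenshots."},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        },
    ]


if __name__ == "__main__":
    placeholder = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real screenshot
    messages = build_screenshot_question(placeholder, "How do I filter this report to show only Canada?")
    print(json.dumps(messages, indent=2))
```

To send it, the `openai` Python package's `AzureOpenAI` client exposes `chat.completions.create(model=..., messages=...)`, where `model` is the name of your GPT-4o deployment; the endpoint, key, and API version are deployment-specific and left out here.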

What is the Seeing AI application and how does it utilize AI for accessibility?

-The Seeing AI application is an AI tool that can read menus and other visual content, providing information to users, especially those with visual impairments. It can suggest vegan options on a menu or answer specific questions about menu items.

What is the new video translator service introduced in the presentation?

-The new video translator service is an AI feature that can translate videos into other languages while maintaining the original speaker's voice and intonation. This service is useful for advertising, training videos, and other multilingual content needs.

What are the Phi-3 models, and how do they differ from larger foundation models?

-The Phi-3 models are a family of smaller AI models, including Phi-3 Mini, Phi-3 Medium, and Phi-3 Vision. They have lower latency and can run locally on edge devices, whereas larger foundation models require substantial computational power.

How does Azure AI Studio's evaluation capability help in assessing AI responses?

-Azure AI Studio's evaluation capability runs a set of questions in bulk, collects each answer together with the documents retrieved to produce it, and uses them to calculate metrics such as groundedness, relevance, and retrieval score. These metrics help determine how accurate and appropriate the AI's responses are.
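The bulk-evaluation loop described above can be sketched as: run each question through the application, keep the answer together with the documents retrieved for it, then average per-question metric scores. Azure AI Studio computes groundedness, relevance, and retrieval score with its own evaluators; the word-overlap functions here are deliberately simple, hypothetical stand-ins that only show where each metric draws its inputs from, and `answer_fn` is an assumed callable returning `(answer, documents)`.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, Iterable, List, Set, Tuple


@dataclass
class EvalRecord:
    question: str
    answer: str
    documents: List[str]  # documents retrieved while answering


def _tokens(text: str) -> Set[str]:
    """Lowercased words with surrounding punctuation removed."""
    words = (w.strip(".,!?;:").lower() for w in text.split())
    return {w for w in words if w}


def groundedness(rec: EvalRecord) -> float:
    """Stand-in: share of answer words that also appear in the retrieved documents."""
    answer_words = _tokens(rec.answer)
    context_words = set().union(*(_tokens(d) for d in rec.documents))
    return len(answer_words & context_words) / len(answer_words) if answer_words else 0.0


def relevance(rec: EvalRecord) -> float:
    """Stand-in: share of question words that also appear in the answer."""
    question_words = _tokens(rec.question)
    if not question_words:
        return 0.0
    return len(question_words & _tokens(rec.answer)) / len(question_words)


def retrieval_score(rec: EvalRecord) -> float:
    """Stand-in: fraction of retrieved documents sharing at least one word with the question."""
    if not rec.documents:
        return 0.0
    question_words = _tokens(rec.question)
    return sum(1 for d in rec.documents if question_words & _tokens(d)) / len(rec.documents)


METRICS: Dict[str, Callable[[EvalRecord], float]] = {
    "groundedness": groundedness,
    "relevance": relevance,
    "retrieval_score": retrieval_score,
}


def evaluate_bulk(
    questions: Iterable[str],
    answer_fn: Callable[[str], Tuple[str, List[str]]],
) -> Dict[str, float]:
    """Answer every question, keep its retrieved documents, and average each metric."""
    records = []
    for question in questions:
        answer, documents = answer_fn(question)
        records.append(EvalRecord(question, answer, documents))
    return {name: mean(metric(r) for r in records) for name, metric in METRICS.items()}
```

Keeping the retrieved documents alongside each answer is the key design choice: groundedness and retrieval score cannot be computed from the answer alone.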

What is the role of content safety and responsible AI in the development of AI applications?

-Content safety and responsible AI practices, such as those supported by Azure AI Content Safety, are crucial for filtering harmful content such as hate speech or violence, and for ensuring that AI applications behave ethically and responsibly.
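Content filtering of this kind typically reduces to comparing per-category severity scores against a policy. Azure AI Content Safety's text analysis reports a severity for the Hate, Sexual, Violence, and SelfHarm categories (0, 2, 4, or 6 on its default four-level scale). The sketch below assumes those scores have already been obtained from the service; the threshold of 4 (block medium and high severity) is an assumed example policy, and `apply_thresholds` is a hypothetical helper, not part of any SDK.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Harm categories reported by Azure AI Content Safety text analysis.
CATEGORIES = ("Hate", "Sexual", "Violence", "SelfHarm")

# Assumed example policy: block medium (4) and high (6) severity in every category.
DEFAULT_THRESHOLDS = {category: 4 for category in CATEGORIES}


@dataclass
class FilterDecision:
    allowed: bool
    violations: Dict[str, int]


def apply_thresholds(
    severities: Dict[str, int],
    thresholds: Optional[Dict[str, int]] = None,
) -> FilterDecision:
    """Block content if any category's severity meets or exceeds its threshold.

    Categories missing from `severities` are treated as severity 0 (safe);
    categories missing from `thresholds` fall back to DEFAULT_THRESHOLDS.
    """
    limits = thresholds or {}
    violations = {}
    for category in CATEGORIES:
        severity = severities.get(category, 0)
        if severity >= limits.get(category, DEFAULT_THRESHOLDS[category]):
            violations[category] = severity
    return FilterDecision(allowed=not violations, violations=violations)
```

The same check can be applied to both the user's prompt and the model's output; lowering a threshold blocks more borderline content at the cost of more false positives.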

How can AI agents assist in complex tasks like data analysis or coding?

-AI agents can autonomously execute complex processes, including writing and running code, analyzing data, and generating reports. They can handle long-running tasks with memory, allowing them to pick up where they left off and adjust to new inputs or corrections.
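The "pick up where they left off" behavior can be illustrated with a checkpointed step loop: the agent saves its memory after every step, skips completed steps when it restarts, and re-runs later steps after a user correction. This is a minimal sketch, not how any particular Azure agent service works; the three hard-coded steps stand in for actions a model would normally plan and invoke, and every name here is hypothetical.

```python
import json
from pathlib import Path
from typing import Callable, List, Optional, Tuple


# Hypothetical steps standing in for tools a model would normally choose.
def load_data(state: dict) -> None:
    state["rows"] = [3, 5, 8]


def analyze(state: dict) -> None:
    state["total"] = sum(state["rows"])


def report(state: dict) -> None:
    state["report"] = f"Total across {len(state['rows'])} rows: {state['total']}"


PLAN: List[Tuple[str, Callable[[dict], None]]] = [
    ("load_data", load_data),
    ("analyze", analyze),
    ("report", report),
]


def run_agent(memory_path: Path, stop_after: Optional[int] = None) -> dict:
    """Run unfinished steps in order, checkpointing memory after each one."""
    if memory_path.exists():
        state = json.loads(memory_path.read_text())
    else:
        state = {"done": []}
    steps_run = 0
    for name, step in PLAN:
        if name in state["done"]:
            continue  # completed in an earlier run
        if stop_after is not None and steps_run >= stop_after:
            break  # simulate the long-running task being interrupted
        step(state)
        state["done"].append(name)
        memory_path.write_text(json.dumps(state))  # checkpoint after every step
        steps_run += 1
    return state


def correct(memory_path: Path, key: str, value, redo_from: str) -> None:
    """Record a user correction and mark `redo_from` and every later step as not done."""
    state = json.loads(memory_path.read_text())
    state[key] = value
    names = [name for name, _ in PLAN]
    earlier = set(names[: names.index(redo_from)])
    state["done"] = [name for name in state["done"] if name in earlier]
    memory_path.write_text(json.dumps(state))
```

For example, `run_agent(path, stop_after=1)` stops after loading the data, a second `run_agent(path)` resumes at `analyze`, and `correct(path, "rows", [1, 2], redo_from="analyze")` followed by another run recomputes the total and report from the corrected rows.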

What is Muse Chat and how does it integrate with Unity?

-Muse Chat is an AI-driven chat interface that provides answers to questions about Unity Engine and its product ecosystem. It is integrated with Unity, allowing users to get real-time assistance and generate code snippets directly within the Unity editor.

What are the benefits of using Muse in Unity for animation?

-Muse in Unity allows for quick generation of animations based on textual prompts, reducing the time and effort required for traditional animation techniques. It also enables users to make editable changes to the generated animations for fine-tuning.

How does the generative AI hackathon contribute to the development of new AI applications?

-The generative AI hackathon encourages innovation by giving developers a platform to create and showcase new AI applications. The event led to applications such as Chat Edu, a student tutoring app that won first place and demonstrated the potential of AI in education.
