Intro to Databricks Lakehouse Platform

Summary

TLDR: Databricks, founded by the creators of Apache Spark, Delta Lake, and MLflow, introduces the Lakehouse platform, a cloud-based solution that merges the strengths of data warehouses and data lakes. It offers an open, unified platform for data and AI, simplifying data management across all data types. The platform's architecture supports various data and AI workloads, provides fine-grained governance through Unity Catalog, and ensures cloud-agnostic data governance. With its simplicity, openness, multi-cloud support, and flexibility, the Lakehouse platform empowers teams to innovate and collaborate effectively.

Takeaways

- 🌟 Databricks is the creator of the Lakehouse platform, which is an open and unified platform for data and AI.

- 🔍 Databricks was founded by the original creators of Apache Spark, Delta Lake, and MLflow.

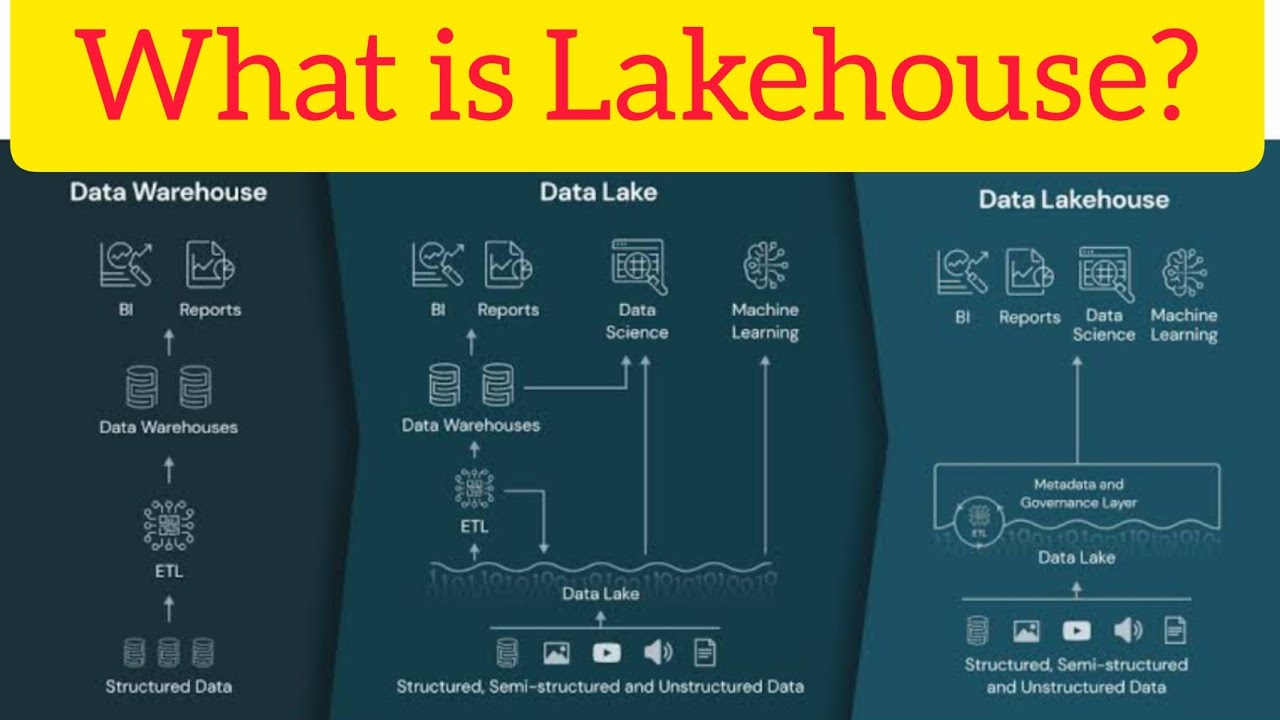

- 🏛️ The Lakehouse architecture is a new generation of open platforms that unify data warehousing and advanced analytics.

- 📚 Databricks coined the term 'Lakehouse' in a research paper co-authored with UC Berkeley and Stanford University in 2021.

- 🌐 The Lakehouse paradigm is cloud-agnostic, allowing data to be governed wherever it is stored.

- 🛠️ Databricks Lakehouse Platform includes Delta Lake for data reliability and performance, Unity Catalog for fine-grained governance, and support for persona-based use cases.

- 💡 It provides instant and serverless compute, with Databricks managing the compute layer for the customer.

- 🔗 The platform combines the reliability of data warehouses with the openness and flexibility of data lakes, including support for machine learning.

- 🚀 The unified approach eliminates data silos, complicated structures, and fractured governance and security, simplifying data analytics and AI initiatives.

- 🌈 Databricks Lakehouse Platform is open, allowing unrestricted sharing of data and easy integration with open source projects and Databricks partners.

- ☁️ The platform supports multi-cloud environments, providing a consistent experience across whichever cloud platforms are in use.

Q & A

What is the Databricks Lakehouse platform?

- The Databricks Lakehouse platform is a cloud-based solution that combines the best of data warehouses and data lakes to offer an open and unified platform for data and AI.

Who founded Databricks and what are their key contributions?

- Databricks was founded in 2013 by the original creators of Apache Spark, Delta Lake, and MLflow. The company is known for pioneering the data lakehouse architecture and for coining the term in a research paper co-authored with UC Berkeley and Stanford University.

What is the purpose of the Lakehouse paradigm?

- The Lakehouse paradigm is designed as an ideal data and AI platform: an open, cloud-agnostic platform that manages all data types under a single security and governance model.

How does the Databricks Lakehouse platform address the challenges of previous data environments?

- The platform eliminates data silos, complicated structures, and fractured governance and security by providing a unified approach that combines the reliability and performance of data warehouses with the openness and flexibility of data lakes.

What architectural features does the Databricks Lakehouse platform offer?

- The platform uses Delta Lake as the data lake foundation for reliability and performance, provides fine-grained governance for data and AI with Unity Catalog, and supports persona-based use cases for all data team members.

What is the significance of the term 'unified platform' in the context of the Databricks Lakehouse platform?

- A unified platform signifies that the Databricks Lakehouse platform consolidates various data and AI use cases into a single, cohesive environment, simplifying operations and enhancing collaboration among data teams.

How does the Databricks Lakehouse platform support data practitioners?

- It supports data practitioners by providing a simple, open, and multi-cloud platform that allows them to easily collaborate, access all the data they need, and innovate without the constraints of traditional data environments.

What is the role of Unity Catalog in the Databricks Lakehouse platform?

- Unity Catalog provides fine-grained governance for data and AI within the Databricks Lakehouse platform, helping to manage data access and policies across the organization.
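
One way to picture fine-grained governance is as a permission check against a hierarchy of named objects. The following is a minimal Python sketch of that idea, not Unity Catalog's actual API: the `ToyCatalog` class, the group names, and the table names are all hypothetical, and it assumes dotted names such as `catalog.schema.table` where a grant on a parent also applies to its children.

```python
from __future__ import annotations

from dataclasses import dataclass, field


# Toy model only: hypothetical class, NOT the Unity Catalog API.
@dataclass
class ToyCatalog:
    # Maps a securable name ("sales", "sales.eu", "sales.eu.orders")
    # to the set of (principal, privilege) pairs granted on it.
    grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)

    def grant(self, principal: str, privilege: str, securable: str) -> None:
        """Record that `principal` holds `privilege` on `securable`."""
        self.grants.setdefault(securable, set()).add((principal, privilege))

    def is_allowed(self, principal: str, privilege: str, table: str) -> bool:
        """Check the table and each of its parents for a matching grant."""
        parts = table.split(".")
        # "sales.eu.orders" -> ["sales", "sales.eu", "sales.eu.orders"]
        scopes = [".".join(parts[:i]) for i in range(1, len(parts) + 1)]
        return any(
            (principal, privilege) in self.grants.get(scope, set())
            for scope in scopes
        )


catalog = ToyCatalog()
catalog.grant("analysts", "SELECT", "sales.eu")            # schema-level grant
catalog.grant("engineers", "MODIFY", "sales.eu.orders")    # table-level grant

print(catalog.is_allowed("analysts", "SELECT", "sales.eu.orders"))
print(catalog.is_allowed("analysts", "MODIFY", "sales.eu.orders"))
print(catalog.is_allowed("analysts", "SELECT", "sales.us.orders"))
```

In this sketch the analysts' schema-level grant covers the `orders` table beneath it, while the other two checks fail because no matching grant exists on the table or any of its parents. A real deployment would express such rules through the platform's own governance tooling rather than application code.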

What type of workloads does the Databricks Lakehouse platform support?

- The platform supports a range of workloads for data teams, including data warehousing, data engineering, data streaming, data science, and machine learning.

How does the Databricks Lakehouse platform approach cloud-agnosticism?

- The platform is designed to work on whichever cloud platform the organization already uses, providing consistent management, security, and governance across different cloud environments.

What is the significance of the Databricks partner network in the context of the Lakehouse platform?

- The Databricks partner network offers flexibility to use existing infrastructure, share data, and build a modern data stack with unrestricted access to open source data projects and a broad range of partners.
