The Hidden Autopilot Data That Reveals Why Teslas Crash | WSJ

Summary

TLDR: The video examines the dangers and flaws of Tesla's Autopilot technology in light of several fatal crashes. It highlights the case of Steven Hendrickson, who died when his car struck an overturned truck while he was relying on Autopilot. Despite Tesla's safety claims, experts warn that the camera-based system is prone to failure, especially in complex scenarios. With hundreds of crashes reported, the lack of transparency and limited backup sensors raise concerns about public trust. The investigation and ongoing lawsuits point to deeper issues with the technology's design and its allegedly misleading marketing.

Takeaways

- Tesla's Autopilot technology has been involved in more than a thousand crashes since 2021, and many details have been hidden from the public.

- Steven Hendrickson, a 35-year-old father, died in a crash in Fontana, California, while his Tesla Model 3 was operating on Autopilot.

- Tesla CEO Elon Musk maintains that Autopilot can reduce accidents by a factor of 10, but experts have raised concerns about the system's safety.

- Missy Cummings, an expert and critic of Tesla, warned in 2016 that semi-autonomous driving technology would inevitably lead to fatalities.

- Tesla's Autopilot relies heavily on cameras, which can struggle to recognize certain obstacles; other systems add lidar and radar for more reliable detection.

- Experts identify camera calibration as a major issue: it can lead to inconsistent and inaccurate object detection across the vehicle's different cameras.

- Data from Tesla's Autopilot crashes is often withheld from the public and from victims' families, a significant lack of transparency.

- Tesla instructs drivers to remain alert and ready to take control at all times, but the system can still create a false sense of security, as Hendrickson's case illustrates.

- Despite multiple investigations, the National Highway Traffic Safety Administration (NHTSA) has released limited information about Autopilot-related crashes.

- Tesla's marketing of Autopilot is under scrutiny, with the Department of Justice investigating whether the company misled the public about the car's capabilities.

Q & A

What happened on the morning of May 5th, 2021, involving Steven Hendrickson and his Tesla Model 3?

-Steven Hendrickson was driving his Tesla Model 3 in Autopilot mode when his car crashed into an overturned semi-truck in Fontana, California, at around 2:30 AM. Hendrickson was killed in the accident.

How many crashes involving Tesla's Autopilot system have been reported to federal regulators since 2021?

-Tesla has reported more than a thousand crashes involving Autopilot to federal regulators since 2021.

What are some of the key risks associated with Tesla’s Autopilot system as discussed in the script?

-Because Tesla's Autopilot relies primarily on cameras, it is vulnerable when it encounters unusual obstacles or situations it hasn't been trained on. The chief risk is a failure to recognize obstacles such as overturned trucks or stopped vehicles, which can lead to crashes.

Why is Tesla's reliance on cameras for Autopilot a concern, according to experts?

-Experts are concerned because Tesla's reliance on cameras, without lidar or other advanced sensors, creates gaps in the system's ability to detect and understand road hazards accurately, especially when encountering unfamiliar scenarios.

How does Tesla defend the safety of its Autopilot system despite concerns?

-Tesla, particularly CEO Elon Musk, defends the Autopilot system by claiming it can reduce accidents significantly in the long term and that it is safer than human driving, offering the potential to save millions of lives.

What is the position of safety expert Missy Cummings on semi-autonomous driving technologies?

-Missy Cummings, a leading expert on autonomous driving, has been critical of Tesla's technology. She warned in 2016 that someone would eventually die due to flaws in semi-autonomous vehicles, highlighting the inherent risks in such technologies.

What role does the National Highway Traffic Safety Administration (NHTSA) play in investigating Tesla's Autopilot system?

-The NHTSA has launched multiple investigations into Tesla's Autopilot system since 2021, although it has released limited information about the findings, and Teslas continue to operate on the roads.

What is Tesla’s response regarding driver responsibility while using Autopilot?

-Tesla states that drivers must remain alert and be ready to take control at any time while using Autopilot. The company says Steven Hendrickson was warned 19 times to keep his hands on the wheel before the crash, and that the car began braking before impact.

How does the data and video from Tesla crashes help in understanding the system’s failures?

-Data and video from Tesla crashes can reveal how the system behaves in certain situations, highlighting its weaknesses. In Steven Hendrickson’s case, experts analyzed partial data and video to understand how Autopilot failed to recognize the overturned truck and prevent the crash.

What challenges do experts face when trying to analyze the data from Tesla crashes?

-Experts face challenges because Tesla does not easily release full crash data or video footage. Access to such data is often restricted, with Tesla claiming that much of it is proprietary, leaving crash analysis to rely on third-party sources or data from anonymous hackers.

What is the ethical dilemma posed by Tesla’s handling of Autopilot data and safety concerns?

-The ethical dilemma centers on Tesla's failure to release full crash data, which prevents proper public understanding and regulatory oversight. In addition, Tesla's marketing and drivers' reliance on Autopilot raise concerns about misplaced trust in the technology, which could lead to preventable accidents.

What might be the long-term implications of Tesla's approach to autonomous driving on the industry?

-Tesla's approach, especially its reliance on camera-based systems and limited transparency, may set a troubling precedent for autonomous driving technology. It could result in other companies following suit, with potentially unsafe systems being put on public roads before they are fully refined and tested.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

We Investigated Tesla’s Autopilot. It’s Scarier Than You Think

How Google Maps Sent 2 Teens Into a Death Trap, Only 1 Survived

ГОНКИ АВТОПИЛОТОВ ВОЗВРАЩАЮТСЯ! ПОУМНЕЛ ЛИ ИИ В ГТА 5 РП? (ECLIPSE GTA 5 RP)

Something SERIOUSLY Strange Just Happened / This Changes Everything

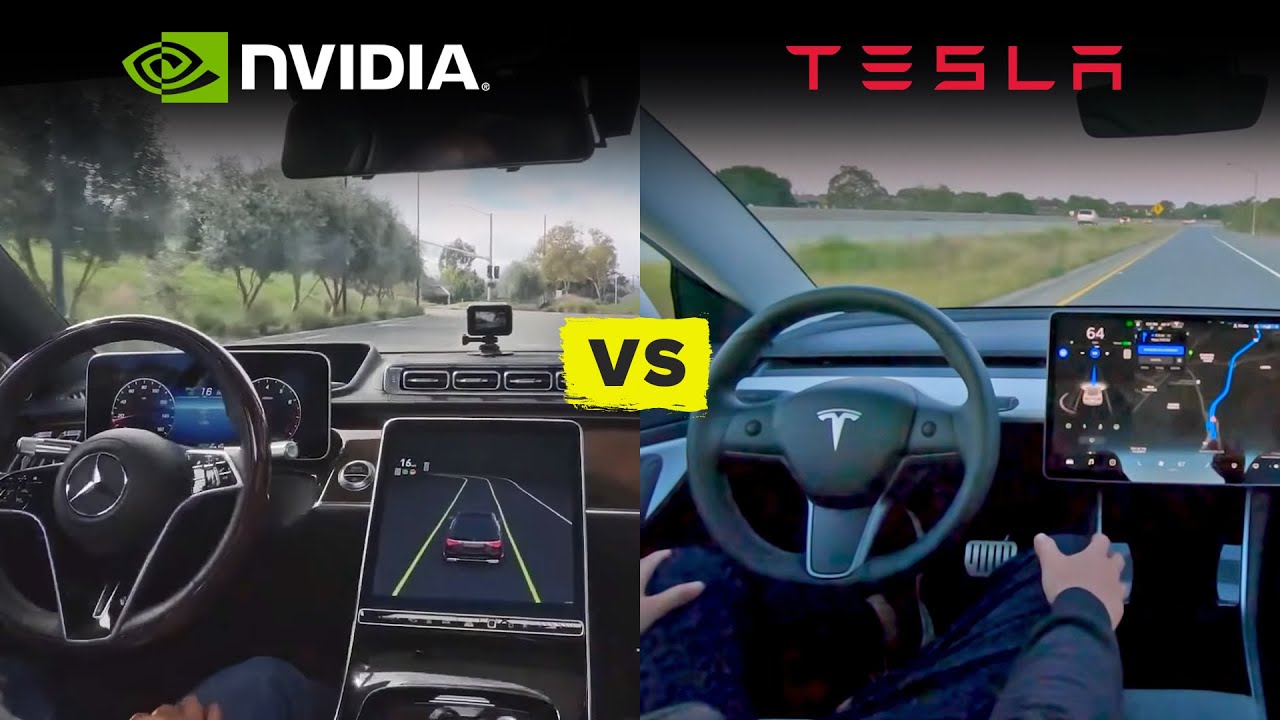

Nvidia Drive vs Tesla Full Self Driving (Watch the reveals)

Putting the Brakes on Teenage Driving

5.0 / 5 (0 votes)