Cardiotocography Classification - Data Mining Final Exam (UAS), UGM (Septian Eko Prasetyo)

Summary

TL;DR: This research presentation compares classification models for cardiotocography (CTG) data used to assess fetal health. The study examines several models, including Naive Bayes, Random Forest, and Logistic Regression, with and without feature selection. Three feature selection methods (CV Subset, Information Gain, and Chi-Square) were applied to see whether they improve classification accuracy. Random Forest with Information Gain feature selection achieved the highest accuracy, 93.74%. The research shows that combining a classifier with a suitable feature selection technique can improve classification performance on medical data.

Takeaways

- Cardiotocography (CTG) is used to monitor fetal health during pregnancy by tracking the fetal heart rate, uterine contractions, and fetal movements.

- CTG results help categorize fetal health into three classes: Normal, Suspect, and Pathological.

- The study compares multiple classification models including Naive Bayes, C4.5, Random Forest, Logistic Regression, KNN, and Multilayer Perceptron (MLP).

- Data preprocessing involved transforming numerical data into nominal categories and normalizing the data for better model performance.

- The dataset used contains 2126 records with 22 attributes and three classes: Normal, Suspect, and Pathological.

- Three feature selection methods were tested: CV Subset Evaluation (Best First), Information Gain, and Chi-Square.

- The best classification model without feature selection was Random Forest, achieving an accuracy of 93.4%.

- The Information Gain feature selection method improved Random Forest’s accuracy to 93.74%, showing its effectiveness in enhancing model performance.

- The use of feature selection generally improved accuracy for most models, with Random Forest being the most effective across all methods.

- Naive Bayes showed the lowest improvement in accuracy when feature selection was applied compared to other models.

- The research concludes that combining Random Forest with Information Gain feature selection yields the highest classification accuracy (93.74%) for CTG data.

Q & A

What is Cardiotocography (CTG) and what does it measure?

-Cardiotocography (CTG) is a method for monitoring the health of a fetus during pregnancy by recording the fetal heart rate, fetal movements, and uterine contractions. It helps assess whether the fetus is healthy or has a health problem.

What are the three classes used in CTG to categorize fetal health?

-CTG categorizes fetal health into three classes: 'Normal' (healthy fetus), 'Suspect' (suspected health issues), and 'Pathological' (fetal health problems detected).

What was the goal of the research presented in the transcript?

-The research aimed to compare various classification models for CTG data and evaluate the impact of feature selection techniques (CV Subset, Information Gain, and Chi-Square) on the accuracy of these models.

What preprocessing steps were performed on the data before applying classification models?

-The data underwent two main preprocessing steps: transformation (converting numerical data to nominal data) and normalization (scaling data between 0 and 1) to reduce variance and improve algorithm performance.
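The two preprocessing steps can be sketched in plain Python. This is a minimal illustration, not the study's actual procedure: the function names are hypothetical, the sample values are made up, and the 1/2/3 class encoding is an assumption borrowed from the public UCI CTG dataset rather than something stated in the presentation.

```python
from typing import Dict, List

# Assumed label mapping (1/2/3 class codes as in the public UCI CTG dataset);
# the presentation does not state the exact encoding it used.
NSP_LABELS: Dict[int, str] = {1: "Normal", 2: "Suspect", 3: "Pathological"}


def numeric_to_nominal(codes: List[int]) -> List[str]:
    """Replace numeric class codes with nominal (categorical) labels."""
    return [NSP_LABELS[c] for c in codes]


def min_max_normalize(column: List[float]) -> List[float]:
    """Rescale one feature column linearly onto the range [0, 1]."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant column has no spread to rescale; map it to 0.0.
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


# Hypothetical baseline fetal heart rate values, in beats per minute.
baseline_fhr = [120.0, 132.0, 146.0, 160.0]
print(min_max_normalize(baseline_fhr))
print(numeric_to_nominal([1, 3, 2]))
```

Each feature column is normalized independently, so the smallest value in a column maps to 0 and the largest to 1 regardless of the original unit.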

What feature selection methods were used in this research?

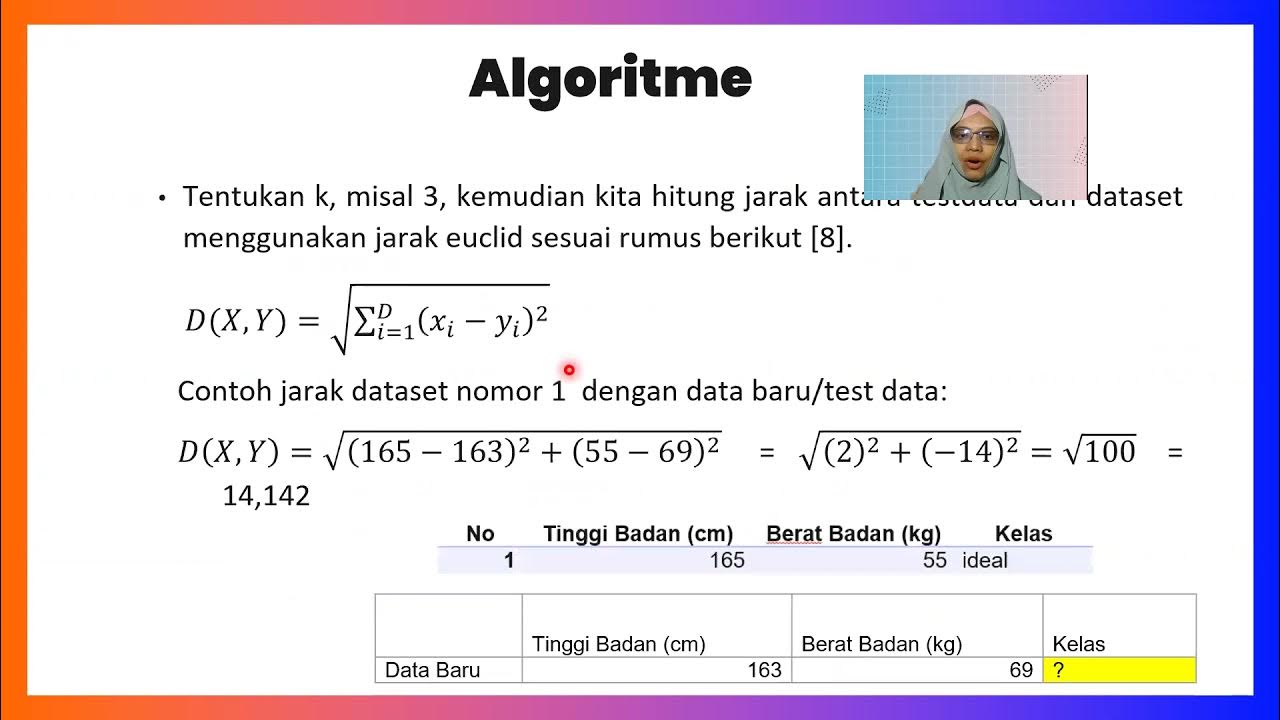

-The research used three feature selection methods: CV Subset (Best-First), Information Gain, and Chi-Square, each with different criteria for selecting the most relevant features from the dataset.
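To illustrate the Chi-Square criterion, the sketch below computes the Pearson chi-square statistic between one nominal feature and the class label; features with a higher score depend more strongly on the class. The function name and toy data are hypothetical, and the study's tooling may compute or rank the scores differently.

```python
from collections import Counter
from typing import Hashable, List


def chi_square_score(feature: List[Hashable], labels: List[Hashable]) -> float:
    """Pearson chi-square statistic between a nominal feature and the class."""
    n = len(feature)
    feature_counts = Counter(feature)
    label_counts = Counter(labels)
    observed = Counter(zip(feature, labels))
    score = 0.0
    for f_val, f_count in feature_counts.items():
        for l_val, l_count in label_counts.items():
            # Expected cell count if feature and class were independent.
            expected = f_count * l_count / n
            score += (observed[(f_val, l_val)] - expected) ** 2 / expected
    return score


# Toy data (made up): one feature that separates the classes, one that does not.
labels = ["Normal", "Normal", "Pathological", "Pathological"]
informative = ["low", "low", "high", "high"]
uninformative = ["low", "high", "low", "high"]
print(chi_square_score(informative, labels))    # 4.0
print(chi_square_score(uninformative, labels))  # 0.0
```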

How does Information Gain feature selection work?

-Information Gain selects features based on their ability to reduce uncertainty (entropy) in the data, ranking attributes by how much they contribute to distinguishing between the different classes in the dataset.
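The entropy reduction described above can be sketched as follows. This is a minimal version that assumes nominal features; the function names and toy data are hypothetical, and numeric CTG attributes would first have to be discretized before this calculation applies.

```python
import math
from collections import Counter, defaultdict
from typing import Hashable, List


def entropy(labels: List[Hashable]) -> float:
    """Shannon entropy of a list of class labels, in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature: List[Hashable], labels: List[Hashable]) -> float:
    """Entropy of the class minus the weighted entropy after splitting on the feature."""
    groups = defaultdict(list)
    for f_val, label in zip(feature, labels):
        groups[f_val].append(label)
    n = len(labels)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Toy data (made up): rank two features by how much class uncertainty they remove.
labels = ["Normal", "Normal", "Pathological", "Pathological"]
features = {
    "informative": ["low", "low", "high", "high"],
    "uninformative": ["low", "high", "low", "high"],
}
ranking = sorted(features, key=lambda name: information_gain(features[name], labels), reverse=True)
print(ranking)  # ['informative', 'uninformative']
```

A feature selection step would then keep the top-ranked attributes and drop the rest before training the classifier.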

What classification models were tested in this research?

-The following classification models were tested: Naive Bayes, C4.5 (J48), Random Forest, Logistic Regression, K-Nearest Neighbor (KNN), and Multilayer Perceptron (MLP).

How was the performance of the models evaluated?

-All models were evaluated with 10-fold cross-validation: the data is split into 10 parts, and in each of 10 rounds 9 parts are used for training and the remaining part for testing, so every record is used for testing exactly once.
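The splitting scheme can be sketched as a small index generator. This is a plain, unstratified version written for illustration; the function name and the seed are hypothetical, and the tool used in the study may shuffle or stratify the folds differently.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# With the 2126 CTG records, each round tests on about one tenth of the data.
fold_sizes = [len(test) for _, test in k_fold_indices(2126)]
print(fold_sizes)
```

A model would be trained on `train` and scored on `test` in each round, and the ten scores combined into the reported accuracy.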

What were the results of the classification models without feature selection?

-Without feature selection, Random Forest achieved the highest accuracy at 93.4%, while Naive Bayes had the lowest accuracy at 79.68%.

What impact did feature selection have on the classification results?

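-Feature selection improved accuracy for most models, though the gains were small and not uniform. For Random Forest, the CV Subset, Information Gain, and Chi-Square methods gave accuracies of 93.3%, 93.74%, and 93.46%, respectively, against 93.4% without feature selection, so Information Gain and Chi-Square improved on the baseline while CV Subset did not. Naive Bayes also saw improved accuracy with feature selection.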
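The Random Forest figures can be compared against the no-selection baseline with a few lines of arithmetic. The numbers are the ones quoted in this summary; the helper name is hypothetical. The baseline is quoted to only one decimal place (93.4%), so the differences are approximate, which is why the Information Gain difference computed here does not exactly match the 0.30 quoted in the conclusion.

```python
from typing import Dict

# Random Forest accuracies (%) as quoted in this summary.
BASELINE = 93.4
WITH_SELECTION: Dict[str, float] = {
    "CV Subset": 93.3,
    "Information Gain": 93.74,
    "Chi-Square": 93.46,
}


def accuracy_change(baseline: float, results: Dict[str, float]) -> Dict[str, float]:
    """Difference from the baseline, in percentage points, rounded to 2 decimals."""
    return {method: round(acc - baseline, 2) for method, acc in results.items()}


changes = accuracy_change(BASELINE, WITH_SELECTION)
for method, delta in changes.items():
    print(f"{method}: {delta:+.2f} percentage points")
```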

What was the conclusion of the research regarding the best model and feature selection method?

-The research concluded that combining Random Forest with Information Gain feature selection gave the highest accuracy, 93.74%. This is an improvement of 0.30 percentage points over Random Forest without feature selection.

Why is normalization of data important in this study?

-Normalization rescales every feature to the range 0 to 1, so that features measured on large numeric scales do not dominate those measured on small scales. Putting all features on a common scale lets them contribute comparably to the model, which matters most for scale-sensitive algorithms such as KNN and MLP.
