L-5.20: Translation Lookaside Buffer(TLB) in Operating System in Hindi

Summary

TLDR: This video explains the Translation Lookaside Buffer (TLB), a critical component used to speed up memory access in computer systems. The speaker first outlines paging, where a process is divided into pages that are mapped to frames in main memory through a page table. The TLB acts as a fast cache for page table entries, reducing memory access time. Key concepts such as TLB hits and misses, effective memory access time, and the importance of a high hit ratio are discussed, with a focus on competitive exams and university-level studies.

Takeaways

- 😀 TLB (Translation Lookaside Buffer) is a cache memory that stores recent page table entries to speed up memory access.

- 😀 The page table maps logical addresses (page numbers) to physical addresses (frame numbers) in memory.

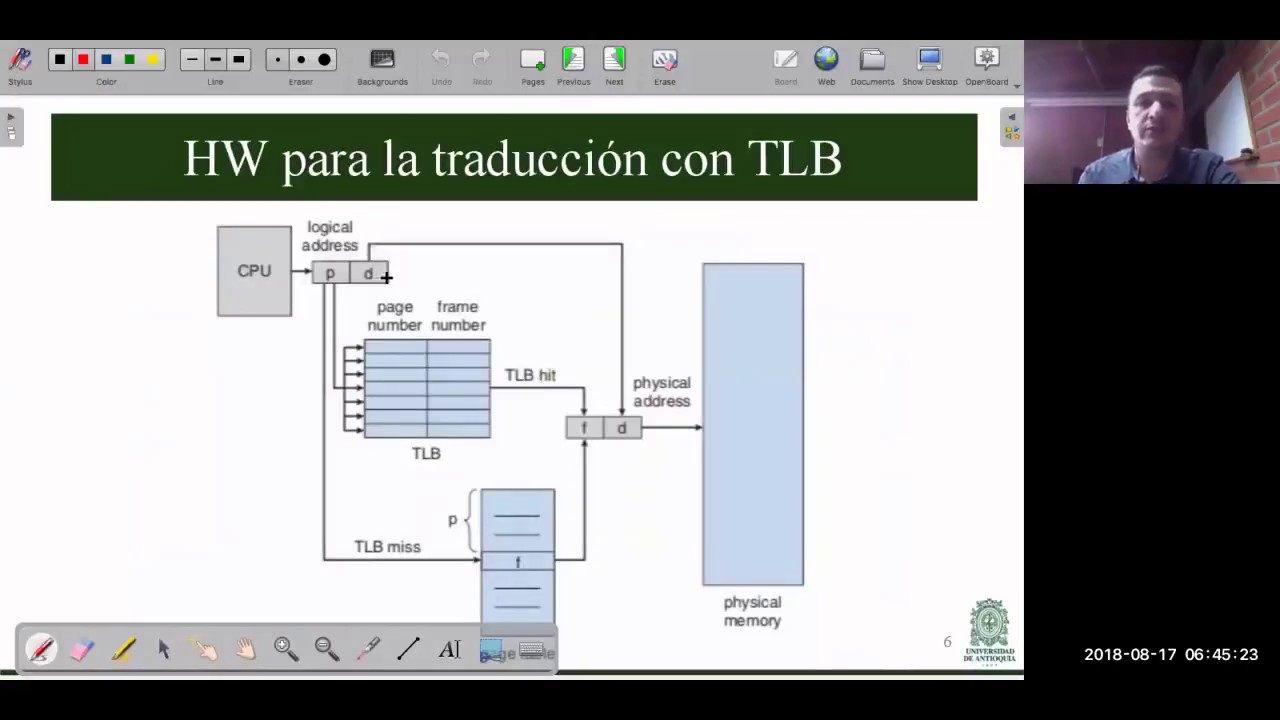

- 😀 In the paging process, the CPU generates a logical address consisting of a page number and offset, which is mapped to a frame number in memory.

- 😀 Without TLB, the CPU must access both the page table and the main memory, resulting in slower performance (2X time).

- 😀 The TLB speeds up memory access by storing the page table entries directly in cache, reducing the need to access the page table in memory.

- 😀 TLB hits occur when the CPU finds the required frame number in the TLB, reducing the overall memory access time.

- 😀 TLB misses occur when the required page entry is not in the TLB, forcing the CPU to access the page table in memory before accessing the page in RAM.

- 😀 The effective memory access time is the total time to access memory, which depends on whether the TLB hits or misses.

- 😀 High TLB hit ratios improve system performance by reducing the number of memory accesses needed for each operation.

- 😀 TLB miss ratios that are too high reduce the effectiveness of TLB, leading to slower memory access times and higher system latency.

- 😀 TLB is essential for optimizing memory access in systems with large amounts of memory and multiple levels of paging, commonly tested in exams and real-world applications.
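
The effective memory access time described above is usually written as a weighted average of the hit and miss cases. As a sketch for single-level paging, using symbols that are assumptions for illustration and not taken from the video ($h$ is the TLB hit ratio, $t_{TLB}$ the TLB lookup time, $t_m$ the time for one main memory access):

$$
\text{EMAT} = h\,(t_{TLB} + t_m) + (1 - h)\,(t_{TLB} + 2\,t_m)
$$

For example, with the assumed values $h = 0.9$, $t_{TLB} = 10$ ns and $t_m = 100$ ns:

$$
\text{EMAT} = 0.9 \times 110 + 0.1 \times 210 = 99 + 21 = 120 \text{ ns}
$$

compared with $2\,t_m = 200$ ns when there is no TLB at all.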

Q & A

What is a Translation Lookaside Buffer (TLB)?

-A Translation Lookaside Buffer (TLB) is a small, fast memory cache that stores a limited number of entries from the page table, which maps virtual addresses to physical addresses. The TLB improves memory access speed by reducing how often the page table in main memory has to be consulted for address translation.

Why is TLB used in memory management systems?

-TLB is used to reduce the time it takes to translate virtual memory addresses into physical memory addresses. By storing recent translations, it avoids repeated lookups in the page table and accessing the slower main memory, thereby improving overall system performance.

How does paging work in a computer system?

-In paging, a process is divided into small units called pages, and the main memory is divided into frames. The page table acts as an index that maps virtual pages to physical frames in memory, enabling efficient memory management and address translation.
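
The mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not the video's own example: the page size, the contents of `page_table`, and the function name `translate` are all assumptions chosen for the demonstration.

```python
PAGE_SIZE = 4096  # bytes per page and per frame (assumed value)

# Hypothetical page table: page number -> frame number
page_table = {0: 5, 1: 2, 2: 7}


def translate(logical_address: int) -> int:
    """Translate a logical address to a physical address using the page table."""
    # Split the logical address into a page number and an offset within the page
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    # Look up the frame that holds this page (a missing key would model a page fault)
    frame_number = page_table[page_number]
    # The offset is unchanged; only the page number is replaced by the frame number
    return frame_number * PAGE_SIZE + offset


physical = translate(4100)  # logical address 4100 lies in page 1 at offset 4
```

Here page 1 maps to frame 2, so logical address 4100 becomes physical address 2 × 4096 + 4 = 8196.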

What is the issue with accessing the page table and memory directly?

-Because the page table itself is stored in main memory, every memory reference needs two memory accesses: one to read the page table and find the frame number, and another to read the data from that frame in physical memory. This roughly doubles the access time, and it gets worse when page tables are large.

What is a TLB hit and how does it benefit memory access?

-A TLB hit occurs when the required page’s frame number is found in the TLB. The page table access in main memory is then skipped, so only one memory access (for the data itself) is needed. This speeds up the process because the TLB is much faster than RAM.

What happens during a TLB miss?

-During a TLB miss, the system must search the page table for the corresponding frame number. Once found, it accesses main memory to retrieve the data, and the translation is typically placed in the TLB for future references. This takes longer than a TLB hit, because it involves a TLB lookup, a page table access, and the main memory access.
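
The hit and miss paths can be sketched as a small simulation. This is only an illustration under stated assumptions: the class name `TLB`, the capacity, the page table contents, and the LRU replacement policy are all hypothetical choices; the video does not specify a replacement policy.

```python
from collections import OrderedDict


class TLB:
    """A tiny fully associative TLB with LRU replacement (illustrative only)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()  # page number -> frame number
        self.hits = 0
        self.misses = 0

    def lookup(self, page_number: int, page_table: dict) -> int:
        if page_number in self.entries:
            # TLB hit: the frame number comes straight from the TLB
            self.hits += 1
            self.entries.move_to_end(page_number)  # mark as most recently used
            return self.entries[page_number]
        # TLB miss: consult the page table in main memory, then cache the entry
        self.misses += 1
        frame_number = page_table[page_number]
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        self.entries[page_number] = frame_number
        return frame_number


page_table = {0: 5, 1: 2, 2: 7, 3: 1}  # hypothetical page table
tlb = TLB(capacity=2)
for page in [0, 1, 0, 1, 2, 0]:  # assumed sequence of referenced pages
    tlb.lookup(page, page_table)
hit_ratio = tlb.hits / (tlb.hits + tlb.misses)
```

With this reference sequence and a two-entry TLB, two of the six lookups hit, giving a hit ratio of one third. A longer sequence with more locality would push the ratio much higher.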

How does multi-level paging impact memory access time?

-Multi-level paging can increase memory access time because the page table itself may be spread across multiple levels, requiring additional memory accesses to find the correct frame number. This can significantly slow down the process of address translation.
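
To see how the number of page table levels affects access time, here is a minimal sketch. It assumes the common textbook model in which a TLB miss costs one memory access per page table level plus one access for the data; the function name `effective_access_time` and all timing values are assumptions for illustration.

```python
def effective_access_time(hit_ratio: float, tlb_time: float,
                          mem_time: float, levels: int = 1) -> float:
    """Average memory access time with a TLB and `levels` levels of page tables."""
    # Hit: one TLB lookup plus one memory access for the data
    hit_cost = tlb_time + mem_time
    # Miss: TLB lookup, one memory access per page table level, then the data access
    miss_cost = tlb_time + (levels + 1) * mem_time
    return hit_ratio * hit_cost + (1 - hit_ratio) * miss_cost


# Assumed values: 80% hit ratio, 20 ns TLB lookup, 100 ns memory access
single_level = effective_access_time(0.8, 20, 100, levels=1)
three_level = effective_access_time(0.8, 20, 100, levels=3)
```

Under these assumed numbers, the single-level case comes to about 140 ns and the three-level case to about 180 ns, which shows why a high hit ratio matters more as the number of levels grows.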

What are the advantages of TLB in terms of memory access?

-The primary advantage of TLB is its ability to speed up memory access by reducing the number of accesses to the slower main memory. By caching recently accessed page table entries, TLB significantly reduces the time spent in address translation, especially when there is a high hit ratio.

Why is the size of TLB limited, and what are the trade-offs?

-The size of the TLB is limited mainly by cost. It is built from fast cache memory, which is far more expensive per entry than main memory, so a larger TLB raises the cost of the system. The trade-off is choosing enough entries to get a high hit ratio while keeping costs manageable.

What factors influence the effectiveness of TLB?

-The effectiveness of TLB depends largely on the **hit ratio**. A higher hit ratio means more page entries are found in the TLB, reducing access time. A high **miss ratio** results in more time spent accessing the page table and main memory, negating the benefits of TLB.
