1. Data Integration in context - Talend

Summary

TLDR: This video introduces data integration and its role in transforming raw data into valuable business insights. It explains key concepts such as the ETL (Extract, Transform, Load) process and highlights common use cases such as data migration, warehousing, and synchronization. Viewers also learn about Change Data Capture (CDC) and the benefits of efficient data integration, including scalability, flexibility, and improved data quality. The video emphasizes how tools like Talend simplify the integration process, helping businesses streamline operations, improve data accuracy, and make better decisions with minimal coding.

Takeaways

- 😀 Data integration is crucial for managing and consolidating data from diverse sources and formats, helping to enable analytics and business insights.

- 😀 ETL (Extract, Transform, Load) tools automate the process of extracting data from sources, transforming it into a usable format, and loading it into a repository for further analysis.

- 😀 Data migration involves transferring application data from old systems to new ones, often requiring data format conversion and automated workflows to save time and reduce errors.

- 😀 Data warehousing aggregates transactional data for analysis and business intelligence, often integrating data from both internal and external sources.

- 😀 Data consolidation combines data from multiple locations or systems, typically in cases such as mergers or acquisitions, to create a unified view for easier access and decision-making.

- 😀 Data synchronization ensures that information across different systems or locations remains up-to-date and consistent, eliminating discrepancies in shared data.

- 😀 Change Data Capture (CDC) enables real-time tracking of data changes, reducing network traffic and improving ETL efficiency by only capturing new or updated data.

- 😀 A graphical ETL tool minimizes the need for manual coding, offering a user-friendly interface to manage complex data transformations.

- 😀 Modern ETL tools should be versatile, scalable, and capable of handling large and complex datasets while ensuring high data quality through profiling, cleansing, and standardization.

- 😀 Automating data integration tasks, such as exception handling and profiling, helps speed up the process and improves collaboration between different teams and departments.

- 😀 Talend Data Integration simplifies designing, developing, and deploying end-to-end data integration jobs through a drag-and-drop interface, improving workflow and speeding up the response to changing business needs.

Q & A

What is data integration and why is it important?

- Data integration is the process of combining data from multiple sources into a unified, centralized system. It is important because it enables businesses to access accurate, consistent data for analysis and decision-making, which is essential for generating valuable business insights.

What are the main challenges in data integration?

- The main challenges in data integration include handling different data formats, ensuring data consistency across various systems, and overcoming complexities in transforming and cleansing data. These tasks require specialized tools and methodologies to ensure that data is usable for analysis.

What does ETL stand for, and what are the three steps of the process?

- ETL stands for Extract, Transform, Load. The three steps are: 1) Extracting data from source systems, 2) Transforming the data by performing calculations, cleaning, and standardization, and 3) Loading the transformed data into a target system or repository for analysis.
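
The three steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Talend builds jobs: the CSV text, the column names, and the `sales` table are hypothetical, and an in-memory SQLite database stands in for the target repository.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real job would read from files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,20.00
3,Carol,5.25
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: standardize customer names and convert amounts to integer cents."""
    return [
        (
            int(row["order_id"]),
            row["customer"].strip().title(),
            round(float(row["amount"]) * 100),
        )
        for row in rows
    ]


def load(records, conn):
    """Load: write the transformed records into the (hypothetical) sales table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(text, conn):
    """Run extract, transform, and load in order, then read back the result."""
    load(transform(extract(text)), conn)
    return conn.execute(
        "SELECT customer, amount_cents FROM sales ORDER BY order_id"
    ).fetchall()
```

Calling `run_etl(RAW_CSV, sqlite3.connect(":memory:"))` returns the cleaned rows from the target table. Keeping each step in its own function mirrors the way graphical ETL tools present a job as separate, connected stages.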

How does an ETL tool simplify the data integration process?

- An ETL tool simplifies the process by automating the extraction, transformation, and loading of data, often with minimal coding required. This allows businesses to quickly integrate data from various sources, improving efficiency and reducing errors associated with manual processes.

What are some typical use cases for data integration?

- Typical use cases for data integration include data migration (moving data between systems), data warehousing (aggregating data for analysis), data consolidation (combining data due to mergers or acquisitions), and data synchronization (ensuring consistency across multiple systems).

What is Change Data Capture (CDC) and why is it important in data integration?

- Change Data Capture (CDC) is the process of identifying and capturing only the data that has changed since the last extraction. CDC is important because it helps reduce network traffic and ETL time, improving the efficiency of data integration and enabling real-time data updates.
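
One simple way to approximate this idea is a watermark: remember the latest modification time seen so far and, on the next run, pick up only rows changed after it. The sketch below assumes a hypothetical `updated_at` field on every row. Production CDC is often log-based instead, and nothing here reflects a specific Talend component.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    id: int
    value: str
    updated_at: int  # hypothetical modification timestamp (e.g. epoch seconds)


def capture_changes(source_rows, last_watermark):
    """Return the rows changed after last_watermark, plus the new watermark."""
    changed = [row for row in source_rows if row.updated_at > last_watermark]
    # If nothing changed, keep the old watermark so no change is ever skipped.
    new_watermark = max((row.updated_at for row in changed), default=last_watermark)
    return changed, new_watermark
```

Each run passes in the watermark returned by the previous run, so unchanged rows never travel over the network again.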

What role do data profiling and cleansing play in data integration?

- Data profiling involves analyzing data to understand its structure, quality, and relationships, while data cleansing ensures that the data is accurate and consistent. These processes are crucial for improving data quality before it is loaded into a target system, ensuring that the data used for analysis is reliable.
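
As a rough illustration of the difference between the two, the sketch below profiles a small batch (counting missing and distinct values per column) and then cleanses it. The `name` and `email` fields and the cleansing rules are assumptions chosen for the example, not rules taken from the video.

```python
def profile(rows, columns):
    """Profile: count missing and distinct values for each column."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
        }
    return report


def cleanse(rows):
    """Cleanse: trim whitespace, lowercase emails, drop rows without an email."""
    cleaned = []
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if not email:
            continue  # assumed rule: a record without an email is unusable
        cleaned.append({"name": (row.get("name") or "").strip(), "email": email})
    return cleaned
```

Profiling runs first and changes nothing; its report tells you which cleansing rules are worth applying before the load step.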

What are the key benefits of using a modern ETL tool for data integration?

- Key benefits include scalability to handle large datasets, faster delivery through automated and streamlined processes, flexibility to work with diverse data sources, and better data quality through profiling, cleansing, and de-duplication features. These tools also improve collaboration through shared repositories and versioning capabilities.

How can data integration help in merging customer data across different systems?

- Data integration can help by standardizing and merging customer information from various sources, ensuring that data from different departments or systems is consistent and accurate. This reduces redundancy, improves data quality, and ensures that customer profiles are complete and reliable.
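
A minimal sketch of such a merge, assuming that a normalized email address is a good enough matching key (real projects often need fuzzier matching). The source names, field names, and the "earlier source wins, later sources fill gaps" rule are all hypothetical choices for this example.

```python
def normalize_email(email):
    """Standardize the matching key so formatting differences do not split a customer."""
    return email.strip().lower()


def merge_customers(*sources):
    """Merge records keyed by normalized email.

    Earlier sources take priority; later sources only fill in empty fields.
    """
    merged = {}
    for source in sources:
        for record in source:
            key = normalize_email(record["email"])
            target = merged.setdefault(key, {"email": key})
            for field, value in record.items():
                if field != "email" and value and not target.get(field):
                    target[field] = value
    return list(merged.values())
```

For example, `merge_customers(crm_rows, billing_rows)` yields one profile per customer, with the CRM name kept and a missing phone number filled in from billing.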

What is the significance of automating exception handling in data integration projects?

- Automating exception handling helps to quickly identify and address issues that arise during the data integration process, reducing manual intervention and minimizing delays. This leads to faster data processing and fewer errors, making the integration process more efficient and reliable.
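
One common pattern for this is a reject flow: rows that fail a transformation are set aside with the reason for the failure, while the rest of the batch continues. The sketch below is an assumption about how that could look in plain Python; `parse_amount` and its fields are hypothetical.

```python
def process_with_rejects(rows, transform):
    """Apply transform to each row; route failures to a reject list instead of stopping."""
    accepted, rejected = [], []
    for index, row in enumerate(rows):
        try:
            accepted.append(transform(row))
        except (KeyError, ValueError) as exc:
            # Record enough context to fix the row later without rerunning the batch.
            rejected.append(
                {"row_index": index, "row": row, "error": f"{type(exc).__name__}: {exc}"}
            )
    return accepted, rejected


def parse_amount(row):
    """Hypothetical transformation: convert string fields to typed values."""
    return {"id": int(row["id"]), "amount": float(row["amount"])}
```

Only `KeyError` and `ValueError` are caught here on purpose, so that unexpected failures still surface instead of being silently rejected.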

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Pertemuan 1 - Pengantar Data Mining | Kuliah Online Data Mining 2021 | Data Mining Indonesia

Data Mining Foundations Eps-01 Apa itu Data Mining?

The Impact of Generative AI on Business Intelligence

Data Buzzwords: BIG Data, IoT, Data Science and More | #Tableau Course #1

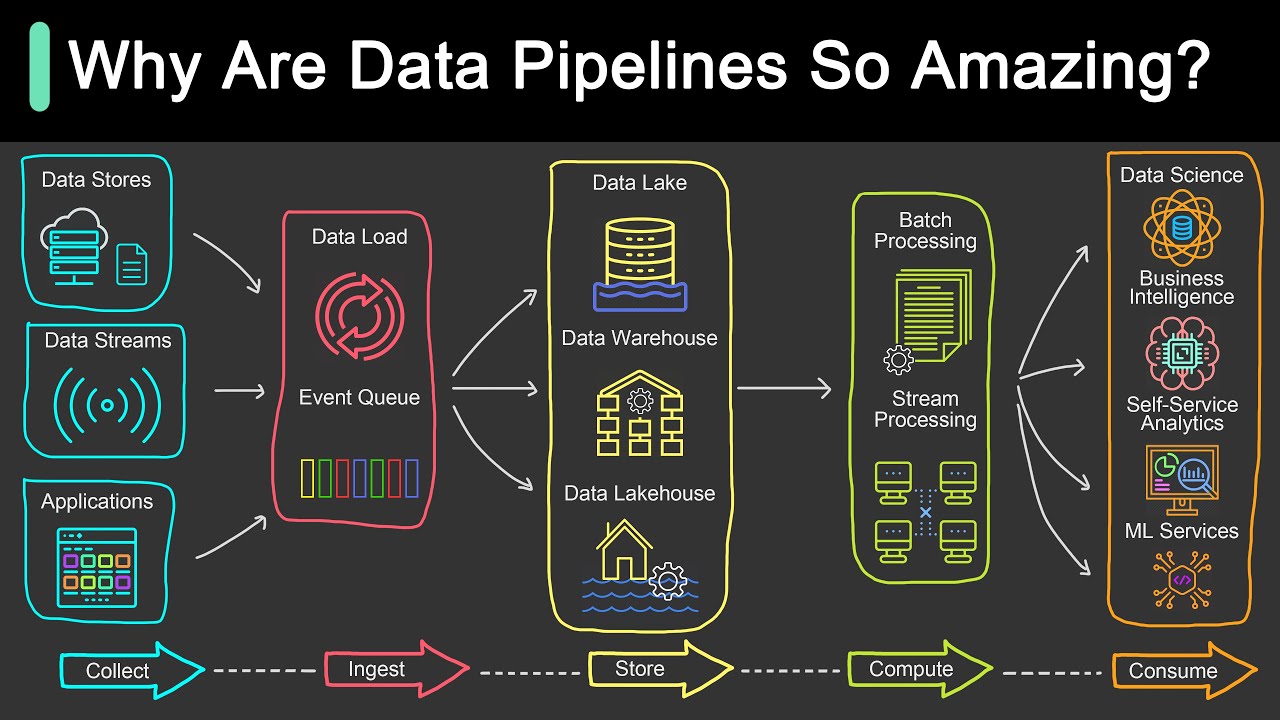

What is Data Pipeline? | Why Is It So Popular?

PBI - Kimiafarma CCV 1

5.0 / 5 (0 votes)