Hands-On Generative Adversarial Network

Summary

TLDR: This video tutorial introduces Generative Adversarial Networks (GANs) and shows how to build one in Python. It covers the foundational setup, including key variables such as the image size, the latent dimension, and the optimizer. The tutorial then builds the generator and discriminator as sequential neural network models, emphasizing the choice of layers and activation functions such as LeakyReLU and Tanh. It concludes by compiling and configuring the combined GAN, which is left for training and testing in later sessions.

Takeaways

- 💻 The video covers the implementation of GAN (Generative Adversarial Network) using Python programming.

- 🔧 Necessary libraries include Keras, TensorFlow, and NumPy, which should be installed prior to building the model.

- 📊 The dataset used is the MNIST dataset, although other datasets can also be applied with minor changes.

- 📐 Images are defined as 28x28 pixels with a single grayscale color channel.

- 🎛️ A latent dimension is introduced; it sets how many random noise values the generator takes as input when producing an image.

- ⚙️ The optimizer used is stochastic gradient descent (SGD) with a recommended learning rate of 0.0001.

- 🏗️ The generator model is built as a sequential neural network, using dense layers and LeakyReLU activation for stability.

- 📏 Batch normalization is added to the generator model to stabilize the learning process, with a momentum value of 0.8.

- 🖼️ The output of the generator model needs to be reshaped to the defined image size using NumPy for further processing.

- ⚖️ The discriminator model is also sequential, and both generator and discriminator are compiled with binary cross-entropy loss.
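The settings listed above can be collected in one place before any model is built. The sketch below is a hypothetical configuration object (`GANConfig` is an illustrative name, not from the tutorial); the latent dimension of 100 is an assumed value because the summary does not state it, and the LeakyReLU alpha of 0.2 comes from the Q&A further down.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GANConfig:
    # 28x28 pixels with 1 grayscale channel, as stated in the tutorial summary
    image_size: tuple = (28, 28, 1)
    # Length of the generator's noise input; 100 is an ASSUMED value
    latent_dim: int = 100
    # Learning rate for stochastic gradient descent (SGD)
    learning_rate: float = 0.0001
    # Negative slope of LeakyReLU and momentum of BatchNormalization
    leaky_alpha: float = 0.2
    bn_momentum: float = 0.8


config = GANConfig()
print(config)
```

Freezing the dataclass keeps the hyperparameters from being changed accidentally once the generator and discriminator have been built from them.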

Q & A

What is the main topic of the script?

-The main topic of the script is about building a Generative Adversarial Network (GAN) using the Python programming language.

What are the prerequisites mentioned for building a GAN in the script?

-The prerequisites are having the Keras and TensorFlow libraries installed in the notebook environment or on the server.
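A quick way to confirm these prerequisites before starting is to ask Python whether each package can be found. This is a minimal sketch using only the standard library; `check_prerequisites` is a hypothetical helper, and NumPy is included because the takeaways list it as well.

```python
import importlib.util


def check_prerequisites(packages=("tensorflow", "keras", "numpy")):
    """Return {package: True/False} depending on whether it is importable."""
    # find_spec locates a top-level package without actually importing it
    return {name: importlib.util.find_spec(name) is not None for name in packages}


status = check_prerequisites()
for name, installed in status.items():
    print(f"{name}: {'installed' if installed else 'missing'}")
```

Any package reported as missing can then be installed (for example with pip) before running the notebook.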

Which dataset is used for the practical exercise in the script?

-The dataset used for the practical exercise is the MNIST dataset.

What is the size of the image output defined for the GAN in the script?

-The size of the image output defined for the GAN is 28x28 pixels with a single channel (grayscale).

What is the role of the variable 'image_size' in the script?

-The variable 'image_size' contains information about the image dimensions and the color channels used.
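Storing the size as a (height, width, channels) tuple makes it easy to derive other quantities from it. The sketch below assumes the tuple layout described above; the product of its entries is the number of values per image, which is how wide a dense layer must be for its output to be reshaped back to `image_size`.

```python
import numpy as np

# image_size as described: height, width, channels (1 = grayscale)
image_size = (28, 28, 1)

height, width, channels = image_size
# Number of values in one image: 28 * 28 * 1
flat_size = int(np.prod(image_size))
print(height, width, channels, flat_size)
```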

What does the variable 'latent_dim' represent in the context of the script?

-The variable 'latent_dim' sets the number of latent variables, i.e. the length of the random noise vector from which the GAN generates an image.
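In practice, `latent_dim` fixes the shape of the noise batch handed to the generator. This NumPy sketch assumes `latent_dim = 100`, a hypothetical batch size of 32, and standard-normal noise (a common choice; the summary does not say which distribution the tutorial uses).

```python
import numpy as np

latent_dim = 100  # ASSUMED value; not stated in the summary
batch_size = 32   # hypothetical batch size

rng = np.random.default_rng(seed=0)
# One noise vector of length latent_dim per image to be generated
noise = rng.normal(loc=0.0, scale=1.0, size=(batch_size, latent_dim))
print(noise.shape)
```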

Which optimization algorithm is used for training the GAN in the script?

-The optimization algorithm used for training the GAN is Stochastic Gradient Descent with a learning rate of 0.0001.
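Plain stochastic gradient descent moves each weight a small step against its gradient, scaled by the learning rate. The toy example below applies one step with the tutorial's learning rate of 0.0001 to the loss L(w) = w², whose gradient is 2w; `sgd_step` is an illustrative helper. Keras's `SGD` optimizer with its default momentum of 0 applies the same update rule.

```python
def sgd_step(weights, grads, learning_rate=0.0001):
    """One plain SGD update: w <- w - learning_rate * gradient."""
    return [w - learning_rate * g for w, g in zip(weights, grads)]


# Toy loss L(w) = w**2, so dL/dw = 2*w
w = [1.0]
grad = [2 * w[0]]
w = sgd_step(w, grad)
print(w)
```

With such a small learning rate each step changes the weights only slightly (here from 1.0 to about 0.9998), which trades training speed for stability.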

How is the generator component of the GAN defined in the script?

-The generator component is defined as a sequential neural network model with layers of increasing size, starting with 256 units.
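The cost of stacking wider layers can be estimated by counting dense-layer parameters (one weight per input-output pair plus one bias per output). The sketch assumes hypothetical hidden widths of 256, 512 and 1024 (the summary only says the layers grow from 256), an assumed `latent_dim` of 100, and a final layer of 28 × 28 × 1 = 784 units; BatchNormalization parameters are not counted.

```python
latent_dim = 100              # ASSUMED noise length
hidden_units = [256, 512, 1024]  # hypothetical increasing widths
output_units = 28 * 28 * 1    # one value per pixel


def dense_param_count(n_in, n_out):
    # weight matrix (n_in x n_out) plus one bias per output unit
    return n_in * n_out + n_out


widths = [latent_dim] + hidden_units + [output_units]
pairs = list(zip(widths[:-1], widths[1:]))
layer_params = [dense_param_count(a, b) for a, b in pairs]
for (a, b), p in zip(pairs, layer_params):
    print(f"Dense {a:>4} -> {b:>4}: {p} parameters")
print("total dense parameters:", sum(layer_params))
```

Most parameters sit in the last two layers, so the choice of the largest hidden width dominates the generator's size.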

What activation function is used for the generator model in the script?

-The activation function used for the generator model is LeakyReLU with an alpha value of 0.2.
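LeakyReLU passes positive inputs through unchanged and multiplies negative inputs by alpha instead of zeroing them, so negative units still receive a small gradient. A minimal NumPy version with alpha = 0.2:

```python
import numpy as np


def leaky_relu(x, alpha=0.2):
    # x for positive inputs, alpha * x for the rest
    return np.where(x > 0, x, alpha * x)


x = np.array([-2.0, -0.5, 0.0, 1.5])
print(leaky_relu(x))
```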

What is the purpose of Batch Normalization in the GAN model as mentioned in the script?

-Batch Normalization is used to make the GAN model more stable during training, with a momentum value of 0.8.
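During training, batch normalization normalizes each feature with the current batch's mean and variance and also keeps running averages for use at inference time. The momentum value controls those running averages: with 0.8, each update keeps 80% of the old value and takes 20% from the new batch, following the moving-average rule documented for Keras's `BatchNormalization`. This NumPy sketch omits the learned scale and shift; `batch_norm_train` is an illustrative name and eps = 1e-3 mirrors the Keras default.

```python
import numpy as np


def batch_norm_train(x, moving_mean, moving_var, momentum=0.8, eps=1e-3):
    """Training-mode batch normalization without the learned scale/shift."""
    batch_mean = x.mean(axis=0)
    batch_var = x.var(axis=0)
    # Normalize every feature with the statistics of the current batch
    x_hat = (x - batch_mean) / np.sqrt(batch_var + eps)
    # Running statistics for inference: keep 80% old, take 20% new
    moving_mean = momentum * moving_mean + (1 - momentum) * batch_mean
    moving_var = momentum * moving_var + (1 - momentum) * batch_var
    return x_hat, moving_mean, moving_var


x = np.array([[1.0], [2.0], [3.0]])
x_hat, m, v = batch_norm_train(x, moving_mean=np.zeros(1), moving_var=np.ones(1))
print(x_hat.ravel(), m, v)
```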

How is the discriminator component of the GAN defined in the script?

-The discriminator component is also defined as a sequential model, with layers of decreasing size, starting with 256 units.
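A forward pass through such a discriminator flattens each image, passes it through shrinking hidden layers, and ends in a single score per image. This NumPy sketch uses random weights and hypothetical widths of 256 and 128; the LeakyReLU hidden activations and the sigmoid output (which yields a probability suited to binary cross-entropy) are assumptions, since the summary does not name the discriminator's activations.

```python
import numpy as np


def leaky_relu(x, alpha=0.2):
    return np.where(x > 0, x, alpha * x)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(seed=0)
# Hypothetical widths: flattened 28x28x1 image -> 256 -> 128 -> 1
widths = [784, 256, 128, 1]
params = [(rng.normal(0, 0.05, (a, b)), np.zeros(b))
          for a, b in zip(widths[:-1], widths[1:])]


def discriminator(images):
    h = images.reshape(len(images), -1)  # flatten each image to 784 values
    for W, b in params[:-1]:
        h = leaky_relu(h @ W + b)        # hidden layers shrink: 256, then 128
    W, b = params[-1]
    return sigmoid(h @ W + b)            # one "real" probability per image


images = rng.uniform(-1, 1, size=(4, 28, 28, 1))
scores = discriminator(images)
print(scores.shape)
```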

What is the final output size of the generator model in the script?

-The final output size of the generator model is 28x28x1, which matches the defined image size.
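If the generator's last dense layer emits one flat row of 784 values per image, reshaping each row gives the 28x28x1 images described above. In this sketch random numbers stand in for the generator's output and the batch size of 16 is hypothetical; NumPy's row-major reshape produces the same per-sample layout as a Keras `Reshape` layer.

```python
import numpy as np

image_size = (28, 28, 1)
batch_size = 16  # hypothetical

# Stand-in for the generator's flat output: 784 values per image
flat_output = np.random.default_rng(0).normal(size=(batch_size, int(np.prod(image_size))))

# Reshape every row into a 28x28x1 image, keeping the batch axis first
images = flat_output.reshape((batch_size,) + image_size)
print(images.shape)
```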

What activation function is used at the end of the generator and discriminator models?

-The generator's hidden layers use LeakyReLU, while its final output layer uses Tanh.
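The switch to Tanh in the output layer bounds every generated pixel, whereas LeakyReLU is unbounded above. The sketch below applies both to the same pre-activations from random, hypothetical weights; a bounded output is only useful if the training images are scaled to the same range (commonly [-1, 1] when Tanh is used), which is an assumption here.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
h = rng.normal(size=(8, 1024))            # stand-in for the last hidden layer's activations
W = rng.normal(0, 0.5, size=(1024, 784))  # hypothetical final dense weights
b = np.zeros(784)

z = h @ W + b
leaky = np.where(z > 0, z, 0.2 * z)  # LeakyReLU: no upper bound
pixels = np.tanh(z)                  # Tanh: squashed into [-1, 1]

print(round(float(np.abs(leaky).max()), 2), round(float(np.abs(pixels).max()), 4))
```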

How are the generator and discriminator components combined to form the GAN algorithm in the script?

-The generator and discriminator are combined by defining a new sequential model that includes both components and configuring it with binary crossentropy as the loss function and stochastic gradient descent as the optimizer.
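Binary cross-entropy compares a predicted probability with a 0/1 label. The sketch below shows how the same loss can serve both parts of the GAN under the usual labelling convention (an assumption; the summary does not spell it out): the discriminator is trained with real images labelled 1 and generated images labelled 0, while the combined model labels generated images 1, so lowering its loss means fooling the discriminator. The discriminator outputs are made-up numbers, and the clipping mirrors the small-epsilon clipping Keras applies.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from 0 and 1 so log() stays finite
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))))


# Hypothetical discriminator outputs for real and for generated images
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.3, 0.1])

# Discriminator loss: real labelled 1, generated labelled 0
d_loss = 0.5 * (binary_crossentropy(np.ones(2), d_real)
                + binary_crossentropy(np.zeros(2), d_fake))
# Combined-model loss: generated images labelled 1 (the generator wants "real")
g_loss = binary_crossentropy(np.ones(2), d_fake)
print(round(d_loss, 4), round(g_loss, 4))
```

Here the discriminator is doing well, so its loss is low while the generator's loss is high; training alternates between the two objectives.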

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Generative Adversarial Networks (GANs) - Computerphile

Generated Adversarial Network

Machine Learning Interview Questions 2024 | ML Interview Questions And Answers 2024 | Simplilearn

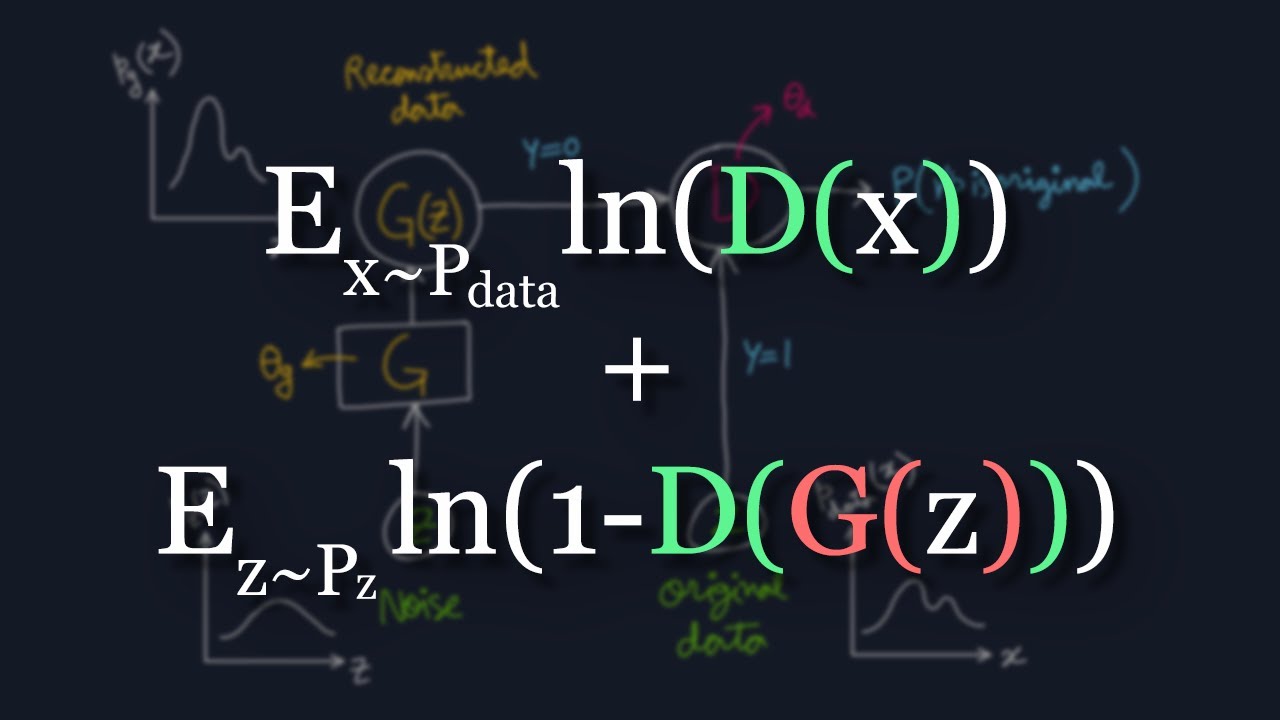

The Math Behind Generative Adversarial Networks Clearly Explained!

What is Zero-Shot Learning?

Detailed Prerequisites To Start Learning Agentic AI With Free Videos And Materials

5.0 / 5 (0 votes)