Apple Intelligence EXPLAINED: No iPhone 16 Needed!

Summary

TLDR: The video explores Apple's new AI features, focusing on how they use a hybrid AI system to balance on-device processing and cloud computing for privacy and efficiency. The new capabilities include a smarter Siri, notification summarization, and on-device text rewriting. It highlights how these features enhance the user experience without compromising security, and discusses Apple's approach to handling AI tasks. The video also suggests alternatives like ChatGPT and Google Lens for those who want similar features on older iPhones, emphasizing that while the upgrade isn't necessary, Apple's system-wide integration makes the experience more seamless.

Takeaways

- 📱 Apple's upcoming iPhone models are set to feature advanced AI capabilities, but the specifics of how they operate have been somewhat unclear.

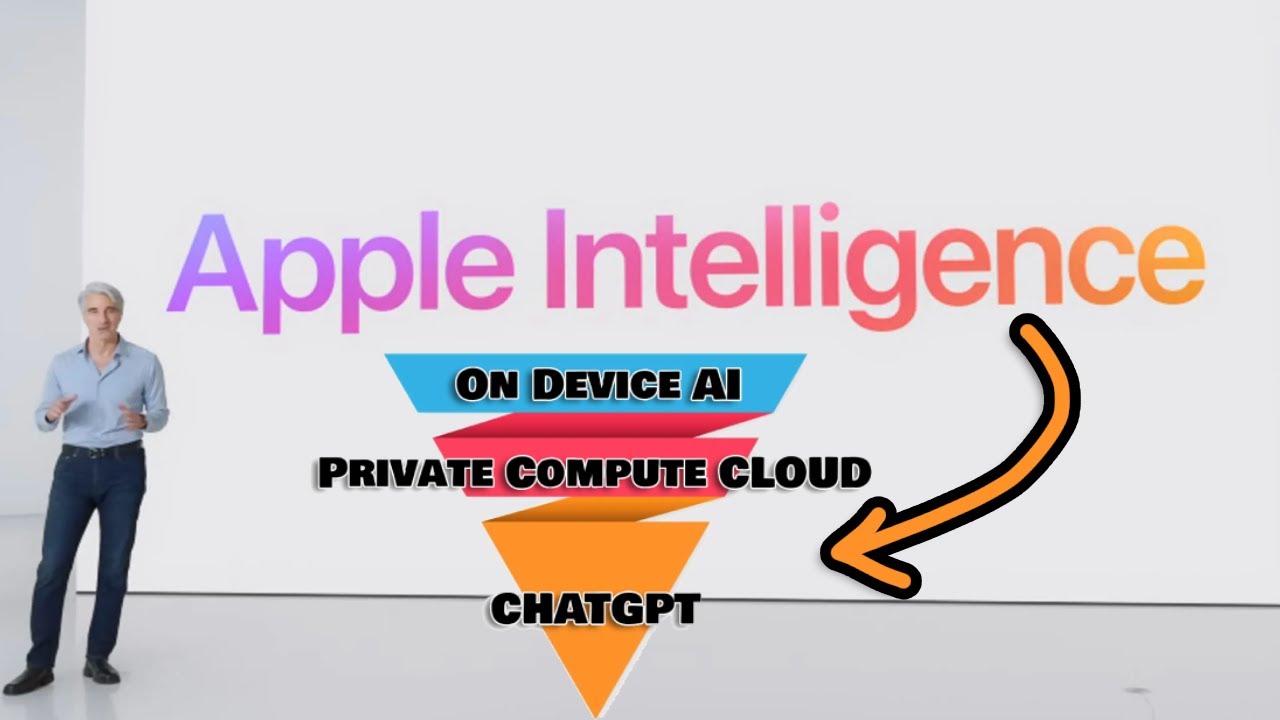

- 🤖 Apple uses a hybrid AI system that balances tasks between on-device processing and cloud computing to optimize performance and security.

- 🔒 Privacy is a key focus, with Apple's AI models designed to handle complex tasks locally, reducing the need to send data to external servers.

- 📊 On-device AI features like notification summarization and emoji generation are powered by lightweight models that run directly on the iPhone.

- 📝 The standout feature, on-device text summarization, uses a dedicated model to proofread and rewrite text in different styles, though it is limited by the iPhone's RAM and processing power.

- 🌐 Third-party apps like ChatPlayground offer additional AI capabilities, such as an AI browser co-pilot that simplifies information gathering and text generation.

- 📝 Apple provides AI with clear, detailed instructions to guide its behavior, aiming to keep it on track and prevent it from 'hallucinating', that is, making up facts.

- 💡 Apple's on-device intelligence relies on a model called OpenELM, which is small but efficient, requiring less RAM than larger models like GPT.

- 🛠️ Adapters and quantization techniques allow Apple to further optimize AI models for specific tasks, reducing memory usage and improving speed.

- ☁️ Private Cloud Compute (PCC) is Apple's cloud-based AI that steps in when on-device models can't handle a task, with a focus on privacy and security.

- 🚀 Siri has been significantly upgraded to access on-device data, apps, and personal contacts, providing more integrated and personalized assistance.

- 📸 Visual intelligence, a feature of the new iPhone, allows users to point their camera at objects to get instant insights, similar to Google Lens but with deeper system integration.

- 🖼️ Image Playground is an on-device image generation tool that can create images from text prompts or suggestions based on personal context, although its styles are currently limited.

- 🧼 The 'clean up' feature in Photos, which removes unwanted objects from images, can be replicated using third-party apps like Google Photos, negating the need for a new iPhone upgrade.

Q & A

What is Apple's approach to AI processing on devices?

-Apple uses a hybrid AI system where simple tasks are handled on the device, and more complex tasks are processed using Apple's Private Cloud Compute, which aims to keep data secure without relying on third-party servers.

How does Apple's notification summarizer work?

-The notification summarizer uses a lightweight AI model to analyze all notifications and sort them by urgency or priority, providing a summary of important notifications without needing cloud processing.

What is the role of 'adapters' in Apple's AI system?

-Adapters are small, task-specific tweaks that can be added to the base AI model. They allow the model to switch between different tasks efficiently without needing separate models for each job.
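The adapter idea can be illustrated with a toy layer. This is only a sketch under stated assumptions: the summary does not say how Apple's adapters are built, so the low-rank "down then up" form used here is an assumed, commonly used design, and `AdaptedLayer`, `add_adapter`, and `use` are hypothetical names.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class AdaptedLayer:
    """One shared base layer plus small, swappable per-task adapters (hypothetical)."""

    def __init__(self, base_weights):
        self.base = base_weights  # shared weights, never modified
        self.adapters = {}        # task name -> (down, up) pair of small matrices
        self.active = None        # currently selected task, or None for base only

    def add_adapter(self, task, down, up):
        self.adapters[task] = (down, up)

    def use(self, task):
        if task is not None and task not in self.adapters:
            raise KeyError(task)
        self.active = task

    def forward(self, x):
        y = matvec(self.base, x)
        if self.active is not None:
            down, up = self.adapters[self.active]
            # The adapter adds a small task-specific correction to the base output.
            delta = matvec(up, matvec(down, x))
            y = [a + b for a, b in zip(y, delta)]
        return y


layer = AdaptedLayer([[1, 0], [0, 1]])
layer.add_adapter("rewrite", down=[[1, 1]], up=[[0.5], [0.0]])

base_out = layer.forward([2, 3])      # base model only
layer.use("rewrite")
adapted_out = layer.forward([2, 3])   # same base weights, adapter switched on
```

Switching tasks only changes which small adapter is active; the base weights are loaded once and shared, which is the memory saving the answer above describes.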

How does Apple's AI system handle personalization?

-Apple's AI system uses personal context from stored data like messages, contacts, and calendar events to customize responses specifically for the user, ensuring privacy by keeping all data on the device.

What is the significance of 'quantization' in Apple's AI models?

-Quantization is a technique used to shrink AI models by lowering the precision of their parameters, allowing the model to trade off some accuracy for speed and efficiency while reducing memory usage.
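A minimal sketch of the idea, assuming simple symmetric quantization with one shared scale factor. The summary does not state which scheme or bit width Apple uses, so the 4-bit setting and the function names here are illustrative only.

```python
def quantize(weights, bits=8):
    """Map floats to small signed integers using one shared scale."""
    qmax = 2 ** (bits - 1) - 1                  # e.g. 7 for 4 bits, 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0  # avoid dividing by zero
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize(weights, bits=4)
restored = dequantize(q, scale)

# Largest rounding error introduced by storing 4-bit integers instead of floats.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs only a few bits plus one shared `scale`, at the cost of a rounding error of at most half a quantization step per weight.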

How does Apple's Private Cloud Compute (PCC) work?

-PCC is Apple's cloud-based AI that kicks in when the on-device model can't handle a task. The system decides whether to keep a task on the device or push it to the cloud, with a focus on data privacy and security.
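The routing decision can be pictured with a toy function. This is a hypothetical sketch, not Apple's actual logic: the task names, the token limit, and the `route` function are all assumptions made for illustration.

```python
from dataclasses import dataclass

ON_DEVICE = "on_device"
PRIVATE_CLOUD = "private_cloud_compute"

# Hypothetical: kinds of task that a small on-device model is assumed to handle.
ON_DEVICE_KINDS = {"summarize_notifications", "rewrite_text", "generate_emoji"}

# Hypothetical size limit for inputs the on-device model can process.
MAX_ON_DEVICE_TOKENS = 2000


@dataclass
class Request:
    kind: str          # what the user asked for
    input_tokens: int  # rough size of the input


def route(request):
    """Keep small, supported tasks local; send everything else to the cloud."""
    if request.kind in ON_DEVICE_KINDS and request.input_tokens <= MAX_ON_DEVICE_TOKENS:
        return ON_DEVICE
    return PRIVATE_CLOUD


small = route(Request("rewrite_text", 300))
large = route(Request("rewrite_text", 50_000))
other = route(Request("plan_trip_itinerary", 300))
```

Here a short rewrite stays on the device, while an oversized input or an unsupported task falls back to the cloud path.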

What is the new Siri's capability in terms of accessing personal data?

-The new Siri can access on-device data, apps, and personal contacts, providing more integrated and personalized assistance compared to previous versions.

How does Apple's 'Visual Intelligence' feature differ from Google Lens?

-Apple's Visual Intelligence is integrated into the system and uses a hybrid approach of on-device processing and cloud computing. It syncs with Siri and personal contacts, offering insights into objects, text, or landmarks by pointing the iPhone at them.

What is 'Image Playground' and how does it work?

-Image Playground is an image generation tool that can be accessed from iMessage or as a standalone app. It generates images using text prompts or suggestions based on recent activities and personal context.

How can users replicate Apple's AI features on older iPhones?

-Users can replicate some of Apple's AI features on older iPhones by using standalone apps like ChatGPT's DALL·E for image generation or Google Lens for visual intelligence.

What is the main advantage of Apple's approach to AI compared to standalone apps?

-The main advantage of Apple's approach is the seamless integration of AI features across the entire system, providing a cohesive user experience that standalone apps may not match.
