Large Language Models (LLMs) - Everything You NEED To Know

Summary

TLDR: This video offers a comprehensive guide to artificial intelligence and large language models (LLMs), explaining their evolution, inner workings, and applications. It covers the history of LLMs from ELIZA in 1966 to modern models like GPT-4, with a reported 1.76 trillion parameters. The video discusses how LLMs work, including tokenization, embeddings, and Transformers. It also addresses ethical concerns, limitations, and the future of AI, including advancements in knowledge distillation and multimodality.

Takeaways

- 🌐 Large Language Models (LLMs) are a type of neural network trained on vast amounts of text data, including web content and books.

- 🤖 LLMs differ from traditional programming by teaching computers how to learn rather than instructing them with explicit rules.

- 📈 The capabilities of LLMs have evolved significantly since the 1966 ELIZA model, with advancements like the Transformer architecture enabling more sophisticated language understanding.

- 🚀 Popular applications of LLMs include image recognition, summarization, text generation, and programming assistance.

- 📚 The training process for LLMs involves tokenization, embeddings, and the use of Transformer algorithms to understand and generate human-like text.

- 💾 Embeddings represent words as numerical vectors that capture semantic meaning, and vector databases store and search those vectors, which plays a crucial role in how LLMs process language.

- 🔍 The training of LLMs requires extensive data and computational resources, making it a costly and complex endeavor.

- 📉 Despite their capabilities, LLMs have limitations including struggles with logic, potential biases from training data, and the risk of generating false information.

- 🔧 Fine-tuning allows for the customization of pre-trained LLMs for specific tasks, making them more efficient and effective for targeted applications.

- 🌟 Ongoing research in LLMs focuses on knowledge distillation, retrieval augmented generation, and improving reasoning abilities to enhance their practical utility.

- 🔮 Ethical considerations around LLMs include the use of copyrighted material, potential for misuse, and the broader impact on various professions.

Q & A

What is the primary focus of the video?

-The video focuses on explaining large language models (LLMs), their workings, ethical considerations, applications, and the evolution of these technologies.

What is a large language model (LLM)?

-LLMs are a type of neural network trained on vast amounts of text data, designed to understand and generate human-like text based on patterns learned from the data.

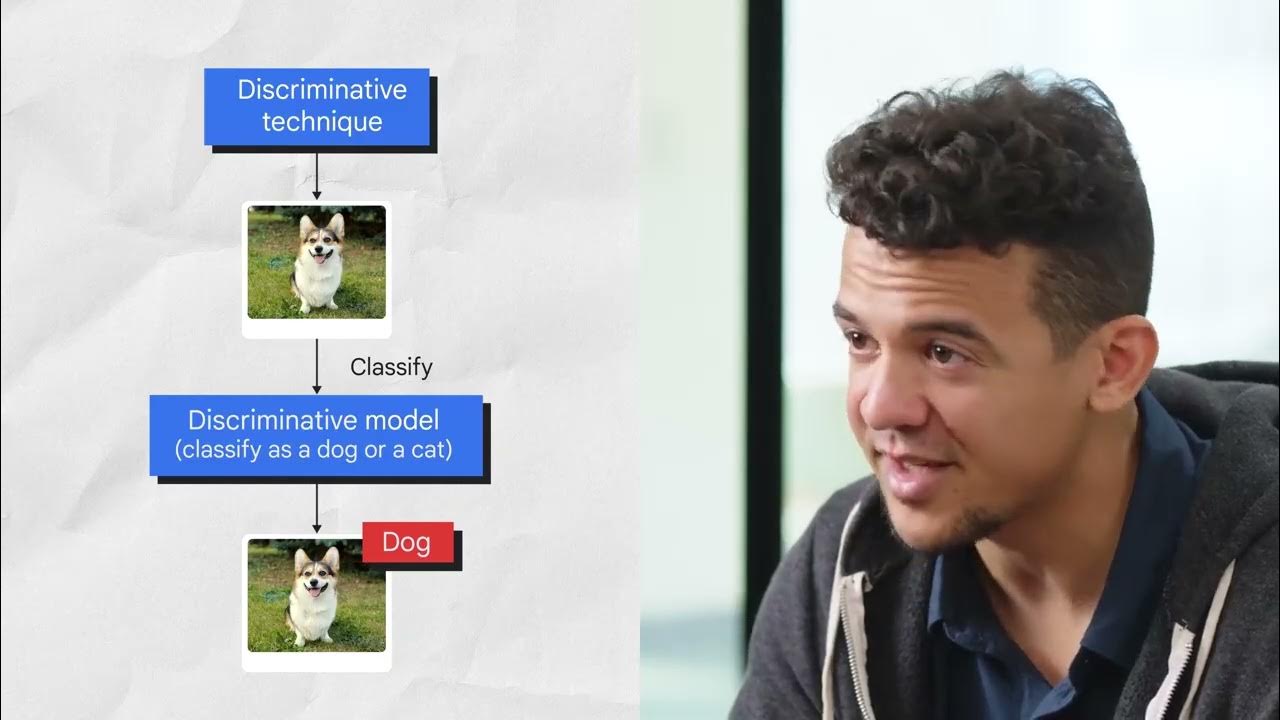

How do LLMs differ from traditional programming?

-Traditional programming is instruction-based, where you explicitly tell the computer what to do. LLMs, on the other hand, are trained to learn how to do things, offering a more flexible approach that can adapt to various applications.

What is a neural network?

-A neural network is a set of algorithms designed to recognize patterns in data, loosely modeled on the way neurons in the human brain work.

What is the significance of the 'Transformers' research paper in the context of LLMs?

-The 'Transformers' paper introduced a new architecture built around self-attention, which greatly reduced training time and revolutionized the development of LLMs, including GPT and BERT.
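To make self-attention less abstract, here is a minimal sketch in plain Python. It is a simplification and not the full architecture from the paper: it assumes the queries, keys, and values are all the raw input vectors, whereas a real Transformer first passes them through learned projection matrices and uses several attention heads. The function names `attention_weights` and `self_attention` are illustrative only.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(vectors):
    """For each token vector, score every token by scaled dot product."""
    dim = len(vectors[0])
    weights = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights.append(softmax(scores))
    return weights


def self_attention(vectors):
    """Replace each token vector with a weighted mix of all token vectors."""
    dim = len(vectors[0])
    return [
        [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        for row in attention_weights(vectors)
    ]


# Three toy 2-dimensional token vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(tokens)
```

Each output vector is a blend of every input vector, weighted by how strongly the tokens match, which is how a token can pick up information from its context.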

How does tokenization work in LLMs?

-Tokenization is the process of splitting text into individual tokens, which are essentially parts of words, allowing models to understand each word in the context in which it is used.
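As a rough illustration of splitting words into parts, the sketch below uses a greedy longest-match rule against a small, hypothetical vocabulary. This is an assumption made for the example: production LLM tokenizers typically learn their vocabulary from data (for instance with byte-pair encoding), and the `tokenize` function and `toy_vocab` set here are invented names, not part of any library.

```python
def tokenize(text, vocab):
    """Split each word into the longest vocabulary pieces, left to right.

    Characters that match no vocabulary entry become single-character tokens.
    """
    tokens = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate piece until it is in the vocabulary
            # or only one character is left.
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens


# Hypothetical vocabulary of word pieces.
toy_vocab = {"token", "ization", "un", "believ", "able"}
pieces = tokenize("tokenization", toy_vocab)  # ["token", "ization"]
```

Working with pieces rather than whole words lets a model handle words it has never seen, as long as it knows the parts.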

What are embeddings in the context of LLMs?

-Embeddings are numerical representations of tokens that help computers understand the meaning of words and their relationships to other words.
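A small sketch can show how numerical vectors express relationships between words. The three-dimensional vectors below are made up by hand for illustration; real embeddings are learned during training and usually have hundreds or thousands of dimensions. Cosine similarity is one common way to compare them, and `most_similar` is a hypothetical helper name.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word, embeddings):
    """Find the other word whose vector points in the most similar direction."""
    target = embeddings[word]
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(target, embeddings[w]))


# Hand-written toy vectors, not taken from any real model.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.9, 0.1],
    "pizza": [0.1, 0.0, 0.95],
}
nearest = most_similar("king", toy_embeddings)  # "queen"
```

Words with related meanings end up with vectors that point in similar directions, so "king" scores much closer to "queen" than to "pizza" in this toy data.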

How are large language models trained?

-LLMs are trained by feeding pre-processed text data into the model, which then uses algorithms like Transformers to predict the next word based on context, adjusting the model's weights through millions of iterations.
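The idea of predicting the next word and adjusting weights over many iterations can be sketched at toy scale. The example below is not a Transformer: it assumes the simplest possible model, where the prediction depends only on the previous word, and trains one weight per word pair with gradient descent on a cross-entropy loss. The function names, the learning rate, and the epoch count are all illustrative choices.

```python
import math


def train_next_word_model(tokens, epochs=200, lr=0.1):
    """Learn a table of weights that scores each possible next word."""
    vocab = sorted(set(tokens))
    index = {word: i for i, word in enumerate(vocab)}
    size = len(vocab)
    weights = [[0.0] * size for _ in range(size)]  # weights[prev][next]
    pairs = [(index[a], index[b]) for a, b in zip(tokens, tokens[1:])]

    for _ in range(epochs):
        for prev, nxt in pairs:
            logits = weights[prev]
            peak = max(logits)
            exps = [math.exp(x - peak) for x in logits]
            total = sum(exps)
            probs = [e / total for e in exps]  # predicted next-word distribution
            for j in range(size):
                # Gradient of cross-entropy loss with respect to each logit.
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                logits[j] -= lr * grad
    return vocab, index, weights


def predict_next(word, vocab, index, weights):
    """Return the word with the highest learned score after `word`."""
    row = weights[index[word]]
    return vocab[row.index(max(row))]


corpus = "the cat sat on the mat the cat sat".split()
vocab, index, weights = train_next_word_model(corpus)
guess = predict_next("the", vocab, index, weights)  # "cat"
```

Each pass nudges the weights so that the words that actually followed get a higher score, which is the same feedback loop an LLM runs at a vastly larger scale.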

What is fine-tuning in the context of LLMs?

-Fine-tuning involves adjusting a pre-trained LLM using specific data to improve its performance for a particular task, such as understanding pizza-related terminology for a pizza ordering system.

What are some limitations and challenges of LLMs?

-LLMs have limitations such as struggles with math and logic, potential biases from training data, the risk of spreading misinformation, high hardware requirements, and ethical concerns regarding data usage and potential misuse.

What are some real-world applications of LLMs?

-LLMs can be used for language translation, coding assistance, summarization, question answering, essay writing, and even image and video creation.
