Building a RAG application using open-source models (Asking questions from a PDF using Llama2)

Summary

TLDR: This video offers a detailed guide to running LLMs locally on your computer, focusing on open-source models as cheaper, more private alternatives to GPT. The presenter explains why it matters to understand and use local LLMs, highlighting their accessibility, lower cost, and privacy. The presenter also demonstrates how to build a retrieval-augmented generation (RAG) system that uses local models to answer questions from a PDF, emphasizing the reasoning behind the code rather than the code itself. The content is useful for anyone who wants to deploy AI models in scenarios without connectivity or as a backup for hosted models such as OpenAI's.

Takeaways

- 🌟 To run an LLM locally on your computer, you use open-source models, which are accessible and low-cost.

- 🛠️ Open-source models matter for privacy: companies can handle their data internally without connecting to external APIs.

- 🔄 The key goal of the video is to convey the logic behind the code, not just the code itself.

- 📚 The process starts with a look at Ollama, a tool that serves as a common wrapper for different models.

- 🔗 Models such as Llama 2, Mistral, and Mixtral can be downloaded through Ollama.

- 📋 Installing Ollama is simple, and it is available for Mac, Linux, and Windows.

- 📈 LLMs are like giant mathematical formulas made up of parameters: weights and biases.

- 💻 Downloading a model fetches all of these values and stores them on your hard drive.

- 🔍 LangChain is used to build a simple RAG system from scratch, using the local model to answer questions from a PDF.

- 🔖 A virtual environment is created in Visual Studio Code so the required libraries can be installed without affecting the main system.

- 📊 The video demonstrates how to get answers from a local model and how to process the contents of a PDF to answer specific questions.

Q & A

Why is it important to know how to use a local LLM?

- For several reasons: open-source models keep improving, they are cheaper than GPT models for certain use cases, they offer privacy advantages because they do not need to connect to an external API, and they are useful in scenarios without connectivity, such as robotics or edge devices.

What benefits do open-source models offer compared with GPT models?

- Open-source models are cheaper and work well for use cases that do not require the full power of GPT models. This makes them especially valuable for companies concerned about privacy and for applications in environments without internet access.

How can an open-source model be used as a backup for OpenAI models?

- An open-source model can be configured as a fallback for OpenAI models: if the OpenAI API goes down, the application switches immediately to the open-source model and the workflow continues without interruption.
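The fallback idea can be sketched in a few lines of Python. This is a minimal illustration, not the code from the video: `hosted_model` and `local_model` are hypothetical stand-ins for a call to a hosted API and a call to a locally running model, and the hosted one is hard-coded to fail so the switch is visible.

```python
from typing import Callable, Sequence


def ask_with_fallback(prompt: str, models: Sequence[Callable[[str], str]]) -> str:
    """Try each model in order and return the first successful answer."""
    errors = []
    for model in models:
        try:
            return model(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise RuntimeError(f"all models failed: {errors}")


# Hypothetical stand-in for a hosted API that is currently down.
def hosted_model(prompt: str) -> str:
    raise ConnectionError("hosted API is unreachable")


# Hypothetical stand-in for a model running on the local machine.
def local_model(prompt: str) -> str:
    return f"[local] answer to: {prompt}"


answer = ask_with_fallback("What is RAG?", [hosted_model, local_model])
print(answer)
```

Ordering the list from preferred to least preferred keeps the policy in one place: adding a second backup is just another entry in the list.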

What is Ollama, and what is it for when running an LLM locally?

- Ollama is a common wrapper that lets you run different LLMs, such as Llama 2 or Mixtral, locally on a computer. It simplifies installing and running these models by providing a single interface for managing them.

What is the main difference between the chat models and the completion models mentioned in the script?

- Chat models are designed for conversations and return special structures for AI and human messages. Completion models return plain text with no additional structure and focus on completing a text from a given prompt.

What role do embeddings play in retrieval-augmented generation (RAG) systems?

- Embeddings convert documents into vector representations that can be compared with the user's question. This makes it possible to identify the parts of the document most relevant to the question, which improves the accuracy of the answers the model generates.
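To make the comparison step concrete, here is a small self-contained sketch. It assumes a toy "embedding" (a bag-of-words count vector) in place of a learned embedding model, and the document chunks are invented for illustration; only the ranking logic (embed, compare by cosine similarity, keep the top matches) reflects what the answer above describes.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(q, embed(c)), reverse=True)
    return ranked[:k]


# Invented sample chunks standing in for pages of a PDF.
chunks = [
    "The course costs 400 dollars and includes lifetime access.",
    "Sessions are held live twice a week and are recorded.",
    "Students build a capstone project in week ten.",
]
top = retrieve("How much does the course cost?", chunks)
print(top[0])
```

In a full RAG pipeline the retrieved chunks are then placed into the prompt as context, so the model answers from the document rather than from memory.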

How is LangChain used to build a simple RAG system?

- LangChain is used to chain together components such as document loaders, prompt templates, LLMs, and output parsers. The resulting workflow lets the model answer questions based on the content of a loaded document, such as a PDF.
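The chaining idea can be illustrated without any library. The sketch below is not LangChain's API: `Step`, `build_prompt`, `fake_model`, and `parse_output` are hypothetical names made up here, and the model is a stub. It only shows how a prompt builder, a model, and an output parser can be piped together so that each step's output feeds the next.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be composed with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # The combined step runs this step first, then the next one.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


def build_prompt(inputs: dict) -> str:
    """Fill a prompt template with the retrieved context and the question."""
    return (
        "Answer using only this context.\n"
        f"Context: {inputs['context']}\n"
        f"Question: {inputs['question']}"
    )


def fake_model(prompt: str) -> dict:
    """Stub for a chat model: returns a message-like structure, not plain text."""
    question = prompt.rsplit("Question: ", 1)[1]
    return {"role": "ai", "content": f"(stub answer to: {question})"}


def parse_output(message: dict) -> str:
    """Output parser: extract the plain text from the chat message."""
    return message["content"]


chain = Step(build_prompt) | Step(fake_model) | Step(parse_output)
result = chain.invoke(
    {"context": "Refunds are available within 30 days.",
     "question": "What is the refund policy?"}
)
print(result)
```

Because every step has the same shape (one input, one output), swapping the stub for a real local model or adding a retrieval step in front does not require changing the other steps.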

What advantages does a chain-based approach offer when building RAG systems?

- Chains make components modular and reusable. Complex systems can be built by connecting separate pieces of functionality in a flexible way, and individual pieces can be adjusted or optimized without changing the system as a whole.

Why are 'streaming' and 'batching' relevant when using LLMs?

- Streaming delivers the response in real time as the model generates it, which improves interactivity. Batching processes multiple questions in parallel, which increases efficiency and reduces the total response time.
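The two calling styles can be contrasted with a small simulation. This is only a sketch under stated assumptions: `fake_model` is a hypothetical stub that sleeps briefly to mimic latency, streaming is imitated with a generator that yields one word at a time, and batching is imitated with a thread pool. Real libraries expose their own streaming and batching methods.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, List


def fake_model(prompt: str) -> str:
    """Stub model call with a small artificial delay."""
    time.sleep(0.05)
    return f"echo: {prompt}"


def stream(prompt: str) -> Iterator[str]:
    """Yield the answer piece by piece, as a streaming API would."""
    for word in f"echo: {prompt}".split(" "):
        time.sleep(0.01)  # simulated per-token generation time
        yield word + " "


def batch(prompts: List[str], max_workers: int = 4) -> List[str]:
    """Run several prompts in parallel; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_model, prompts))


# Streaming: the caller can display each piece as soon as it arrives.
streamed = ""
for piece in stream("hello local model"):
    print(piece, end="", flush=True)
    streamed += piece
print()

# Batching: three questions are answered concurrently.
answers = batch(["q1", "q2", "q3"])
print(answers)
```

Streaming does not make the full answer arrive sooner; it shortens the wait for the first visible output. Batching shortens the total time for many questions, provided the backend can actually serve them concurrently.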

What are the challenges of using open-source LLMs compared with OpenAI's GPT models?

- Open-source models may not be as advanced or accurate as OpenAI's GPT models, which can lead to less precise or less relevant answers. Running and maintaining these models locally can also require additional resources and technical expertise.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

¡EMPIEZA A USAR la IA GRATIS en tu PC! 👉 3 Herramientas que DEBES CONOCER

TinyLlama: The Era of Small Language Models is Here

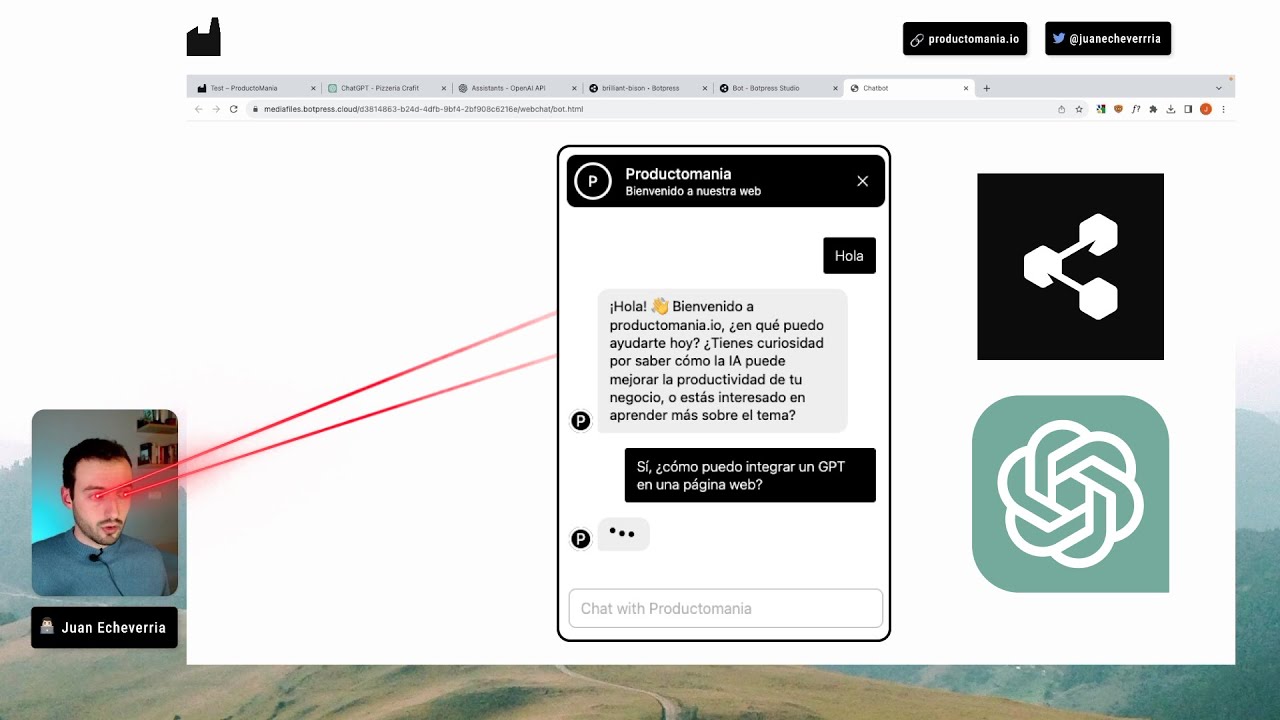

Cómo EMBEBER UN GPT en una página WEB [Tutorial paso a paso]

Qwen Just Casually Started the Local AI Revolution

¡Evita el desastre! Los 13 celulares QUE NO DEBES COMPRAR en 2024: ¡Cuidado extremo! 👎☠️📵

¡EJECUTA tu propio ChatGPT en LOCAL gratis y sin censura! (LM Studio + Mixtral)

5.0 / 5 (0 votes)