Unconstrained Multivariate Optimization

Summary

TLDRThis lecture explores unconstrained nonlinear optimization with multiple decision variables, extending the concepts from the univariate case. It introduces the idea of a general function of n variables and uses a two-dimensional example to illustrate the optimization process. The lecture explains the challenges of visualizing higher dimensions and introduces contour plots to aid in understanding. Key concepts include finding the minimum point in the decision variable space, understanding the role of gradient and Hessian matrix, and the conditions for a minimum, such as the gradient being zero and the Hessian being positive definite. The lecture concludes with a simple example to demonstrate these concepts and sets the stage for future discussions on numerical methods and constrained optimization.

Takeaways

- 📚 The lecture extends the concept of unconstrained nonlinear optimization from a single variable to multiple variables, explaining how optimization problems are approached with multiple decision variables.

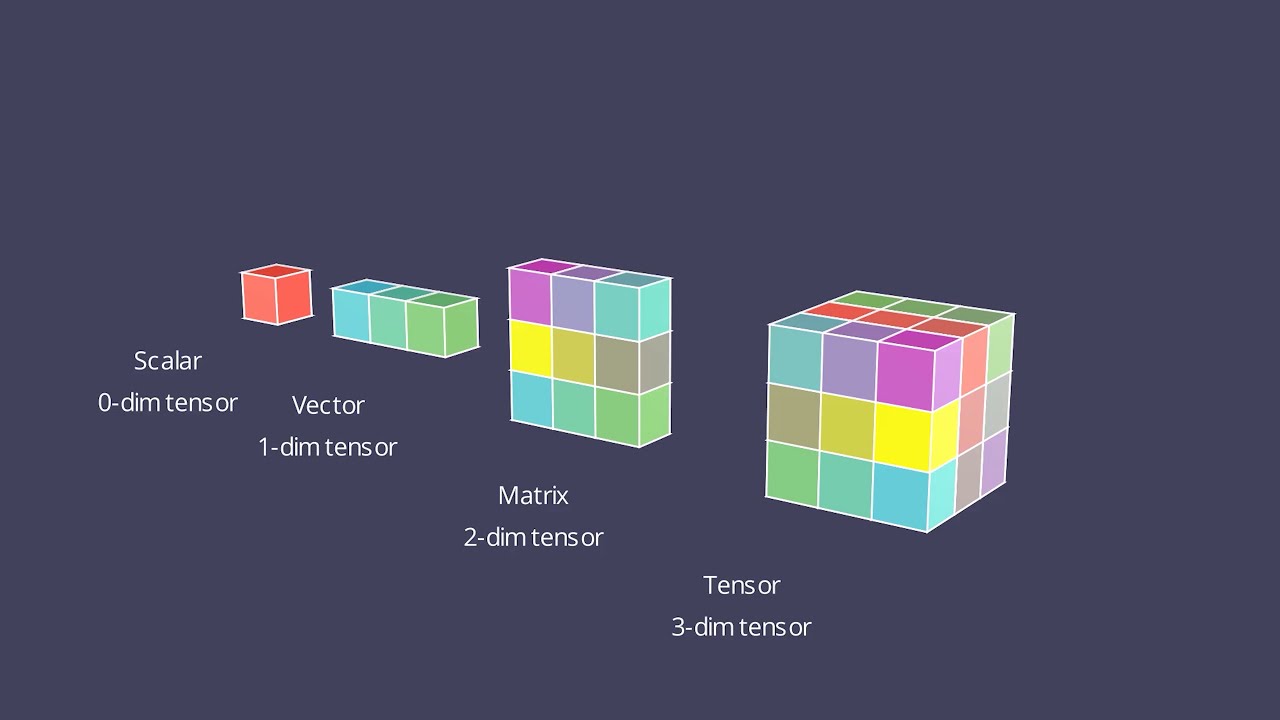

- 📈 Visualization of optimization problems becomes challenging with more than two variables, transitioning from two-dimensional plots to three-dimensional plots and beyond.

- 🔍 The minimum point in a multivariable function can be identified in a three-dimensional plot where the decision variables form the X-Y plane and the function value is the Z-axis.

- 📊 Contour plots are introduced as a tool to visualize and understand the optimization process in two dimensions by showing constant function value levels on the decision variable plane.

- 🤔 The lecture emphasizes the importance of choosing the right direction and distance to move in the decision variable space to improve the objective function value.

- 🧐 Analytical conditions for a minimum in multivariate problems are explored, including the necessity for the gradient to be zero and the Hessian matrix to be positive definite.

- 🔢 The gradient in multivariate optimization is a vector of partial derivatives with respect to each decision variable, generalizing the derivative from the univariate case.

- 📝 The Hessian matrix is a square matrix of second-order partial derivatives, which is crucial for determining the nature of the extremum (minimum or maximum).

- 🔍 The concept of positive definiteness of the Hessian matrix is introduced as a condition for a point to be a local minimum, which is linked to all eigenvalues of the matrix being positive.

- 📖 A simple example is used to illustrate the process of finding the optimum solution by setting the gradient to zero and verifying the solution with the Hessian matrix, highlighting the application of these concepts.

Q & A

What is the main focus of the lecture?

-The lecture focuses on extending the principles of unconstrained nonlinear optimization from the univariate case to multivariate cases, where multiple variables act as decision variables.

Why is it challenging to visualize optimization problems with more than two decision variables?

-Optimization problems with more than two decision variables are challenging to visualize because it requires three-dimensional plots for two variables, and higher dimensions become even more complex to represent graphically.

What is the significance of the third dimension in a three-dimensional plot for optimization problems?

-In a three-dimensional plot for optimization problems, the third dimension represents the value of the objective function, allowing for the visualization of the function's surface over the decision variable space.

What is a contour plot and how does it help in multivariate optimization?

-A contour plot is a graphical representation of the objective function's constant values on the decision variable plane. It helps visualize the levels of the function and guides the search for minimum or maximum points by showing the direction of increasing or decreasing function values.

How does the concept of a gradient differ from a derivative in optimization?

-In optimization, a gradient is a vector of partial derivatives with respect to each decision variable, replacing the single derivative used in univariate optimization. It points in the direction of the steepest ascent of the function.

What is the Hessian matrix and why is it important in multivariate optimization?

-The Hessian matrix is a square matrix of second-order partial derivatives of the objective function with respect to the decision variables. It is important because it provides information about the curvature of the function, which is crucial for determining whether a point is a minimum, maximum, or saddle point.

What are the analytical conditions for a minimum in a multivariate optimization problem?

-The analytical conditions for a minimum in a multivariate optimization problem are that the gradient of the function (∇F) equals zero and the Hessian matrix (H) is positive definite.

How does the concept of a local minimum differ from a global minimum in the context of optimization?

-A local minimum is the lowest point in a neighborhood of a point, but not necessarily the lowest point in the entire decision variable space. A global minimum is the lowest point across the entire space, making it the optimal solution for the optimization problem.

Why is it difficult to find the global minimum in a multivariate optimization problem?

-Finding the global minimum in a multivariate optimization problem is difficult because algorithms might get stuck in local minima, especially when the function has many hills and valleys. Without a comprehensive search or advanced algorithms, it's challenging to ensure that the global minimum is found.

What is the role of eigenvalues in determining the nature of an optimum point in multivariate optimization?

-In multivariate optimization, the eigenvalues of the Hessian matrix at a point help determine if the point is a minimum, maximum, or saddle point. If all eigenvalues are positive, the point is a local minimum; if all are negative, it's a local maximum; and if there's a mix of signs, it's a saddle point.

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

Mathematical Optimization | Chapter 1: Introduction | Indonesian

Mathematical Optimization | Chapter 2 : Types of Mathematical Functions | Indonesian

V1. Data Types | Linear Algebra for Machine Learning #MathsforMachineLearning

Optimisasi Statistika - Kuliah 6 part 1

1 Linear Programming - Concept17072020

Solving Linear Equations

5.0 / 5 (0 votes)