Merge LLMs using Mergekit: Create your own Medical Mixture of Experts

Summary

TLDR: This AI Anytime video tutorial guides viewers through merging multiple large language models (LLMs) using Mergekit, a toolkit for combining models into a 'mixture of experts' system. The presenter demonstrates merging medical-specific LLMs, such as BioMistral 7B and Meditron 7B, to create an enhanced model for medical text analysis. The process involves setting up a GPU environment, cloning the Mergekit repository, installing dependencies, and configuring the merge using a YAML file. The merged model is then uploaded to Hugging Face for inference, showcasing how to leverage combined LLMs for specialized tasks like medical note generation.

Takeaways

- 🤖 Mergekit is a toolkit that allows merging multiple large language models (LLMs) to create a mixture of experts model.

- 🧠 The video focuses on how to merge medical-specific LLMs, including the BioMistral 7B and Meditron 7B models, to form a mixture of experts in the medical domain.

- 💻 The process uses Runpod as the computing platform because it offers more reliable A100 GPUs for this task, at a rate of around $1.89 per hour.

- ⚙️ Using Mergekit requires cloning its repository from GitHub and installing the necessary dependencies, including Transformers and other libraries.

- 📁 A configuration file (config.yaml) is created to define the base model and expert models, as well as parameters like positive and negative prompts.

- 🔢 The merge is configured to use float16 precision, which reduces the memory footprint and speeds up computation for neural network operations.

- 📦 After merging the models, the new LLM is pushed to Hugging Face using the Hugging Face API for future use cases and inference.

- 📜 The merged model is useful for medical named entity recognition (NER) and can assist in administrative tasks like extracting keywords from medical documents.

- 📊 Merging models only makes sense when the base model struggles with specific tasks; otherwise, the performance trade-offs may not be worth the cost.

- 💡 The video aims to demonstrate the practical steps of merging LLMs and pushing the merged model to Hugging Face, offering further resources like code and related videos for users.

Q & A

What is the main focus of the AI Anytime video?

-The main focus of the AI Anytime video is to explore how to merge multiple large language models (LLMs) using Mergekit to create a mixture of experts model, specifically within the medical domain.

What is a mixture of experts model?

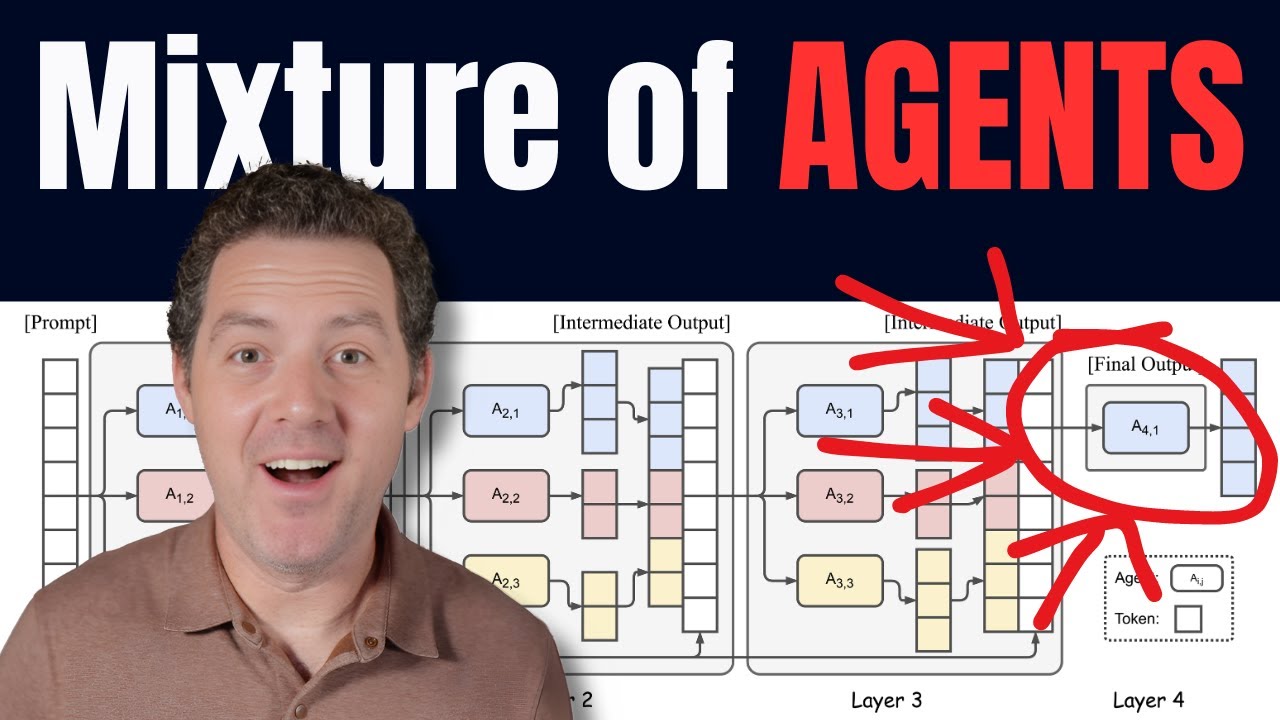

-As described in the video, a mixture of experts model is a setup in which multiple LLMs work in tandem, each specializing in a different area, to enhance the overall performance of the combined model.

What is Mergekit and what does it offer?

-Mergekit is described as a toolkit for merging multiple LLMs. It allows users to combine different models to potentially improve their performance on specific tasks.

Why is the NVIDIA A100 GPU recommended for this process?

-The NVIDIA A100 GPU is recommended because it is capable of handling the computational demands of merging large language models, which requires significant processing power.

What is the significance of the 'base model' and 'expert models' in the merging process?

-In the merging process, the 'base model' serves as the primary model, while the 'expert models' are additional models that contribute specialized knowledge to enhance the base model's capabilities.

What are the specific models used as the base and experts in the video?

-The base model used is BioMistral 7B, and the expert models include Meditron 7B and a model for medical named entity recognition.

Why is float16 used in the merging process?

-Float16 is used to reduce the memory footprint of the models being merged and to speed up the computation process, which is beneficial when working with large models.
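
The memory saving can be checked with simple arithmetic. The sketch below is an illustration rather than anything shown in the video: it assumes a nominal 7 billion parameters per model (real 7B models differ slightly) and counts weight storage only, ignoring activations and other overhead. The function name `weight_memory_gib` is made up for this example.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in GiB (hypothetical helper)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Assumption: a nominal 7 billion parameters, not an exact model size.
NOMINAL_7B = 7e9

for dtype in ("float32", "float16"):
    print(f"{dtype}: {weight_memory_gib(NOMINAL_7B, dtype):.1f} GiB")
```

Under these assumptions float16 halves the weight footprint, which matters because a merge has to hold the weights of several models at once.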

How does the video demonstrate the creation of a config file for merging?

-The video demonstrates the creation of a config file by defining the base model, the expert models, and other parameters such as positive and negative prompts, which are then written into a YAML file.
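
A config of this shape might be generated as in the following minimal sketch. It is not the exact file from the video. The keys (`base_model`, `gate_mode`, `dtype`, `experts`, `source_model`, `positive_prompts`, `negative_prompts`) follow Mergekit's mixture-of-experts config format as I understand it; the Hugging Face repo IDs and the prompts are assumptions chosen for illustration, and `build_moe_config` is a hypothetical helper.

```python
def build_moe_config(base_model, experts, dtype="float16", gate_mode="hidden"):
    """Render a mixture-of-experts merge config as YAML text (hypothetical helper).

    Assumes prompts contain no double quotes, since they are emitted
    as plain double-quoted YAML strings without escaping.
    """
    lines = [
        f"base_model: {base_model}",
        f"gate_mode: {gate_mode}",
        f"dtype: {dtype}",
        "experts:",
    ]
    for expert in experts:
        lines.append(f"  - source_model: {expert['source_model']}")
        lines.append("    positive_prompts:")
        lines += [f'      - "{p}"' for p in expert["positive_prompts"]]
        if expert.get("negative_prompts"):
            lines.append("    negative_prompts:")
            lines += [f'      - "{p}"' for p in expert["negative_prompts"]]
    return "\n".join(lines) + "\n"


# Assumed repo IDs and illustrative prompts; substitute the models you merge.
example_config = build_moe_config(
    base_model="BioMistral/BioMistral-7B",
    experts=[
        {
            "source_model": "epfl-llm/meditron-7b",
            "positive_prompts": ["clinical guidelines", "medical question"],
            "negative_prompts": ["casual conversation"],
        },
    ],
)
print(example_config)
```

Saving the printed text as `config.yaml` gives a file that could then be passed to Mergekit's `mergekit-moe` command together with an output directory. A further expert, such as the medical NER model mentioned in the video, would be one more entry in the `experts` list.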

What is the purpose of pushing the merged model to Hugging Face?

-The purpose of pushing the merged model to Hugging Face is to make the model accessible for use in various applications and to leverage Hugging Face's platform for model hosting and version control.
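
One way to do the upload is with the `huggingface_hub` library, sketched below. This is an assumption about tooling, since the summary only says the Hugging Face API is used. `push_merged_model`, the folder path, and the repo ID are placeholders; `HfApi.create_repo` and `HfApi.upload_folder` are existing `huggingface_hub` methods. A write-access token is needed, either passed in or stored by a prior login.

```python
from pathlib import Path
from typing import Optional


def push_merged_model(folder: str, repo_id: str, token: Optional[str] = None) -> str:
    """Upload a local merged-model folder to the Hugging Face Hub (hypothetical helper)."""
    path = Path(folder)
    if not path.is_dir():
        raise FileNotFoundError(f"merged model folder not found: {path}")

    # Imported lazily so this module loads even without huggingface_hub installed.
    from huggingface_hub import HfApi

    api = HfApi(token=token)
    # Create the model repo if it does not exist yet.
    api.create_repo(repo_id=repo_id, repo_type="model", exist_ok=True)
    # Upload every file in the folder (weights, config, tokenizer files).
    api.upload_folder(folder_path=str(path), repo_id=repo_id, repo_type="model")
    return f"https://huggingface.co/{repo_id}"


# Example call (placeholder names, requires network access and a valid token):
# push_merged_model("./merged-medical-moe", "your-username/medical-moe")
```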

How can one perform inference with the merged model as shown in the video?

-Inference with the merged model can be performed by loading the model and tokenizer using Hugging Face's Transformers library, creating a pipeline, and then passing prompts to the pipeline to generate text.
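
The steps in this answer can be sketched as follows. This is a minimal illustration, not the notebook from the video: the model ID is a placeholder for whatever repo the merged model was pushed to, `build_prompt` and `generate` are hypothetical helpers, and `device_map="auto"` assumes the `accelerate` package is installed alongside `transformers` and `torch`.

```python
def build_prompt(topic: str) -> str:
    """Build a simple instruction prompt (illustrative wording only)."""
    return f"Write a short clinical note about: {topic}"


def generate(model_id: str, prompt: str, max_new_tokens: int = 128) -> str:
    """Load the model and tokenizer, build a pipeline, and generate text."""
    # Imported lazily so the helpers above work without these packages installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # same precision as used for the merge
        device_map="auto",          # assumption: accelerate is installed
    )
    generator = pipeline("text-generation", model=model, tokenizer=tokenizer)
    outputs = generator(prompt, max_new_tokens=max_new_tokens, do_sample=False)
    return outputs[0]["generated_text"]


# Example call (placeholder repo ID, requires a GPU and network access):
# print(generate("your-username/medical-moe", build_prompt("type 2 diabetes follow-up")))
```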

What are the considerations for merging LLMs as highlighted in the video?

-The video highlights that LLMs should be merged only when necessary, such as when the base model alone cannot perform the task adequately, and that there are performance trade-offs to consider, including computational power, memory, and cost.
