Everything You Need to Know About Control Theory

Summary

This video script discusses control theory, a mathematical framework essential for developing autonomous systems. It explains the difference between open-loop (feed-forward) and closed-loop (feedback) control and emphasizes system modeling, state estimation, planning, and analysis. It also covers the main families of control methods, the challenges posed by measurement noise and observability, and why accurate mathematical models are central to effective control.

Takeaways

- 🚗 Control theory is essential for designing autonomous systems such as self-driving cars, buildings that regulate their own temperature, and distillation columns that run efficiently.

- 🔄 A dynamical system can be influenced by both control inputs (intended actions like steering a car) and disturbances (unintended forces like wind).

- 🔄 To automate a process, you can use an open-loop controller, which doesn't require knowledge of the system's current state, or a closed-loop controller that uses feedback from the system.

- 🔄 Feed-forward controllers use a reference input to generate control signals without needing to measure the system's state, but they require a good understanding of system dynamics.

- 🔄 Feedback controllers use the system's current state and the reference to adjust control inputs. This makes them self-correcting, but because feedback changes the system's dynamics, a poorly designed loop can make the system less stable or even unstable.

- 🔄 Models are crucial in control theory for designing controllers, estimating states, planning, and analyzing system performance.

- 🔄 System identification involves creating mathematical models of a system's dynamics, which can be done through physics-based equations or data-driven methods.

- 🔄 Planning is necessary to create a reference for the control system to follow, such as navigating a self-driving car to a destination while avoiding obstacles.

- 🔄 State estimation is required to accurately determine the system's state from noisy sensor measurements, which can be achieved through various algorithms like Kalman filters.

- 🔄 Verifying that the designed control system meets its requirements involves analysis, simulation, and testing, using tools such as Bode plots, Nyquist diagrams, and simulation software like MATLAB and Simulink.

- 🔄 There are various types of feedback controllers, including linear, nonlinear, robust, adaptive, optimal, and intelligent controllers, each suited to different systems and requirements.
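The takeaway on system identification mentions data-driven methods. A minimal sketch of one such method, assuming a hypothetical first-order speed model v[k+1] = a*v[k] + b*u[k] (speed v, throttle u), is an ordinary least-squares fit of a and b from logged input/output data. The model structure, the helper names `make_data` and `fit_first_order`, and the "true" values a = 0.9, b = 0.1 are illustrative assumptions, not taken from the video.

```python
import random

def make_data(n=500, a=0.9, b=0.1, noise_std=0.01, seed=42):
    """Hypothetical experiment: excite the system with random inputs and log the speed."""
    rng = random.Random(seed)
    u = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    v = [0.0]
    for uk in u:
        # Process noise enters the state equation, which keeps least squares unbiased.
        v.append(a * v[-1] + b * uk + rng.gauss(0.0, noise_std))
    return v, u

def fit_first_order(v, u):
    """Least-squares estimate of (a, b) in v[k+1] ~ a*v[k] + b*u[k]."""
    # Accumulate the 2x2 normal equations [[svv, svu], [svu, suu]] @ [a, b] = [syv, syu].
    svv = svu = suu = syv = syu = 0.0
    for k in range(len(u)):
        x1, x2, y = v[k], u[k], v[k + 1]
        svv += x1 * x1
        svu += x1 * x2
        suu += x2 * x2
        syv += y * x1
        syu += y * x2
    det = svv * suu - svu * svu
    # Cramer's rule; det > 0 as long as the input excites the system enough.
    a_hat = (syv * suu - svu * syu) / det
    b_hat = (svv * syu - svu * syv) / det
    return a_hat, b_hat

v, u = make_data()
a_hat, b_hat = fit_first_order(v, u)
print(f"a = {a_hat:.3f}, b = {b_hat:.3f}")  # close to the assumed 0.9 and 0.1
```

With `noise_std=0.0` the fit recovers the assumed parameters exactly. A physics-based model would instead derive a and b from quantities such as vehicle mass and drag.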

Q & A

What is control theory?

-Control theory is a mathematical framework that provides tools to develop autonomous systems by managing how they respond to inputs and change over time.

How does a dynamical system respond to inputs?

-A dynamical system responds to both control inputs (intended actions like steering a car) and disturbances (unintended forces like wind), which interact with its internal dynamics to change its state over time.
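To make inputs, disturbances and internal dynamics concrete, here is a minimal sketch assuming a hypothetical discrete-time speed model v[k+1] = a*v[k] + b*u[k] + d[k], where u is the throttle command (control input) and d is a disturbance such as a headwind. The coefficients a = 0.9 and b = 0.1 and the function names are illustrative assumptions.

```python
def step(v, u, d, a=0.9, b=0.1):
    """Advance the hypothetical speed model by one time step."""
    # Internal dynamics (a * v) plus the effect of the control input and the disturbance.
    return a * v + b * u + d

def simulate(u_seq, d_seq, v0=0.0):
    """Return the state trajectory produced by sequences of inputs and disturbances."""
    v = v0
    trajectory = [v]
    for u, d in zip(u_seq, d_seq):
        v = step(v, u, d)
        trajectory.append(v)
    return trajectory

calm = simulate([10.0] * 200, [0.0] * 200)    # same throttle, no wind
windy = simulate([10.0] * 200, [-0.2] * 200)  # same throttle, constant headwind
print(round(calm[-1], 3), round(windy[-1], 3))  # 10.0 8.0
```

The same throttle command settles at a different speed once the disturbance acts, because the state responds to both kinds of input.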

What is an open-loop controller and how does it work?

-An open-loop controller, also known as a feed-forward controller, generates control signals based on a reference input without needing to measure the system's actual state. It's a straightforward approach that doesn't account for current conditions or disturbances.
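A minimal sketch of a feed-forward controller, assuming a hypothetical speed model v[k+1] = a*v[k] + b*u[k] + d with a = 0.9, b = 0.1 (names and values are illustrative): the controller inverts the model's steady state to turn the reference speed into a constant throttle command and never measures v. It reaches the target only when the model is exact and no disturbance acts.

```python
A_MODEL, B_MODEL = 0.9, 0.1  # parameters the controller believes (assumed)

def feedforward_command(r, a=A_MODEL, b=B_MODEL):
    """Invert the steady state v = a*v + b*u to get the input that should yield v = r."""
    return r * (1.0 - a) / b

def run_open_loop(r, d=0.0, a_true=A_MODEL, b_true=B_MODEL, steps=500):
    """Apply the feed-forward command to the 'real' plant; v is never fed back."""
    u = feedforward_command(r)
    v = 0.0
    for _ in range(steps):
        v = a_true * v + b_true * u + d
    return v

print(round(run_open_loop(10.0), 3))               # 10.0: perfect model, no disturbance
print(round(run_open_loop(10.0, d=-0.2), 3))       # 8.0: headwind goes uncorrected
print(round(run_open_loop(10.0, a_true=0.92), 3))  # 12.5: model error goes uncorrected
```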

Why is a mathematical model important in control theory?

-A mathematical model is crucial as it captures the dynamics of a system, allowing for the design of controllers that can predict and compensate for the system's behavior under various conditions.

How does feedback control differ from feed-forward control?

-Feedback control, or closed-loop control, uses both the reference and the current state of the system to determine control inputs, creating a self-correcting mechanism that adjusts for deviations due to disturbances or modeling errors.
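A minimal sketch of that self-correction, using a proportional-integral (PI) controller as one concrete choice of feedback law (the video does not prescribe it). The plant is a hypothetical speed model v[k+1] = a*v[k] + b*u[k] + d with a = 0.9, b = 0.1; the gains kp = 2.0 and ki = 0.5 are assumed values that give a stable loop for this model. Because the input is recomputed every step from the measured error, a constant disturbance or a modest model error is driven out.

```python
def run_closed_loop(r, d=0.0, a_true=0.9, b_true=0.1, kp=2.0, ki=0.5, steps=300):
    """Regulate the hypothetical speed model to reference r with a PI controller."""
    v, integral = 0.0, 0.0
    for _ in range(steps):
        error = r - v                    # compare the measured state with the reference
        integral += error                # accumulated error removes steady-state offset
        u = kp * error + ki * integral   # control input depends on the current state
        v = a_true * v + b_true * u + d  # the real plant responds, including disturbance
    return v

print(round(run_closed_loop(10.0, d=-0.2), 3))       # 10.0 despite the headwind
print(round(run_closed_loop(10.0, a_true=0.92), 3))  # 10.0 despite the model error
```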

What are the potential dangers of feedback control?

-Feedback control can be dangerous because it changes the system's dynamics, potentially altering its stability. If not designed correctly, it can make a system less stable or even unstable.
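A minimal sketch of how feedback moves a system's dynamics, assuming a hypothetical model v[k+1] = a*v[k] + b*u[k] with a = 0.9, b = 0.1 and a proportional law u = -k*v that tries to drive v to zero. The closed loop is v[k+1] = (a - b*k)*v[k], so the gain k moves the pole a - b*k; the loop is stable only while |a - b*k| < 1, which here means -1 < k < 19. A moderate gain speeds up the response, while an overly aggressive one makes it oscillate and diverge.

```python
def closed_loop_pole(k, a=0.9, b=0.1):
    """Pole of v[k+1] = a*v[k] + b*u[k] under the feedback law u = -k*v."""
    return a - b * k

def is_stable(k, a=0.9, b=0.1):
    # A discrete-time first-order loop is stable iff its pole lies inside the unit circle.
    return abs(closed_loop_pole(k, a, b)) < 1.0

def simulate(k, v0=1.0, steps=20, a=0.9, b=0.1):
    v, trajectory = v0, [v0]
    for _ in range(steps):
        u = -k * v  # feedback based on the current state
        v = a * v + b * u
        trajectory.append(v)
    return trajectory

for gain in (0.0, 5.0, 25.0):
    print(gain, round(closed_loop_pole(gain), 2), is_stable(gain), f"{simulate(gain)[-1]:.3g}")
```

Gain 0.0 is the uncontrolled system, shown for comparison.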

Why is planning important in control systems?

-Planning is essential for creating a reference that the controller can follow. It involves determining the desired state of the system and generating a strategy to achieve it, considering constraints and environmental factors.
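As a toy illustration of planning, here is a minimal sketch that runs breadth-first search on a hypothetical occupancy grid (1 = obstacle) to find a shortest obstacle-free route from a start cell to a goal cell. Breadth-first search is a stand-in chosen for brevity; practical planners usually also account for vehicle dynamics and other constraints. The resulting list of waypoints is the kind of reference a controller would then track.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid where 1 marks an obstacle.

    Returns the shortest list of (row, col) waypoints from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back through the parents to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],  # a wall with a gap on the right
    [0, 0, 0, 0],
]
path = plan_path(grid, start=(0, 0), goal=(2, 0))
print(path)
```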

What is state estimation and why is it necessary?

-State estimation is the process of accurately estimating the current state of a system based on noisy sensor measurements. It's necessary for feedback control systems to make informed decisions despite measurement inaccuracies.
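A minimal sketch of state estimation, assuming the simplest possible case: a scalar Kalman filter tracking a speed modeled as nearly constant (x[k+1] = x[k] + w with process-noise variance q) and measured with additive noise of variance r. The values q = 1e-4, r = 1.0, the true speed of 10 and the simulated sensor are illustrative assumptions.

```python
import random

def kalman_1d(measurements, q=1e-4, r=1.0, x0=0.0, p0=100.0):
    """Scalar Kalman filter for x[k+1] = x[k] + w (var q), z[k] = x[k] + v (var r)."""
    x, p = x0, p0  # state estimate and its variance; p0 is large because x0 is a guess
    estimates = []
    for z in measurements:
        # Predict: the model says the state stays the same, but uncertainty grows by q.
        p += q
        # Update: weight the measurement by how uncertain the prediction is relative to it.
        gain = p / (p + r)
        x += gain * (z - x)
        p *= 1.0 - gain
        estimates.append(x)
    return estimates

rng = random.Random(0)
true_speed = 10.0
zs = [true_speed + rng.gauss(0.0, 1.0) for _ in range(500)]  # simulated noisy sensor
xs = kalman_1d(zs)

def mse(values, start=100):
    """Mean-squared error against the true speed, skipping the initial transient."""
    tail = values[start:]
    return sum((val - true_speed) ** 2 for val in tail) / len(tail)

print(f"raw measurements MSE: {mse(zs):.3f}, filtered estimate MSE: {mse(xs):.4f}")
```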

How can measurement noise affect a feedback system?

-Noise in measurements can lead to incorrect state estimations, causing the controller to react to false readings instead of the true state of the system, which can result in suboptimal or unstable control.
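The effect of noise on a feedback loop can be sketched with a hypothetical model x[k+1] = a*x[k] + b*u[k] (a = 0.9, b = 0.1), a reference of zero and a proportional law that acts on the noisy reading, u = -k*(x + n). Since the true state has nothing to correct, every movement of x comes from the controller reacting to noise. For white noise n with standard deviation sigma, the closed loop x[k+1] = (a - b*k)*x[k] - b*k*n[k] has steady-state variance (b*k*sigma)^2 / (1 - (a - b*k)^2), which grows sharply as the gain becomes more aggressive. The gains and noise level are assumptions; a simulation is included as a cross-check.

```python
import random

A, B = 0.9, 0.1  # hypothetical plant: x[k+1] = A*x[k] + B*u[k]

def state_variance(k, noise_std, a=A, b=B):
    """Steady-state variance of x when u = -k * (x + n) and n is white noise."""
    pole = a - b * k
    if abs(pole) >= 1.0:
        raise ValueError("closed loop is unstable for this gain")
    return (b * k * noise_std) ** 2 / (1.0 - pole ** 2)

def simulate_variance(k, noise_std, steps=20000, seed=1, a=A, b=B):
    """Empirical variance of x from a long simulation of the same loop."""
    rng = random.Random(seed)
    x, samples = 0.0, []
    for _ in range(steps):
        measurement = x + rng.gauss(0.0, noise_std)  # sensor reading, not the true state
        u = -k * measurement
        x = a * x + b * u
        samples.append(x)
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)

for gain in (2.0, 18.0):
    print(gain, round(state_variance(gain, 1.0), 4), round(simulate_variance(gain, 1.0), 4))
```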

What are some methods for ensuring a control system meets its requirements?

-Methods include analysis with tools such as Bode plots or Nyquist diagrams, simulation with software like MATLAB and Simulink, and testing to verify stability, performance, and compliance with specifications.
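A Bode plot is built from the complex frequency response G(jw). A minimal sketch, assuming a hypothetical first-order transfer function G(s) = 1/(tau*s + 1) with tau = 2 s, evaluates G(jw) in plain Python and reports the magnitude in decibels and the phase in degrees; a Nyquist diagram plots the same complex values in the complex plane instead. At the corner frequency w = 1/tau the magnitude should be about -3 dB and the phase -45 degrees. Tools such as MATLAB provide this directly; the helper names here are hypothetical.

```python
import cmath
import math

def freq_response(num, den, w):
    """Evaluate G(jw) for polynomial coefficients given highest power first."""
    s = 1j * w
    def poly(coeffs):
        value = 0j
        for c in coeffs:  # Horner's rule
            value = value * s + c
        return value
    return poly(num) / poly(den)

def bode_point(num, den, w):
    """Return (magnitude in dB, phase in degrees) of G(jw)."""
    g = freq_response(num, den, w)
    return 20.0 * math.log10(abs(g)), math.degrees(cmath.phase(g))

tau = 2.0
num, den = [1.0], [tau, 1.0]  # G(s) = 1 / (tau*s + 1)
for w in (0.05, 1.0 / tau, 5.0):
    mag_db, phase_deg = bode_point(num, den, w)
    print(f"w = {w:5.2f} rad/s  |G| = {mag_db:6.2f} dB  phase = {phase_deg:7.2f} deg")
```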

Why is observability important in feedback control systems?

-Observability ensures that all necessary states of the system can be inferred from the available measurements. It's crucial for feedback control to function correctly, as the controller must 'see' the system's state to adjust its inputs accordingly.
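For linear systems, observability can be checked with a rank test: stack C, CA, ..., CA^(n-1) and check that the result has full rank n. A minimal sketch for a hypothetical two-state cart model (position and velocity, sample time dt = 0.1 s, so n = 2) shows that measuring position makes both states observable, while measuring only velocity does not, because no sequence of velocity readings reveals where the cart started.

```python
def observability_matrix(a, c):
    """Stack C and C*A for a 2-state system x[k+1] = A x[k], y[k] = C x[k]."""
    ca = [c[0] * a[0][0] + c[1] * a[1][0], c[0] * a[0][1] + c[1] * a[1][1]]
    return [list(c), ca]

def is_observable(a, c, tol=1e-12):
    # For n = 2 the 2x2 observability matrix has full rank iff its determinant is nonzero.
    o = observability_matrix(a, c)
    return abs(o[0][0] * o[1][1] - o[0][1] * o[1][0]) > tol

dt = 0.1
A = [[1.0, dt],   # position += velocity * dt
     [0.0, 1.0]]  # velocity stays constant
print(is_observable(A, [1.0, 0.0]))  # measure position -> True
print(is_observable(A, [0.0, 1.0]))  # measure velocity only -> False
```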

Outlines

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowMindmap

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowKeywords

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowHighlights

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowTranscripts

This section is available to paid users only. Please upgrade to access this part.

Upgrade NowBrowse More Related Video

The Foundation of Mathematics - Numberphile

LEC-1 | Control System Engineering Introduction | What is a system? | GATE 2021 | Norman S.Nise Book

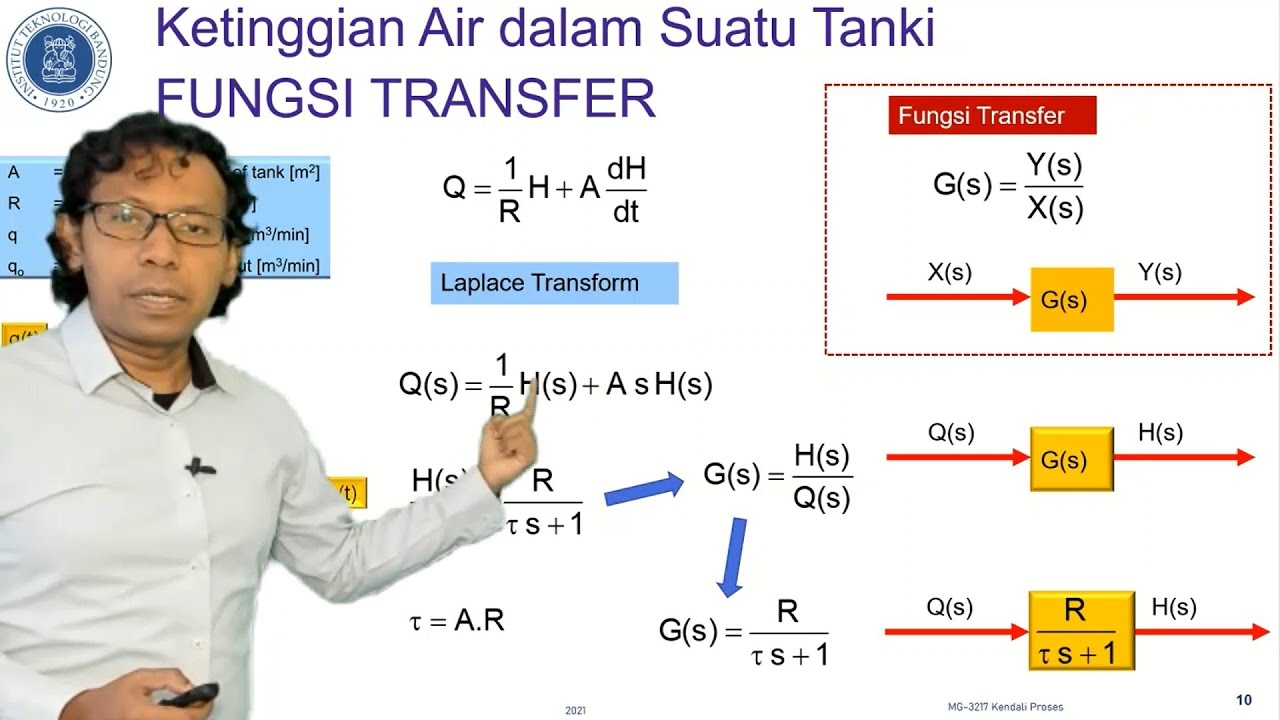

03. MG3217 Kendali Proses S01: Respons Sistem Orde - 1

One Step Closer to a 'Grand Unified Theory of Math': Geometric Langlands

The Internet Is Dead. But Something Is Alive.

Building Reliable Agentic Systems: Eno Reyes

5.0 / 5 (0 votes)